diff --git a/.github/workflows/deploy-preview.yml b/.github/workflows/deploy-preview.yml

new file mode 100644

index 00000000..6ccba677

--- /dev/null

+++ b/.github/workflows/deploy-preview.yml

@@ -0,0 +1,27 @@

+

+name: Dispatch Preview Update

+on:

+ push:

+ branches: [development]

+

+jobs:

+ dispatch:

+ runs-on: ubuntu-latest

+ steps:

+ - name: Setup SSH Keys and known_hosts

+ uses: webfactory/ssh-agent@v0.5.3

+ with:

+ ssh-private-key: ${{ secrets.DEPLOY_KEY }}

+

+

+ - name: Pull new posts

+ run: |

+ git clone --recursive git@github.com:CHTC/article-preview.git

+ cd article-preview

+ git config user.name "GitHub Actions"

+ git config user.email "actions@github.com"

+ git submodule update --remote

+ git add _posts

+ git remote -v

+ git commit -m "Article Submodule Updated"

+ git push git@github.com:CHTC/article-preview.git

diff --git a/2022-06-30-Opotowsky.md b/2022-06-30-Opotowsky.md

new file mode 100644

index 00000000..8d8ca394

--- /dev/null

+++ b/2022-06-30-Opotowsky.md

@@ -0,0 +1,65 @@

+---

+title: "Expediting Nuclear Forensics and Security Using High Through Computing"

+

+author: Hannah Cheren

+

+publish_on:

+ - htcondor

+ - path

+ - chtc

+

+type: user

+

+canonical_url: https://osg-htc.org/spotlights/Opotowsky.html

+

+image:

+ path: "https://raw.githubusercontent.com/CHTC/Articles/main/images/Opotowsky-card.jpeg"

+ alt: Photo by Dan Myers on Unsplash

+

+description: Arrielle C. Opotowsky, a 2021 Ph.D. graduate from the University of Wisconsin-Madison's Department of Engineering Physics, describes how she utilized high throughput computing to expedite nuclear forensics investigations.

+excerpt: Arrielle C. Opotowsky, a 2021 Ph.D. graduate from the University of Wisconsin-Madison's Department of Engineering Physics, describes how she utilized high throughput computing to expedite nuclear forensics investigations.

+

+card_src: "https://raw.githubusercontent.com/CHTC/Articles/main/images/Opotowsky-card.jpeg"

+card_alt: Arrielle C. Opotowsky, a 2021 Ph.D. graduate from the University of Wisconsin-Madison's Department of Engineering Physics, describes how she utilized high throughput computing to expedite nuclear forensics investigations.

+

+banner_src: "https://raw.githubusercontent.com/CHTC/Articles/main/images/Opotowsky-card.jpeg"

+banner_alt: Arrielle C. Opotowsky, a 2021 Ph.D. graduate from the University of Wisconsin-Madison's Department of Engineering Physics, describes how she utilized high throughput computing to expedite nuclear forensics investigations.

+---

+ ***Arrielle C. Opotowsky, a 2021 Ph.D. graduate from the University of Wisconsin-Madison's Department of Engineering Physics, describes how she utilized high throughput computing to expedite nuclear forensics investigations.***

+

+

+

+ Photo by Dan Myers on Unsplash.

+

+

+

+

+ Arrielle C. Opotowsky, 2021 Ph.D. graduate from the University of Wisconsin-Madison's Department of Engineering Physics

+

+

+ "Each year, there can be from two to twenty incidents related to the malicious use of nuclear materials,” including theft, sabotage, illegal transfer, and even terrorism, [Arrielle C. Opotowsky](http://scifun.org/Thesis_Awards/opotowsky.html) direly warned. Opotowsky, a 2021 Ph.D. graduate from the University of Wisconsin-Madison's Department of Engineering Physics, immediately grabbed the audience’s attention at [HTCondor Week 2022](https://agenda.hep.wisc.edu/event/1733/timetable/?view=standard).

+

+ Opotowsky's work focuses on nuclear forensics. Preventing nuclear terrorism is the primary concern of nuclear security, and nuclear forensics is “the *response* side to a nuclear event occurring,” Opotowsky explains. Typically in a nuclear forensics investigation, specific measurements need to be processed; unfortunately, some of these measurements can take months to process. Opotowsky calls this “slow measure” general mass spectrometry. Although this measurement can help point investigators in the right direction, they wouldn’t be able to do until long after the incident has occurred.

+

+ In trying to learn how she could expedite a nuclear forensics investigation, Opotowsky wanted to see if Gamma Spectroscopy, a “fast measurement,” could be the solution. This measure can potentially point investigators in the right direction, but in days rather than months.

+

+ To test whether this “fast measurement” could expedite a nuclear forensics investigation compared to a “slow measurement,” Opotowsky created a workflow and compared the two measurements.

+

+ While Opotowsky was a graduate student working on this problem, the workflow she created was running on her personal computer and suddenly stopped working. In a panic, she went to her advisor, [Paul Wilson](https://directory.engr.wisc.edu/ep/faculty/wilson_paul), for help, and he pointed her to the UW-Madison Center for High Throughput Computing (CHTC).

+

+ CHTC Research Computing Facilitators came to her aid, and “the support was phenomenal – there was a one-on-one introduction and a tutorial and incredible help via emails and office hours…I had a ton of help along the way.”

+

+ She needed capacity from the CHTC because she used a machine-learning workflow and 10s of case variations. She had a relatively large training database because she used several algorithms and hyperparameter variations and wanted to predict several labels. The sheer magnitude of these training databases is the leading reason why Opotowsky needed the services of the CHTC.

+

+ She used two computation categories, the second of which required a specific capability offered by the CHTC - the ability to scale out a large problem into an ensemble of smaller jobs running in parallel. With 500,000 total entries in the databases and a limit of 10,000 jobs per case submission, Opotowsky split the computations into fifty calculations per job. This method resulted in lower memory needs per job, each taking only a few minutes to run.

+

+ “I don’t think my research would have been possible” without HTC, Opotowsky noted as she reflected on how the CHTC impacted her research. “The main component of my research driving my need [for the CHTC] was the size of my database. It would’ve had to be smaller, have fewer parameter variations, and that ‘fast’ measurement was like a ‘real-world’ scenario; I wouldn’t have been able to have that.”

+

+ Little did Opotowsky know that her experience using HTC would also benefit her professionally. Having HTC experience has helped Opotowsky in job interviews and securing her current position in nuclear security. As a nuclear methods software engineer, “knowledge of designing code and interacting with job submission systems is something I use all the time,” she comments, “[learning HTC] was a wonderful experience to gain” from both a researcher and professional point of view.

+

+

+...

+

+ *Watch a video recording of Arrielle C. Opotowsky’s talk at HTCondor Week 2022, and browse her [slides](https://agenda.hep.wisc.edu/event/1733/contributions/25511/attachments/8299/9577/HTCondorWeek_AOpotowsky.pdf).*

+

+

diff --git a/2022-07-06-Wilcots.md b/2022-07-06-Wilcots.md

new file mode 100644

index 00000000..19e0fe36

--- /dev/null

+++ b/2022-07-06-Wilcots.md

@@ -0,0 +1,124 @@

+---

+title: "Keynote Address: The Future of Radio Astronomy Using High Throughput Computing"

+

+author: Hannah Cheren

+

+publish_on:

+ - htcondor

+ - path

+ - chtc

+

+type: user

+

+canonical_url: https://htcondor.org/featured-users/2022-07-06-Wilcots.html

+

+image:

+ path: "https://raw.githubusercontent.com/CHTC/Articles/main/images/Wilcots-card.png"

+ alt: Image of the black hole in the center of our Milky Way galaxy.

+

+description: Eric Wilcots, UW-Madison dean of the College of Letters & Science and the Mary C. Jacoby Professor of Astronomy, dazzles the HTCondor Week 2022 audience.

+excerpt: Eric Wilcots, UW-Madison dean of the College of Letters & Science and the Mary C. Jacoby Professor of Astronomy, dazzles the HTCondor Week 2022 audience.

+

+card_src: "https://raw.githubusercontent.com/CHTC/Articles/main/images/Wilcots-card.png"

+card_alt: Eric Wilcots, UW-Madison dean of the College of Letters & Science and the Mary C. Jacoby Professor of Astronomy, dazzles the HTCondor Week 2022 audience.

+

+banner_src: "https://raw.githubusercontent.com/CHTC/Articles/main/images/Wilcots-card.png"

+banner_alt: Eric Wilcots, UW-Madison dean of the College of Letters & Science and the Mary C. Jacoby Professor of Astronomy, dazzles the HTCondor Week 2022 audience.

+---

+ ***Eric Wilcots, UW-Madison dean of the College of Letters & Science and the Mary C. Jacoby Professor of Astronomy, dazzles the HTCondor Week 2022 audience.***

+

+

+

+ Image of the black hole in the center of our Milky Way galaxy.

+

+

+

+

+ Eric Wilcots

+

+

+ “My job here is to…inspire you all with a sense of the discoveries to come that will need to be enabled by,” high throughput computing (HTC), Eric Wilcots opened his keynote for HTCondor Week 2022. Wilcots is the UW-Madison dean of the College of Letters & Science and the Mary C. Jacoby Professor of Astronomy.

+

+ Wilcots points out that the black hole image (shown above) is a remarkable feat in the world of astronomy. “Only the third such black hole imaged in this way by the Event Horizon Telescope,” and it was made possible with the help of the HTCondor Software Suite (HTCSS).

+

+ **Beginning to build the future**

+

+ Wilcots described how in the 1940s, a group of universities recognized that no single university could build a radio telescope necessary to advance science. To access these kinds of telescopes, the universities would need to have the national government involved, as it was the only one with this capability at that time. In 1946, these universities created Associated Universities Incorporated (AUI), which eventually became the management agency for the National Radio Astronomy Observatory (NRAO).

+

+ Advances in radio astronomy rely on current technology available to experts in this field. Wilcots explained that “the science demands more sensitivity, more resolution, and the ability to map large chunks of the sky simultaneously.” New and emerging technologies must continue pushing forward to discover the next big thing in radio astronomy.

+

+ This next generation of science requires more sensitive technology with higher spectra resolution than the Karl G. Jansky Very Large Array (JVLA) can provide. It also requires sensitivity in a particular chunk of the spectrum that neither the JVLA nor Atacama Large Millimeter/submillimeter Array (ALMA) can achieve. Wilcots described just what piece of technology astronomers and engineers need to create to reach this level of sensitivity. “We’re looking to build the Next Generation Very Large Array (ngVLA)...an instrument that will cover a huge chunk of spectrum from 1 GHz to 116 GHz.”

+

+ **The fundamentals of the ngVLA**

+

+ “The unique and wonderful thing about interferometry, or the basis of radio astronomy,” Wilcots discussed, “is the ability to have many individual detectors or dishes to form a telescope.” Each dish collects signals, creating an image or spectrum of the sky when combined. Because of this capability, engineers working on these detectors can begin to collect signals right away, and as more dishes get added, the telescope grows larger and larger.

+

+ Many individual detectors also mean lots of flexibility in the telescope arrays built, Wilcots explained. Here, the idea is to do several different arrays to make up one telescope. A particular scientific case drives each of these arrays:

+ - Main Array: a dish that you can control and point accurately but is also robust; it’ll be the workhorse of the ngVLA, simultaneously capable of high sensitivity and high-resolution observations.

+ - Short Baseline Array: dishes that are very close together, which allows you to have a large field of view of the sky.

+ - Long Baseline Array: spread out across the continental United States. The idea here is the longer the baseline, the higher the resolution. Dishes that are well separated allow the user to get spectacular spatial resolution of the sky. For example, the Event Horizon Telescope that took the image of the black hole is a telescope that spans the globe, which is the longest baseline we can get without putting it into orbit.

+

+

+

+ The ngVLA will be spread out over the southwest United States and Mexico.

+

+

+ A consensus study report called Pathways to Discovery in Astronomy and Astrophysics for the 2020s (Astro2020) identified the ngVLA as a high priority. The construction of this telescope should begin this decade and be completed by the middle of the 2020s.

+

+ **Future of radio astronomy: planet formation**

+

+ An area of research that radio astronomers are interested in examining in the future is imaging the formation of planets, Wilcot notes. Right now, astronomers can detect a planet’s presence and deduce specific characteristics, but being able to detect a planet directly is the next huge priority.

+

+

+

+ A planetary system forming

+

+

+ One place astronomers might be able to do this with something like the ngVLA is in the early phases of planet formation within a planetary system. The thermal emissions from this process are bright enough to be detected by a telescope like the ngVLA. So the idea is to use this telescope to map an image of nearby planetary systems and begin to image the early stages of planet formation directly. A catalog of these planets forming will allow astronomers to understand what happens when planetary systems, like our own, form.

+

+ **Future of radio astronomy: molecular systems**

+

+ Wilcots explains that radio astronomers have discovered the spectral signature of innumerable molecules within the past fifty years. The ngVLA is being designed to probe, detect, catalog, and understand the origin of complex molecules and what they might tell us about star and planet formation. Wilcots comments in his talk that “this type of work is spawning a new type of science…a remarkable new discipline of astrobiology is emerging from our ability to identify and trace complex organic molecules.”

+

+ **Future of radio astronomy: galaxy completion**

+

+ Next, Wilcots discusses that radio astronomers want to understand how stars form in the first place and the processes that drive the collapse of clouds of gas into regions of star formations.

+

+

+

+ An image of a blue spiral from the VLA of a nearby spiral galaxy is on the left. On the right an optical extent of the galaxy.

+

+

+ The gas in a galaxy tends to extend well beyond the visible part of the galaxy, and this enormous gas reservoir is how the galaxy can make stars.

+

+ Astronomers like Wilcots want to know where the gas is, what drives that process of converting the gas into stars, what role the environment might play, and finally, what makes a galaxy stop creating stars.

+

+ ngVLA will be able to answer these questions as it combines the sensitivity and spatial resolution needed to take images of gas clouds in nearby galaxies while also capturing the full extent of that gas.

+

+ **Future of radio astronomy: black holes**

+

+ Wilcots’ look into the future of radio astronomy finishes with the idea and understanding of black holes.

+

+ Multi-messenger astrophysics helps experts recognize that information about the universe is not simply electromagnetic, as it is known best; there is more than one way astronomers can look at the universe.

+

+ More recently, astronomers have been looking at gravitational waves. In particular, they’ve been looking at how they can find a way to detect the gravitational waves produced by two black holes orbiting around one another to determine each black hole’s mass and learn something about them. As the recent EHT images show, we need radio telescopes' high resolution and sensitivity to understand the nature of black holes fully.

+

+ **A look toward the future**

+

+ The next step is for the NRAO to create a prototype of the dishes they want to install for the telescope. Then, it’s just a question of whether or not they can build and install enough dishes to deliver this instrument to its full capacity. Wilcots elaborates, “we hope to transition to full scientific operations by the middle of next decade (the 2030s).”

+

+ The distinguished administrator expressed that “something that’s haunted radio astronomy for a while is that to do the imaging, you have to ‘be in the club,’ ” meaning that not just anyone can access the science coming out of these telescopes. The goal of the NRAO moving forward is to create science-ready data products so that this information can be more widely available to anyone, not just those with intimate knowledge of the subject.

+

+ This effort to make this science more accessible has been part of a budding collaboration between UW-Madison, the NRAO, and a consortium of Historically Black Colleges and Universities and other Minority Serving Institutions in what is called Project RADIAL.

+

+ “The idea behind RADIAL is to broaden the community; not just of individuals engaged in radio astronomy, but also of individuals engaged in the computing that goes into doing the great kind of science we have,” Wilcots explains.

+

+ On the UW-Madison campus in the Summer of 2022, half a dozen undergraduate students from the RADIAL consortium will be on campus doing summer research. The goal is to broaden awareness and increase the participation of communities not typically involved in these discussions in the kind of research in the radial astronomy field.

+

+ “We laid the groundwork for a partnership with a number of these institutions, and that partnership is alive and well,” Wilcots remarks, “so stay tuned for more of that, and we will be advancing that in the upcoming years.”

+

+...

+

+ *Watch a video recording of Eric Wilcots’ talk at HTCondor Week 2022.*

+

+

diff --git a/2022-07-18-EOL-OSG.md b/2022-07-18-EOL-OSG.md

new file mode 100644

index 00000000..5d1f00a6

--- /dev/null

+++ b/2022-07-18-EOL-OSG.md

@@ -0,0 +1,47 @@

+---

+title: "Retirements and New Beginnings: The Transition to Tokens"

+

+author: Hannah Cheren

+

+publish_on:

+ - osg

+ - path

+ - htcondor

+

+type: news

+

+canonical_url: https://osg-htc.org/spotlights/EOL-OSG.html

+

+image:

+ path:

+ alt:

+

+description: May 1, 2022, officially marked the retirement of OSG 3.5, GridFTP, and GSI dependencies. OSG 3.6, up and running since February of 2021, is prepared for usage and took its place, relying on WebDAV and bearer tokens.

+excerpt: May 1, 2022, officially marked the retirement of OSG 3.5, GridFTP, and GSI dependencies. OSG 3.6, up and running since February of 2021, is prepared for usage and took its place, relying on WebDAV and bearer tokens.

+

+card_src:

+card_alt:

+

+banner_src:

+banner_alt:

+---

+

+ ***May 1, 2022, officially marked the retirement of OSG 3.5, GridFTP, and GSI dependencies. OSG 3.6, up and running since February of 2021, is prepared for usage and took its place, relying on WebDAV and bearer tokens.***

+

+ In December of 2019, OSG announced its plan to transition towards bearer tokens and WebDAV-based file transfer, which would ultimately culminate in the retirement of OSG 3.5. Nearly two and a half years later, after significant development and work with collaborators on the transition, OSG marked the end of support for OSG 3.5.

+

+ OSG celebrated the successful and long-planned OSG 3.5 retirement and transition to OSG 3.6, the first version of the OSG Software Stack without any Globus dependencies. Instead, it relies on WebDAV (an extension to HTTP/S allowing for distributed authoring and versioning of files) and bearer tokens.

+

+ Jeff Dost, OSG Coordinator of Operations, reports that the transition “was a big success!” Ultimately, OSG made the May 1st deadline without having to backtrack and put out new fires. Dost notes, however, that “the transition was one of the most difficult ones I can remember in the ten plus years of working with OSG, due to all the coordination needed.”

+

+ Looking back, for nearly fifteen years, communications in OSG were secured with X.509 certificates and proxies via Globus Security Infrastructure (GSI) as an Authentication and Authorization Infrastructure (AAI).

+

+ Then, in June of 2017, Globus announced the end of support for its open-source Toolkit that the OSG depended on. In October, they established the Grid Community Forum (GCF) to continue supporting the Toolkit to ensure that research could continue uninterrupted.

+

+ While the OSG continued contributing to the GCT, the long-term goal was to transition the research community from these approaches to token-based pilot job authentication instead of X.509 proxy authentication.

+

+ A more detailed document of the OSG-LHC GridFTP and GSI migration plans can be found in [this document](https://docs.google.com/document/d/1DAFeAaUmHHVcJGZMTIDUtLs9koCruQRDY1sJq1opeNs/edit#heading=h.6f8tit251wrg). Please visit the GridFTP and GSI Migration [FAQ page](https://osg-htc.org/technology/policy/gridftp-gsi-migration/index.html) if you have any questions. For more information and news about OSG 3.6, please visit the [OSG 3.6 News](https://osg-htc.org/docs/release/osg-36/) release documentation page.

+

+...

+

+ *If you have any questions about the retirement of OSG 3.5 or the implementation of OSG 3.6, please contact help@opensciencegrid.org.*

diff --git a/2022-07-18-Messick.md b/2022-07-18-Messick.md

new file mode 100644

index 00000000..81219e5d

--- /dev/null

+++ b/2022-07-18-Messick.md

@@ -0,0 +1,65 @@

+---

+title: "LIGO's Search for Gravitational Waves Signals Using HTCondor"

+

+author: Hannah Cheren

+

+publish_on:

+ - htcondor

+ - path

+ - chtc

+

+type: user

+

+canonical_url: https://htcondor.org/featured-users/2022-07-06-Messick.html

+

+image:

+ path: "https://raw.githubusercontent.com/CHTC/Articles/main/images/Messick-card.png"

+ alt: Image of two black holes from Cody Messick’s presentation slides.

+

+description: Cody Messick, a Postdoc at the Massachusetts Institute of Technology (MIT) working for the LIGO lab, describes LIGO's use of HTCondor to search for new gravitational wave sources.

+excerpt: Cody Messick, a Postdoc at the Massachusetts Institute of Technology (MIT) working for the LIGO lab, describes LIGO's use of HTCondor to search for new gravitational wave sources.

+

+card_src: "https://raw.githubusercontent.com/CHTC/Articles/main/images/Messick-card.png"

+card_alt: Image of two black holes from Cody Messick’s presentation slides.

+

+banner_src: "https://raw.githubusercontent.com/CHTC/Articles/main/images/Messick-card.png"

+banner_alt: Image of two black holes from Cody Messick’s presentation slides.

+---

+ ***Cody Messick, a Postdoc at the Massachusetts Institute of Technology (MIT) working for the LIGO lab, describes LIGO's use of HTCondor to search for new gravitational wave sources.***

+

+

+

+ Image of two black holes. Photo credit: Cody Messick’s presentation slides.

+

+

+ High-throughput computing (HTC) is critical to astronomy, from black hole research to radial astronomy and beyond. At the [2022 HTCondor Week](https://agenda.hep.wisc.edu/event/1733/timetable/?view=standard), another area of astronomy was put in the spotlight by [Cody Messick](https://space.mit.edu/people/messick-cody/), a researcher working for the [LIGO](https://space.mit.edu/instrumentation/ligo/) lab and a Postdoc at the Massachusetts Institute of Technology (MIT). His work focuses on a gravitational-wave analysis that he’s been running with the help of HTCondor to search for new gravitational wave signals.

+

+ Starting with general relativity and why it’s crucial to his work, Messick explains that “it tells us two things; first, space and time are not separate entities but are instead part of a four-dimensional object called space-time. Second, space-time is warped by mass and energy, and it’s these changes to the geometry of space-time that we experience as gravity.”

+

+ Messick notes that general relativity is important to his work because it predicts the existence of gravitational waves. These waves are tiny ripples in the curvature of space-time that travel at the speed of light and stretch and compress space. Accelerating non-spherically symmetric masses generate these waves.

+

+ Generating ripples in the curvature of space-time large enough to be detectable using modern ground-based gravitational-wave observatories takes an enormous amount of energy; the observations made thus far have come from the mergers of compact binaries, pairs of extraordinarily dense yet relatively small astronomical objects that spiral into each other at speeds approaching the speed of light. Black holes and neutron stars are examples of these so-called compact objects, both of which are or almost are perfectly spherical.

+

+ Messick and his team first detected two black holes going two-thirds the speed of light right before they collided. “It’s these fantastic amounts of energy in a collision that moves our detectors by less than the radius of a proton, so we need extremely energetic explosions of collisions to detect these things.”

+

+ Messick looks for specific gravitational waveforms during the data analysis. “We don’t know which ones we’re going to look for or see in advance, so we look for about a million different ones.” They then use match filtering to find the probability that the random noise in the detectors would generate something that looks like a gravitational-wave; the first gravitational-wave observation had less than a 1 in 3.5 billion chance of coming from noise and matched theoretical predictions from general relativity extremely well.

+

+ Messick's work with external collaborators outside the LIGO-Virgo-KAGRA collaboration looks for systems their normal analyses are not sensitive to. Scientists use the parameter kappa to characterize the ability of a nearly spherical object to distort when spinning rapidly or, in simple terms, how squished a sphere will become when spinning quickly.

+

+ LIGO searches are insensitive to any signal with a kappa greater than approximately ten. “There could be [signals] hiding in the data that we can’t see because we’re not looking with the right waveforms,” Messick explains. His analysis has been working on this problem.

+

+ Messick uses HTCondor DAGs to model his workflows, which he modified to make integration with OSG easier. The first job checks the frequency spectrum of the noise. These workflows go into an aggregation of the frequency spectrum, decomposition (labeled by color by type of detector), and finally, the filtering process occurs.

+

+

+

+ A section of Messick’s DAG workflow.

+

+

+Although Messick’s work is more physics-heavy than computationally driven, he remarks that “HTCondor is extremely useful to us… it can fit the work we’ve been doing very, very naturally.”

+

+...

+

+ *Watch a video recording of Cody Messick’s talk at HTCondor Week 2022, and browse his [slides](https://agenda.hep.wisc.edu/event/1733/contributions/25501/attachments/8303/9586/How%20LIGO%20Analysis%20is%20using%20HTCondor.pdf).*

+

+

+

diff --git a/2022-09-27-DoIt-Article-Summary.md b/2022-09-27-DoIt-Article-Summary.md

index 6b09f13e..984389f2 100644

--- a/2022-09-27-DoIt-Article-Summary.md

+++ b/2022-09-27-DoIt-Article-Summary.md

@@ -1,5 +1,5 @@

---

-title: "Solving for the future: Investment, new coalition levels up research computing infrastructure at UW–Madison"

+title: Summary of "Solving for the future; Investment, new coalition levels up research computing infrastructure at UW–Madison"

author: Hannah Cheren

diff --git a/2022-11-03-ucsd-external-release.md b/2022-11-03-ucsd-external-release.md

new file mode 100644

index 00000000..d0ce2a77

--- /dev/null

+++ b/2022-11-03-ucsd-external-release.md

@@ -0,0 +1,49 @@

+---

+title: PATh Extends Access to Diverse Set of High Throughout Computing Research Programs

+

+author: Cannon Lock

+

+publish_on:

+- path

+

+type: news

+

+canonical_url: "https://path-cc.io/news/2022-11-03-ucsd-external-release"

+

+image:

+ path: "https://raw.githubusercontent.com/CHTC/Articles/main/images/ucsd-public-relations.png"

+ alt: The colors on the chart correspond to the total number of core hours – nearly 884,000 – utilized by researchers at participating universities on PATh Facility hardware located at SDSC.

+

+description: |

+ UCSD announces the new PATh Facility and discusses its impact on science.

+excerpt: |

+ UCSD announces the new PATh Facility and discusses its impact on science.

+

+card_src: "https://raw.githubusercontent.com/CHTC/Articles/main/images/ucsd-public-relations.png"

+card_alt: The colors on the chart correspond to the total number of core hours – nearly 884,000 – utilized by researchers at participating universities on PATh Facility hardware located at SDSC.

+---

+

+Finding the right road to research results is easier when there is a clear PATh to follow. The Partnership to Advance Throughput Computing ([PATh](https://path-cc.io/))—a partnership between the [OSG Consortium](https://osg-htc.org/) and the University of Wisconsin-Madison’s Center for High Throughput Computing ([CHTC](https://chtc.cs.wisc.edu/)) supported by the National Science Foundation (NSF)—has cleared the way for science and engineering researchers for years with its commitment to advancing distributed high throughput computing (dHTC) technologies and methods.

+

+HTC involves running a large number of independent computational tasks over long periods of time—from hours and days to week or months. dHTC tools leverage automation and build on distributed computing principles to save researchers with large ensembles incredible amounts of time by harnessing the computing capacity of thousands of computers in a network—a feat that with conventional computing could take years to complete.

+

+Recently PATh launched the [PATh Facility](https://path-cc.io/facility/index.html), a dHTC service meant to handle HTC workloads in support and advancement of NSF-funded open science. It was announced earlier this year via a [Dear Colleague Letter](https://www.nsf.gov/pubs/2022/nsf22051/nsf22051.jsp) issued by the NSF and identified a diverse set of [eligible research programs](https://www.nsf.gov/pubs/2022/nsf22051/nsf22051.jsp) that range across 14 domain science areas including geoinformatics, computational methods in chemistry, cyberinfrastructure, bioinformatics, astronomy, arctic research and more. Through this 2022-2023 fiscal year pilot project, the NSF awards credits for access to the PATh Facility, and researchers can request computing credits associated with their NSF awards. There are two ways to request credit: 1) within new proposals or 2) with existing awards via an email request for additional credits to participating program officers.

+

+“It is a remarkable program because it spans almost the entirety of the NSF’s directorates and offices,” said San Diego Supercomputer Center ([SDSC](https://www.sdsc.edu/)) Director Frank Würthwein, who also serves as executive director of the OSG Consortium.

+

+Access to the PATh Facility offers researchers approximately 35,000 modern cores and up to 44 A100 GPUs. Recently SDSC, located at [UC San Diego](https://ucsd.edu/), added PATh Facility hardware on its [Expanse](https://www.sdsc.edu/services/hpc/expanse/) supercomputer for use by researchers with PATh credits. According to SDSC Deputy Director Shawn Strande: “Within the first two weeks of operations, we saw researchers from 10 different institutions, including one minority serving institution, across nearly every field of science. The beauty of the PATh model of system integration is that researchers have access as soon as the resource is available via OSG. PATh democratizes access by lowering barriers to doing research on advanced computing resources.”

+

+While the PATh credit ecosystem is still growing, any PATh Facility capacity not used for credit will be available to the Open Science Pool ([OSPool](https://osg-htc.org/services/open_science_pool.html)) to benefit all open science under a Fair-Share allocation policy. “For researchers familiar with the OSPool, running HTC workloads on the PATh Facility should feel like second-nature” said Christina Koch, PATh’s research computing facilitator.

+

+“Like the OSPool, the PATh Facility is nationally spanning, geographically distributed and ideal for HTC workloads. But while resources on the OSPool belong to a diverse range of campuses and organizations that have generously donated their resources to open science, the allocation of capacity in the PATh Facility is managed by the PATh project itself,” said Koch.

+

+PATh will eventually reach over six national sites: SDSC at UC San Diego, CHTC at the University of Wisconsin-Madison, the Holland Computing Center at the University of Nebraska-Lincoln, Syracuse University’s Research Computing group, the Texas Advanced Computing Center at the University of Texas at Austin and Florida International University’s AMPATH network in Miami.

+

+PIs may contact [credit-accounts@path-cc.io](mailto:credit-accounts@path-cc.io) with questions about PATh resources, using HTC, or estimating credit needs. More details also are available on the [PATh credit accounts](https://path-cc.io/services/credit-accounts/) web page.

+

+

+

+

+ A diverse set of PATh national and international users benefit from the resource, and the recent launch of the PATh Facility further supports HTC workloads in an effort to advance NSF-funded open science. The colors on the chart correspond to the total number of core hours – nearly 884,000 – utilized by researchers at participating universities on PATh Facility hardware located at SDSC. Credit: Ben Tolo, SDSC

+

+

\ No newline at end of file

diff --git a/2022-11-09-CHTC-pool-record.md b/2022-11-09-CHTC-pool-record.md

new file mode 100644

index 00000000..4140f541

--- /dev/null

+++ b/2022-11-09-CHTC-pool-record.md

@@ -0,0 +1,65 @@

+---

+title: CHTCPool Hits Record Number of Core Hours

+

+author: Shirley Obih

+

+publish_on:

+ - htcondor

+ - path

+ - chtc

+

+type: news

+

+canonical_url: https://chtc.cs.wisc.edu/CHTC-pool-record.html

+

+image:

+ path: https://raw.githubusercontent.com/CHTC/Articles/main/images/Pool-Record-Image.jpg

+ alt: Pool Record Banner

+

+description: CHTC smashes record

+excerpt: CHTC smashes record

+

+card_src: https://raw.githubusercontent.com/CHTC/Articles/main/images/Pool-Record-Image.jpg

+card_alt: Pool Record Banner

+

+banner_src: https://raw.githubusercontent.com/CHTC/Articles/main/images/Pool-Record-Image.jpg

+banner_alt: Pool Record Banner

+---

+

+CHTC users recorded the most ever usage in the CHTC Pool on October 18th this year - utilizing

+over 700,000 core hours - only to have that record broken again a mere two days later on Oct 20th,

+with a total of 710,796 core hours reached.

+

+The Center for High Throughput (CHTC) users are hard at work smashing records with two almost consecutive record numbers of core hour usage.

+October 20th was the highest daily core hour in the CHTC Pool with 710,796 hours utilized, a feat attained

+just two days after the October 18th record break of 705,801 core hours.

+

+What is contributing to these records? One factor likely is UW’s investment in new hardware.

+UW-Madison’s research computing hardware recently underwent a [substantial hardware refresh](https://chtc.cs.wisc.edu/DoIt-Article-Summary.html),

+adding 207 new servers representing over 40,000 “batch slots” of computing capacity.

+

+However, additional capacity requires researchers ready and capable to use it.

+The efforts of the CHTC facilitation team, led by Christina Koch, contributed to

+this readiness. Since September 1, CHTC's Research Computing Facilitators have met

+with 70 new users for an introductory consultation, and there have been over 80

+visits to the twice-weekly drop-in office hours hosted by the facilitation team.

+Koch notes that "using large-scale computing can require skills and concepts that

+are new to most researchers - we are here to help bridge that gap."

+

+Finally, the hard work of the researchers themselves is another linchpin to these records.

+Over 80 users that span many fields of science contributed to this success, including

+these users with substantial usage:

+

+- [Ice Cube Neutrino Observatory](https://icecube.wisc.edu): an observatory operated by University of Madison, designed to observe the cosmos from deep within the South Pole ice.

+- [ECE_miguel](https://www.ece.uw.edu/people/miguel-a-ortega-vazquez/): In the Department of Electrical and Computer Engineering, Joshua San Miguel’s group explores new paradigms in computer architecture.

+- [MSE_Szlufarska](https://directory.engr.wisc.edu/mse/Faculty/Szlufarska_Izabela/): Isabel Szlufarska’s lab focuses on computational materials science, mechanical behavior at the nanoscale using atomic scale modeling to understand and design new materials.

+- [Genetics_Payseur](https://payseur.genetics.wisc.edu): Genetics professor Bret Payseur’s lab uses genetics and genomics to understand mechanisms of evolution.

+- [Pharmacy_Jiang](https://apps.pharmacy.wisc.edu/sopdir/jiaoyang_jiang/index.php): Pharmacy professor Jiaoyang Jiang’s interests span the gap between biology and chemistry by focusing on identifying the roles of protein post-translational modifications in regulating human physiological and pathological processes.

+- [EngrPhys_Franck](https://www.franck.engr.wisc.edu): Jennifer Franck’s group specializes in the development of new experimental techniques at the micro and nano scales with the goal of providing unprecedented full-field 3D access to real-time imaging and deformation measurements in complex soft matter and cellular systems.

+- [BMI_Gitter](https://www.biostat.wisc.edu/~gitter/): In Biostatistics and Computer Sciences, Anthony Gitter’s lab conducts computational biology research that brings together machine learning techniques and problems in biology

+- [DairyScience_Dorea](https://andysci.wisc.edu/directory/joao-ricardo-reboucas-dorea/): Joao Dorea’s Animal and Dairy Science group focuses on the development of high-throughput phenotyping technologies.

+

+Any UW student or researcher who wants to utilize high throughput of computing resources

+towards a given problem can harness the capacity of CHTC Pool.

+

+[Users can sign up here](https://chtc.cs.wisc.edu/uw-research-computing/get-started.html)

diff --git a/2022-12-05-htcondor-week-2023.md b/2022-12-05-htcondor-week-2023.md

new file mode 100644

index 00000000..dd76259a

--- /dev/null

+++ b/2022-12-05-htcondor-week-2023.md

@@ -0,0 +1,48 @@

+---

+title: "Save the Date! HTCondor Week 2023, June 5-8"

+

+author: Hannah Cheren

+

+publish_on:

+ - htcondor

+

+type: news

+

+canonical_url: http://htcondor.org/HTCondorWeek2023

+

+image:

+ path: https://raw.githubusercontent.com/CHTC/Articles/main/images/HTCondor_Banner.jpeg

+ alt: HTCondor Week 2023

+

+description: "Save the Date! HTCondor Week 2023, June 5-8"

+excerpt: "Save the Date! HTCondor Week 2023, June 5-8"

+

+card_src: https://raw.githubusercontent.com/CHTC/Articles/main/images/HTCondor_Banner.jpeg

+card_alt: HTCondor Week 2023

+

+banner_src: https://raw.githubusercontent.com/CHTC/Articles/main/images/HTCondor_Banner.jpeg

+banner_alt: HTCondor Week 2023

+---

+

+

Save the Date for HTCondor Week May 23 - 26!

+

+

+Hello HTCondor Users and Collaborators!

+

+We want to invite you to HTCondor Week 2023, our annual HTCondor user conference, from June 5-8, 2023 at the Fluno Center at the Univeristy of Wisconsin-Madison!

+

+More information about registration coming soon.

+

+We will have a variety of in-depth tutorials and talks where you can learn more about HTCondor and how other people are using and deploying HTCondor. Best of all, you can establish contacts and learn best practices from people in industry, government, and academia who are using HTCondor to solve hard problems, many of which may be similar to those you are facing.

+

+And make sure you check out these articles written on presentations from last year's HTCondor Week!

+- [Using high throughput computing to investigate the role of neural oscillations in visual working memory](https://path-cc.io/news/2022-07-06-Fulvio/)

+- [Using HTC and HPC Applications to Track the Dispersal of Spruce Budworm Moths](https://path-cc.io/news/2022-07-06-Garcia/)

+- [Testing GPU/ML Framework Compatibility](https://path-cc.io/news/2022-07-06-Hiemstra/)

+- [Expediting Nuclear Forensics and Security Using High Throughput Computing](https://path-cc.io/news/2022-07-06-Opotowsky/)

+- [The Future of Radio Astronomy Using High Throughput Computing](https://path-cc.io/news/2022-07-12-Wilcots/)

+- [LIGO's Search for Gravitational Waves Signals Using HTCondor](https://path-cc.io/news/2022-07-21-Messick/)

+

+Hope to see you there,

+

+\- The Center for High Throughput Computing

diff --git a/2022-12-14-CHTC-Facilitation.md b/2022-12-14-CHTC-Facilitation.md

new file mode 100644

index 00000000..ccb12697

--- /dev/null

+++ b/2022-12-14-CHTC-Facilitation.md

@@ -0,0 +1,71 @@

+---

+title: CHTC Facilitation Innovations for Research Computing

+

+author: Hannah Cheren

+

+publish_on:

+- chtc

+- path

+- htcondor

+- osg

+

+type: news

+

+canonical_url: "https://chtc.cs.wisc.edu/chtc-facilitation.html"

+

+image:

+path: "https://raw.githubusercontent.com/CHTC/Articles/main/images/Facilitation-cover.jpeg"

+alt: Research Computing Facilitator Christina Koch with a researcher.

+

+description: |

+ After adding Research Computing Facilitators in 2013-2014, CHTC has expanded its reach to support researchers in all disciplines interested in using large-scale computing to support their research through the shared computing capacity offered by the CHTC.

+excerpt: |

+ After adding Research Computing Facilitators in 2013-2014, CHTC has expanded its reach to support researchers in all disciplines interested in using large-scale computing to support their research through the shared computing capacity offered by the CHTC.

+

+card_src: "https://raw.githubusercontent.com/CHTC/Articles/main/images/Facilitation-cover.jpeg"

+card_alt: Research Computing Facilitator Christina Koch with a researcher.

+---

+ ***After adding Research Computing Facilitators in 2013-2014, CHTC has expanded its reach to support researchers in all disciplines interested in using large-scale computing to support their research through the shared computing capacity offered by the CHTC.***

+

+

+

+ Research Computing Facilitator Christina Koch with a researcher.

+

+

+ As the core research computing center at the University of Wisconsin-Madison and the leading high throughput computing (HTC) force nationally, the Center for High Throughput Computing (CHTC), formed in 2014, has always had one simple goal: to help researchers in all fields use HTC to advance their work.

+

+ Soon after its founding, CHTC learned that computing capacity alone was not enough; there needed to be more communication between researchers who used computing and the computer scientists who wanted to help them. To address this gap, the CHTC needed a new, two-way communication model that better understood and advocated for the needs of researchers and helped them understand how to apply computing to transform their research. In 2013, CHTC hired its first Research Computing Facilitator (RCF), Lauren Michael, to implement this new model and provide staff experience in domain research, research computing, and communication/teaching skills. Since then, the team has expanded to include additional facilitators, which today include Christina Koch, now leading the team, Rachel Lombardi, and a new team member CHTC is actively hiring.

+

+

+## What is an RCF?

+ An RCF’s job is to understand a new user's research goals and provide computing options that fit their needs. “As a Research Computing Facilitator, we want to facilitate the researcher’s use of computing,” explains Koch. “They can come to us with problems with their research, and we can advise them on different computing possibilities.”

+

+ Computing facilitators know how to work with researchers and understand research enough to guide the customizations researchers need. More importantly, RCFs are passionate about helping people and solving problems.

+

+ In the early days of CHTC, it was a relatively new idea to hire people with communication and problem-solving skills and apply those talents to computational research. Having facilitators with these skills bridge the gap between research computing organizations and researchers was what was unique to CHTC; in fact, the term “Research Computing Facilitator” was coined at UW-Madison.

+

+## RCF as a part of the CHTC model

+ Research computing facilitators have become an integral part of the CHTC and are a unique part of the model for this center. Koch elaborates that “...what’s unique at the CHTC is having a dedicated role – that we’re not just ‘user support’ responding to people’s questions, but we’re taking this more proactive, collaborative stance with researchers.” Research Computing Facilitators strengthen the CHTC and allow a more diverse range of computing dimensions to be supported. This support gives these researchers a competitive edge that others may not necessarily have.

+

+ The uniqueness of the RFC role allows for customized solutions for researchers and their projects. They meet with every researcher who [requests an account](https://chtc.cs.wisc.edu/uw-research-computing/form.html) to use [CHTC computing resources](https://chtc.cs.wisc.edu/uw-research-computing/index.html). These individual meetings allow RCFs to have strategic conversations to provide personal recommendations and discuss long-term goals.

+

+ Meetings between the facilitators and researchers also get researchers thinking about what they could do if they could do things faster, at a grander scale, and with less time and effort investment for each project. “We want to understand what their research project is, the goals of that project, and the limitations they’re concerned with to see if using CHTC resources could aid them,” Lombardi explains. “We’re always willing to push the boundaries of our services to try to accommodate to researchers' needs.” The RCFs must know enough about the researchers’ work to talk to the researchers about the dimensions of their computing requirements in terms they understand.

+

+ Although RCFs are integral to CHTC’s model, that doesn’t mean it doesn’t come without challenges. One hurdle is that they are facilitators, which means they’re ultimately not the ones to make choices for the researchers they support. They present solutions given each researcher’s unique circumstances, and it’s up to researchers to decide what to do. Koch explains that“it’s about finding the balance between helping them make those decisions while still having them do the actual work, even if it’s sometimes hard, because they understand that it will pay off in the long run.”

+

+ Supporting research computing across domains is also a significant CHTC facilitation accomplishment. Researchers used to need a programming background to apply computing to their analyses, which meant the physical sciences typically dominated large-scale computational analyses. Over the years, computing has become a lot more accessible. More researchers in the life sciences, social sciences, and humanities, have access to community software tools they can apply to their research problems. “It’s not about a user’s level of technical skill or what kind of science they do,” Koch says. It’s about asking, “are you using computing, and do you need help expanding?” CHTC’s ability to pull in researchers across new disciplines has been rewarding and beneficial. “When new disciplines start using computing to tackle their problems, they can do some new, interesting research to contribute to their fields,” Koch notes.

+

+## Democratizing Access

+ CHTC’s success can inspire other campuses to rethink their research computing operations to support their researchers better and innovate. Recognized nationally and internationally as an expert in HTC and facilitation, CHTC’s approach has started to make its way onto other campus computing centers.

+

+ CHTC efforts aim to bring broader access to HTC systems. “CHTC has enabled access to computing to a broad spectrum of researchers on campus,” Lombardi explains, “and we strive to help researchers and organizations implement throughput computing capacity.” CHTC is part of national and international efforts to bring that level of computing to other communities through partnerships with organizations, such as the [Campus Cyberinfrastructure (CC*) NSF program](https://beta.nsf.gov/funding/opportunities/campus-cyberinfrastructure-cc).

+

+ The CC* program supports campuses across the country that wish to contribute computing capacity to the [Open Science Pool (OSPool)](https://osg-htc.org/services/open_science_pool.html). These institutions are awarded a grant, and in turn, they agree to donate resources to the OSPool, a mutually beneficial system to democratize computing and make it more accessible to researchers who might not have access to such capacity otherwise.

+

+ The RCF team meets with researchers weekly from around the world (including Africa, Europe, and Asia). They hold OSG Office Hours twice a week for one-on-one support and provide training at least twice a month for new users and on special topics.

+

+ For other campuses to follow in CHTC’s footsteps, they can start implementing facilitation first, even before a campus has any computing systems. In some cases, such as on smaller campuses, they might not even have or need to have a computing center. Having facilitators is crucial to providing researchers with individualized support for their projects.

+

+ The next step would be for campuses to look at how they currently support their researchers, including examining what they’re currently doing and if there’s anything they’d want to do differently to communicate this ethic of supporting researchers.

+

+ Apart from the impact that research computing facilitators have had on the research community, Koch notes what this job means to her, “[w]orking for a more mission-driven organization where I feel like I’m enabling other people’s research success is so motivating.” Now, almost ten years later, the CHTC has gone from having roughly one hundred research groups using the capacity it provides to having several hundred research groups and thousands of users per year. “Facilitation will continue to advise and support these projects to advance the big picture,” Lombardi notes, “we’ll always be available to researchers who want to talk to someone about how CHTC resources can advance their work!”

diff --git a/2022-12-19-Lightning-Talks.md b/2022-12-19-Lightning-Talks.md

new file mode 100644

index 00000000..7176f3a7

--- /dev/null

+++ b/2022-12-19-Lightning-Talks.md

@@ -0,0 +1,172 @@

+---

+title: "Student Lightning Talks from the OSG User School 2022"

+

+author: Hannah Cheren

+

+publish_on:

+ - osg

+ - path

+ - chtc

+ - htcondor

+

+type: news

+

+canonical_url: https://osg-htc.org/spotlights/Lightning-Talks.html

+

+image:

+ path: "https://raw.githubusercontent.com/CHTC/Articles/main/images/Lightning-Talks-card.jpeg"

+ alt: Staff and attendees from the OSG User School 2022.

+

+description: The OSG User School student lightning talks showcased their research, inspiring all the event participants.

+excerpt: The OSG User School student lightning talks showcased their research, inspiring all the event participants.

+

+card_src: "https://raw.githubusercontent.com/CHTC/Articles/main/images/Lightning-Talks-card.jpeg"

+card_alt: Staff and attendees from the OSG User School 2022.

+

+banner_src: "https://raw.githubusercontent.com/CHTC/Articles/main/images/Lightning-Talks-card.jpeg"

+banner_alt: Staff and attendees from the OSG User School 2022.

+---

+ ***The OSG User School student lightning talks showcased their research, inspiring all the event participants.***

+

+

+

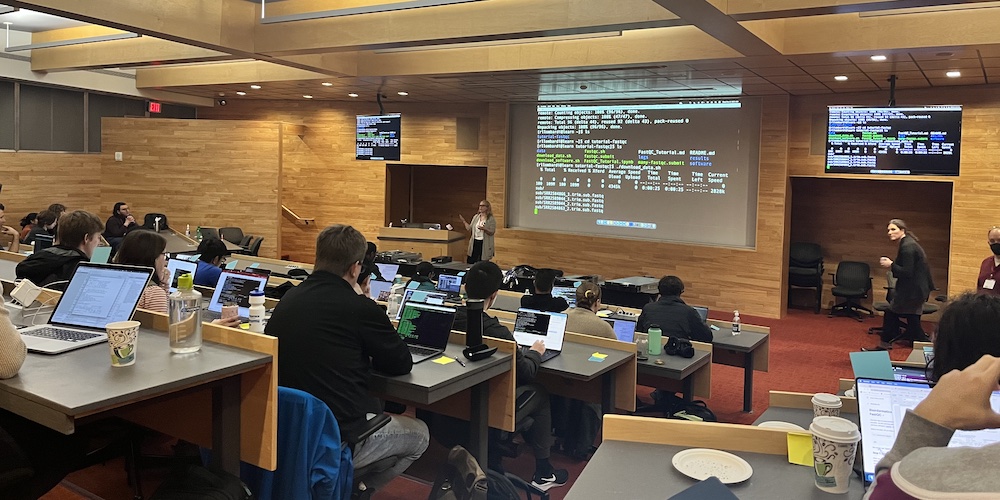

+ Staff and attendees from the OSG User School 2022.

+

+

+ Each summer, the OSG Consortium offers a [week-long summer school](https://osg-htc.org/user-school-2022/) for researchers who want to learn how to use [high-throughput computing](https://htcondor.org/htc.html) (HTC) methods and services to handle large-scale computing applications at the heart of today’s cutting-edge science. This past summer the school was back in-person on the University of Wisconsin–Madison campus, attended by 57 students and over a dozen staff.

+

+ Participants from Mali and Uganda, Africa, to campuses across the United States learned through lectures, discussions, and hands-on activities how to apply HTC approaches to handle large ensembles of jobs and large datasets in support of their research work.

+“It's truly humbling to see how much cool work is being done with computing on @CHTC_UW and @opensciencegrid!!” research facilitator Christina Koch tweeted regarding the School.

+

+ One highlight of the School is the closing participants’ lightning talks, where the researchers present their work and plans to integrate HTC, expanding the scope and goals of their research.

+The lightning talks given at this year’s OSG User School illustrate the diversity of students’ research and its expanding scope enabled by the power of HTC and the School.

+

+ *Note: Applications to attend the School typically open in March. Check the [OSG website](https://osg-htc.org/) for this announcement.*

+

+

+

+ Devin Bayly

+

+

+ [Devin Bayly](https://sxsw.arizona.edu/person/devin-bayly), a data and visualization consultant at the University of Arizona's Research Technologies department, presented “*OSG for Vulkan StarForge Renders.*” Devin has been working on a multimedia project called Stellarscape, which combines astronomy data with the fine arts. The project aims to pair the human’s journey with a star’s journey from birth to death.

+

+ His goal has been to find a way to support connections with the fine arts, a rarity in the HTC community. After attending the User School, Devin intends to use the techniques he learned to break up his data and entire simulation into tiles and use a low-level graphics API called Vulkan to target and render the data on CPU/GPU capacity. He then intends to combine the tiles into individual frames and assemble them into a video.

+

+

+

+ Rendering of the Starforge simulation gas position data.

+

+

+ Starforge Anvil of Creation: *Grudi'c, Michael Y. et al. “STARFORGE: Toward a comprehensive numerical model of star cluster formation and feedback.” arXiv: Instrumentation and Methods for Astrophysics (2020): n. pag. [https://arxiv.org/abs/2010.11254](https://arxiv.org/abs/2010.11254)*

+

+

+

+ Mike Nsubuga

+

+

+ [Mike Nsubuga](https://miken.netlify.app/), a Bioinformatics Research fellow at the African Center of Excellence in Bioinformatics and Data-Intensive Sciences ([ACE](https://ace.ac.ug/)) within the Infectious Disease Institute ([IDI](https://idi.mak.ac.ug/)) at Makerere University in Uganda, presented “*End-to-End AI data systems for targeted surveillance and management of COVID-19 and future pandemics affecting Uganda.*”

+

+ Nsubuga noted that in the United States, there are two physicians for every 1000 people; in Uganda, there is only one physician per 25,000 people. Research shows that AI, automation, and data science can support overburdened health systems and health workers when deployed responsibly.

+Nsubuga and a team of Researchers at ACE are working on creating AI chatbots for automated and personalized symptom assessments in English and Luganda, one of the major languages of Uganda. He's training the AI models using data from the public and healthcare workers to communicate with COVID-19 patients and the general public.

+

+ While at the School, Nsubuga learned how to containerize his data into a Docker image, and from that, he built an Apptainer (formerly Singularity) container image. He then deployed this to the [Open Science Pool](https://osg-htc.org/services/open_science_pool.html) (OSPool) to determine how to mimic the traditional conversation assistant workflow model in the context of COVID-19. The capacity offered by the OSPool significantly reduced the time it takes to train the AI model by eight times.

+

+

+

+ Jem Guhit

+

+

+ Jem Guhit, a Physics Ph.D. candidate from the University of Michigan, presented “*Search for Di-Higgs production in the LHC with the ATLAS Experiment in the bbtautau Final State.*” The Higgs boson was discovered in 2012 and is known for the Electroweak Symmetry Breaking (EWSB) phenomenon, which explains how other particles get mass. Since then, the focus of the LHC has been to investigate the properties of the Higgs boson, and one can get more insight into how the EWSB Mechanism works by searching for two Higgs bosons using the ATLAS Detector. The particle detectors capture the resultant particles from proton-proton collisions and use this as data to look for two Higgs bosons.

+

+ DiHiggs searches pose a challenge because the rate at which a particle process occurs for two Higgs bosons is 30x smaller than for a single Higgs boson. Furthermore, the particles the Higgs can decay to have similar particle trajectories to other particles produced in the collisions unrelated to the Higgs boson. Her strategy is to use a machine learning (ML) method powerful enough to handle complex patterns to determine whether the decay products come from a Higgs boson. She plans to use what she’s learned at the User School to show improvements in her machine-learning techniques and optimizations. With these new skills, she has been running jobs on the University of Michigan's [HTCondor](https://htcondor.com/) system utilizing GPU and CPUs to run ML jobs efficiently and plans to use the [OSPool](https://osg-htc.org/services/open_science_pool.html) computing cluster to run complex jobs.

+

+

+

+ Peder Engelstad

+

+

+ [Peder Engelstad](https://www.nrel.colostate.edu/ra-highlights-meet-peder-engelstad/), a spatial ecologist and research associate in the Natural Resource Ecology Laboratory at Colorado State University (and 2006 University of Wisconsin-Madison alumni), presented a talk on “*Spatial Ecology & Invasive Species.*” Engelstad’s work focuses on the ecological importance of natural spatial patterns of invasive species.

+

+ He uses modeling and mapping techniques to explore the spatial distribution of suitable habitats for invasive species. The models he uses combine locations of species with remotely-sensed data, using ML and spatial libraries in R. Recently. he’s taken on the massive task of creating thousands of suitability maps. To do this sequentially would take over three years, but he anticipates HTC methods can help drastically reduce this timeframe to a matter of days.

+

+ Engelstad said it’s been exciting to see the approaches he can use to tackle this problem using what he’s learned about HTC, including determining how to structure his data and break it into smaller chunks. He notes that the nice thing about using geospatial data is that they are often in a 2-D grid system, making it easy to index them spatially and designate georeferenced tiles to work on. Engelstad says that an additional benefit of incorporating HTC methods will be to free up time to work on other scientific questions.

+

+

+

+ Zachary Baldwin

+

+

+ [Zachary Baldwin](https://zabaldwin.github.io/), a Ph.D. candidate in Nuclear and Particle Physics at Carnegie Mellon University, works for the [GlueX Collaboration](http://www.gluex.org/), a particle physics experiment at the Thomas Jefferson National Lab that searches for and studies exotic hybrid mesons. Baldwin presented a talk on “*Analyzing hadronic systems in the search for exotic hybrid mesons at GlueX.*”

+

+ His thesis looks at data collected from the GlueX experiment to possibly discover forbidden quantum numbers found within subatomic particle systems to determine if they exist within our universe. Baldwin's experiment takes a beam of electrons, speeds them up to high energies, and then collides them with a thin diamond wafer. These electrons then slow down, producing linearly polarized photons. These photons will then collide with a container of liquid hydrogen (protons) within the center of his experiment. Baldwin studies the resulting systems produced within these photon-proton collisions.

+

+ The collision creates billions of particles, leaving Baldwin with many petabytes of data. Baldwin remarks that too much time gets wasted looping through all the data points, and massive processes run out of memory before he can compute results, which is one aspect where HTC comes into play. Through the User School, another major area he's been working on is simulating Monte Carlo particle reactions using [OSPool](https://osg-htc.org/services/open_science_pool.html)'s containers which he pushes into the OSPool using HTCondor to simulate events that he believes would happen in the real world.

+

+

+

+ Olaitan Awe

+

+

+ Olaitan Awe, a systems analyst in the Information Technology department at the Jackson Laboratory (JAX), presented “*Newborn Screening (NBS) of Inborn Errors of Metabolism (IEM).*” The goal of newborn screening is that, when a baby is born, it detects early what diseases they might have.

+

+ Genomic Newborn Screenings (gNBS) are generally cheap, detect many diseases, and have a quick turnaround time. The gNBS takes a child’s genome and compares it to a reference genome to check for variations. The computing challenge lies in looking for all variations, determining which are pathogenic, and seeing which diseases they align with.

+

+ After attending the User School, Awe intends to tackle this problem by writing [DAGMan](https://htcondor.org/dagman/dagman.html) scripts to implement parent-child relations in a pipeline he created. He then plans to build custom containers to run the pipeline on the [OSPool](https://osg-htc.org/services/open_science_pool.html) and stage big data shared across parent-child processes. The long-term goal is to develop a validated, reproducible gNBS pipeline for routine clinical practice and apply it to African populations.

+

+

+

+ Max Bareiss

+

+

+ [Max Bareiss](https://safetyimpact.beam.vt.edu/news/2021Abstracts/BareissAAAM20211.html), a Ph.D. Candidate at the Virginia Tech Center for Injury Biomechanics presented “*Detection of Camera Movement in Virginia Traffic Camera Video on OSG.*” Bareiss used a data set of 1263 traffic cameras in Virginia for his project. His goal was to determine how to document the crash, near-crashes, and normal driving recorded by traffic cameras using his video analysis pipeline. This work would ultimately allow him to detect vehicles and pedestrians and determine their trajectories.

+

+ The three areas he wanted to tackle and obtain help with at the User School were data movement, code movement, and using GPUs for other tasks. For data movement, he used MinIO, a high-performance object storage, so that the execution points could directly copy the videos from Virginia Tech. For code movement, Bareiss used Alpine Linux and multi-stage build, which he learned to implement throughout the week. He learned about using GPUs at the [Center for High Throughput Computing](https://chtc.cs.wisc.edu/) (CHTC) and in the [OSPool](https://osg-htc.org/services/open_science_pool.html).

+

+ Additionally, he learned about [DAGMan](https://htcondor.org/dagman/dagman.html), which he noted was “very exciting” since his pipeline was already a directed acyclic graph (DAG).

+

+

+

+ Matthew Dorsey

+

+

+ [Matthew Dorsey](https://www.linkedin.com/in/matthewadorsey/), a Ph.D. candidate in the Chemical and Biomolecular Engineering Department at North Carolina State University, presented on “*Computational Studies of the Structural Properties of Dipolar Square Colloids.*”

+

+ Dorsey is studying a colloidal particle developed in a research lab at NC State University in the Biomolecular Engineering Department. His research focuses on using computer models to discover what these particles can do. The computer models he has developed explore how different parameters (like the system’s temperature, particle density, and the strength of an applied external field) affect the particle’s self-assembly.

+

+ Dorsey recently discovered how the magnetic dipoles embedded in the squares lead to structures with different material properties. He intends to use the [HTCondor Software Suite](https://htcondor.com/htcondor/overview/) (HTCSS) to investigate the applied external fields that change with respect to time. “The HTCondor system allows me to rapidly investigate how different combinations of many different parameters affect the colloids' self-assembly,” Dorsey says.

+

+

+

+ Ananya Bandopadhyay

+

+

+ [Ananya Bandopadhyay](https://thecollege.syr.edu/people/graduate-students/ananya-bandopadhyay/), a graduate student from the Physics Department at Syracuse University, presented “*Using HTCondor to Study Gravitational Waves from Binary Neutron Star Mergers.*”

+

+ Gravitational waves are created when black holes or neutron stars crash into each other. Analyzing these waves helps us to learn about the objects that created them and their properties.

+

+ Bandopadhyay's project focuses on [LIGO](https://www.ligo.caltech.edu/)'s ability to detect gravitational wave signals coming from binary neutron star mergers involving sub-solar mass component stars, which she determines from a graph which shows the detectability of the signals as a function of the component masses comprising the binary system.

+

+ The fitting factors for the signals would have initially taken her laptop a little less than a year to run. She learned how to use [OSPool](https://osg-htc.org/services/open_science_pool.html) capacity from the School, where it takes her jobs only 2-3 days to run. Other lessons that Bandopadhyay hopes to apply are data organization and management as she scales up the number of jobs. Additionally, she intends to implement [containers](https://htcondor.readthedocs.io/en/latest/users-manual/container-universe-jobs.html) to help collaborate with and build upon the work of researchers in related areas.

+

+

+

+ Meng Luo

+

+

+ [Meng Luo](https://www.researchgate.net/profile/Meng-Luo-8), a Ph.D. student from the Department of Forest and Wildlife Ecology at the University of Wisconsin–Madison, presented “*Harnessing OSG to project the impact of future forest productivity change on land use change.*” Luo is interested in learning how forest productivity increases or decreases over time.

+

+ Luo built a single forest productivity model using three sets of remote sensing data to predict this productivity, coupling it with a global change analysis model to project possible futures.

+

+ Using her computer would take her two years to finish this work. During the User School, Luo learned she could use [Apptainer](https://portal.osg-htc.org/documentation/htc_workloads/using_software/containers-singularity/) to run her model and multiple events simultaneously. She also learned to use the [DAGMan workflow](https://htcondor.readthedocs.io/en/latest/users-manual/dagman-workflows.html) to organize the process better. With all this knowledge, she ran a scenario, which used to take a week to complete but only took a couple of hours with the help of [OSPool](https://osg-htc.org/services/open_science_pool.html) capacity.

+

+ Tinghua Chen from Wichita State University presented a talk on “*Applying HTC to Higgs Boson Production Simulations.*” Ten years ago, the [ATLAS](https://atlas.cern/) and [CMS](https://cms.cern/) experiments at [CERN](https://home.web.cern.ch/) announced the discovery of the Higgs boson. CERN is a research center that operates the world's largest particle physics laboratory. The ATLAS and CMS experiments are general-purpose detectors at the Large Hadron Collider (LHC) that both study the Higgs boson.

+

+ For his work, Chen uses a Monte Carlo event generator, Herwig 7, to simulate the production of the Higgs boson in vector boson fusion (VBF). He uses the event generator to predict hadronic cross sections, which could be useful for the experimentalist to study the Standard Model Higgs boson. Based on the central limit theorem, the more events Chen can generate, the more accurate the prediction.

+

+ Chen can run ten thousand events on his laptop, but the predictions could be more accurate. Ideally, he'd like to run five billion events for more precision. Running all these events would be impossible on his laptop; his solution is to run the event generators using the HTC services provided by the OSG consortium.

+

+ Using a workflow he built, he can set up the event generator using parallel integration steps and event generation. He can then use the Herwig 7 event generator to build, integrate, and run the events.

+

+...

+

+Thank you to all the researchers who presented their work in the Student Lightning Talks portion of the OSG User School 2022!

diff --git a/2022-12-19-ML-Demo.md b/2022-12-19-ML-Demo.md

new file mode 100644

index 00000000..5a3f04ee

--- /dev/null

+++ b/2022-12-19-ML-Demo.md

@@ -0,0 +1,107 @@

+---

+title: "CHTC Hosts Machine Learning Demo and Q+A session"

+

+author: Shirley Obih

+

+publish_on:

+ - chtc

+

+type: user

+

+canonical_url: https://chtc.cs.wisc.edu/mldemo.html

+

+image:

+ path: "https://raw.githubusercontent.com/CHTC/Articles/main/images/firstmldemoimage.png"

+ alt: A broad lens image of some students present at the demo.

+

+description: Over 60 students and researchers attended the Center for High Throughput Computing (CHTC) machine learning and GPU demonstration on November 16th.

+excerpt: Eric Wilcots, UW-Madison dean of the College of Letters & Science and the Mary C. Jacoby Professor of Astronomy, dazzles the HTCondor Week 2022 audience.

+

+card_src: "https://raw.githubusercontent.com/CHTC/Articles/main/images/firstmldemoimage.png"

+card_alt: Koch and Gitter presenting at the demo

+

+banner_src: "https://raw.githubusercontent.com/CHTC/Articles/main/images/ML_1.jpeg"

+banner_alt: Koch and Gitter presenting at the demo

+---

+***Over 60 students and researchers attended the Center for High Throughput Computing (CHTC) machine learning and GPU demonstration on November 16th. UW Madison Associate Professor of Biostatistics and Medical Informatics Anthony Gitter and CHTC Lead Research Computing Facilitator Christina Koch led the demonstration and fielded many questions from the engaged audience.***

+

+

+

+ Koch and Gitter presenting at the demo.

+

+

+[CHTC services](https://chtc.cs.wisc.edu/uw-research-computing/) include a free large scale computing systems solution for campus researchers who have encountered computing issues and outgrown their resources, often a laptop, Koch began. One of the services CHTC provides is the [GPU Lab](https://chtc.cs.wisc.edu/uw-research-computing/gpu-lab.html), a resource within the HTC system of CHTC.

+

+The GPU Lab supports up to dozens of concurrent jobs per user, a variety of GPU types including 40GB and 80GB A100s, runtimes from a few hours up to seven days, significant RAM needs, and space for large data sets.

+

+Researchers are not waiting to take advantage of these CHTC GPU resources. Over the past two months, 52 researchers ran over 17,000 jobs on GPU hardware. Additionally, the UW-Madison [IceCube project](https://icecube.wisc.edu) alone ran over 70K jobs.

+

+Even more capacity is available. The recent [$4.3 million investment from the Wisconsin Alumni Research Foundation (WARF) in UW-Madison’s research computing hardware](https://chtc.cs.wisc.edu/DoIt-Article-Summary.html) is a significant contributor to this abundance of resources, Gitter noted.

+

+There are two main ways to know what GPUs are available and the number of GPUs users may request per job:

+The first is through the CHTC website - which offers up-to-date information. To access this information, go to the [CHTC website](https://chtc.cs.wisc.edu) and enter ‘gpu’ in the search bar. The first result will be the [‘Jobs that Use GPU Overview’](https://chtc.cs.wisc.edu/uw-research-computing/gpu-jobs.html) which is the main guide on using GPUs in CHTC. At the very top of this guide is a table that contains information about the kinds of GPUs, the number of servers, and the number of GPUs per server, which limits how many GPUs can be requested per job. Also listed is the GPU memory, which shows the amount of GPU memory and the attribute you would use in the ‘required_gpu’ statement when submitting a job.

+

+A second way is to use the ‘condor_status’ command. To use this command, make sure to set a constraint of ‘Gpus > 0’ to prevent printing out information on every single server we have in the system: condor_status -constraint ‘Gpus > 0’. This gives the names of servers in the pool and their availability status - idle or busy. Users may also add an auto format flag attribute ‘-af’ to print out any desired attribute of the machine. For instance, to access the attributes like those listed in the table of the CHTC guide, users must include the GPUs prefix followed by an underscore and then the name of the column to access.

+

+The GPU Lab, due to its expansive potential, can be used in many scenarios. Koch explained this using real-world examples. Researchers might want to seek the CHTC GPU Lab when:

+Running into the time limit of an existing GPU while trying to develop and run a machine learning algorithm.

+Working with models that require more memory than what is available with a current GPU in use.

+Trying to benchmark the performance of a new machine algorithm and realizing that the computing resources available are time-consuming and not equipped for multitasking.

+

+While GPU Lab users routinely submit many jobs that need a single GPU without issue, users may need to work collaboratively with the CHTC team on extra testing and configuration when handling larger data sets and models and benchmark precise timing. Koch presented a slide outlining what is easy to more challenging on CHTC GPU resources, stressing that, when in doubt about what is feasible, to contact CHTC:

+

+

+

+ Slide showing what is possible with GPU Lab.

+

+

+Work that is done in CHTC is run through a job submission. Koch presented a flowchart demonstration on how this works:

+

+

+ How to run work via job submission.

+

+

+

+

+She demonstrated the three-step process of

+1. login and file upload

+2. submission to queue, and

+3. job-run execution by HTCondor job scheduler.

+This process, she displayed, involves writing up a submit file and utilizing command line syntax to be submitted to the queue. Below are some commands that can be used to submit a file:

+

+

+ Commands to use when submitting jobs.

+

+

+