Sparse Sampling Transformer with Uncertainty-Driven Ranking for Unified Removal of Raindrops and Rain Streaks (ICCV'23)

Sixiang Chen*

Tian Ye*

Jinbin Bai

Erkang Chen

Jun Shi

Lei Zhu✉️


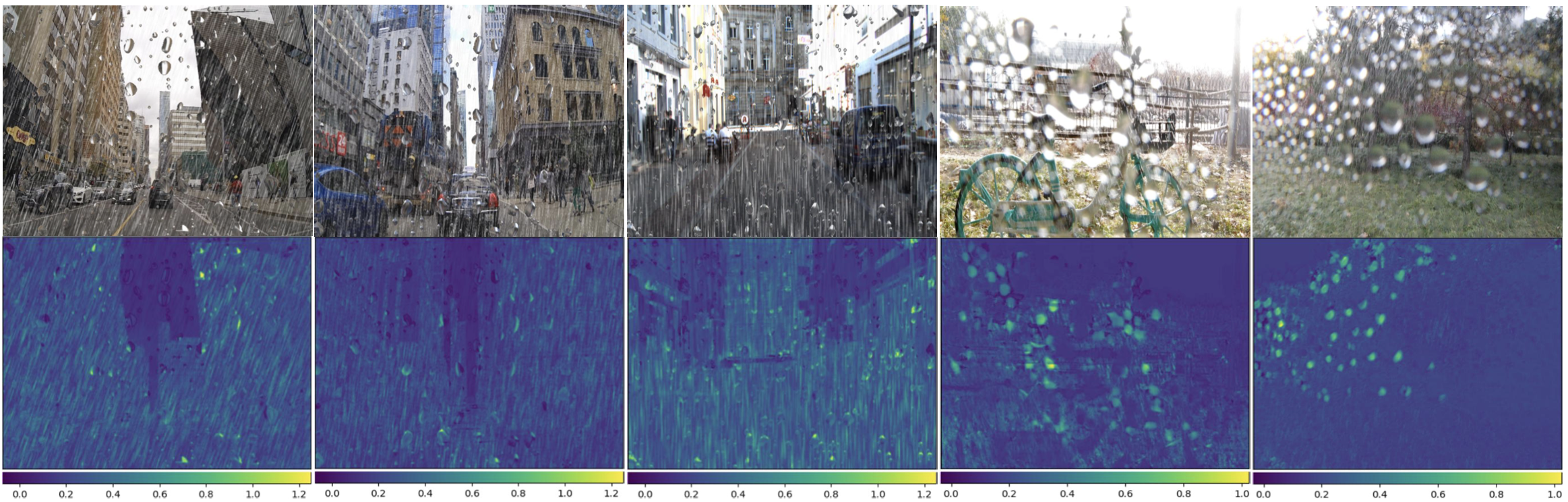

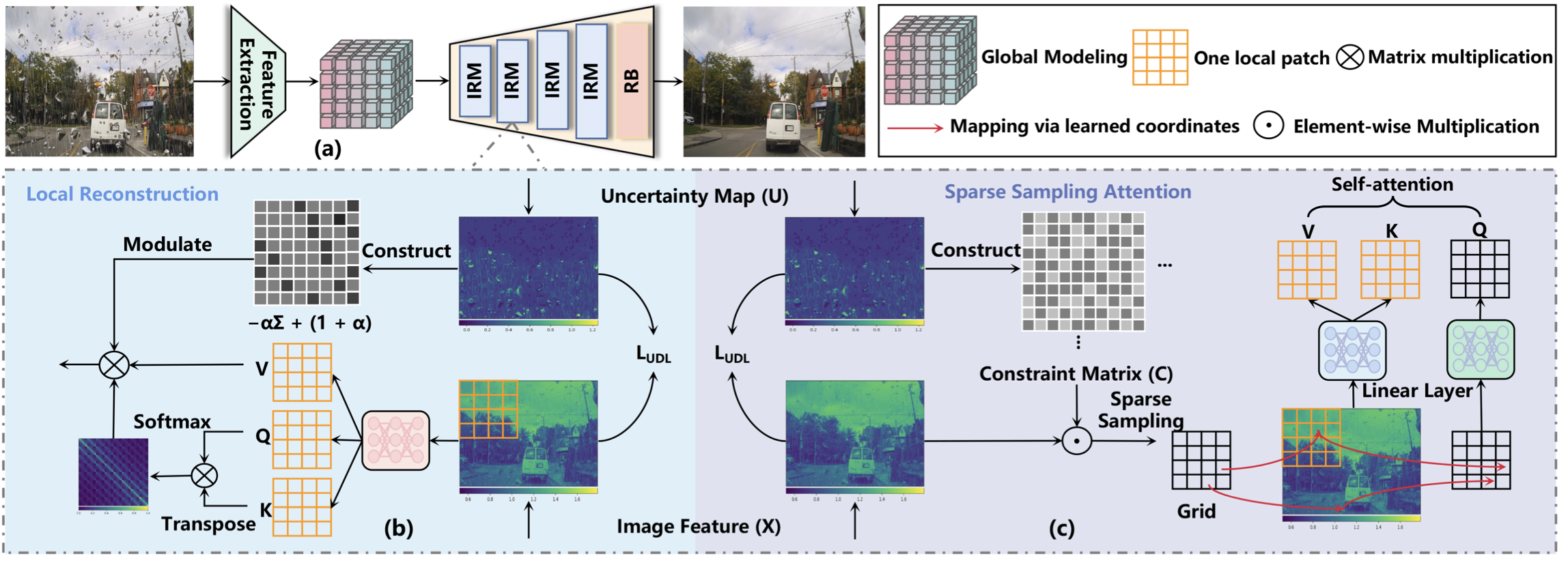

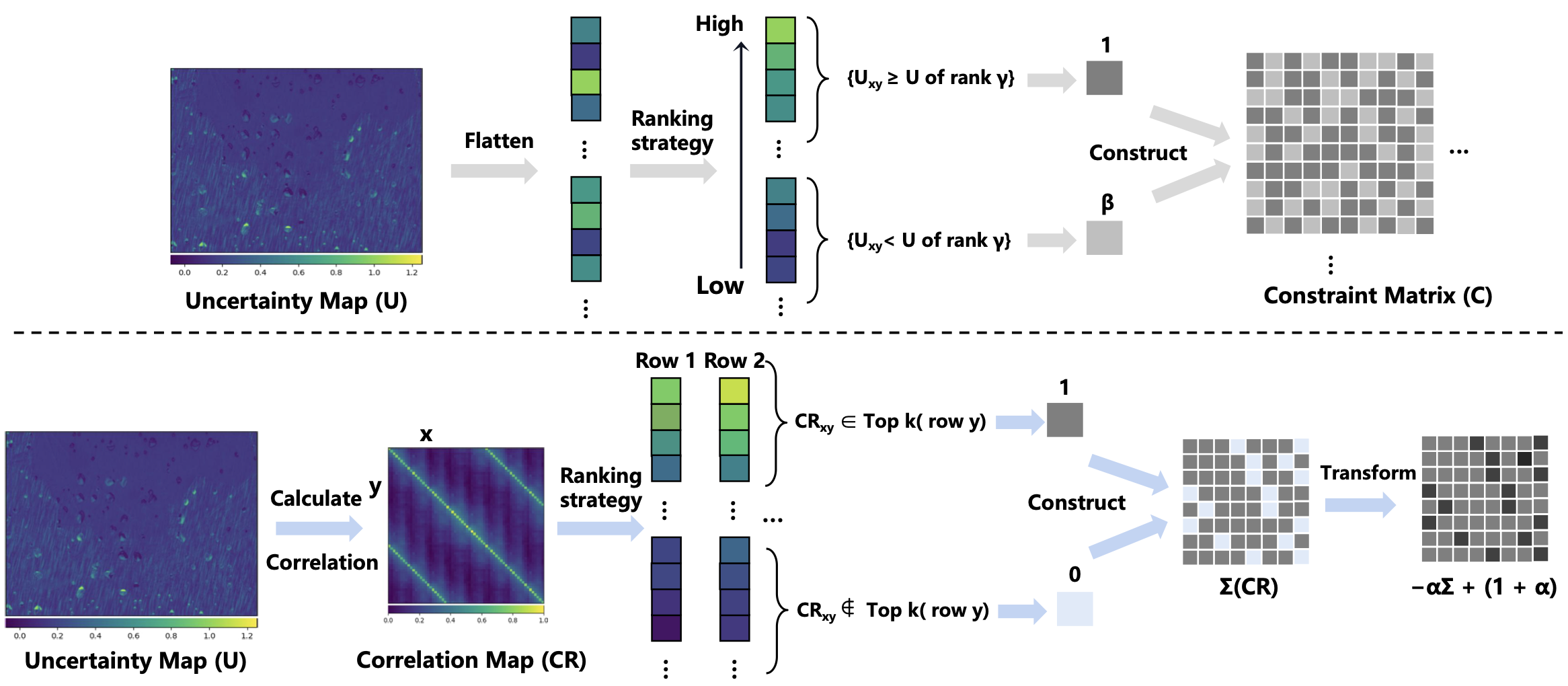

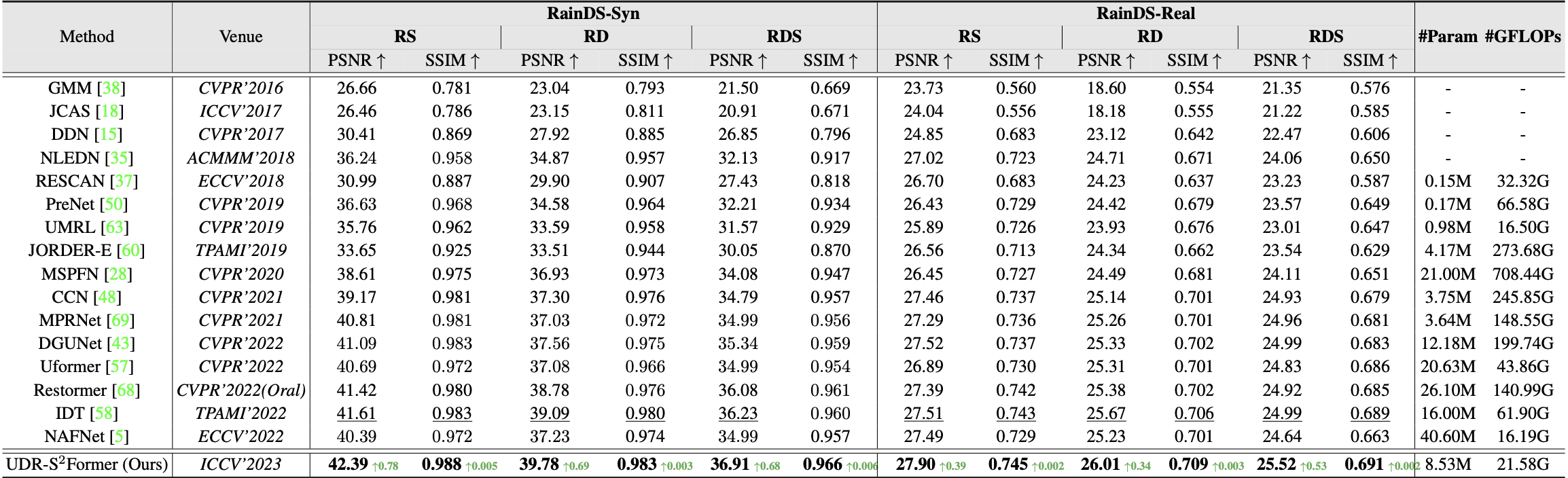

In the real world, image degradations caused by rain often combine rain streaks and raindrops, which makes recovering the underlying clean image more challenging. Because rain streaks and raindrops vary in shape, size, and location in the captured image, modeling the correlations among these irregular rain-induced degradations is a necessary prerequisite for image deraining. This paper presents an efficient and flexible mechanism to learn and model degradation relationships in a global view, thereby achieving unified removal of intricate rain scenes. To do so, we propose a Sparse Sampling Transformer based on Uncertainty-Driven Ranking, dubbed UDR-S2Former. Compared to previous methods, our UDR-S2Former has three merits. First, it can adaptively sample relevant image degradation information to model underlying degradation relationships. Second, explicit application of the uncertainty-driven ranking strategy helps the network attend to degradation features and understand the reconstruction process. Finally, experimental results show that our UDR-S2Former clearly outperforms state-of-the-art methods on all benchmarks.


😆 Our UDR-S2Former is built with PyTorch 2.0.1; we train and test it on Ubuntu 20.04 (Python 3.8+, CUDA 11.6).

To install, follow these instructions:

conda create -n py38 python=3.8

conda activate py38

pip3 install torch torchvision torchaudio

pip3 install -r requirements.txt
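After installation, it can help to confirm that the interpreter and PyTorch build match the setup described above. The following is a minimal sketch, not part of the repository: the function name `check_environment` is hypothetical, and it only reports what it finds rather than enforcing the exact versions.

```python
import sys
from importlib import metadata


def check_environment():
    """Collect interpreter and PyTorch details relevant to the setup above.

    Hypothetical helper (not shipped with the repository).
    """
    info = {
        "python": "{}.{}".format(*sys.version_info[:2]),
        "python_ok": sys.version_info >= (3, 8),  # README states Python 3.8+
        "torch": None,            # installed torch version, if any
        "cuda_available": None,   # None means "could not be determined"
    }
    try:
        info["torch"] = metadata.version("torch")
    except metadata.PackageNotFoundError:
        return info  # torch is not installed in this environment
    try:
        import torch
        info["cuda_available"] = torch.cuda.is_available()
    except Exception:
        pass  # torch is installed but failed to import; CUDA status stays unknown
    return info


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

If `torch` is reported as `None`, the `pip3 install torch torchvision torchaudio` step most likely ran in a different environment than the active one.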

📂 We train and test our UDR-S2Former on the Rain200H (rain streaks), Rain200L (rain streaks), RainDrop (raindrops & rain streaks), and AGAN (raindrops) benchmarks. Download links for the datasets are provided below.

| Dataset | Rain200H | Rain200L | RainDrop | AGAN |
|---|---|---|---|---|
| Link | Download | Download | Download | Download |

🙌 To run the deraining demo on your own images, use:

python demo.py -c config/demo.yaml

👉 For example, save your rainy images in 'image_demo/input_images', then execute the following command:

python demo.py -c config/demo.yaml

The derained results are then written to 'image_demo/output_images'.
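If your rainy images live elsewhere, a small script can copy them into the demo input folder before running the command. This is a minimal sketch under stated assumptions: `stage_demo_images` is a hypothetical helper, and the set of accepted file extensions is a guess, since the formats that `demo.py` reads are not documented here.

```python
import shutil
from pathlib import Path

# Assumption: these extensions are illustrative; check what demo.py accepts.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def stage_demo_images(source_dir, repo_root="."):
    """Copy image files from source_dir into image_demo/input_images.

    Returns the list of copied target paths, in sorted filename order.
    """
    input_dir = Path(repo_root) / "image_demo" / "input_images"
    input_dir.mkdir(parents=True, exist_ok=True)
    staged = []
    for path in sorted(Path(source_dir).iterdir()):
        # Skip sub-directories and non-image files.
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            target = input_dir / path.name
            shutil.copy2(path, target)
            staged.append(target)
    return staged
```

Calling `stage_demo_images("/path/to/my/photos")` from the repository root and then running the demo command should leave the outputs in 'image_demo/output_images'.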

😋 Our training process is built on pytorch_lightning rather than the conventional torch framework. Run the command below to train UDR-S2Former on the various benchmarks (raindrop_syn, raindrop_real, agan, rain200h, rain200l). For example, to train our model on raindrop_real:

python train.py fit -c config/config_pretrain_raindrop_real.yaml

The logs and checkpoints are saved in 'tb_logs/udrs2former'.
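To pick up the most recent checkpoint after a training run, the log directory can be searched recursively. This is a minimal sketch, assuming checkpoints use the `.ckpt` extension that pytorch_lightning writes by default; the exact sub-directory layout under 'tb_logs/udrs2former' is not documented here, which is why the search is recursive. `latest_checkpoint` is a hypothetical helper name.

```python
from pathlib import Path


def latest_checkpoint(log_dir="tb_logs/udrs2former"):
    """Return the most recently modified .ckpt file under log_dir, or None.

    Assumption: checkpoints end in ".ckpt" (the pytorch_lightning default).
    """
    root = Path(log_dir)
    if not root.is_dir():
        return None  # training has not produced a log directory yet
    checkpoints = list(root.rglob("*.ckpt"))
    if not checkpoints:
        return None
    # Modification time is used as a simple proxy for "latest".
    return max(checkpoints, key=lambda p: p.stat().st_mtime)


if __name__ == "__main__":
    print(latest_checkpoint())
```

Modification time is only a proxy; if the configuration keeps a "best" checkpoint by validation metric, that file may be the better choice for evaluation.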

😄 We provide pre-trained models for evaluation on different datasets. Run the command below to obtain the performance on the various benchmarks, selected via --dataset_type (raindrop_syn, raindrop_real, agan, rain200h, rain200l). For example, to test on the raindrop_real dataset:

python3 test.py --dataset_type raindrop_real --dataset_raindrop_real your path

The results are saved in 'out/dataset_type'.
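When evaluating several benchmarks in a row, the test command can be assembled programmatically. This is a minimal sketch with one loud assumption: only `--dataset_raindrop_real` appears in the example above, so the pattern `--dataset_<dataset_type>` for the other benchmarks is a guess that should be checked against the argument parser in `test.py`. `build_test_command` is a hypothetical helper.

```python
# Dataset types listed in the README.
DATASET_TYPES = ("raindrop_syn", "raindrop_real", "agan", "rain200h", "rain200l")


def build_test_command(dataset_type, dataset_path):
    """Build the argv list for test.py for one benchmark.

    Assumption: the path flag is named --dataset_<dataset_type>; the README
    only confirms this for raindrop_real.
    """
    if dataset_type not in DATASET_TYPES:
        raise ValueError(
            f"unknown dataset_type {dataset_type!r}; expected one of {DATASET_TYPES}"
        )
    return [
        "python3", "test.py",
        "--dataset_type", dataset_type,
        f"--dataset_{dataset_type}", str(dataset_path),
    ]
```

The returned list can be passed to `subprocess.run(..., check=True)` from the repository root; passing a list rather than a single string avoids quoting problems when the dataset path contains spaces.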

@InProceedings{Chen_2023_ICCV,

author = {Chen, Sixiang and Ye, Tian and Bai, Jinbin and Chen, Erkang and Shi, Jun and Zhu, Lei},

title = {Sparse Sampling Transformer with Uncertainty-Driven Ranking for Unified Removal of Raindrops and Rain Streaks},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

month = {October},

year = {2023},

pages = {13106-13117}

}

If you have any questions, please contact us at [email protected] or [email protected].