
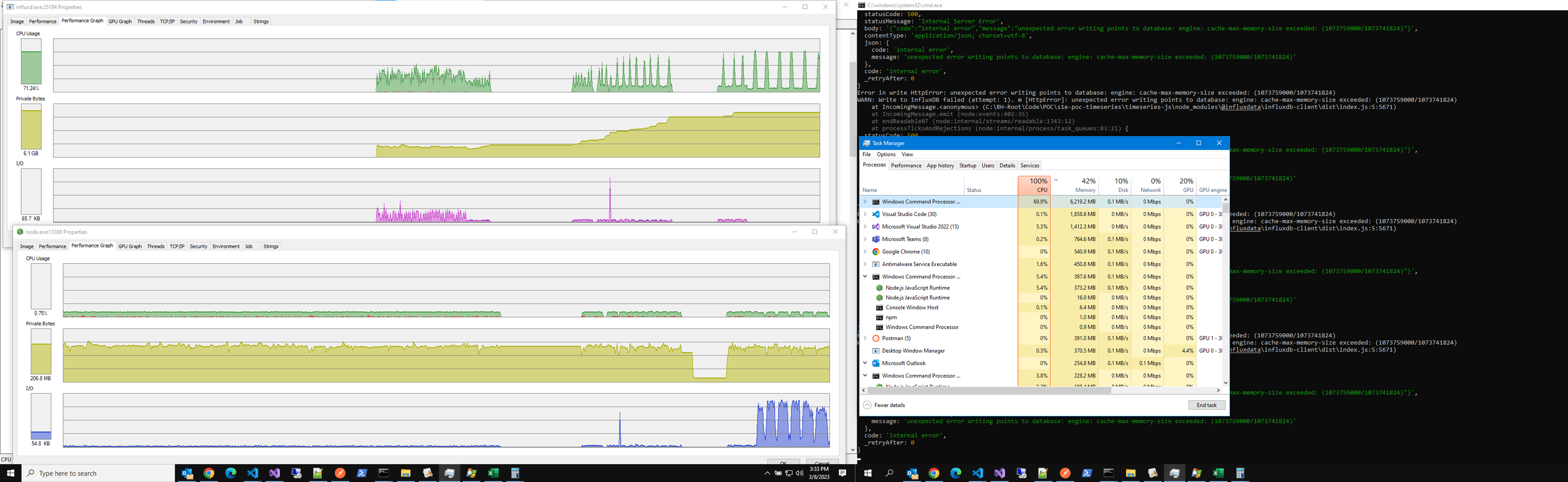

Unable to write points for long run (unexpected error writing points to database: engine: cache-max-memory-size exceeded: (1074087500/1073741824))

#739

A singleton InfluxDB client instance is used for all writes, and a single writeApi is used for all calls throughout.

Call details: 200 parallel calls, each carrying 500 samples/points, which adds up to 1 lakh (100,000) points.

These 200 calls run once every second.

Payload size of a single call: 85452 bytes; for 200 calls that is ~16 MB.
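To make the load figures above concrete, here is the arithmetic as a small sketch. The call count, points per call, payload size and `batchSize` come from this report; the "full batches per second" figure assumes every batch is filled to exactly `batchSize` lines, which may not hold in practice.

```typescript
// Figures taken from the report; nothing here talks to InfluxDB.
const callsPerSecond = 200;
const pointsPerCall = 500;
const bytesPerCall = 85452;

const pointsPerSecond = callsPerSecond * pointsPerCall; // 1 lakh points per second
const bytesPerSecond = callsPerSecond * bytesPerCall;   // raw payload bytes per second
const mibPerSecond = bytesPerSecond / (1024 * 1024);

// Assumption: every batch is completely full before it is sent.
const batchSize = 1000;
const fullBatchesPerSecond = pointsPerSecond / batchSize;

console.log({
  pointsPerSecond,
  bytesPerSecond,
  mibPerSecond: mibPerSecond.toFixed(1),
  fullBatchesPerSecond,
});
```

So the setup produces roughly 16.3 MiB of payload and on the order of 100 full batches every second.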

Constructor code:

    private writeOptions: any = {
      batchSize: 1000,
      flushInterval: 500,
      // maxBatchBytes: 1024*1024*10,
      writeFailed: this.writeFailedHandler,
      // // function (this: WriteApi, error: Error, lines: string[], attempt: number, expires: number): void | Promise<void> {
      // //   throw new Error('Function not implemented.');
      // // },
      writeSuccess: this.writeSuccessHandler,
      // // function (this: WriteApi, lines: string[]): void {
      // //   throw new Error('Function not implemented.');
      // // },
      // writeRetrySkipped: function (entry: { lines: string[]; expires: number; }): void {
      //   throw new Error('Function not implemented.');
      // },
      // maxRetries: 0,
      // maxRetryTime: 0,
      maxBufferLines: 0,
      // retryJitter: 0,
      // minRetryDelay: 0,
      // maxRetryDelay: 0,
      // exponentialBase: 0,
      // randomRetry: false
    };

    this.influxClient = new InfluxDB({url: envCfg.url, token: envCfg.token});
    this.writeApi = this.influxClient.getWriteApi(envCfg.org, envCfg.bucket, 'ns', this.writeOptions);

    ////// method call as per above setup i.e. 200 parallel calls every second. total points in 1 call = 500
    public async setData(setDataReq: SetDataRequest) {
      let dataPoints: Point[] = [];
      setDataReq.SampleSets.forEach(sampleSet => {
        for (let sampleIndex = 0; sampleIndex < sampleSet.Samples.length; sampleIndex++) {
          const sample = sampleSet.Samples[sampleIndex];
          let point = new Point(sampleSet.TagId)
            .intField('hashId', stringHash(sampleSet.TagId))
            .floatField('value', sample.Value)
            .uintField('dataStatus', sample.DataStatus)
            .uintField('nodeStatus', sample.NodeStatus)
            .booleanField('isValid', true)
            .timestamp(new Date(convertUtcTicksToLocalDate(sample.Timestamp).toJSON()));
          dataPoints.push(point);
        }
      });
      const st = performance.now();
      this.writeApi.writePoints(dataPoints);
      if ((this.logCounter++) != 0 && (this.logCounter % this.logAt) == 0) {
        console.log(`SetData for samples:ellapedInMs ${dataPoints.length}:${(performance.now() - st)}`);
        this.logCounter = 0;
      }
    }

Note that I never close or reset the write API at any point, as I expect the writeOptions to take care of this for long-running writes.

Expected behavior

Should be able to write data seamlessly, without failures, over long runs lasting many days.

Memory should be managed efficiently by client-js and influxd themselves.

Kindly suggest the best way to achieve such a write load without having to close/reset/flush the writeApi.
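One possible direction, offered as an assumption rather than a confirmed fix, is to bound how much data is handed over at once so that a slow writer slows the producer down instead of letting work pile up. The sketch below is independent of the client library: `chunk`, `writeInChunks` and the `sink` callback are hypothetical names introduced only for illustration.

```typescript
// Hypothetical helpers; not part of @influxdata/influxdb-client.

// Splits `items` into consecutive slices of at most `size` elements.
function chunk<T>(items: readonly T[], size: number): T[][] {
  if (!Number.isInteger(size) || size <= 0) {
    throw new RangeError('size must be a positive integer');
  }
  const out: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    out.push(items.slice(i, i + size));
  }
  return out;
}

// Hands chunks to `sink` one at a time and waits for each call to settle
// before sending the next, so at most one chunk is in flight.
async function writeInChunks<T>(
  items: readonly T[],
  size: number,
  sink: (batch: T[]) => Promise<void>,
): Promise<number> {
  let sent = 0;
  for (const batch of chunk(items, size)) {
    await sink(batch);
    sent += batch.length;
  }
  return sent;
}
```

Here `sink` would wrap whatever call performs the actual write and resolves once it has completed; which call that is depends on the client and is deliberately left out.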

Thanks & Regards!

Actual behavior

Node.js InfluxDB client: with batchSize = 5000 and flushInterval = 500 ms, it failed after ~190 calls, i.e. within 3 to 5 minutes:

    ERROR: RetryBuffer: 15000 oldest lines removed to keep buffer size under the limit of 32000 lines.
    Error in write HttpError: unexpected error writing points to database: engine: cache-max-memory-size exceeded: (1074087500/1073741824)
    WARN: Write to InfluxDB failed (attempt: 1). m [HttpError]: unexpected error writing points to database: engine: cache-max-memory-size exceeded: (1074087500/1073741824)
        at IncomingMessage.<anonymous> (C:\BH-Root\Code\POC\s1e-poc-timeseries\timeseries-js\node_modules\@influxdata\influxdb-client\dist\index.js:5:5671)
        at IncomingMessage.emit (node:events:402:35)
        at endReadableNT (node:internal/streams/readable:1343:12)
        at processTicksAndRejections (node:internal/process/task_queues:83:21) {
      statusCode: 500,
      statusMessage: 'Internal Server Error',
      body: '{"code":"internal error","message":"unexpected error writing points to database: engine: cache-max-memory-size exceeded: (1074087500/1073741824)"}',
      contentType: 'application/json; charset=utf-8',
      json: {
        code: 'internal error',
        message: 'unexpected error writing points to database: engine: cache-max-memory-size exceeded: (1074087500/1073741824)'
      },
      code: 'internal error',
      _retryAfter: 0
    }
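The two numbers in the error message can be read off programmatically, which may help when logging or alerting on this failure. The sketch below is a minimal illustration: `parseCacheOverflow` and the `CacheOverflow` shape are hypothetical names, and the only thing assumed about the message is the `(value/limit)` format seen in the log above.

```typescript
// Hypothetical helper; it only parses the message text shown in this report.
interface CacheOverflow {
  reportedBytes: number; // first number in the message
  limitBytes: number;    // second number in the message
  overBytes: number;     // how far the first exceeds the second
}

function parseCacheOverflow(message: string): CacheOverflow | null {
  const m = /cache-max-memory-size exceeded: \((\d+)\/(\d+)\)/.exec(message);
  if (m === null) {
    return null; // some other error
  }
  const reportedBytes = Number(m[1]);
  const limitBytes = Number(m[2]);
  return { reportedBytes, limitBytes, overBytes: reportedBytes - limitBytes };
}

const sampleMessage =
  'unexpected error writing points to database: engine: ' +
  'cache-max-memory-size exceeded: (1074087500/1073741824)';
const overflow = parseCacheOverflow(sampleMessage);
console.log(overflow);
```

For the message in this report, the limit is 1073741824 bytes, which is exactly 1 GiB (1024^3), and the reported value exceeds it by 345676 bytes.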

single payload size in bytes: 85452

With batchSize = 1000 and flushInterval = 500 ms, it failed after ~20 minutes:

    {"level":"error","message":"3/8/2023, 3:33:42 PM, Error: connect ECONNREFUSED 127.0.0.1:7000"}
    {"level":"error","message":"3/8/2023, 3:33:42 PM, Error: connect ECONNREFUSED 127.0.0.1:7000"}
    {"level":"info","message":"timesCalled 1186"}

Specifications

"@influxdata/influxdb-client-apis": "^1.33.2",

64.0 GB (63.7 GB usable)

64-bit operating system, x64-based processor

Failure 2:

    {"level":"info","message":"timesCalled 946"}
    {"level":"error","message":"3/8/2023, 3:28:36 PM, Error: connect ECONNREFUSED 127.0.0.1:7000"}
    {"level":"error","message":"3/8/2023, 3:28:36 PM, Error: connect ECONNREFUSED 127.0.0.1:7000"}
    {"level":"info","message":"3/8/2023, 3:28:36 PM Req size= 85475 B. Res: {"data":"resData"}"}
    {"level":"info","message":"timesCalled 947"}
    Error in write Error: read ECONNRESET
    WARN: Write to InfluxDB failed (attempt: 1). Error: read ECONNRESET
        at TCP.onStreamRead (node:internal/stream_base_commons:220:20) {
      errno: -4077,
      code: 'ECONNRESET',
      syscall: 'read'
    }
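The two failure modes in this report look different at the error-object level: the first carries an HTTP `statusCode` of 500, while this one carries a Node.js system error `code`. A failure handler could tell them apart along the lines of the sketch below; `isConnectionError` is a hypothetical helper, and the set of codes is limited to the two that appear in the logs above.

```typescript
// Hypothetical helper; only the two codes seen in this report are listed.
const CONNECTION_ERROR_CODES = new Set<string>(['ECONNREFUSED', 'ECONNRESET']);

// Returns true when `err` looks like a connection-level failure
// rather than an error response sent back by the server.
function isConnectionError(err: unknown): boolean {
  if (typeof err !== 'object' || err === null) {
    return false;
  }
  const code = (err as { code?: unknown }).code;
  return typeof code === 'string' && CONNECTION_ERROR_CODES.has(code);
}

// Error shapes copied from the logs in this report.
const resetError = { errno: -4077, code: 'ECONNRESET', syscall: 'read' };
const serverError = { statusCode: 500, code: 'internal error' };

console.log(isConnectionError(resetError));  // connection-level failure
console.log(isConnectionError(serverError)); // server responded with an error
```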

Additional info

No response
