@@ -36,36 +35,40 @@ We perform experiments on StyleGANv3 paper settings and also experimental settin

For convenience, we also provide converted versions of the official weights.

### Paper Settings

-| Model | Dataset | Iter |FID50k | Config | Log | Download |

-| :---------------------------------: | :-------------: | :-----------: | :-----------: |:---------------------------------------------------------------------------------------------------------------------------: | :-------------: |:--------------------------------------------------------------------------------------------------------------------------------------: |

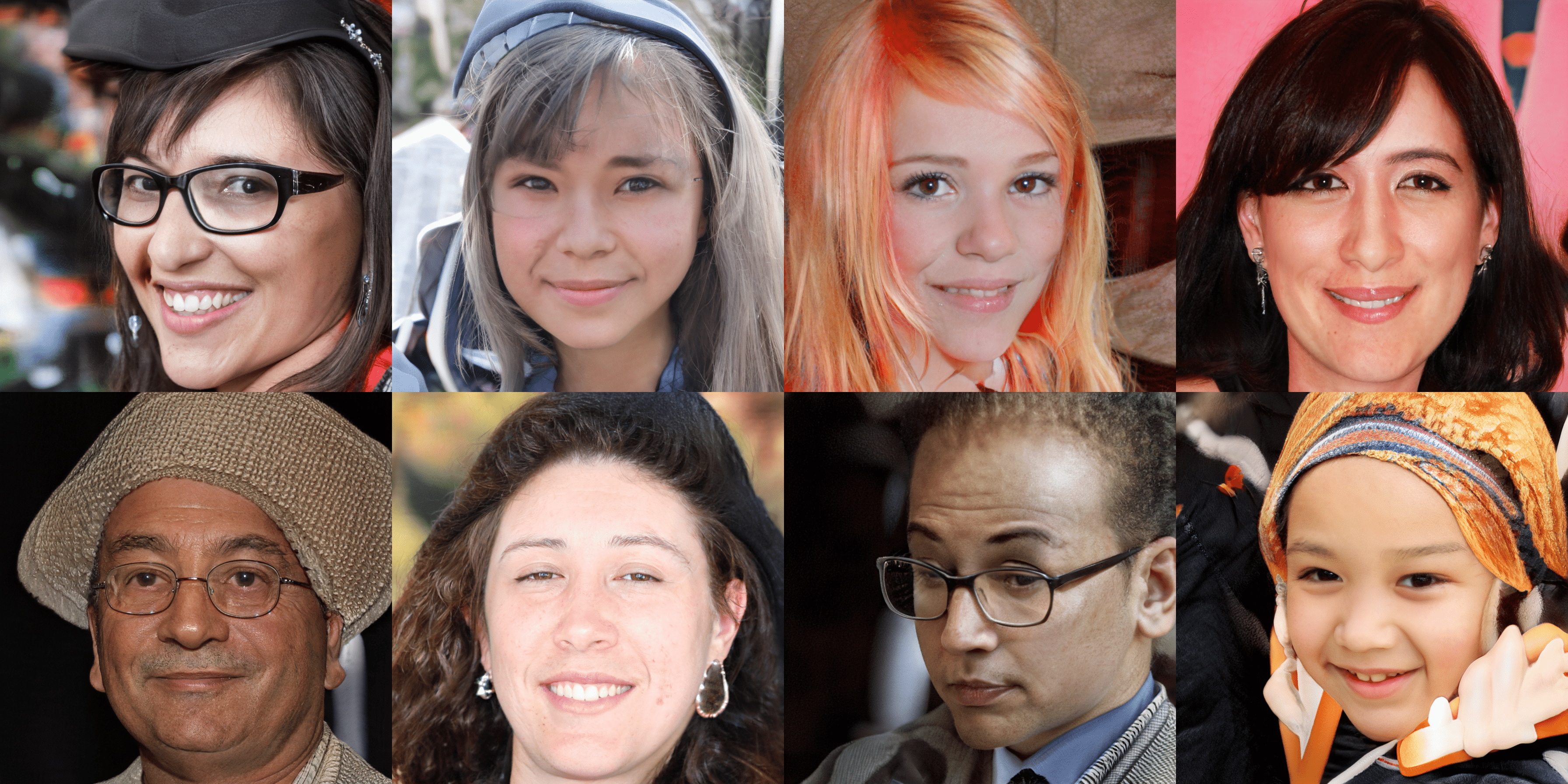

-| stylegan3-t | ffhq 1024x1024 | 490000 | 3.37*| [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/styleganv3/stylegan3_t_noaug_fp16_gamma32.8_ffhq_1024_b4x8.py) | [log](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_noaug_fp16_gamma32.8_ffhq_1024_b4x8_20220322_090417.log.json) |[model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_noaug_fp16_gamma32.8_ffhq_1024_b4x8_best_fid_iter_490000_20220401_120733-4ff83434.pth) |

-| stylegan3-t-ada | metface 1024x1024 | 130000 | 15.09 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/styleganv3/stylegan3_t_ada_fp16_gamma6.6_metfaces_1024_b4x8.py) | [log](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_ada_fp16_gamma6.6_metfaces_1024_b4x8_20220328_142211.log.json) |[model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_ada_fp16_gamma6.6_metfaces_1024_b4x8_best_fid_iter_130000_20220401_115101-f2ef498e.pth) |

-Note

*: This setting still needs a few days to run through, we put out currently the best checkpoint, and we will update the results the first time on the end of the experiment.

+| Model | Dataset | Iter | FID50k | Config | Log | Download |

+| :-------------: | :---------------: | :----: | :---------------: | :-------------------------------------------------------------------------------------------------------------------------------------: | :-----------------------------------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------------------------------------------------------: |

+| stylegan3-t | ffhq 1024x1024 | 490000 | 3.37\* | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/styleganv3/stylegan3_t_noaug_fp16_gamma32.8_ffhq_1024_b4x8.py) | [log](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_noaug_fp16_gamma32.8_ffhq_1024_b4x8_20220322_090417.log.json) | [model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_noaug_fp16_gamma32.8_ffhq_1024_b4x8_best_fid_iter_490000_20220401_120733-4ff83434.pth) |

+| stylegan3-t-ada | metface 1024x1024 | 130000 | 15.09 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/styleganv3/stylegan3_t_ada_fp16_gamma6.6_metfaces_1024_b4x8.py) | [log](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_ada_fp16_gamma6.6_metfaces_1024_b4x8_20220328_142211.log.json) | [model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_ada_fp16_gamma6.6_metfaces_1024_b4x8_best_fid_iter_130000_20220401_115101-f2ef498e.pth) |

+

+Note

\*: This experiment still needs a few more days to finish. We release the current best checkpoint for now and will update the results as soon as the experiment ends.

### Experimental Settings

-| Model | Dataset |Iter | FID50k | Config | Log | Download |

-| :---------------------------------: | :-------------: |:-----------: | :-----------: |:---------------------------------------------------------------------------------------------------------------------------: | :-------------: |:--------------------------------------------------------------------------------------------------------------------------------------: |

-| stylegan3-t | ffhq 256x256 | 740000 |7.65 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/styleganv3/stylegan3_t_noaug_fp16_gamma2.0_ffhq_256_b4x8.py) | [log](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_noaug_fp16_gamma2.0_ffhq_256_b4x8_20220323_144815.log.json) |[model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_noaug_fp16_gamma2.0_ffhq_256_b4x8_best_fid_iter_740000_20220401_122456-730e1fba.pth) |

-### Converted Weights

-| Model | Dataset | Comment | FID50k | EQ-T | EQ-R | Config | Download |

-| :---------------------------------: | :-------------: |:-------------: | :----: | :-----------: | :-----------: |:---------------------------------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------------------------------------: |

-| stylegan3-t | ffhqu 256x256|official weight | 4.62 | 63.01 | 13.12 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/_base_/models/stylegan/stylegan3_t_ffhqu_256_b4x8_cvt_official_rgb.py) | [model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_ffhqu_256_b4x8_cvt_official_rgb_20220329_235046-153df4c8.pth) |

-| stylegan3-t |afhqv2 512x512 |official weight | 4.04 | 60.15 | 13.51 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/_base_/models/stylegan/stylegan3_t_afhqv2_512_b4x8_cvt_official_rgb.py) | [model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_afhqv2_512_b4x8_cvt_official_rgb_20220329_235017-ee6b037a.pth) |

-| stylegan3-t |ffhq 1024x1024 |official weight | 2.79 | 61.21 | 13.82 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/_base_/models/stylegan/stylegan3_t_ffhq_1024_b4x8_cvt_official_rgb.py) | [model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_ffhq_1024_b4x8_cvt_official_rgb_20220329_235113-db6c6580.pth) |

-| stylegan3-r | ffhqu 256x256 |official weight | 4.50| 66.65 | 40.48 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/_base_/models/stylegan/stylegan3_r_ffhqu_256_b4x8_cvt_official_rgb.py) | [model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_r_ffhqu_256_b4x8_cvt_official_rgb_20220329_234909-4521d963.pth) |

-| stylegan3-r | afhqv2 512x512 |official weight |4.40 |64.89 | 40.34 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/_base_/models/stylegan/stylegan3_r_afhqv2_512_b4x8_cvt_official_rgb.py) | [model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_r_afhqv2_512_b4x8_cvt_official_rgb_20220329_234829-f2eaca72.pth) |

-| stylegan3-r |ffhq 1024x1024 |official weight |3.07 | 64.76 | 46.62 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/_base_/models/stylegan/stylegan3_r_ffhq_1024_b4x8_cvt_official_rgb.py) | [model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_r_ffhq_1024_b4x8_cvt_official_rgb_20220329_234933-ac0500a1.pth) |

+| Model | Dataset | Iter | FID50k | Config | Log | Download |

+| :---------: | :----------: | :----: | :----: | :----------------------------------------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------------------------: | :-----------------------------------------------------------------------------------------------------------------------------------------------------: |

+| stylegan3-t | ffhq 256x256 | 740000 | 7.65 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/styleganv3/stylegan3_t_noaug_fp16_gamma2.0_ffhq_256_b4x8.py) | [log](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_noaug_fp16_gamma2.0_ffhq_256_b4x8_20220323_144815.log.json) | [model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_noaug_fp16_gamma2.0_ffhq_256_b4x8_best_fid_iter_740000_20220401_122456-730e1fba.pth) |

+### Converted Weights

+| Model | Dataset | Comment | FID50k | EQ-T | EQ-R | Config | Download |

+| :---------: | :------------: | :-------------: | :----: | :---: | :---: | :---------------------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------------: |

+| stylegan3-t | ffhqu 256x256 | official weight | 4.62 | 63.01 | 13.12 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/_base_/models/stylegan/stylegan3_t_ffhqu_256_b4x8_cvt_official_rgb.py) | [model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_ffhqu_256_b4x8_cvt_official_rgb_20220329_235046-153df4c8.pth) |

+| stylegan3-t | afhqv2 512x512 | official weight | 4.04 | 60.15 | 13.51 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/_base_/models/stylegan/stylegan3_t_afhqv2_512_b4x8_cvt_official_rgb.py) | [model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_afhqv2_512_b4x8_cvt_official_rgb_20220329_235017-ee6b037a.pth) |

+| stylegan3-t | ffhq 1024x1024 | official weight | 2.79 | 61.21 | 13.82 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/_base_/models/stylegan/stylegan3_t_ffhq_1024_b4x8_cvt_official_rgb.py) | [model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_ffhq_1024_b4x8_cvt_official_rgb_20220329_235113-db6c6580.pth) |

+| stylegan3-r | ffhqu 256x256 | official weight | 4.50 | 66.65 | 40.48 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/_base_/models/stylegan/stylegan3_r_ffhqu_256_b4x8_cvt_official_rgb.py) | [model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_r_ffhqu_256_b4x8_cvt_official_rgb_20220329_234909-4521d963.pth) |

+| stylegan3-r | afhqv2 512x512 | official weight | 4.40 | 64.89 | 40.34 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/_base_/models/stylegan/stylegan3_r_afhqv2_512_b4x8_cvt_official_rgb.py) | [model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_r_afhqv2_512_b4x8_cvt_official_rgb_20220329_234829-f2eaca72.pth) |

+| stylegan3-r | ffhq 1024x1024 | official weight | 3.07 | 64.76 | 46.62 | [config](https://github.com/open-mmlab/mmgeneration/tree/master/configs/_base_/models/stylegan/stylegan3_r_ffhq_1024_b4x8_cvt_official_rgb.py) | [model](https://download.openmmlab.com/mmgen/stylegan3/stylegan3_r_ffhq_1024_b4x8_cvt_official_rgb_20220329_234933-ac0500a1.pth) |

## Interpolation

+

We provide a tool that generates a video by walking through the GAN's latent space.

Run this command to get the following video.

+

```bash

python apps/interpolate_sample.py configs/styleganv3/stylegan3_t_afhqv2_512_b4x8_official.py https://download.openmmlab.com/mmgen/stylegan3/stylegan3_t_afhqv2_512_b4x8_cvt_official.pkl --export-video --samples-path work_dirs/demos/ --endpoint 6 --interval 60 --space z --seed 2022 --sample-cfg truncation=0.8

```

+

https://user-images.githubusercontent.com/22982797/151506918-83da9ee3-0d63-4c5b-ad53-a41562b92075.mp4
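The exact interpolation scheme inside `apps/interpolate_sample.py` is not described here. As a rough illustration only, the sketch below assumes plain linear interpolation in the `z` space: it draws `--endpoint` random latent codes from a seeded standard normal and places `--interval` frames between each consecutive pair. The function names are hypothetical, and neither truncation (`--sample-cfg truncation=0.8`) nor the generator itself is modeled.

```python
import random
from typing import List

Vector = List[float]


def lerp(a: Vector, b: Vector, t: float) -> Vector:
    """Linear interpolation between two latent vectors (t=0 gives a, t=1 gives b)."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def interpolation_path(num_endpoints: int, interval: int, dim: int,
                       seed: int = 2022) -> List[Vector]:
    """Latent codes for every frame of a walk through `num_endpoints` random z vectors."""
    rng = random.Random(seed)
    endpoints = [[rng.gauss(0.0, 1.0) for _ in range(dim)]
                 for _ in range(num_endpoints)]
    frames: List[Vector] = []
    for start, end in zip(endpoints[:-1], endpoints[1:]):
        # `interval` frames per segment; the segment's end point opens the next one.
        for step in range(interval):
            frames.append(lerp(start, end, step / interval))
    frames.append(endpoints[-1])  # close the walk on the final endpoint
    return frames
```

In this sketch, `--endpoint 6 --interval 60` yields 5 * 60 + 1 = 301 latent codes; each one would be fed to the generator to render a single frame.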

## Equivariance Visualization and Evaluation

@@ -81,16 +84,14 @@ python tools/utils/equivariance_viz.py configs/styleganv3/stylegan3_r_ffhqu_256_

python tools/utils/equivariance_viz.py configs/styleganv3/stylegan3_r_ffhqu_256_b4x8_official.py https://openmmlab-share.oss-cn-hangzhou.aliyuncs.com/mmgen/stylegan3/stylegan3_r_ffhqu_256_b4x8_cvt_official.pkl --translate_max 0.25 --transform y_t --seed 5432

```

-

https://user-images.githubusercontent.com/22982797/151504902-f3cbfef5-9014-4607-bbe1-deaf48ec6d55.mp4

-

https://user-images.githubusercontent.com/22982797/151504973-b96e1639-861d-434b-9d7c-411ebd4a653f.mp4

-

https://user-images.githubusercontent.com/22982797/151505099-cde4999e-aab1-42d4-a458-3bb069db3d32.mp4

To compute the EQ metrics for StyleGAN3, add the following code to your config.

+

```python

metrics = dict(

eqv=dict(

@@ -99,8 +100,8 @@ metrics = dict(

eq_cfg=dict(

compute_eqt_int=True, compute_eqt_frac=True, compute_eqr=True)))

```

-And we highly recommend you to use [slurm_eval_multi_gpu](tools/slurm_eval_multi_gpu.sh) script to accelerate evaluation time.

+We highly recommend using the [slurm_eval_multi_gpu](tools/slurm_eval_multi_gpu.sh) script to speed up evaluation.
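The EQ-T and EQ-R values reported for the converted weights are PSNR values in dB (higher is better) that compare the generator's output for a transformed input against the same transformation applied to its output. A minimal sketch of that comparison, assuming both images are flattened lists of pixel values in `[-1, 1]` (so the dynamic range is 2); the helper name is hypothetical. The real metric additionally averages the squared error over many samples and, to our understanding, ignores pixels that fall outside the transformed canvas.

```python
import math
from typing import Sequence


def equivariance_psnr(transformed_output: Sequence[float],
                      output_of_transformed: Sequence[float],
                      dynamic_range: float = 2.0) -> float:
    """PSNR in dB between two images given as flat pixel sequences in [-1, 1]."""
    if len(transformed_output) != len(output_of_transformed) or not transformed_output:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2 for a, b in zip(transformed_output, output_of_transformed))
    mse /= len(transformed_output)
    if mse == 0.0:
        return math.inf  # outputs match exactly: perfectly equivariant
    return 10.0 * math.log10(dynamic_range ** 2 / mse)
```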

## Citation

diff --git a/configs/styleganv3/metafile.yml b/configs/styleganv3/metafile.yml

index 36ec52aa9..2c8cb0514 100755

--- a/configs/styleganv3/metafile.yml

+++ b/configs/styleganv3/metafile.yml

@@ -15,7 +15,7 @@ Models:

Results:

- Dataset: FFHQ

Metrics:

- FID50k: 3.37

+ FID50k: 3.37

\

Iter: 490000.0

Log: '[log]'

Task: Unconditional GANs

diff --git a/configs/wgan-gp/README.md b/configs/wgan-gp/README.md

index dec3d1ef5..df6d994e8 100644

--- a/configs/wgan-gp/README.md

+++ b/configs/wgan-gp/README.md

@@ -11,6 +11,7 @@

Generative Adversarial Networks (GANs) are powerful generative models, but suffer from training instability. The recently proposed Wasserstein GAN (WGAN) makes progress toward stable training of GANs, but sometimes can still generate only low-quality samples or fail to converge. We find that these problems are often due to the use of weight clipping in WGAN to enforce a Lipschitz constraint on the critic, which can lead to undesired behavior. We propose an alternative to clipping weights: penalize the norm of gradient of the critic with respect to its input. Our proposed method performs better than standard WGAN and enables stable training of a wide variety of GAN architectures with almost no hyperparameter tuning, including 101-layer ResNets and language models over discrete data. We also achieve high quality generations on CIFAR-10 and LSUN bedrooms.

+

diff --git a/docs/en/changelog.md b/docs/en/changelog.md

index 90d069576..328d13bbb 100644

--- a/docs/en/changelog.md

+++ b/docs/en/changelog.md

@@ -13,7 +13,6 @@

- Efficient Distributed Training for Generative Models: For the highly dynamic training in generative models, we adopt a new way to train dynamic models with `MMDDP`.

- New Modular Design for Flexible Combination: A new design for complex loss modules is proposed for customizing the links between modules, which can achieve flexible combination among different modules.

-

## v0.2.0 (30/05/2021)

#### Highlights

@@ -34,7 +33,6 @@

- Fix error when data_root option in val_cfg or test_cfg are set as None (#28)

- Change latex in quick_run.md to svg url and fix number of checkpoints in modelzoo_statistics.md (#34)

-

## v0.3.0 (02/08/2021)

#### Highlights

@@ -52,7 +50,6 @@

- Revise the logic of `num_classes` in basic conditional gan #69

- Support dynamic eval interval in eval hook #73

-

## v0.4.0 (03/11/2021)

#### Highlights

@@ -71,7 +68,6 @@

- Add support for PyTorch1.9 #115

- Add pre-commit hook for spell checking #135

-

## v0.5.0 (12/01/2022)

#### Highlights

@@ -93,7 +89,6 @@

- Fix bug in SinGAN dataset (#192)

- Fix SAGAN, SNGAN and BigGAN's default `sn_style` (#199, #213)

-

## v0.6.0 (07/03/2022)

#### Highlights

@@ -106,14 +101,12 @@

- Support training on CPU (#238)

- Speed up training (#231)

-

#### Fix bugs and Improvements

- Fix bug in non-distributed training/testing (#239)

- Fix typos and invalid links (#221, #226, #228, #244, #249)

- Add part of Chinese documentation (#250, #257)

-

## v0.7.0 (02/04/2022)

#### Highlights

@@ -128,14 +121,15 @@

- Add multi machine distribute train (#267)

#### Fix bugs and Improvements

+

- Add brief installation steps in README (#270)

- Support random seed for distributed sampler (#271)

- Use hyphen for command line args in apps (#273)

-

## v0.7.1 (30/04/2022)

#### Fix bugs and Improvements

+

- Support train_dataloader, val_dataloader and test_dataloader settings (#281)

- Fix ada typo (#283)

- Add Chinese application tutorial (#284)

diff --git a/docs/en/get_started.md b/docs/en/get_started.md

index 1591426ef..55ba29736 100644

--- a/docs/en/get_started.md

+++ b/docs/en/get_started.md

@@ -18,95 +18,93 @@ If mmcv and mmcv-full are both installed, there will be `ModuleNotFoundError`.

## Installation

-1. Create a conda virtual environment and activate it. (Here, we assume the new environment is called ``open-mmlab``)

+1. Create a conda virtual environment and activate it. (Here, we assume the new environment is called `open-mmlab`)

- ```shell

- conda create -n open-mmlab python=3.7 -y

- conda activate open-mmlab

- ```

+ ```shell

+ conda create -n open-mmlab python=3.7 -y

+ conda activate open-mmlab

+ ```

2. Install PyTorch and torchvision following the [official instructions](https://pytorch.org/), e.g.,

- ```shell

- conda install pytorch torchvision -c pytorch

- ```

+ ```shell

+ conda install pytorch torchvision -c pytorch

+ ```

- Note: Make sure that your compilation CUDA version and runtime CUDA version match.

- You can check the supported CUDA version for precompiled packages on the [PyTorch website](https://pytorch.org/).

+ Note: Make sure that your compilation CUDA version and runtime CUDA version match.

+ You can check the supported CUDA version for precompiled packages on the [PyTorch website](https://pytorch.org/).

- `E.g.1` If you have CUDA 10.1 installed under `/usr/local/cuda` and would like to install

- PyTorch 1.5, you need to install the prebuilt PyTorch with CUDA 10.1.

+ `E.g. 1` If you have CUDA 10.1 installed under `/usr/local/cuda` and would like to install

+ PyTorch 1.5, you need to install the prebuilt PyTorch with CUDA 10.1.

- ```shell

- conda install pytorch cudatoolkit=10.1 torchvision -c pytorch

- ```

+ ```shell

+ conda install pytorch cudatoolkit=10.1 torchvision -c pytorch

+ ```

- `E.g. 2` If you have CUDA 9.2 installed under `/usr/local/cuda` and would like to install

- PyTorch 1.5.1., you need to install the prebuilt PyTorch with CUDA 9.2.

+ `E.g. 2` If you have CUDA 9.2 installed under `/usr/local/cuda` and would like to install

+ PyTorch 1.5.1, you need to install the prebuilt PyTorch with CUDA 9.2.

- ```shell

- conda install pytorch=1.5.1 cudatoolkit=9.2 torchvision=0.6.1 -c pytorch

- ```

+ ```shell

+ conda install pytorch=1.5.1 cudatoolkit=9.2 torchvision=0.6.1 -c pytorch

+ ```

- If you build PyTorch from source instead of installing the prebuilt package,

- you can use more CUDA versions such as 9.0.

+ If you build PyTorch from source instead of installing the prebuilt package,

+ you can use more CUDA versions such as 9.0.

3. Install mmcv-full. We recommend installing the pre-built package as below.

- ```shell

- pip install mmcv-full={mmcv_version} -f https://download.openmmlab.com/mmcv/dist/{cu_version}/{torch_version}/index.html

- ```

+ ```shell

+ pip install mmcv-full={mmcv_version} -f https://download.openmmlab.com/mmcv/dist/{cu_version}/{torch_version}/index.html

+ ```

- Please replace `{cu_version}` and `{torch_version}` in the url to your desired one. For example, to install the latest `mmcv-full` with `CUDA 11` and `PyTorch 1.7.0`, use the following command:

+ Replace `{cu_version}` and `{torch_version}` in the URL with the versions you need. For example, to install the latest `mmcv-full` with `CUDA 11` and `PyTorch 1.7.0`, use the following command:

- ```shell

- pip install mmcv-full -f https://download.openmmlab.com/mmcv/dist/cu110/torch1.7.0/index.html

- ```

+ ```shell

+ pip install mmcv-full -f https://download.openmmlab.com/mmcv/dist/cu110/torch1.7.0/index.html

+ ```

- See [here](https://github.com/open-mmlab/mmcv#install-with-pip) for different versions of MMCV compatible to different PyTorch and CUDA versions.

- Optionally you can choose to compile mmcv from source by the following command

+ See [here](https://github.com/open-mmlab/mmcv#install-with-pip) for the MMCV versions compatible with different PyTorch and CUDA versions.

+ Optionally, you can compile mmcv from source with the following commands:

- ```shell

- git clone https://github.com/open-mmlab/mmcv.git

- cd mmcv

- MMCV_WITH_OPS=1 pip install -e . # package mmcv-full will be installed after this step

- cd ..

- ```

+ ```shell

+ git clone https://github.com/open-mmlab/mmcv.git

+ cd mmcv

+ MMCV_WITH_OPS=1 pip install -e . # package mmcv-full will be installed after this step

+ cd ..

+ ```

- Or directly run

+ Or directly run

- ```shell

- pip install mmcv-full

- ```

+ ```shell

+ pip install mmcv-full

+ ```

4. Clone the MMGeneration repository.

- ```shell

- git clone https://github.com/open-mmlab/mmgeneration.git

- cd mmgeneration

- ```

+ ```shell

+ git clone https://github.com/open-mmlab/mmgeneration.git

+ cd mmgeneration

+ ```

5. Install build requirements and then install MMGeneration.

- ```shell

- pip install -r requirements.txt

- pip install -v -e . # or "python setup.py develop"

- ```

+ ```shell

+ pip install -r requirements.txt

+ pip install -v -e . # or "python setup.py develop"

+ ```
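The find-links URL used in step 3 follows the template `https://download.openmmlab.com/mmcv/dist/{cu_version}/{torch_version}/index.html`. The hypothetical helper below fills that template from plain version strings, assuming the tags are formed as in the example above (CUDA `11.0` becomes `cu110`, PyTorch `1.7.0` becomes `torch1.7.0`) and that CPU-only builds use a `cpu` tag. Check the MMCV installation page for which combinations actually exist.

```python
from typing import Optional


def mmcv_index_url(torch_version: str, cuda_version: Optional[str] = None) -> str:
    """Build the mmcv-full find-links URL (hypothetical helper).

    torch_version: e.g. "1.7.0".
    cuda_version:  e.g. "11.0" or "10.1"; None is assumed to mean a CPU-only build.
    """
    cu_tag = "cpu" if cuda_version is None else "cu" + cuda_version.replace(".", "")
    torch_tag = "torch" + torch_version
    return f"https://download.openmmlab.com/mmcv/dist/{cu_tag}/{torch_tag}/index.html"
```

The result is passed to pip as `pip install mmcv-full -f <url>`.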

Note:

a. Following the above instructions, MMGeneration is installed in `dev` mode,

so any local modifications to the code take effect without reinstalling it.

-b. If you would like to use `opencv-python-headless` instead of `opencv

--python`,

+b. If you would like to use `opencv-python-headless` instead of `opencv-python`,

you can install it before installing MMCV.

### Install with CPU only

The code can be built for CPU only environment (where CUDA isn't available).

-

### A from-scratch setup script

Assuming that you already have CUDA 10.1 installed, here is a full script for setting up MMGeneration with conda.

diff --git a/docs/en/modelzoo_statistics.md b/docs/en/modelzoo_statistics.md

index 6530d3090..4fa28ed55 100644

--- a/docs/en/modelzoo_statistics.md

+++ b/docs/en/modelzoo_statistics.md

@@ -1,49 +1,35 @@

-

# Model Zoo Statistics

-* Number of papers: 15

-* Number of checkpoints: 91

-

- * [Large Scale GAN Training for High Fidelity Natural Image Synthesis](https://github.com/open-mmlab/mmgeneration/blob/master/configs/biggan) (7 ckpts)

-

-

- * [CycleGAN: Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/cyclegan) (6 ckpts)

-

-

- * [Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/dcgan) (3 ckpts)

-

-

- * [Geometric GAN](https://github.com/open-mmlab/mmgeneration/blob/master/configs/ggan) (3 ckpts)

-

-

- * [Improved Denoising Diffusion Probabilistic Models](https://github.com/open-mmlab/mmgeneration/blob/master/configs/improved_ddpm) (3 ckpts)

-

-

- * [Least Squares Generative Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/lsgan) (4 ckpts)

-

-

- * [Progressive Growing of GANs for Improved Quality, Stability, and Variation](https://github.com/open-mmlab/mmgeneration/blob/master/configs/pggan) (3 ckpts)

+- Number of papers: 15

+- Number of checkpoints: 91

- * [Pix2Pix: Image-to-Image Translation with Conditional Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/pix2pix) (4 ckpts)

+ - [Large Scale GAN Training for High Fidelity Natural Image Synthesis](https://github.com/open-mmlab/mmgeneration/blob/master/configs/biggan) (7 ckpts)

+ - [CycleGAN: Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/cyclegan) (6 ckpts)

- * [Positional Encoding as Spatial Inductive Bias in GANs (CVPR'2021)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/positional_encoding_in_gans) (21 ckpts)

+ - [Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/dcgan) (3 ckpts)

+ - [Geometric GAN](https://github.com/open-mmlab/mmgeneration/blob/master/configs/ggan) (3 ckpts)

- * [Self-attention generative adversarial networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/sagan) (9 ckpts)

+ - [Improved Denoising Diffusion Probabilistic Models](https://github.com/open-mmlab/mmgeneration/blob/master/configs/improved_ddpm) (3 ckpts)

+ - [Least Squares Generative Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/lsgan) (4 ckpts)

- * [Singan: Learning a Generative Model from a Single Natural Image (ICCV'2019)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/singan) (3 ckpts)

+ - [Progressive Growing of GANs for Improved Quality, Stability, and Variation](https://github.com/open-mmlab/mmgeneration/blob/master/configs/pggan) (3 ckpts)

+ - [Pix2Pix: Image-to-Image Translation with Conditional Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/pix2pix) (4 ckpts)

- * [Spectral Normalization for Generative Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/sngan_proj) (10 ckpts)

+ - [Positional Encoding as Spatial Inductive Bias in GANs (CVPR'2021)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/positional_encoding_in_gans) (21 ckpts)

+ - [Self-attention generative adversarial networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/sagan) (9 ckpts)

- * [A Style-Based Generator Architecture for Generative Adversarial Networks (CVPR'2019)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/styleganv1) (2 ckpts)

+ - [Singan: Learning a Generative Model from a Single Natural Image (ICCV'2019)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/singan) (3 ckpts)

+ - [Spectral Normalization for Generative Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/sngan_proj) (10 ckpts)

- * [Analyzing and Improving the Image Quality of Stylegan (CVPR'2020)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/styleganv2) (11 ckpts)

+ - [A Style-Based Generator Architecture for Generative Adversarial Networks (CVPR'2019)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/styleganv1) (2 ckpts)

+ - [Analyzing and Improving the Image Quality of Stylegan (CVPR'2020)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/styleganv2) (11 ckpts)

- * [Improved Training of Wasserstein GANs](https://github.com/open-mmlab/mmgeneration/blob/master/configs/wgan-gp) (2 ckpts)

+ - [Improved Training of Wasserstein GANs](https://github.com/open-mmlab/mmgeneration/blob/master/configs/wgan-gp) (2 ckpts)

diff --git a/docs/en/quick_run.md b/docs/en/quick_run.md

index c70515d14..480054765 100644

--- a/docs/en/quick_run.md

+++ b/docs/en/quick_run.md

@@ -39,6 +39,7 @@ python demo/unconditional_demo.py \

[--save-path ${SAVE_PATH}] \

[--device ${GPU_ID}]

```

+

More arguments are available for customizing the sampling procedure. Run `python demo/unconditional_demo.py --help` for details.

### Sample images with conditional GANs

@@ -79,6 +80,7 @@ python demo/conditional_demo.py \

[--save-path ${SAVE_PATH}] \

[--device ${GPU_ID}]

```

+

If `--label` is not passed, images with random labels will be generated.

If `--label` is passed, `${SAMPLES_PER_CLASSES}` images will be generated for each input label.

If `sample_all_classes` is set to true on the command line, `--label` is ignored and the generator outputs images for all categories.
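The label-selection rules above can be restated as a small function. This is a hypothetical summary of the documented behavior, not code from `demo/conditional_demo.py`; it assumes that with `sample_all_classes` every class also receives `samples_per_class` images, and that random labels are drawn uniformly (`num_random` is an illustrative parameter).

```python
import random
from typing import List, Optional, Sequence


def select_labels(num_classes: int,
                  labels: Optional[Sequence[int]] = None,
                  samples_per_class: int = 1,
                  sample_all_classes: bool = False,
                  num_random: int = 16,
                  seed: Optional[int] = None) -> List[int]:
    """Return the class label of every image to generate (hypothetical helper)."""
    if sample_all_classes:
        # `--label` is ignored: every category is sampled.
        labels = range(num_classes)
    if labels is None:
        # No `--label`: draw random labels (uniform sampling is an assumption).
        rng = random.Random(seed)
        return [rng.randrange(num_classes) for _ in range(num_random)]
    # Labels given (or all classes): repeat each one samples_per_class times.
    return [int(lab) for lab in labels for _ in range(samples_per_class)]
```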

@@ -86,6 +88,7 @@ If `sample_all_classes` is set true in command line, `--label` would be ignored

More arguments are available for customizing the sampling procedure. Run `python demo/conditional_demo.py --help` for details.

### Sample images with image translation models

+

MMGeneration provides high-level APIs for translating images by using image translation models. Here is an example of building Pix2Pix and obtaining the translated images.

```python

@@ -116,6 +119,7 @@ python demo/translation_demo.py \

[--save-path ${SAVE_PATH}] \

[--device ${GPU_ID}]

```

+

More arguments are available for customizing the sampling procedure. Run `python demo/translation_demo.py --help` for details.

# 2: Prepare dataset for training and testing

@@ -141,6 +145,7 @@ Here, we provide several download links of datasets frequently used in unconditi

For translation models, we currently support two dataset settings: paired image datasets and unpaired image datasets.

In a paired image dataset, every image is formed by concatenating two corresponding images from the two domains along the width dimension. Create two folders, "train" and "test", filled with images in this format for training and testing. The folder structure is shown below.

+

```

./data/dataset_name/

├── test

@@ -227,9 +232,11 @@ export CUDA_VISIBLE_DEVICES=-1

```

Then run the training script:

+

```shell

python tools/train.py config --work-dir WORK_DIR

```

+

**Note**:

We do not recommend training on CPU because it is very slow. This feature is provided so that users can debug on machines without a GPU. Also, Dynamic GANs cannot be trained on CPU. For more details, please refer to [ddp training](docs/en/tutorials/ddp_train_gans.md).

@@ -247,12 +254,14 @@ metrics = dict(

inception_pkl='work_dirs/inception_pkl/ffhq-256-50k-rgb.pkl',

bgr2rgb=True))

```

+

(We will specify how to obtain `inception_pkl` in the [FID](#FID) section.)

Then, users can use the evaluation script with the following command:

```shell

sh eval.sh ${CONFIG_FILE} ${CKPT_FILE} --batch-size 10 --online

```

+

If you are in a Slurm environment, please switch to [tools/slurm_eval.sh](https://github.com/open-mmlab/mmgeneration/tree/master/tools/slurm_eval.sh) as follows:

```shell

@@ -273,11 +282,13 @@ sh slurm_eval.sh ${PLATFORM} ${JOBNAME} ${CONFIG_FILE} ${CKPT_FILE} \

```

We also provide [tools/utils/translation_eval.py](https://github.com/open-mmlab/mmgeneration/blob/master/tools/utils/translation_eval.py) for evaluating translation models. Set the `target-domain` of the output images and run the following command:

+

```shell

python tools/utils/translation_eval.py ${CONFIG_FILE} ${CKPT_FILE} --t ${target-domain}

```

Note that the current version of MMGeneration supports multi-GPU [FID](#fid) and [IS](#is) evaluation as well as image saving. Use the following commands to enable this feature:

+

```shell

# online evaluation

sh dist_eval.sh ${CONFIG_FILE} ${CKPT_FILE} ${GPUS_NUMBER} --batch-size 10 --online

@@ -294,11 +305,13 @@ sh dist_eval.sh${CONFIG_FILE} ${CKPT_FILE} ${GPUS_NUMBER} --eval none --samples-

# image saving with slurm

sh slurm_eval_multi_gpu.sh ${PLATFORM} ${JOBNAME} ${CONFIG_FILE} ${CKPT_FILE} --eval none --samples-path ${SAMPLES_PATH}

```

+

Multi-GPU evaluation for more metrics will be supported in a subsequent version.

Next, we will specify the details of different metrics one by one.

## **FID**

+

Fréchet Inception Distance is a measure of similarity between two datasets of images. It was shown to correlate well with the human judgment of visual quality and is most often used to evaluate the quality of samples of Generative Adversarial Networks. FID is calculated by computing the Fréchet distance between two Gaussians fitted to feature representations of the Inception network.
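Concretely, if the real and generated Inception features have means `mu_r`, `mu_g` and covariances `S_r`, `S_g`, the distance is `||mu_r - mu_g||^2 + Tr(S_r + S_g - 2 (S_r S_g)^(1/2))`. Below is a minimal NumPy sketch of that formula, assuming the features are already extracted as `(N, D)` arrays; it is not MMGeneration's implementation, and the function names are hypothetical. Instead of a general matrix square root, it uses the identity that `Tr((S_r S_g)^(1/2))` equals the sum of the square roots of the eigenvalues of the symmetric PSD matrix `S_r^(1/2) S_g S_r^(1/2)`.

```python
import numpy as np


def _sqrtm_psd(mat: np.ndarray) -> np.ndarray:
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(mat)
    vals = np.clip(vals, 0.0, None)  # drop tiny negative eigenvalues caused by rounding
    return (vecs * np.sqrt(vals)) @ vecs.T


def frechet_distance(mu1: np.ndarray, sigma1: np.ndarray,
                     mu2: np.ndarray, sigma2: np.ndarray) -> float:
    """Frechet distance between two Gaussians N(mu1, sigma1) and N(mu2, sigma2)."""
    diff = mu1 - mu2
    sqrt_s1 = _sqrtm_psd(sigma1)
    # Tr((S1 S2)^(1/2)) == sum of sqrt eigenvalues of S1^(1/2) S2 S1^(1/2).
    middle = sqrt_s1 @ sigma2 @ sqrt_s1
    eigvals = np.clip(np.linalg.eigvalsh(middle), 0.0, None)
    tr_covmean = np.sqrt(eigvals).sum()
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)


def fid_from_features(real_feats: np.ndarray, fake_feats: np.ndarray) -> float:
    """Fit a Gaussian to each (N, D) feature set and return their Frechet distance."""
    mu_r, mu_g = real_feats.mean(axis=0), fake_feats.mean(axis=0)
    sigma_r = np.atleast_2d(np.cov(real_feats, rowvar=False))
    sigma_g = np.atleast_2d(np.cov(fake_feats, rowvar=False))
    return frechet_distance(mu_r, sigma_r, mu_g, sigma_g)
```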

In `MMGeneration`, we provide two versions of FID calculation: the commonly used PyTorch version and the one used in the StyleGAN paper. We have compared these two implementations on the StyleGAN2-FFHQ1024 model (the details can be found [here](https://github.com/open-mmlab/mmgeneration/blob/master/configs/styleganv2/README.md)), and the difference in the final results is marginal. Thus, we recommend using the more convenient PyTorch version.

@@ -310,12 +323,14 @@ In `MMGeneration`, we provide two versions for FID calculation. One is the commo

```shell

python tools/utils/inception_stat.py --imgsdir ${IMGS_PATH} --pklname ${PKLNAME} --size ${SIZE}

```

+

In the command above, the script uses the PyTorch InceptionV3 by default. If you want to use Tero's InceptionV3, switch to the script module:

```shell

python tools/utils/inception_stat.py --imgsdir ${IMGS_PATH} --pklname ${PKLNAME} --size ${SIZE} \

--inception-style stylegan --inception-pth ${PATH_SCRIPT_MODULE}

```

+

For more information about extracting the inception state, please refer to this [doc](https://github.com/open-mmlab/mmgeneration/blob/master/docs/en/tutorials/inception_stat.md).

To use the FID metric, you should add the metric in a config file like this:

@@ -328,6 +343,7 @@ metrics = dict(

inception_pkl='work_dirs/inception_pkl/ffhq-256-50k-rgb.pkl',

bgr2rgb=True))

```

+

If `inception_pkl` is not set, the metric calculates the real inception statistics on the fly. If you want to use Tero's InceptionV3, use the following metric configuration:

```python

@@ -340,9 +356,11 @@ metrics = dict(

inception_path='work_dirs/cache/inception-2015-12-05.pt')))

```

+

The `inception_path` indicates the path to Tero's script module.

## Precision and Recall

+

Our `Precision and Recall` implementation follows the version used in StyleGAN2. In this metric, a VGG network is adopted to extract image features. Unfortunately, we have not found a PyTorch VGG implementation that produces results similar to Tero's version used in StyleGAN2. (For the differences, please see this [file](https://github.com/open-mmlab/mmgeneration/blob/master/configs/styleganv2/README.md).) Thus, our implementation adopts [Tero's VGG](https://nvlabs-fi-cdn.nvidia.com/stylegan2-ada-pytorch/pretrained/metrics/vgg16.pt) network by default. Importantly, this script module requires `PyTorch >= 1.6.0`. With a lower PyTorch version, the official PyTorch VGG network is used for feature extraction instead.

To evaluate with `P&R`, please add the following configuration in the config file:

@@ -355,20 +373,25 @@ metrics = dict(

```

## IS

+

Inception score is an objective metric for evaluating the quality of generated images, proposed in [Improved Techniques for Training GANs](https://arxiv.org/pdf/1606.03498.pdf). It uses an InceptionV3 model to predict the class of the generated images and assumes that 1) a high-quality image is confidently classified into a specific class, and 2) a diverse set of images covers a wide range of classes. Therefore, the KL divergence between the conditional class distribution and the marginal class distribution indicates both the quality and the diversity of the generated images. You can see the complete implementation in `metrics.py`, which is based on https://github.com/sbarratt/inception-score-pytorch/blob/master/inception_score.py.
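To make the KL-divergence formulation concrete, here is a minimal NumPy sketch that computes the score from an `(N, C)` array of softmax outputs. It assumes the InceptionV3 predictions are already available and ignores the resize and backend choices discussed below; `inception_score` is a hypothetical helper, not the function in `metrics.py`.

```python
import numpy as np


def inception_score(probs, splits=1, eps=1e-12):
    """Inception Score from an (N, C) array of softmax outputs p(y|x).

    IS = exp(E_x[KL(p(y|x) || p(y))]), where p(y) is the mean of p(y|x) over
    the images. The images can be divided into `splits` groups, and the mean
    and standard deviation of the per-group scores are returned.
    """
    probs = np.asarray(probs, dtype=np.float64)
    scores = []
    for part in np.array_split(probs, splits):
        p_y = part.mean(axis=0, keepdims=True)  # marginal class distribution
        kl = part * (np.log(part + eps) - np.log(p_y + eps))  # per-class KL terms
        scores.append(np.exp(kl.sum(axis=1).mean()))
    return float(np.mean(scores)), float(np.std(scores))


# Confident and diverse predictions: each image is sure of a different class.
print(inception_score(np.eye(4)))                          # IS close to 4
# Identical predictions for every image: no diversity, IS = 1.
print(inception_score(np.tile([0.2, 0.3, 0.5], (10, 1))))  # IS = 1
```

Splitting into groups changes the result because each group has its own marginal p(y): for example, `inception_score(np.eye(4), splits=2)` gives 2 rather than 4.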

To evaluate models with the `IS` metric, add `metrics` to your config file like this:

+

```python

# at the end of the configs/pix2pix/pix2pix_vanilla_unet_bn_facades_b1x1_80k.py

metrics = dict(

IS=dict(type='IS', num_images=106, image_shape=(3, 256, 256)))

```

+

You can run the command below to calculate IS.

+

```shell

python tools/utils/translation_eval.py --t photo \

./configs/pix2pix/pix2pix_vanilla_unet_bn_facades_b1x1_80k.py \

https://download.openmmlab.com/mmgen/pix2pix/refactor/pix2pix_vanilla_unet_bn_1x1_80k_facades_20210902_170442-c0958d50.pth \

--eval IS

```

+

Note that the choice of Inception V3 model and image resize method can significantly influence the final IS score. Therefore, we strongly recommend that users download [Tero's script model of Inception V3](https://nvlabs-fi-cdn.nvidia.com/stylegan2-ada-pytorch/pretrained/metrics/inception-2015-12-05.pt) (loading this script model requires torch >= 1.6) and use `Bicubic` interpolation with the `Pillow` backend. We also provide a template for the [data process pipeline](https://github.com/open-mmlab/mmgeneration/tree/master/configs/_base_/datasets/Inception_Score.py).

We also study how the data loading pipeline and the version of the pretrained Inception V3 influence the IS result. All IS values are evaluated on the same group of images, randomly selected from the ImageNet dataset.

@@ -376,7 +399,7 @@ We also perform a survey on the influence of data loading pipeline and the versi

Show the Comparison Results

| Code Base | Inception V3 Version | Data Loader Backend | Resize Interpolation Method | IS |

-|:---------------------------------------------------------------:|:--------------------:|:-------------------:|:---------------------------:|:---------------------:|

+| :-------------------------------------------------------------: | :------------------: | :-----------------: | :-------------------------: | :-------------------: |

| [OpenAI (baseline)](https://github.com/openai/improved-gan) | Tensorflow | Pillow | Pillow Bicubic | **312.255 +/- 4.970** |

| [StyleGAN-Ada](https://github.com/NVlabs/stylegan2-ada-pytorch) | Tero's Script Model | Pillow | Pillow Bicubic | 311.895 +/- 4.844 |

| mmgen (Ours) | Pytorch Pretrained | cv2 | cv2 Bilinear | 322.932 +/- 2.317 |

@@ -394,26 +417,29 @@ We also perform a survey on the influence of data loading pipeline and the versi

## PPL

+

Perceptual path length measures the difference between consecutive images (their VGG16 embeddings) when interpolating between two random inputs. Drastic changes mean that multiple features have changed together and that they might be entangled. Thus, experiments suggest that a smaller PPL score indicates higher overall image quality. \

As a basis for our metric, we use a perceptually-based pairwise image distance that is calculated as a weighted difference between two VGG16 embeddings, where the weights are fit so that the metric agrees with human perceptual similarity judgments.

-If we subdivide a latent space interpolation path into linear segments, we can define the total perceptual length of this segmented path as the sum of perceptual differences over each segment, and a natural definition for the perceptual path length would be the limit of this sum under infinitely fine subdivision, but in practice we approximate it using a small subdivision ``$`\epsilon=10^{-4}`$``.

+If we subdivide a latent space interpolation path into linear segments, we can define the total perceptual length of this segmented path as the sum of perceptual differences over each segment, and a natural definition for the perceptual path length would be the limit of this sum under infinitely fine subdivision, but in practice we approximate it using a small subdivision `` $`\epsilon=10^{-4}`$ ``.

The average perceptual path length in latent `space` Z, over all possible endpoints, is therefore

-``$$`L_Z = E[\frac{1}{\epsilon^2}d(G(slerp(z_1,z_2;t))), G(slerp(z_1,z_2;t+\epsilon)))]`$$``

+`` $$`L_Z = E[\frac{1}{\epsilon^2}d(G(slerp(z_1,z_2;t)), G(slerp(z_1,z_2;t+\epsilon)))]`$$ ``

Computing the average perceptual path length in latent `space` W is carried out in a similar fashion, except that the interpolation is linear (lerp) between the intermediate latent codes `` $`f(z_1)`$ `` and `` $`f(z_2)`$ ``:

-``$$`L_Z = E[\frac{1}{\epsilon^2}d(G(slerp(z_1,z_2;t))), G(slerp(z_1,z_2;t+\epsilon)))]`$$``

+`` $$`L_W = E[\frac{1}{\epsilon^2}d(g(lerp(f(z_1),f(z_2);t)), g(lerp(f(z_1),f(z_2);t+\epsilon)))]`$$ ``

-Where ``$`z_1, z_2 \sim P(z)`$``, and ``$` t \sim U(0,1)`$`` if we set `sampling` to full, ``$` t \in \{0,1\}`$`` if we set `sampling` to end. ``$` G`$`` is the generator(i.e. ``$` g \circ f`$`` for style-based networks), and ``$` d(.,.)`$`` evaluates the perceptual distance between the resulting images.We compute the expectation by taking 100,000 samples (set `num_images` to 50,000 in our code).

+Where `` $`z_1, z_2 \sim P(z)`$ ``, and `` $` t \sim U(0,1)`$ `` if `sampling` is set to `full`, or `` $` t \in \{0,1\}`$ `` if `sampling` is set to `end`. `` $` G`$ `` is the generator (i.e., `` $` g \circ f`$ `` for style-based networks), and `` $` d(.,.)`$ `` evaluates the perceptual distance between the resulting images. We compute the expectation by taking 100,000 samples (set `num_images` to 50,000 in our code).
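The following NumPy sketch evaluates the Z-space term inside the expectation for a batch of latent pairs. It follows the spherical interpolation used in `L_Z`, but the generator and the distance are toy, hypothetical stand-ins: a real evaluation would call the trained generator and a learned perceptual distance (the reference implementation linked below uses LPIPS) on the generated images. For W space, one would instead interpolate linearly between the mapped codes.

```python
import numpy as np


def normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def slerp(a, b, t):
    """Spherical interpolation between batches of latents a and b, shape (N, D).

    Assumes a and b are not parallel (true almost surely for Gaussian latents).
    """
    a, b = normalize(a), normalize(b)
    d = np.sum(a * b, axis=-1, keepdims=True)  # cosine of the angle between a and b
    angle = np.arccos(np.clip(d, -1.0, 1.0)) * t
    c = normalize(b - d * a)  # unit vector orthogonal to a in the a-b plane
    return normalize(a * np.cos(angle) + c * np.sin(angle))


def ppl_z_terms(generator, distance, z1, z2, t, eps=1e-4):
    """Per-sample terms d(G(slerp(z1, z2; t)), G(slerp(z1, z2; t + eps))) / eps^2."""
    t = np.asarray(t, dtype=np.float64).reshape(-1, 1)
    img_t = generator(slerp(z1, z2, t))
    img_t_eps = generator(slerp(z1, z2, t + eps))
    return distance(img_t, img_t_eps) / eps**2


# Toy stand-ins (hypothetical): identity "generator", squared L2 "perceptual" distance.
def identity_generator(z):
    return z


def squared_l2(x, y):
    return np.sum((x - y) ** 2, axis=-1)


rng = np.random.default_rng(0)
z1, z2 = rng.normal(size=(16, 512)), rng.normal(size=(16, 512))
t = rng.uniform(0.0, 1.0, size=16)  # 'full' sampling: t ~ U(0, 1)
print(ppl_z_terms(identity_generator, squared_l2, z1, z2, t).mean())
```

With the identity generator and squared L2 distance, each term approaches the squared angle between the two latents, which is a handy sanity check before plugging in a real generator.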

You can find the complete implementation in `metrics.py`, which refers to https://github.com/rosinality/stylegan2-pytorch/blob/master/ppl.py.

To evaluate models with the `PPL` metric, add `metrics` to your config file like this:

+

```python

# at the end of the configs/styleganv2/stylegan2_c2_ffhq_1024_b4x8.py

metrics = dict(

ppl_wend=dict(type='PPL', space='W', sampling='end', num_images=50000, image_shape=(3, 1024, 1024)))

```

+

You can run the command below to calculate PPL.

```shell

@@ -423,13 +449,16 @@ python tools/evaluation.py ./configs/styleganv2/stylegan2_c2_ffhq_1024_b4x8.py \

```

## SWD

+

Sliced Wasserstein distance is a discrepancy measure for probability distributions; a smaller distance indicates that the generated images look more like the real ones. We obtain the Laplacian pyramid of every image and extract patches from it as descriptors; SWD is then the sliced Wasserstein distance between the real and fake descriptors.
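The core random-projection step can be sketched as follows (a NumPy sketch in the spirit of the reference linked below, not a copy of it). It assumes the Laplacian-pyramid patch descriptors have already been extracted and normalized, and that both sets contain the same number of descriptors; `sliced_wasserstein` is a hypothetical helper name.

```python
import numpy as np


def sliced_wasserstein(desc_a, desc_b, dir_repeats=4, dirs_per_repeat=128, seed=0):
    """Approximate SWD between two equal-size (N, D) descriptor sets.

    Both sets are projected onto random unit directions. For each direction,
    the 1-D Wasserstein-1 distance between two equal-size samples is the mean
    absolute difference of their sorted projections.
    """
    desc_a = np.asarray(desc_a, dtype=np.float64)
    desc_b = np.asarray(desc_b, dtype=np.float64)
    assert desc_a.shape == desc_b.shape, "this sketch assumes equal-size sets"
    rng = np.random.default_rng(seed)
    results = []
    for _ in range(dir_repeats):
        dirs = rng.normal(size=(desc_a.shape[1], dirs_per_repeat))
        dirs /= np.linalg.norm(dirs, axis=0, keepdims=True)  # unit-length columns
        proj_a = np.sort(desc_a @ dirs, axis=0)
        proj_b = np.sort(desc_b @ dirs, axis=0)
        results.append(np.mean(np.abs(proj_a - proj_b)))
    return float(np.mean(results))


rng = np.random.default_rng(1)
real_desc = rng.normal(size=(200, 16))
print(sliced_wasserstein(real_desc, real_desc))        # 0.0 for identical sets
print(sliced_wasserstein(real_desc, real_desc * 1.5))  # grows as the sets diverge
```

Sorting the projections matches the i-th smallest value of one set with the i-th smallest of the other, which is the optimal 1-D transport plan; that is what makes each slice cheap to evaluate.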

You can see the complete implementation in `metrics.py`, which refers to https://github.com/tkarras/progressive_growing_of_gans/blob/master/metrics/sliced_wasserstein.py.

To evaluate models with the `SWD` metric, add `metrics` to your config file like this:

+

```python

# at the end of the configs/pggan/pggan_celeba-cropped_128_g8_12Mimgs.py

metrics = dict(swd16k=dict(type='SWD', num_images=16384, image_shape=(3, 128, 128)))

```

+

You can run the command below to calculate SWD.

```shell

@@ -439,12 +468,15 @@ python tools/evaluation.py ./configs/pggan/pggan_celeba-cropped_128_g8_12Mimgs.p

```

## MS-SSIM

+

Multi-scale structural similarity is used to measure the similarity of two images. Here we use MS-SSIM to measure the diversity of generated images: a low MS-SSIM score indicates high diversity. You can see the complete implementation in `metrics.py`, which is based on https://github.com/tkarras/progressive_growing_of_gans/blob/master/metrics/ms_ssim.py.

To evaluate models with the `MS-SSIM` metric, add `metrics` to your config file like this:

+

```python

# at the end of the configs/pggan/pggan_celeba-cropped_128_g8_12Mimgs.py

metrics = dict(ms_ssim10k=dict(type='MS_SSIM', num_images=10000))

```

+

You can run the command below to calculate MS-SSIM.

```shell

@@ -453,7 +485,6 @@ python tools/evaluation.py ./configs/pggan/pggan_celeba-cropped_128_g8_12Mimgs.p

--batch-size 64 --online --eval ms_ssim10k

```

-

# 5: Evaluation during training

In this section, we discuss how to evaluate generative models, especially GANs, during training. Note that `MMGeneration` only supports distributed training, so the evaluation metric adopted in the training procedure should also run in a distributed style. Currently, only `FID` has been implemented and tested in an efficient distributed version. Efficient distributed versions of other metrics will be supported in the near future. Thus, in the following part, we specify how to evaluate your models with the `FID` metric during training.

@@ -503,6 +534,7 @@ data = dict(

We highly recommend pre-calculating the inception pickle file in advance, which significantly reduces the evaluation cost.

We also provide `TranslationEvalHook` for users to evaluate translation models during training. The only difference from `GenerativeEvalHook` is that you need to specify the target domain of the evaluated model. For example, to evaluate the model with the `FID` metric, add the following Python code to your config file:

+

```python

evaluation = dict(

type='TranslationEvalHook',

diff --git a/docs/en/tutorials/applications.md b/docs/en/tutorials/applications.md

index 49d8221d0..6c71df906 100644

--- a/docs/en/tutorials/applications.md

+++ b/docs/en/tutorials/applications.md

@@ -1,6 +1,7 @@

# Tutorial 8: Applications with Generative Models

## Interpolation

+

The generative model in the GAN architecture learns to map points in the latent space to generated images. The latent space has no meaning other than the meaning applied to it via the generative model. To explore the structure of the latent space, one thing we can do is interpolate a sequence of points between two endpoints in the latent space and inspect the images these points yield. (E.g., if features that are absent from both endpoints appear in the middle of a linear interpolation path, this is a sign that the latent space is entangled and the factors of variation are not properly separated.)

We provide an application script for this purpose. You can use [apps/interpolate_sample.py](https://github.com/open-mmlab/mmgeneration/tree/master/apps/interpolate_sample.py) with the following commands for interpolation with unconditional models:

@@ -16,6 +17,7 @@ python apps/interpolate_sample.py \

[--samples-path ${SAMPLES_PATH}] \

[--batch-size ${BATCH_SIZE}] \

```

+

Here, we provide two kinds of `show-mode`: `sequence` and `group`. In `sequence` mode, we first sample a sequence of endpoints, then interpolate points between each two consecutive endpoints in order; generated images are saved individually. In `group` mode, we sample several pairs of endpoints, then interpolate points between the two endpoints of each pair; generated images are saved in a single picture. In addition, `space` refers to the latent code space: you can choose 'z' or 'w' (the latter refers to the style space in the StyleGAN series). `endpoint` indicates the number of endpoints you want to sample (it should be an even number in `group` mode), and `interval` is the number of points (including endpoints) interpolated between two endpoints.
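The difference between the two modes can be sketched with plain linear interpolation in NumPy. These are hypothetical helpers that only illustrate how endpoints are paired and how `interval` counts points; the actual script may use a different interpolation mode or batching.

```python
import numpy as np


def lerp_path(start, end, interval):
    """Return `interval` latents from start to end, both endpoints included."""
    weights = np.linspace(0.0, 1.0, interval)[:, None]  # shape (interval, 1)
    return (1.0 - weights) * start[None, :] + weights * end[None, :]


def interpolate(endpoints, interval, show_mode="sequence"):
    """Interpolate between consecutive endpoints ('sequence') or disjoint pairs ('group')."""
    if show_mode == "sequence":
        pairs = zip(endpoints[:-1], endpoints[1:])
    elif show_mode == "group":
        if len(endpoints) % 2 != 0:
            raise ValueError("'group' mode needs an even number of endpoints")
        pairs = zip(endpoints[0::2], endpoints[1::2])
    else:
        raise ValueError(f"unknown show_mode: {show_mode}")
    return [lerp_path(a, b, interval) for a, b in pairs]


rng = np.random.default_rng(0)
endpoints = rng.normal(size=(4, 512))  # 4 endpoints in a 512-d latent space
print(len(interpolate(endpoints, interval=10)))                     # 3 paths
print(len(interpolate(endpoints, interval=10, show_mode="group")))  # 2 paths
```

With the same four endpoints, `sequence` mode chains them into three paths, while `group` mode pairs them into two independent paths, which is why `group` mode requires an even number of endpoints.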

More arguments are also available for customizing your interpolation procedure.

@@ -36,9 +38,11 @@ python apps/conditional_interpolate.py \

[--samples-path ${SAMPLES_PATH}] \

[--batch-size ${BATCH_SIZE}] \

```

+

Here, unlike unconditional models, you need to provide the name of the embedding layer if the label embedding is shared among conv_blocks. Otherwise, you can set the `embedding-name` to 'NULL'. Considering that conditional models have noise and label as inputs, we provide `fix-z` to fix the noise and `fix-y` to fix the label when performing image interpolation.

## Projection

+

Inverting the synthesis network g is an interesting problem that has many applications. For example, manipulating a given image in the latent feature space requires finding a matching latent code for it first. Generally, you can reconstruct a target image by optimizing over the latent vector, using lpips and pixel-wise loss as the objective function.
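A toy version of this optimization, under loudly simplifying assumptions: the synthesis network is replaced by a fixed linear map so the gradient of the pixel-wise loss can be written by hand, and the LPIPS term is dropped. `project` is a hypothetical helper and does not reflect the internals of the projection script described below.

```python
import numpy as np


def project(generator_matrix, target, steps=500, lr=0.1, seed=0):
    """Find a latent w whose "image" generator_matrix @ w matches target.

    A linear map stands in for the synthesis network g so the gradient of the
    pixel-wise mean squared error can be written by hand. A real projector
    would backpropagate through g and typically add an LPIPS term.
    """
    rng = np.random.default_rng(seed)
    w = rng.normal(size=generator_matrix.shape[1])  # random initial latent
    for _ in range(steps):
        residual = generator_matrix @ w - target                  # per-pixel error
        grad = 2.0 * generator_matrix.T @ residual / target.size  # d(MSE)/dw
        w -= lr * grad                                            # gradient descent step
    return w


rng = np.random.default_rng(1)
g = rng.normal(size=(64, 4))  # toy "synthesis network": 4-d latent -> 64 "pixels"
w_true = rng.normal(size=4)
w_hat = project(g, g @ w_true, steps=2000)
print(np.abs(w_hat - w_true).max())  # small: the latent is recovered
```

Because the toy generator is linear and well conditioned, plain gradient descent recovers the latent exactly; with a real, non-convex generator the result depends on initialization and the loss mix, which is why projectors usually combine perceptual and pixel terms.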

We also provide an application script to find the matching latent vector w of the StyleGAN series synthesis network for given images. You can use [apps/stylegan_projector.py](https://github.com/open-mmlab/mmgeneration/tree/master/apps/stylegan_projector.py) with the following commands:

@@ -50,11 +54,13 @@ python apps/stylegan_projector.py \

${FILES}

[--results-path ${RESULTS_PATH}]

```

+

Here, `FILES` refers to the paths of the images, and the projected latents and reconstructed images will be saved in `results-path`.

More arguments are also available for customizing your projection procedure.

Please use `python apps/stylegan_projector.py --help` to check more details.

## Manipulation

+

A common application of StyleGAN-based models is manipulating the latent space to control the attributes of the synthesized images. Here, we provide users with a simple but popular algorithm based on [SeFa](https://arxiv.org/pdf/2007.06600.pdf). Note that we modify how the original version calculates eigenvectors and offer a more flexible interface.
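For reference, the closed-form step from the SeFa paper can be sketched in a few lines of NumPy. Because our script modifies how eigenvectors are calculated, this shows only the original formulation; `weight` stands for a projection weight that maps latent codes into the generator (a hypothetical toy matrix here), and the helper names are illustrative.

```python
import numpy as np


def sefa_directions(weight, k=5):
    """Top-k semantic directions from a projection weight of shape (out_dim, latent_dim).

    SeFa's closed form: the unit vectors n that maximize ||A n||^2 are the
    eigenvectors of A^T A with the largest eigenvalues.
    """
    eigvals, eigvecs = np.linalg.eigh(weight.T @ weight)  # eigenvalues ascending
    order = np.argsort(eigvals)[::-1][:k]                 # indices of the k largest
    return eigvecs[:, order].T                            # (k, latent_dim), one per row


def edit_latent(latent, direction, alpha):
    """Move a latent code along a semantic direction by step size alpha."""
    return latent + alpha * direction


A = np.diag([1.0, 3.0, 2.0])  # toy weight: axis 1 is stretched the most
dirs = sefa_directions(A, k=2)
print(np.round(np.abs(dirs), 3))  # rows ~ [0, 1, 0] and [0, 0, 1]
```

Eigenvectors are only defined up to sign, so an edit along `direction` and along `-direction` usually changes the same attribute in opposite ways.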

To manipulate your generator, you can run the script [apps/modified_sefa.py](https://github.com/open-mmlab/mmgeneration/tree/master/apps/modified_sefa.py) with the following command:

diff --git a/docs/en/tutorials/config.md b/docs/en/tutorials/config.md

index 71de8d543..48d66f46a 100644

--- a/docs/en/tutorials/config.md

+++ b/docs/en/tutorials/config.md

@@ -21,7 +21,7 @@ When submitting jobs using "tools/train.py" or "tools/evaluation.py", you may sp

- Update values of list/tuples.

The value to be updated may be a list or a tuple. For example, the config file normally sets `workflow=[('train', 1)]`. If you want to

- change this key, you may specify `--cfg-options workflow="[(train,1),(val,1)]"`. Note that the quotation mark \" is necessary to

+ change this key, you may specify `--cfg-options workflow="[(train,1),(val,1)]"`. Note that the quotation mark " is necessary to

support list/tuple data types, and that **NO** white space is allowed inside the quotation marks in the specified value.

## Config File Structure

@@ -55,7 +55,6 @@ We follow the below style to name config files. Contributors are advised to foll

- `[batch_per_gpu x gpu]`: GPUs and samples per GPU, `b4x8` is used by default in stylegan2.

- `{schedule}`: training schedule. Following Tero's convention, we recommend using the number of images shown to the discriminator, like 5M, 800k. Of course, you can use 5e to indicate 5 epochs or 80k-iters for 80k iterations.

-

## An Example of StyleGAN2

To help users get a basic idea of a complete config and the modules in a modern generation system,

diff --git a/docs/en/tutorials/customize_dataset.md b/docs/en/tutorials/customize_dataset.md

index 1f9b6b8cb..f1298155d 100644

--- a/docs/en/tutorials/customize_dataset.md

+++ b/docs/en/tutorials/customize_dataset.md

@@ -1,8 +1,8 @@

# Tutorial 2: Customize Datasets

In this section, we will detail how to prepare data and adopt proper dataset in our repo for different methods.

-## Datasets for unconditional models

+## Datasets for unconditional models

**Data preparation for unconditional models** is simple. All you need to do is download the images and put them into a directory. Next, set a symlink in the `data` directory. For standard unconditional GANs with static architectures, like DCGAN and StyleGAN2, `UnconditionalImageDataset` is designed to train such unconditional models. Here is an example config for the FFHQ dataset:

@@ -39,6 +39,7 @@ data = dict(

pipeline=train_pipeline)))

```

+

Here, we adopt `RepeatDataset` to avoid frequent dataloader reloading, which accelerates the training procedure. As shown in the example, `pipeline` defines the data pipeline used to process images, including loading from the file system, resizing, cropping and converting to `torch.Tensor`. All supported data pipelines can be found in `mmgen/datasets/pipelines`.
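The idea behind `RepeatDataset` can be sketched with a toy wrapper (hypothetical, not the MMCV/MMGeneration class): one long "epoch" covers the original data `times` times, so per-epoch data loader setup, such as restarting worker processes, happens less often.

```python
class ToyRepeatDataset:
    """Present `dataset` as if it were `times` times longer (toy, not the library class)."""

    def __init__(self, dataset, times):
        self.dataset = dataset
        self.times = times
        self._ori_len = len(dataset)

    def __len__(self):
        return self.times * self._ori_len

    def __getitem__(self, idx):
        # Indices beyond the original length wrap around to the start.
        return self.dataset[idx % self._ori_len]


repeated = ToyRepeatDataset(["img_0.png", "img_1.png", "img_2.png"], times=100)
print(len(repeated))  # 300
print(repeated[4])    # img_1.png
```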

For unconditional GANs with dynamic architectures like PGGAN and StyleGANv1, `GrowScaleImgDataset` is recommended for training. Since such dynamic architectures need real images at different scales, directly adopting `UnconditionalImageDataset` would bring heavy I/O cost for loading multiple high-resolution images. Here is an example we use for training PGGAN on the CelebA-HQ dataset:

@@ -90,6 +91,7 @@ data = dict(

},

len_per_stage=300000))

```

+

In this dataset, you should provide a dictionary of image paths to `imgs_roots`. Thus, you should resize the images in the dataset in advance. For resizing during data pre-processing, we adopt bilinear interpolation in all of the experiments studied in MMGeneration.

Note that this dataset should be used with `PGGANFetchDataHook`. In the config file, this hook should be added to the custom hooks, as shown below.

@@ -108,9 +110,11 @@ custom_hooks = [

priority='VERY_HIGH')

]

```

+

This data-fetching hook helps the dataloader update the status of the dataset to change the data source and batch size during training.

## Datasets for image translation models

+

**Data preparation for translation models** needs a little attention. You should organize the files as described in `quick_run.md`. Fortunately, most official datasets like facades and summer2winter_yosemite already have the right format. Also, you should set a symlink in the `data` directory. For translation models trained on paired data, like Pix2Pix, `PairedImageDataset` is designed to train such models. Here is an example config for the facades dataset:

```python

@@ -275,4 +279,5 @@ data = dict(

test_mode=True))

```

+

Here, `UnpairedImageDataset` will load both images (domain A and B) from different paths and transform them at the same time.

diff --git a/docs/en/tutorials/customize_losses.md b/docs/en/tutorials/customize_losses.md

index 3f5daf313..753e33c48 100644

--- a/docs/en/tutorials/customize_losses.md

+++ b/docs/en/tutorials/customize_losses.md

@@ -22,7 +22,6 @@ class DiscShiftLoss(nn.Module):

# codes can be found in ``mmgen/models/losses/disc_auxiliary_loss.py``

```

-

The goal of this design is to let loss modules be used automatically in the generative models (`MODELS`), without extra code to define the mapping between data and keyword arguments. Thus, unlike other frameworks in `OpenMMLab`, our loss modules accept a special keyword, `data_info`, which is a dictionary defining the mapping between the input arguments and the data from the generative models. Taking `DiscShiftLoss` as an example, users may use this loss in a config file as follows:

```python

@@ -30,6 +29,7 @@ dict(type='DiscShiftLoss',

loss_weight=0.001 * 0.5,

data_info=dict(pred='disc_pred_real')

```

+

The information in `data_info` tells the module to use the `disc_pred_real` data as the input tensor for the `pred` argument. As long as `data_info` is not `None`, our loss module will automatically build up the computational graph.
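The mapping performed through `data_info` can be illustrated in pure Python. This is a hypothetical sketch, not MMGeneration's code: the real `DiscShiftLoss` is an `nn.Module` operating on tensors, and the squared-output loss below is only a stand-in.

```python
def toy_disc_shift_loss(pred, loss_weight=1.0):
    """Hypothetical stand-in loss: weighted mean of squared discriminator outputs."""
    return loss_weight * sum(p * p for p in pred) / len(pred)


def call_with_data_info(loss_fn, data_info, outputs, **kwargs):
    """Fetch each loss argument from `outputs` following the data_info mapping.

    data_info maps a loss argument name (e.g. 'pred') to a key in the
    dictionary of intermediate results produced by the model (e.g. 'disc_pred_real').
    """
    mapped = {arg_name: outputs[key] for arg_name, key in data_info.items()}
    return loss_fn(**mapped, **kwargs)


outputs = dict(disc_pred_real=[1.0, -2.0], disc_pred_fake=[0.5, 0.1])
loss = call_with_data_info(
    toy_disc_shift_loss, dict(pred="disc_pred_real"), outputs, loss_weight=0.001 * 0.5)
print(loss)  # 0.0005 * (1 + 4) / 2, i.e. about 0.00125
```

The model only needs to expose its intermediate results in one dictionary; which entries a loss consumes is decided entirely by the config.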

```python

diff --git a/docs/en/tutorials/customize_models.md b/docs/en/tutorials/customize_models.md

index f062735f1..8ec67f838 100644

--- a/docs/en/tutorials/customize_models.md

+++ b/docs/en/tutorials/customize_models.md

@@ -3,8 +3,8 @@

We basically categorize our supported models into 3 main streams according to tasks:

- Unconditional GANs:

- - Static architectures: DCGAN, StyleGANv2

- - Dynamic architectures: PGGAN, StyleGANv1

+ - Static architectures: DCGAN, StyleGANv2

+ - Dynamic architectures: PGGAN, StyleGANv1

- Image Translation Models: Pix2Pix, CycleGAN

- Internal Learning (Single Image Model): SinGAN

@@ -18,7 +18,6 @@ All of the other modules in `MMGeneration` will be registered as `MODULES`, incl

In all of the related repos in OpenMMLab, users can follow similar steps to build a new component:

-

- Implement a class

- Decorate the class with one of the registries (`MODELS` or `MODULES` in our repo)

- Import this component in related `__init__.py` files
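To illustrate why all three steps are needed, here is a toy registry in pure Python. It mimics the decorator-based pattern but is not `mmcv.Registry`; `ToyRegistry`, `TOY_MODULES`, and `MyGenerator` are hypothetical names.

```python
class ToyRegistry:
    """Toy name -> class registry illustrating the pattern (not mmcv.Registry)."""

    def __init__(self, name):
        self.name = name
        self._module_dict = {}

    def register_module(self):
        # Returns a class decorator, used as @REGISTRY.register_module()
        def _register(cls):
            if cls.__name__ in self._module_dict:
                raise KeyError(f"{cls.__name__} is already registered in {self.name}")
            self._module_dict[cls.__name__] = cls
            return cls
        return _register

    def build(self, cfg):
        """Instantiate the class named by cfg['type'] with the remaining keys."""
        cfg = dict(cfg)
        cls = self._module_dict[cfg.pop("type")]
        return cls(**cfg)


TOY_MODULES = ToyRegistry("module")


# Steps 1 and 2: implement a class and decorate it. Registration happens only when
# this code is executed, which is why step 3 (importing it in __init__.py) matters.
@TOY_MODULES.register_module()
class MyGenerator:
    def __init__(self, out_channels=3):
        self.out_channels = out_channels


gen = TOY_MODULES.build(dict(type="MyGenerator", out_channels=1))
print(type(gen).__name__, gen.out_channels)  # MyGenerator 1
```

If the module defining `MyGenerator` is never imported, the decorator never runs and `build` fails with a `KeyError`, which is the usual symptom of skipping the last step.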

diff --git a/docs/en/tutorials/customize_runtime.md b/docs/en/tutorials/customize_runtime.md

index 7e863a82a..9b0787f7a 100644

--- a/docs/en/tutorials/customize_runtime.md

+++ b/docs/en/tutorials/customize_runtime.md

@@ -41,8 +41,8 @@ To find the `MyOptimizer` module defined above, this module should be imported i

- Modify `mmgen/core/optimizer/__init__.py` to import it.

- The newly defined module should be imported in `mmgen/core/optimizer/__init__.py` so that the registry will

- find the new module and add it:

+ The newly defined module should be imported in `mmgen/core/optimizer/__init__.py` so that the registry will

+ find the new module and add it:

```python

from .my_optimizer import MyOptimizer

@@ -106,34 +106,34 @@ The default optimizer constructor is implemented [here](https://github.com/open-

Tricks not implemented by the optimizer should be implemented through the optimizer constructor (e.g., setting parameter-wise learning rates) or hooks. We list some common settings that could stabilize or accelerate training. Feel free to create a PR or issue for more settings.

- __Use gradient clip to stabilize training__:

- Some models need gradient clip to clip the gradients to stabilize the training process. An example is as below:

+ Some models need gradient clip to clip the gradients to stabilize the training process. An example is as below:

- ```python

- optimizer_config = dict(

- _delete_=True, grad_clip=dict(max_norm=35, norm_type=2))

- ```

+ ```python

+ optimizer_config = dict(

+ _delete_=True, grad_clip=dict(max_norm=35, norm_type=2))

+ ```

- If your config inherits the base config which already sets the `optimizer_config`, you might need `_delete_=True` to override the unnecessary settings. See the [config documentation](https://mmgeneration.readthedocs.io/en/latest/config.html) for more details.

+ If your config inherits the base config which already sets the `optimizer_config`, you might need `_delete_=True` to override the unnecessary settings. See the [config documentation](https://mmgeneration.readthedocs.io/en/latest/config.html) for more details.

- __Use momentum schedule to accelerate model convergence__:

- We support momentum scheduler to modify model's momentum according to learning rate, which could make the model converge in a faster way.

- Momentum scheduler is usually used with LR scheduler, for example, the following config is used in 3D detection to accelerate convergence.

- For more details, please refer to the implementation of [CyclicLrUpdater](https://github.com/open-mmlab/mmcv/blob/f48241a65aebfe07db122e9db320c31b685dc674/mmcv/runner/hooks/lr_updater.py#L327) and [CyclicMomentumUpdater](https://github.com/open-mmlab/mmcv/blob/f48241a65aebfe07db122e9db320c31b685dc674/mmcv/runner/hooks/momentum_updater.py#L130).

-

- ```python

- lr_config = dict(

- policy='cyclic',

- target_ratio=(10, 1e-4),

- cyclic_times=1,

- step_ratio_up=0.4,

- )

- momentum_config = dict(

- policy='cyclic',

- target_ratio=(0.85 / 0.95, 1),

- cyclic_times=1,

- step_ratio_up=0.4,

- )

- ```

+ We support momentum scheduler to modify model's momentum according to learning rate, which could make the model converge in a faster way.

+ Momentum scheduler is usually used with LR scheduler, for example, the following config is used in 3D detection to accelerate convergence.

+ For more details, please refer to the implementation of [CyclicLrUpdater](https://github.com/open-mmlab/mmcv/blob/f48241a65aebfe07db122e9db320c31b685dc674/mmcv/runner/hooks/lr_updater.py#L327) and [CyclicMomentumUpdater](https://github.com/open-mmlab/mmcv/blob/f48241a65aebfe07db122e9db320c31b685dc674/mmcv/runner/hooks/momentum_updater.py#L130).

+

+ ```python

+ lr_config = dict(

+ policy='cyclic',

+ target_ratio=(10, 1e-4),

+ cyclic_times=1,

+ step_ratio_up=0.4,

+ )

+ momentum_config = dict(

+ policy='cyclic',

+ target_ratio=(0.85 / 0.95, 1),

+ cyclic_times=1,

+ step_ratio_up=0.4,

+ )

+ ```

## Customize training schedules

@@ -142,20 +142,20 @@ We support many other learning rate schedules [here](https://github.com/open-mml

- Poly schedule:

- ```python

- lr_config = dict(policy='poly', power=0.9, min_lr=1e-4, by_epoch=False)

- ```

+ ```python

+ lr_config = dict(policy='poly', power=0.9, min_lr=1e-4, by_epoch=False)

+ ```

- CosineAnnealing schedule:

- ```python

- lr_config = dict(

- policy='CosineAnnealing',

- warmup='linear',

- warmup_iters=1000,

- warmup_ratio=1.0 / 10,

- min_lr_ratio=1e-5)

- ```

+ ```python

+ lr_config = dict(

+ policy='CosineAnnealing',

+ warmup='linear',

+ warmup_iters=1000,

+ warmup_ratio=1.0 / 10,

+ min_lr_ratio=1e-5)

+ ```
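To give a feel for the two curves configured above, here is a plain-Python sketch of how the learning rate evolves per iteration. It reflects our understanding of MMCV's poly and cosine-annealing updaters with a linear warmup, simplified for illustration (for example, it ignores `by_epoch` and per-parameter-group handling), so please consult the MMCV source for the exact behavior. `poly_lr` and `cosine_lr` are hypothetical helpers.

```python
import math


def poly_lr(base_lr, cur_iter, max_iters, power=0.9, min_lr=1e-4):
    """Polynomial decay from base_lr (iteration 0) to min_lr (iteration max_iters)."""
    coeff = (1 - cur_iter / max_iters) ** power
    return (base_lr - min_lr) * coeff + min_lr


def cosine_lr(base_lr, cur_iter, max_iters, min_lr_ratio=1e-5,
              warmup_iters=1000, warmup_ratio=1.0 / 10):
    """Cosine annealing from base_lr to base_lr * min_lr_ratio with linear warmup."""
    target_lr = base_lr * min_lr_ratio
    progress = cur_iter / max_iters
    lr = target_lr + 0.5 * (base_lr - target_lr) * (math.cos(math.pi * progress) + 1)
    if cur_iter < warmup_iters:
        # Linear warmup: the multiplier grows from warmup_ratio to 1.
        k = (1 - cur_iter / warmup_iters) * (1 - warmup_ratio)
        lr *= 1 - k
    return lr


for it in (0, 500, 1000, 50000, 100000):
    print(it, poly_lr(0.01, it, 100000), cosine_lr(0.01, it, 100000))
```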

## Customize workflow

@@ -229,8 +229,8 @@ Then we need to make `MyHook` imported. Assuming the file is in `mmgen/core/util

- Modify `mmgen/core/utils/__init__.py` to import it.

- The newly defined module should be imported in `mmgen/core/utils/__init__.py` so that the registry will

- find the new module and add it:

+ The newly defined module should be imported in `mmgen/core/utils/__init__.py` so that the registry will

+ find the new module and add it:

```python

from .my_hook import MyHook

@@ -264,7 +264,6 @@ By default, the hook's priority is set as `NORMAL` during registration.

If the hook is already implemented in MMCV, you can directly modify the config to use the hook as below

-

### Modify default runtime hooks

Some common hooks are not registered through `custom_hooks`, they are

diff --git a/docs/en/tutorials/ddp_train_gans.md b/docs/en/tutorials/ddp_train_gans.md

index 86ecd5f6c..275e569ce 100644

--- a/docs/en/tutorials/ddp_train_gans.md

+++ b/docs/en/tutorials/ddp_train_gans.md

@@ -34,6 +34,7 @@ if self.is_dynamic_ddp:

kwargs.update(dict(ddp_reducer=self.model.reducer))

outputs = self.model.train_step(data_batch, self.optimizer, **kwargs)

```

+

The reducer can rebuild the bucket for the current backward path if you add this line to the `train_step` function:

```python

@@ -54,6 +55,7 @@ if ddp_reducer is not None:

loss_disc.backward()

```

+

That is, users should add the reducer preparation between the loss calculation and the backward pass.

In `MMGeneration`, this feature is adopted as the default way to train DDP models. In configs, users only need to add the following configuration to use the dynamic DDP runner:

@@ -68,8 +70,6 @@ runner = dict(

*We have to admit that this implementation uses a private interface of PyTorch; we will keep maintaining this feature.*

-

-

## DDP Wrapper

Of course, we still support using the `DDP Wrapper` to train your GANs. To switch to the DDP Wrapper, modify the config file like this:

diff --git a/docs/en/tutorials/inception_stat.md b/docs/en/tutorials/inception_stat.md

index 2a2530595..13893eb18 100644

--- a/docs/en/tutorials/inception_stat.md

+++ b/docs/en/tutorials/inception_stat.md

@@ -5,12 +5,13 @@ In MMGeneration, we provide a [script](https://github.com/open-mmlab/mmgeneratio

- [Load images](#load-images)

- - [Load from directory](#load-from-directory)

- - [Load with dataset config](#load-with-dataset-config)

+ - [Load from directory](#load-from-directory)

+ - [Load with dataset config](#load-with-dataset-config)

- [Define the version of Inception Net](#define-the-version-of-inception-net)

- [Control number of images to calculate inception state](#control-number-of-images-to-calculate-inception-state)

- [Control the shuffle operation in data loading](#control-the-shuffle-operation-in-data-loading)

- [Note on inception state extraction between various code bases](#note-on-inception-state-extraction-between-various-code-bases)

+

## Load Images

@@ -20,10 +21,13 @@ We provide two ways to load real data, namely, pass the path of directory that c

### Load from Directory

If you want to pass the path of real images, use the `--imgsdir` argument as in the following command.

+

```shell

python tools/utils/inception_stat.py --imgsdir ${IMGS_PATH} --pklname ${PKLNAME} --size ${SIZE} --flip ${FLIP}

```

+

Then a pre-defined pipeline will be used to load images in `${IMGS_PATH}`.

+

```python

pipeline = [

dict(type='LoadImageFromFile', key='real_img'),

@@ -40,16 +44,21 @@ pipeline = [

dict(type='ImageToTensor', keys=['real_img'])

]

```

+

If `${FLIP}` is set as `True`, the following config of horizontal flip operation would be added to the end of the pipeline.

+

```python

dict(type='Flip', keys=['real_img'], direction='horizontal')

```

If you want to use a specific pipeline other than the pre-defined one, use `--pipeline-cfg` to pass a config file containing the data pipeline you want to use.

+

```shell

python tools/utils/inception_stat.py --imgsdir ${IMGS_PATH} --pklname ${PKLNAME} --pipeline-cfg ${PIPELINE}

```

+

Note that the name of the pipeline dict in `${PIPELINE}` must be `inception_pipeline`. For example,

+

```python

# an example of ${PIPELINE}

inception_pipeline = [

@@ -61,11 +70,13 @@ inception_pipeline = [

### Load with Dataset Config

If you want to use a dataset config, you can use the `--data-config` argument as in the following command.

+

```shell

python tools/utils/inception_stat.py --data-config ${CONFIG} --pklname ${PKLNAME} --subset ${SUBSET}

```

Then a dataset will be instantiated from the `${SUBSET}` entry of the config, where `${SUBSET}` defaults to `test`. Take the following dataset config as an example,

+

```python

# from `imagenet_128x128_inception_stat.py`

data = dict(

@@ -86,6 +97,7 @@ data = dict(

ann_file='data/imagenet/meta/val.txt',

pipeline=test_pipeline))

```

+

If `--subset` is not specified, the config in `data['test']` is used for data loading. To extract the inception state of the training set instead, set `--subset train` in the command. The dataset is then built from the config in `data['train']`, i.e., images under `data/imagenet/train` are loaded and processed with `train_pipeline`.
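The subset selection behaves like a keyed lookup with a default. In this sketch, `select_dataset_cfg` is a hypothetical helper, and the `data` dict is a trimmed-down stand-in for the config above in which the pipelines are replaced by placeholder strings.

```python
def select_dataset_cfg(data, subset='test'):
    """Hypothetical helper: pick the dataset config for the requested
    subset, defaulting to 'test' as `--subset` does."""
    if subset not in data:
        raise KeyError(f'subset {subset!r} not found; available: {sorted(data)}')
    return data[subset]


# Trimmed-down stand-in for the `data` dict; pipelines are placeholder strings.
data = dict(
    train=dict(pipeline='train_pipeline'),
    test=dict(ann_file='data/imagenet/meta/val.txt', pipeline='test_pipeline'),
)

print(select_dataset_cfg(data)['pipeline'])           # test_pipeline
print(select_dataset_cfg(data, 'train')['pipeline'])  # train_pipeline
```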

## Define the Version of Inception Net

@@ -120,6 +132,7 @@ python tools/utils/inception_stat.py --data-config ${CONFIG} --pklname ${PKLNAME

For FID evaluation, the differences between [PyTorch Studio GAN](https://github.com/POSTECH-CVLab/PyTorch-StudioGAN) and ours mainly lie in the selection of real samples. In MMGen, we follow the pipeline of [BigGAN](https://github.com/ajbrock/BigGAN-PyTorch), where the whole training set is used to extract inception statistics. Besides, we use [Tero's Inception](https://nvlabs-fi-cdn.nvidia.com/stylegan2-ada-pytorch/pretrained/metrics/inception-2015-12-05.pt) for feature extraction.

You can download the preprocessed inception states from the following URLs:

+

- [CIFAR10](https://download.openmmlab.com/mmgen/evaluation/fid_inception_pkl/cifar10.pkl)

- [ImageNet1k](https://download.openmmlab.com/mmgen/evaluation/fid_inception_pkl/imagenet.pkl)

- [ImageNet1k-64x64](https://download.openmmlab.com/mmgen/evaluation/fid_inception_pkl/imagenet_64x64.pkl)
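For context on how such statistics are consumed: FID compares the mean and covariance of Inception features of real and generated images using FID = ||μ1 − μ2||² + Tr(Σ1 + Σ2 − 2(Σ1Σ2)^{1/2}). The NumPy-only sketch below assumes each statistics file holds a feature mean vector and a covariance matrix. The key names inside the downloaded `.pkl` files are not verified here, so the example uses random features instead of loading them. `frechet_distance` is a hypothetical helper, not MMGen's API.

```python
import numpy as np


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Frechet distance between Gaussians N(mu1, sigma1) and N(mu2, sigma2)."""
    diff = mu1 - mu2
    # Tr((sigma1 @ sigma2)^{1/2}) equals the sum of square roots of the
    # eigenvalues of sigma1 @ sigma2. The product is similar to a PSD matrix,
    # so its eigenvalues are real and >= 0 up to round-off; clip the noise.
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    tr_covmean = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2)
                 - 2.0 * tr_covmean)


rng = np.random.default_rng(0)
feats_a = rng.normal(size=(500, 8))            # stand-in "real" features
feats_b = rng.normal(loc=0.5, size=(500, 8))   # stand-in "fake" features
stats_a = (feats_a.mean(axis=0), np.cov(feats_a, rowvar=False))
stats_b = (feats_b.mean(axis=0), np.cov(feats_b, rowvar=False))

print(frechet_distance(*stats_a, *stats_a))  # close to 0
print(frechet_distance(*stats_a, *stats_b))  # dominated by the mean shift
```

Because the statistics depend on which real samples and which Inception weights are used, FID values computed against different statistics files are not directly comparable.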

diff --git a/docs/zh_cn/get_started.md b/docs/zh_cn/get_started.md

index d8ebc1784..acb47a596 100644

--- a/docs/zh_cn/get_started.md

+++ b/docs/zh_cn/get_started.md

@@ -17,78 +17,78 @@

## Installation

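-1. Create a conda virtual environment and activate it. (Here we assume the new environment is called ``open-mmlab``.)

+1. Create a conda virtual environment and activate it. (Here we assume the new environment is called `open-mmlab`.)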

- ```shell

- conda create -n open-mmlab python=3.7 -y

- conda activate open-mmlab

- ```

+ ```shell

+ conda create -n open-mmlab python=3.7 -y

+ conda activate open-mmlab

+ ```

2. Install PyTorch and torchvision following the [official instructions](https://pytorch.org/), e.g.,

- ```shell

- conda install pytorch torchvision -c pytorch

- ```

+ ```shell

+ conda install pytorch torchvision -c pytorch

+ ```

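- Note: Make sure that your compilation CUDA version and runtime CUDA version match. You can check the CUDA versions supported by the precompiled packages on the [PyTorch website](https://pytorch.org/).

+ Note: Make sure that your compilation CUDA version and runtime CUDA version match. You can check the CUDA versions supported by the precompiled packages on the [PyTorch website](https://pytorch.org/).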

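- `Example 1` If you have CUDA 10.1 installed under `/usr/local/cuda` and would like to install

- PyTorch 1.5, you need to install the prebuilt PyTorch with CUDA 10.1.

+ `Example 1` If you have CUDA 10.1 installed under `/usr/local/cuda` and would like to install

+ PyTorch 1.5, you need to install the prebuilt PyTorch with CUDA 10.1.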

- ```shell

- conda install pytorch cudatoolkit=10.1 torchvision -c pytorch

- ```

+ ```shell

+ conda install pytorch cudatoolkit=10.1 torchvision -c pytorch

+ ```

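- `Example 2` If you have CUDA 9.2 installed under `/usr/local/cuda` and would like to install

- PyTorch 1.5.1, you need to install the prebuilt PyTorch with CUDA 9.2.

+ `Example 2` If you have CUDA 9.2 installed under `/usr/local/cuda` and would like to install

+ PyTorch 1.5.1, you need to install the prebuilt PyTorch with CUDA 9.2.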

- ```shell

- conda install pytorch=1.5.1 cudatoolkit=9.2 torchvision=0.6.1 -c pytorch

- ```

+ ```shell

+ conda install pytorch=1.5.1 cudatoolkit=9.2 torchvision=0.6.1 -c pytorch

+ ```

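- If you build PyTorch from source instead of installing the prebuilt package, you can use more CUDA versions such as 9.0.

+ If you build PyTorch from source instead of installing the prebuilt package, you can use more CUDA versions such as 9.0.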

3. Install mmcv-full. We recommend installing the prebuilt package as shown below.

- ```shell

- pip install mmcv-full={mmcv_version} -f https://download.openmmlab.com/mmcv/dist/{cu_version}/{torch_version}/index.html

- ```

+ ```shell

+ pip install mmcv-full={mmcv_version} -f https://download.openmmlab.com/mmcv/dist/{cu_version}/{torch_version}/index.html

+ ```

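- Please replace `{cu_version}` and `{torch_version}` in the URL with your desired versions. For example, to install `mmcv-full` with `CUDA 11` and `PyTorch 1.7.0`, use the following command:

+ Please replace `{cu_version}` and `{torch_version}` in the URL with your desired versions. For example, to install `mmcv-full` with `CUDA 11` and `PyTorch 1.7.0`, use the following command: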

- ```shell

- pip install mmcv-full -f https://download.openmmlab.com/mmcv/dist/cu110/torch1.7.0/index.html

- ```

+ ```shell

+ pip install mmcv-full -f https://download.openmmlab.com/mmcv/dist/cu110/torch1.7.0/index.html

+ ```
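The placeholder substitution is plain string templating. `mmcv_find_links` below is a hypothetical helper that fills in the two placeholders and reproduces the example command. It does not check whether a prebuilt wheel actually exists for the chosen combination, so check MMCV's compatibility list first.

```python
def mmcv_find_links(cu_version, torch_version):
    """Hypothetical helper: fill the `{cu_version}` and `{torch_version}`
    placeholders of the mmcv-full index URL."""
    return ('https://download.openmmlab.com/mmcv/dist/'
            f'{cu_version}/torch{torch_version}/index.html')


url = mmcv_find_links('cu110', '1.7.0')
print(f'pip install mmcv-full -f {url}')
```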

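- See [here](https://github.com/open-mmlab/mmcv#install-with-pip) for the MMCV versions compatible with different PyTorch and CUDA versions.

- Optionally, you can compile mmcv from source with the following commands:

+ See [here](https://github.com/open-mmlab/mmcv#install-with-pip) for the MMCV versions compatible with different PyTorch and CUDA versions.

+ Optionally, you can compile mmcv from source with the following commands: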

- ```shell

- git clone https://github.com/open-mmlab/mmcv.git

- cd mmcv

- MMCV_WITH_OPS=1 pip install -e . # package mmcv-full will be installed after this step

- cd ..

- ```

+ ```shell

+ git clone https://github.com/open-mmlab/mmcv.git

+ cd mmcv

+ MMCV_WITH_OPS=1 pip install -e . # package mmcv-full will be installed after this step

+ cd ..

+ ```

- Or directly run

+ Or directly run

- ```shell

- pip install mmcv-full

- ```

+ ```shell

+ pip install mmcv-full

+ ```

4. Clone the MMGeneration repository.

- ```shell

- git clone https://github.com/open-mmlab/mmgeneration.git

- cd mmgeneration

- ```

+ ```shell

+ git clone https://github.com/open-mmlab/mmgeneration.git

+ cd mmgeneration

+ ```

5. Install the build requirements and then install MMGeneration.

- ```shell

- pip install -r requirements.txt

- pip install -v -e . # or "python setup.py develop"

- ```

+ ```shell

+ pip install -r requirements.txt

+ pip install -v -e . # or "python setup.py develop"

+ ```

Note:

diff --git a/docs/zh_cn/modelzoo_statistics.md b/docs/zh_cn/modelzoo_statistics.md

index 79eae5b98..0ceb52125 100644

--- a/docs/zh_cn/modelzoo_statistics.md

+++ b/docs/zh_cn/modelzoo_statistics.md

@@ -1,37 +1,27 @@

-

# Model Zoo Statistics

-* Number of papers: 11

-* Number of checkpoints: 62

-

- * [CycleGAN: Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/cyclegan) (6 ckpts)

-

-

- * [Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/dcgan) (3 ckpts)

-

-

- * [Geometric GAN](https://github.com/open-mmlab/mmgeneration/blob/master/configs/ggan) (3 ckpts)

-

-

- * [Least Squares Generative Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/lsgan) (4 ckpts)

-

-

- * [Progressive Growing of GANs for Improved Quality, Stability, and Variation](https://github.com/open-mmlab/mmgeneration/blob/master/configs/pggan) (3 ckpts)

+- Number of papers: 11

+- Number of checkpoints: 62

- * [Pix2Pix: Image-to-Image Translation with Conditional Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/pix2pix) (4 ckpts)

+ - [CycleGAN: Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/cyclegan) (6 ckpts)

+ - [Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/dcgan) (3 ckpts)

- * [Positional Encoding as Spatial Inductive Bias in GANs (CVPR'2021)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/positional_encoding_in_gans) (21 ckpts)

+ - [Geometric GAN](https://github.com/open-mmlab/mmgeneration/blob/master/configs/ggan) (3 ckpts)

+ - [Least Squares Generative Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/lsgan) (4 ckpts)

- * [SinGAN: Learning a Generative Model from a Single Natural Image (ICCV'2019)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/singan) (3 ckpts)

+ - [Progressive Growing of GANs for Improved Quality, Stability, and Variation](https://github.com/open-mmlab/mmgeneration/blob/master/configs/pggan) (3 ckpts)

+ - [Pix2Pix: Image-to-Image Translation with Conditional Adversarial Networks](https://github.com/open-mmlab/mmgeneration/blob/master/configs/pix2pix) (4 ckpts)

- * [A Style-Based Generator Architecture for Generative Adversarial Networks (CVPR'2019)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/styleganv1) (2 ckpts)

+ - [Positional Encoding as Spatial Inductive Bias in GANs (CVPR'2021)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/positional_encoding_in_gans) (21 ckpts)

+ - [SinGAN: Learning a Generative Model from a Single Natural Image (ICCV'2019)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/singan) (3 ckpts)

- * [Analyzing and Improving the Image Quality of StyleGAN (CVPR'2020)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/styleganv2) (11 ckpts)

+ - [A Style-Based Generator Architecture for Generative Adversarial Networks (CVPR'2019)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/styleganv1) (2 ckpts)

+ - [Analyzing and Improving the Image Quality of StyleGAN (CVPR'2020)](https://github.com/open-mmlab/mmgeneration/blob/master/configs/styleganv2) (11 ckpts)

- * [Improved Training of Wasserstein GANs](https://github.com/open-mmlab/mmgeneration/blob/master/configs/wgan-gp) (2 ckpts)

+ - [Improved Training of Wasserstein GANs](https://github.com/open-mmlab/mmgeneration/blob/master/configs/wgan-gp) (2 ckpts)

diff --git a/docs/zh_cn/quick_run.md b/docs/zh_cn/quick_run.md

index 9c04df55b..70d111665 100644

--- a/docs/zh_cn/quick_run.md

+++ b/docs/zh_cn/quick_run.md

@@ -1,5 +1,3 @@

# 1: Train and Run Inference with Existing Models on Standard Datasets

-

-

## Generate Images with Existing Generative Models

diff --git a/docs/zh_cn/tutorials/applications.md b/docs/zh_cn/tutorials/applications.md

index eaa13f9ca..55a9f6bb1 100644

--- a/docs/zh_cn/tutorials/applications.md

+++ b/docs/zh_cn/tutorials/applications.md

@@ -1,6 +1,7 @@

# Tutorial 8: Applications of Generative Models

## Interpolation

+

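Generative models with a GAN architecture learn to map points in the latent space to generated images, which gives the latent space a concrete meaning. To explore the structure of the latent space, one thing we can do is interpolate a sequence of points between two endpoints in the latent space and observe the images generated from these points. (For example, if features that are present in neither endpoint appear at intermediate points of a linear interpolation path, we take this as a sign that the latent space is entangled and the dynamic attributes are not properly disentangled.)

We provide an application script for users. You can run the following command with [apps/interpolate_sample.py](https://github.com/open-mmlab/mmgeneration/tree/master/apps/interpolate_sample.py) to interpolate with unconditional models.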

@@ -16,6 +17,7 @@ python apps/interpolate_sample.py \

[--samples-path ${SAMPLES_PATH}] \

[--batch-size ${BATCH_SIZE}] \

```

+

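Here we provide two display modes (SHOW_MODE), namely sequence and group. In sequence mode, we first sample a sequence of endpoints, then interpolate points between each pair of consecutive endpoints in order, and the generated images are saved individually. In group mode, we first sample several pairs of endpoints, then interpolate between the two endpoints of each pair, and the generated images are saved in a single picture. In addition, `space` refers to the latent space, where you can choose 'z' or 'w' (the style space in the StyleGAN series); `endpoint` is the number of endpoints to sample (it should be set to an even number in `group` mode); and `interval` is the number of points interpolated between two endpoints (including the endpoints).

Note that we also provide more arguments to customize your interpolation procedure.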
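The difference between the two modes can be illustrated with plain linear interpolation in NumPy. This is a minimal sketch, not the script's implementation: `lerp_path`, `sequence_mode` and `group_mode` are hypothetical helpers, and the 512-dimensional latent size is an illustrative assumption.

```python
import numpy as np


def lerp_path(start, end, interval):
    """Linearly interpolate `interval` points from `start` to `end`,
    including both endpoints."""
    alphas = np.linspace(0.0, 1.0, interval)[:, None]
    return (1.0 - alphas) * start + alphas * end


def sequence_mode(endpoints, interval):
    """Interpolate between consecutive endpoints: e0->e1, e1->e2, ..."""
    return [lerp_path(a, b, interval)
            for a, b in zip(endpoints[:-1], endpoints[1:])]


def group_mode(endpoints, interval):
    """Pair endpoints as (e0, e1), (e2, e3), ...; needs an even count."""
    if len(endpoints) % 2 != 0:
        raise ValueError('group mode needs an even number of endpoints')
    return [lerp_path(endpoints[i], endpoints[i + 1], interval)
            for i in range(0, len(endpoints), 2)]


rng = np.random.default_rng(0)
endpoints = rng.standard_normal((4, 512))  # 4 latent codes (illustrative size)
print(len(sequence_mode(endpoints, interval=10)))   # 3
print(len(group_mode(endpoints, interval=10)))      # 2
print(group_mode(endpoints, interval=10)[0].shape)  # (10, 512)
```

With four endpoints, sequence mode yields three chained paths while group mode yields two independent ones, which is why group mode requires an even `endpoint` count.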