Universal Parts in Universal-Geometry-with-ICA

This repository is intended to be run in a Docker environment. If you are not familiar with Docker, please set up an environment with torch==1.11.0 and install the packages listed in docker/requirements.txt accordingly.

Please create a Docker image as follows:

docker build -t ${USER}/universal docker

Execution as root should be avoided if possible. For example, refer to the Docker documentation to properly set the -u option.

If you don't mind running as root, you can execute the Docker container as follows:

docker run --rm -it --name ica_container \
--gpus device=0 \
-v $PWD:/working \
${USER}/universal bash

Please set the volume so that this directory becomes /working. When running Python scripts in an environment other than Docker, please add an option like --root_dir path/to/universal.

The experiments described in the following sections should be performed in src/.

Note, however, that the descriptions of file and directory locations are based on this directory.

The PCA-transformed and ICA-transformed embeddings used in the experiments are available. For details, please refer to the Save Embeddings part of this section.

We used the fastText embeddings for 157 languages [1]. Download the embeddings as follows:

./scripts/get_157langs_vectors.sh

Or manually download them from the following links:

English | Spanish | Russian | Arabic | Hindi | Chinese | Japanese | French | German | Italian

Please place the embeddings in data/crosslingual/157langs/vectors/.

We used the MUSE [2] fastText embeddings. Download the embeddings as follows:

./scripts/get_MUSE_vectors.sh

Or manually download them from the following links:

English | Spanish | French | German | Italian | Russian

Please place the embeddings in data/crosslingual/MUSE/vectors/.

We used the MUSE [2] dictionaries for word translations. Download the MUSE dictionaries as follows:

./scripts/get_MUSE_dictionaries.sh

For more details, please see the original MUSE repository.

Please place the dictionary files in data/crosslingual/MUSE/dictionaries/.

We used the following fonts:

Please place the font files in data/crosslingual/fonts/.

Perform PCA and ICA transformations as follows:

python crosslingual_save_pca_and_ica_embeddings.py

Alternatively, embeddings for the following language sets used in the paper are available:

Please place the downloaded embedding directories under output/crosslingual/ as in output/crosslingual/en-es-ru-ar-hi-zh-ja/.
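For orientation, the PCA step can be sketched with NumPy alone. This is a minimal illustration under stated assumptions, not the code in crosslingual_save_pca_and_ica_embeddings.py: the function name `pca_whiten` and the random toy data are hypothetical. ICA would then apply a further rotation to the whitened matrix (for example with an off-the-shelf FastICA implementation), which is omitted here.

```python
import numpy as np

def pca_whiten(X):
    """Center X (n_samples x dim) and return PCA-whitened coordinates."""
    Xc = X - X.mean(axis=0, keepdims=True)
    # Thin SVD: Xc = U @ diag(S) @ Vt; rows of Vt are the principal directions.
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    # Columns of U are centered and orthonormal, so scaling by sqrt(n)
    # gives unit-variance axes ordered by explained variance.
    return U * np.sqrt(X.shape[0])

rng = np.random.default_rng(0)
X = rng.normal(size=(500, 8)) @ rng.normal(size=(8, 8))  # toy correlated data
Z = pca_whiten(X)
cov = Z.T @ Z / Z.shape[0]  # close to the identity matrix after whitening
```

Whitening first is a common design choice because it reduces ICA to the search for an orthogonal rotation.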

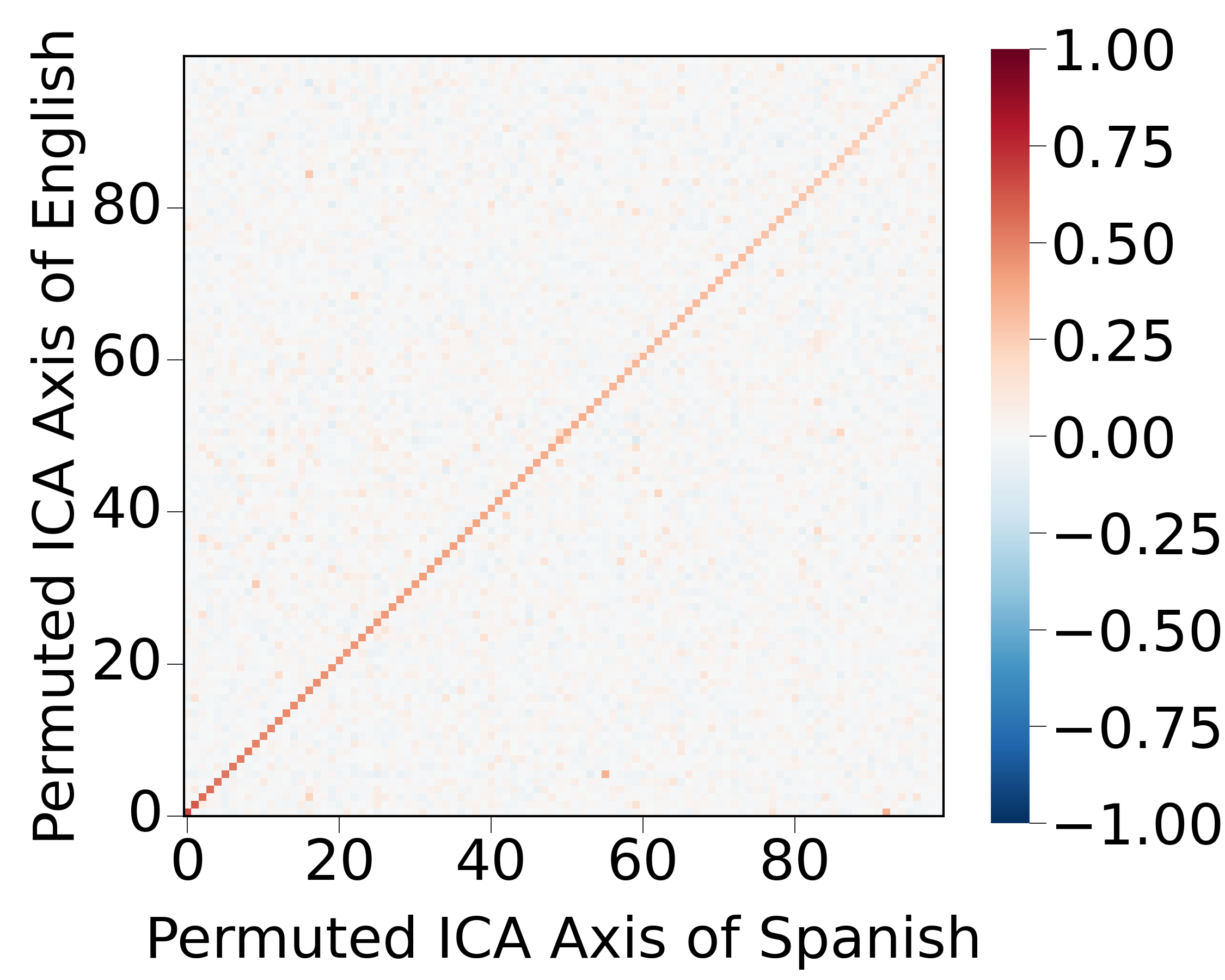

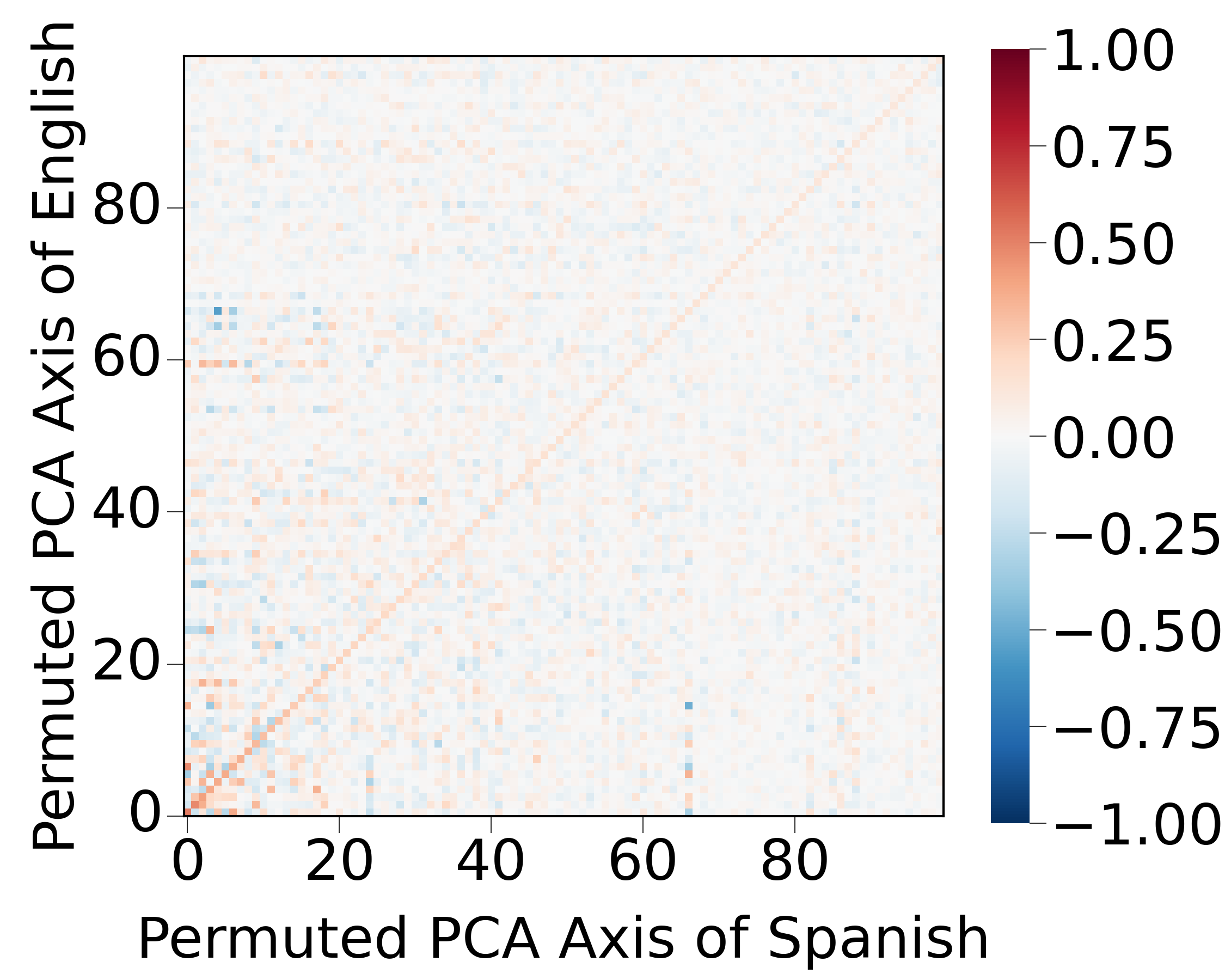

(Figure panels: ICA, PCA)

The figure above represents the correlations between the axes of the ICA-transformed embeddings of English and Spanish. Create the figure as follows:

python crosslingual_show_axis_corr.py

For PCA transformation, please add the --pca option.
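As a rough illustration of what such a correlation figure is built from, the sketch below computes the Pearson correlation between every axis of one embedding matrix and every axis of another. It assumes that row i of both matrices belongs to the same translation pair; the function name `axis_correlations` and the toy data are hypothetical and are not taken from crosslingual_show_axis_corr.py.

```python
import numpy as np

def axis_correlations(A, B):
    """Pearson correlation between every axis of A and every axis of B.

    A and B are (n_pairs x dim) matrices whose i-th rows are assumed to be
    the embeddings of a translation pair.
    """
    A = (A - A.mean(axis=0)) / A.std(axis=0)
    B = (B - B.mean(axis=0)) / B.std(axis=0)
    return A.T @ B / A.shape[0]

rng = np.random.default_rng(0)
en = rng.laplace(size=(1000, 5))           # toy "English" components
perm = np.array([2, 0, 4, 1, 3])           # hidden axis permutation
es = en[:, perm] + 0.1 * rng.normal(size=(1000, 5))  # toy "Spanish" components
corr = axis_correlations(en, es)           # corr[i, j]: en axis i vs es axis j
best = corr.argmax(axis=0)                 # best-matching en axis per es axis
```

In this toy setup the strongest entry in each column of `corr` should sit at the permuted position, which is the kind of structure a diagonal pattern in the sorted correlation matrix reflects.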

(Figure panel: ICA)

The figure above is the heatmap of the normalized ICA-transformed embeddings for multiple languages. Create the figure as follows:

python crosslingual_show_embeddings_heatmap.py

For PCA transformation, please add the --pca option.

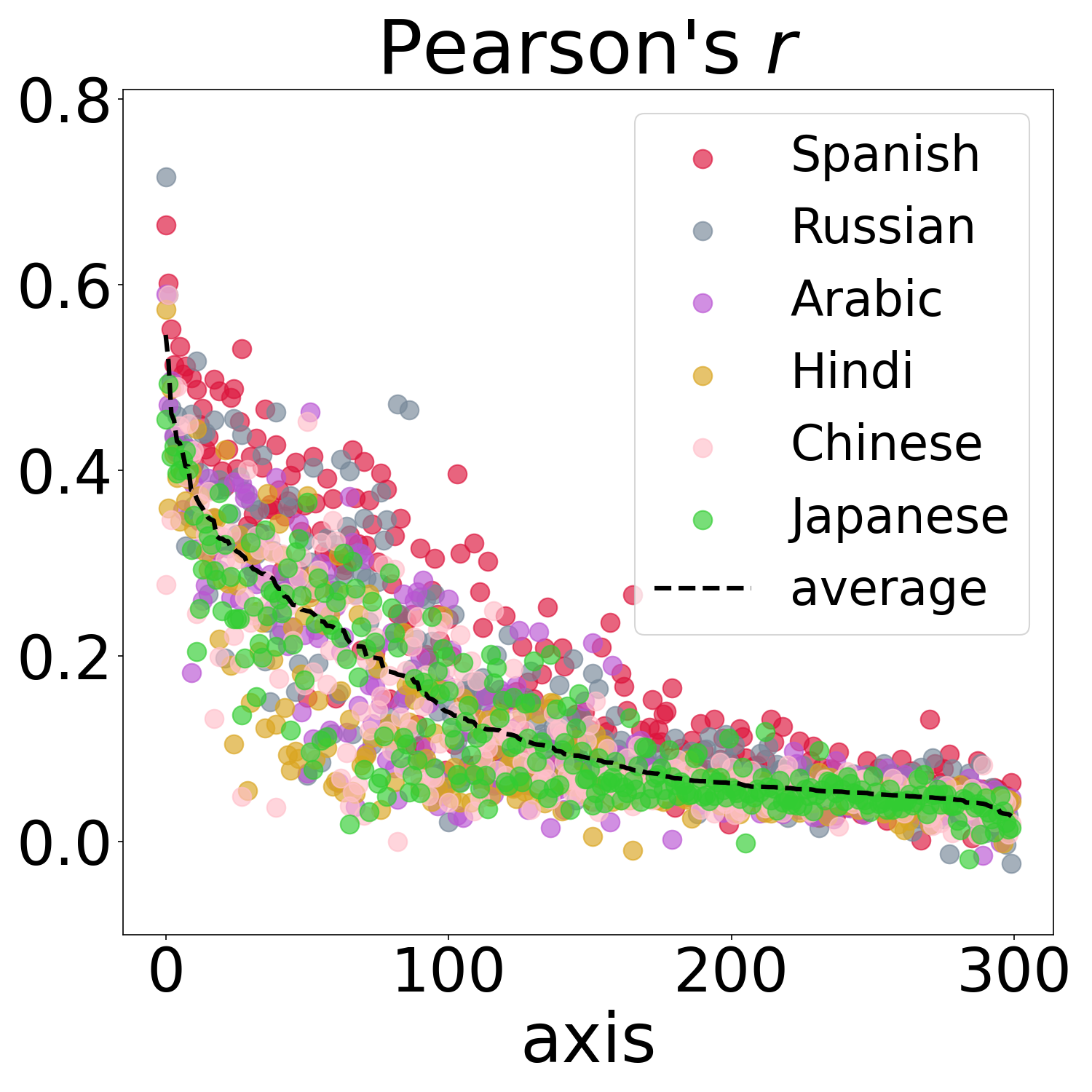

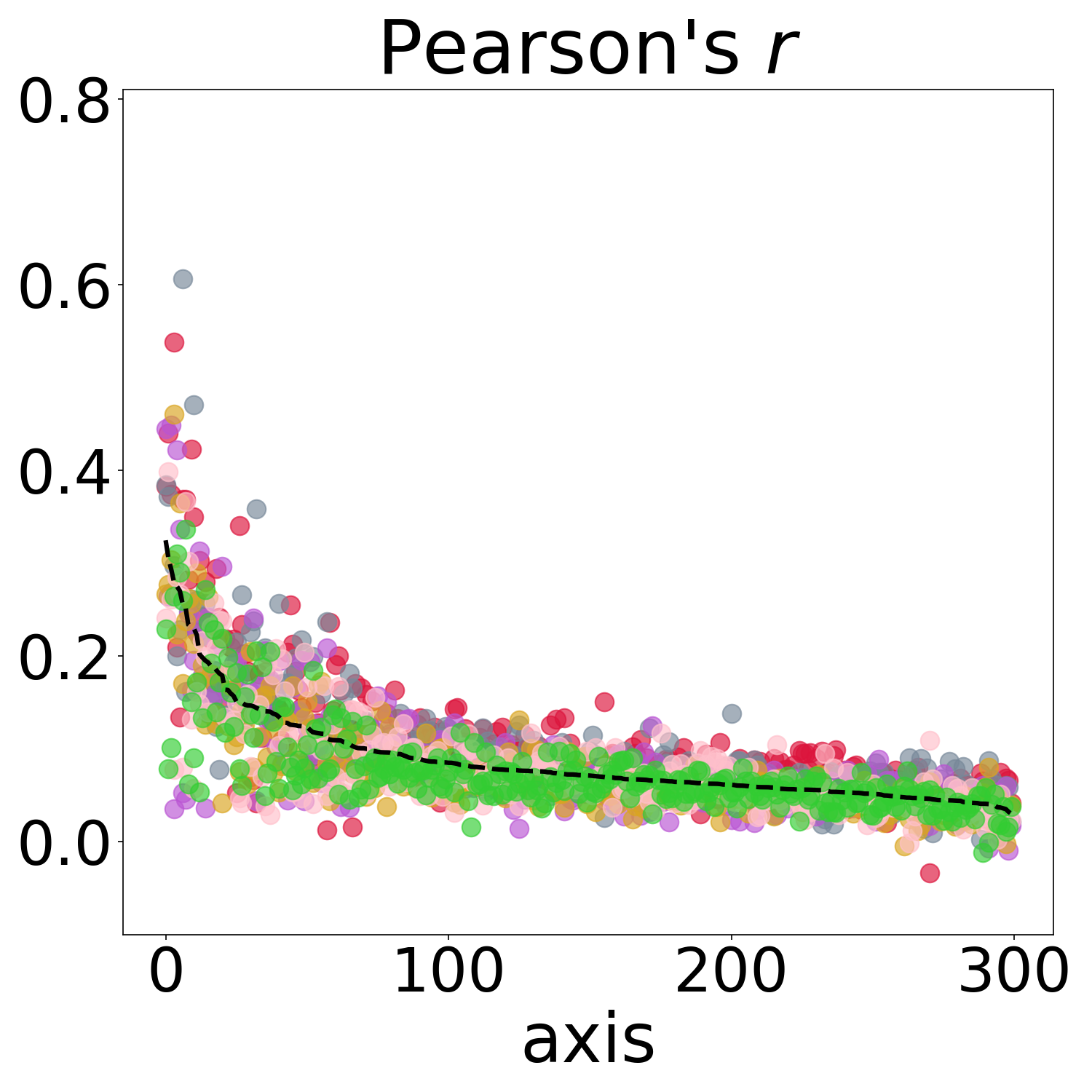

(Figure panels: ICA, PCA)

The figure above is the scatterplot of the correlations between the axes matched by crosslingual_show_embeddings_heatmap.py for the ICA-transformed embeddings of English and other languages. Create the figure as follows:

python crosslingual_show_corr_scatter.py

For PCA transformation, please add the --pca option.

(Figure panels, ICA: English, Spanish)

The figure above is the projection of the embedding space along the axes matched by crosslingual_show_embeddings_heatmap.py for the normalized ICA-transformed embeddings of multiple languages. Create the figure as follows:

python crosslingual_show_scatter_projection.py

For PCA transformation, please add the --pca option.

If you are interested in the order of axes in ICA-transformed embeddings when projecting the embedding space, please see our related work [3].

(Figure panel: ICA)

The figure above shows the logarithmic values of skewness and kurtosis for each axis of the ICA-transformed embeddings of multiple languages. Create the figure as follows:

python crosslingual_show_log_skew_and_kurt.py

For PCA transformation, please add the --pca option.
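The per-axis statistics behind such a figure can be sketched as follows. This is an assumption-laden illustration: the function name `skew_and_kurt` is hypothetical, and whether the script uses excess kurtosis or another convention is not stated here. The sketch computes the raw values; their logarithms could then be plotted.

```python
import numpy as np

def skew_and_kurt(S):
    """Per-axis skewness and excess kurtosis of S (n_samples x dim)."""
    Z = (S - S.mean(axis=0)) / S.std(axis=0)
    skew = (Z ** 3).mean(axis=0)        # third standardized moment
    kurt = (Z ** 4).mean(axis=0) - 3.0  # fourth standardized moment minus 3
    return skew, kurt

rng = np.random.default_rng(0)
S = np.column_stack([
    rng.normal(size=100_000),       # Gaussian axis: both values near zero
    rng.exponential(size=100_000),  # skewed, heavy-tailed axis
])
skew, kurt = skew_and_kurt(S)
```

Axes with large skewness and kurtosis are far from Gaussian, which is the property ICA looks for.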

(Figure panel: ICA)

The figure above shows the scatterplot obtained by selecting two axes matched by crosslingual_show_embeddings_heatmap.py for ICA-transformed embeddings of multiple languages. Note that this figure is not in the paper. Create the figure as follows:

python crosslingual_show_scatter_plot.py

For PCA transformation, please add the --pca option.

When linear, orthogonal, PCA, and ICA transformations are applied to the embeddings, the performance of the alignment task between two languages is determined as follows:

python crosslingual_eval_alignment_task.py

Note that if faiss is not installed, performance may decrease.
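To make the alignment task concrete, here is a minimal sketch of the orthogonal case: fit an orthogonal map on a training dictionary (orthogonal Procrustes) and score held-out pairs by cosine nearest-neighbor retrieval. The function names, the toy data, and the top-1 metric are assumptions for illustration and may differ from what crosslingual_eval_alignment_task.py does.

```python
import numpy as np

def fit_orthogonal_map(X, Y):
    """Orthogonal W minimizing ||X @ W - Y||_F (orthogonal Procrustes)."""
    U, _, Vt = np.linalg.svd(X.T @ Y)
    return U @ Vt

def top1_accuracy(X, Y, W):
    """Fraction of rows of X @ W whose cosine nearest neighbor in Y is row i."""
    P = X @ W
    P = P / np.linalg.norm(P, axis=1, keepdims=True)
    Q = Y / np.linalg.norm(Y, axis=1, keepdims=True)
    nearest = (P @ Q.T).argmax(axis=1)  # brute-force similarity search
    return float((nearest == np.arange(len(X))).mean())

rng = np.random.default_rng(0)
src = rng.normal(size=(200, 10))                  # toy source embeddings
R, _ = np.linalg.qr(rng.normal(size=(10, 10)))    # hidden rotation
tgt = src @ R + 0.01 * rng.normal(size=(200, 10)) # toy target embeddings
W = fit_orthogonal_map(src[:100], tgt[:100])      # fit on training pairs
acc = top1_accuracy(src[100:], tgt[100:], W)      # evaluate on held-out pairs
```

The brute-force similarity matrix is fine for a toy example; for full vocabularies, a nearest-neighbor library is the usual way to make this search practical.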

The PCA-transformed and ICA-transformed embeddings used in the experiments are available. For details, please refer to the Save Embeddings part of this section.

We used the One Billion Word Benchmark [4]. Please download it from the following link:

Please place the data as in data/dynamic/1-billion-word-language-modeling-benchmark-r13output/.

Save the BERT embeddings as follows:

python dynamic_save_raw_embeddings.py

Perform PCA and ICA transformations as follows:

python dynamic_save_pca_and_ica_embeddings.py

Alternatively, the BERT embeddings used in the paper are available:

Please place the downloaded embeddings under output/dynamic/ as in output/dynamic/bert-pca-ica-100000.pkl.

(Figure panels: ICA, PCA)

The figure above represents the correlations between the axes of the ICA-transformed fastText embeddings (calculated in the Cross-lingual Embeddings section) and the ICA-transformed BERT embeddings. Create the figure as follows:

python dynamic_show_axis_corr.py

For PCA transformation, please add the --pca option.

(Figure panels: ICA, PCA)

The figure above is the heatmap of the normalized ICA-transformed fastText embeddings (calculated in the Cross-lingual Embeddings section) and the normalized ICA-transformed BERT embeddings. Create the figure as follows:

python dynamic_show_embeddings_heatmap.py

For PCA transformation, please add the --pca option.

The PCA-transformed and ICA-transformed embeddings used in the experiments are available. For details, please refer to the Save Embeddings part of this section.

We used ImageNet [5]. Please download it from the following link:

If you can use the Kaggle API, please download it as follows:

kaggle competitions download -c imagenet-object-localization-challenge
mkdir -p data/image/imagenet/
unzip imagenet-object-localization-challenge.zip -d data/image/imagenet/

Please place the data as in data/image/imagenet/.

Randomly sample 100 images per class as follows:

python image_save_imagenet_100k.py

Save the image model embeddings as follows:

python image_save_raw_embeddings.py

Perform PCA and ICA transformations as follows:

python image_save_pca_and_ica_embeddings.py

Alternatively, the image model embeddings used in the paper are available:

- vit_base_patch32_224_clip_laion2b (Google Drive)

- resmlp_12_224 (Google Drive)

- swin_small_patch4_window7_224 (Google Drive)

- resnet18 (Google Drive)

- regnety_002 (Google Drive)

Please place the downloaded embeddings under output/image/ as in output/image/vit_base_patch32_224_clip_laion2b-pca_ica.pkl.
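The per-class sampling step mentioned earlier in this section (100 images per class) can be sketched in plain Python. This is only an illustration: the function name `sample_per_class`, the seed handling, and the made-up file names are assumptions, and image_save_imagenet_100k.py may work differently.

```python
import random
from collections import defaultdict

def sample_per_class(paths_and_labels, k, seed=0):
    """Pick up to k items per label, reproducibly for a fixed seed."""
    by_label = defaultdict(list)
    for path, label in paths_and_labels:
        by_label[label].append(path)
    rng = random.Random(seed)
    # Sampling without replacement, so no image is chosen twice.
    return {label: rng.sample(paths, min(k, len(paths)))
            for label, paths in sorted(by_label.items())}

# Toy stand-in for (image path, class id) pairs; the file names are made up.
items = [(f"class{c}/img{i}.JPEG", c) for c in range(3) for i in range(250)]
subset = sample_per_class(items, k=100)
```

Fixing the seed keeps the sampled subset stable across runs, which matters when downstream embeddings are cached.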

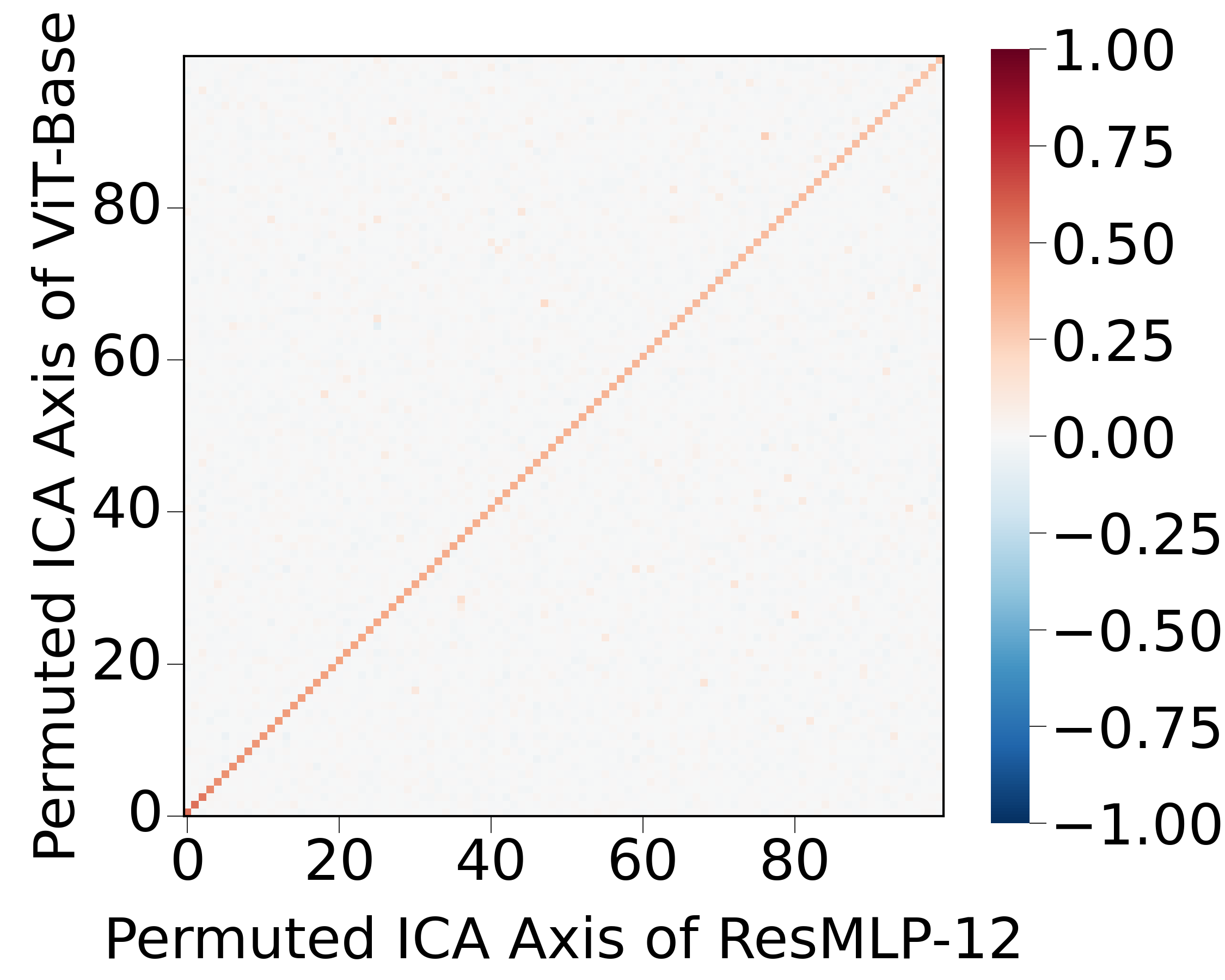

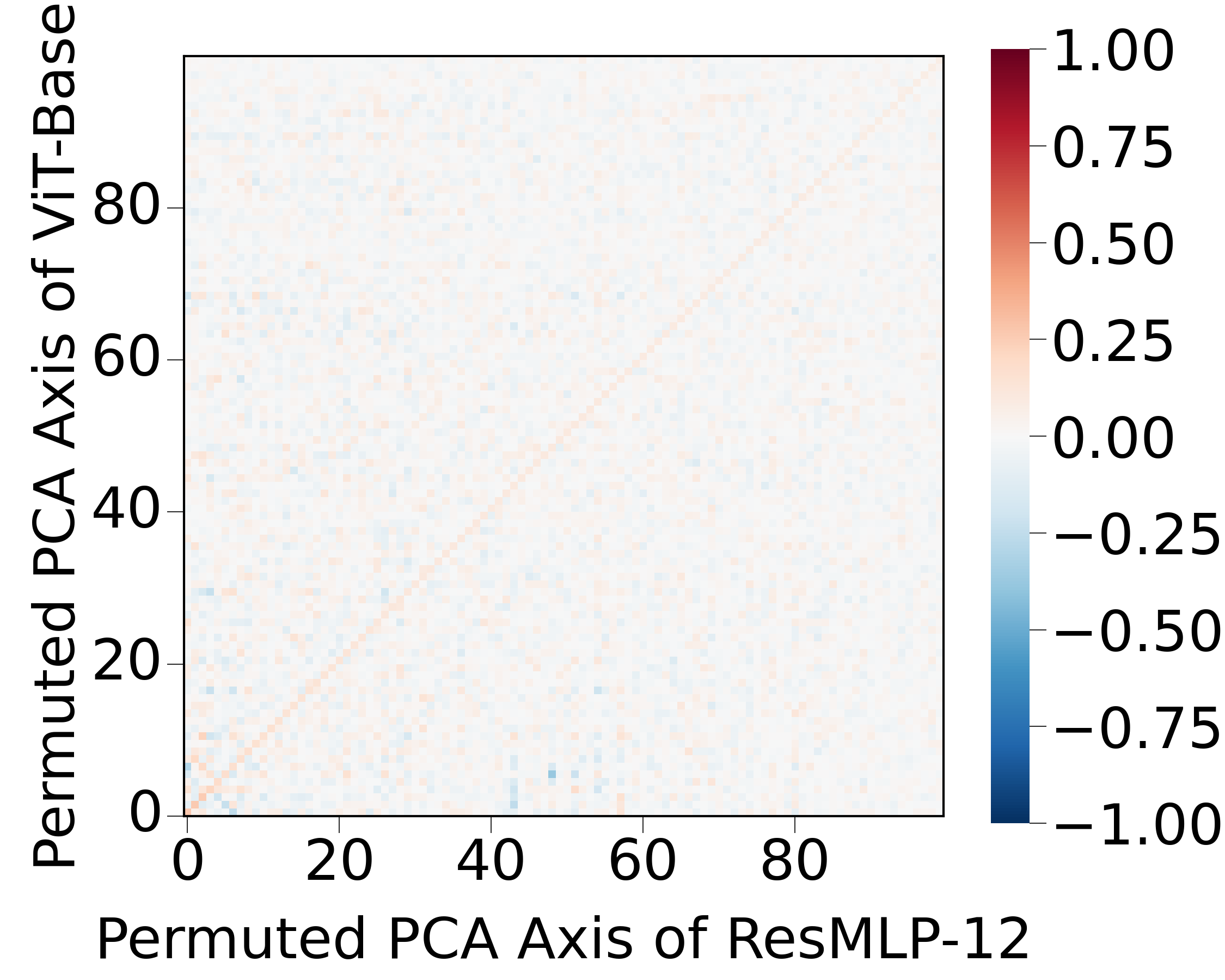

(Figure panels: ICA, PCA)

The figure above represents the correlations between the axes of the ICA-transformed embeddings of image models. Create the figure as follows:

python image_show_axis_corr.py

For PCA transformation, please add the --pca option.

(Figure panel: ICA)

The figure above is the heatmap of the normalized ICA-transformed fastText embeddings (calculated in the Cross-lingual Embeddings section) and the normalized ICA-transformed embeddings of image models. Create the figure as follows:

python image_show_embeddings_heatmap.py

For PCA transformation, please add the --pca option.

We used publicly available repositories. We are especially grateful for the following two repositories. Thank you.

[1] Edouard Grave, Piotr Bojanowski, Prakhar Gupta, Armand Joulin, and Tomás Mikolov. Learning word vectors for 157 languages. LREC 2018.

[2] Guillaume Lample, Alexis Conneau, Marc’Aurelio Ranzato, Ludovic Denoyer, and Hervé Jégou. Word translation without parallel data. ICLR 2018.

[3] Hiroaki Yamagiwa, Yusuke Takase, and Hidetoshi Shimodaira. Axis Tour: Word Tour Determines the Order of Axes in ICA-transformed Embeddings. arXiv 2024.

[4] Ciprian Chelba, Tomás Mikolov, Mike Schuster, Qi Ge, Thorsten Brants, Phillipp Koehn, and Tony Robinson. One billion word benchmark for measuring progress in statistical language modeling. INTERSPEECH 2014.

[5] Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, Alexander C. Berg, and Li Fei-Fei. ImageNet Large Scale Visual Recognition Challenge. IJCV 2015.

[6] Aapo Hyvärinen and Erkki Oja. Independent component analysis: algorithms and applications. Neural Networks 2000.

[7] Tomáš Musil, and David Mareček. Independent Components of Word Embeddings Represent Semantic Features. arXiv 2022.

- Since the URLs of published embeddings may change, please refer to the GitHub repository URL instead of the direct URL when referencing in papers, etc.

- This directory was created by Hiroaki Yamagiwa.