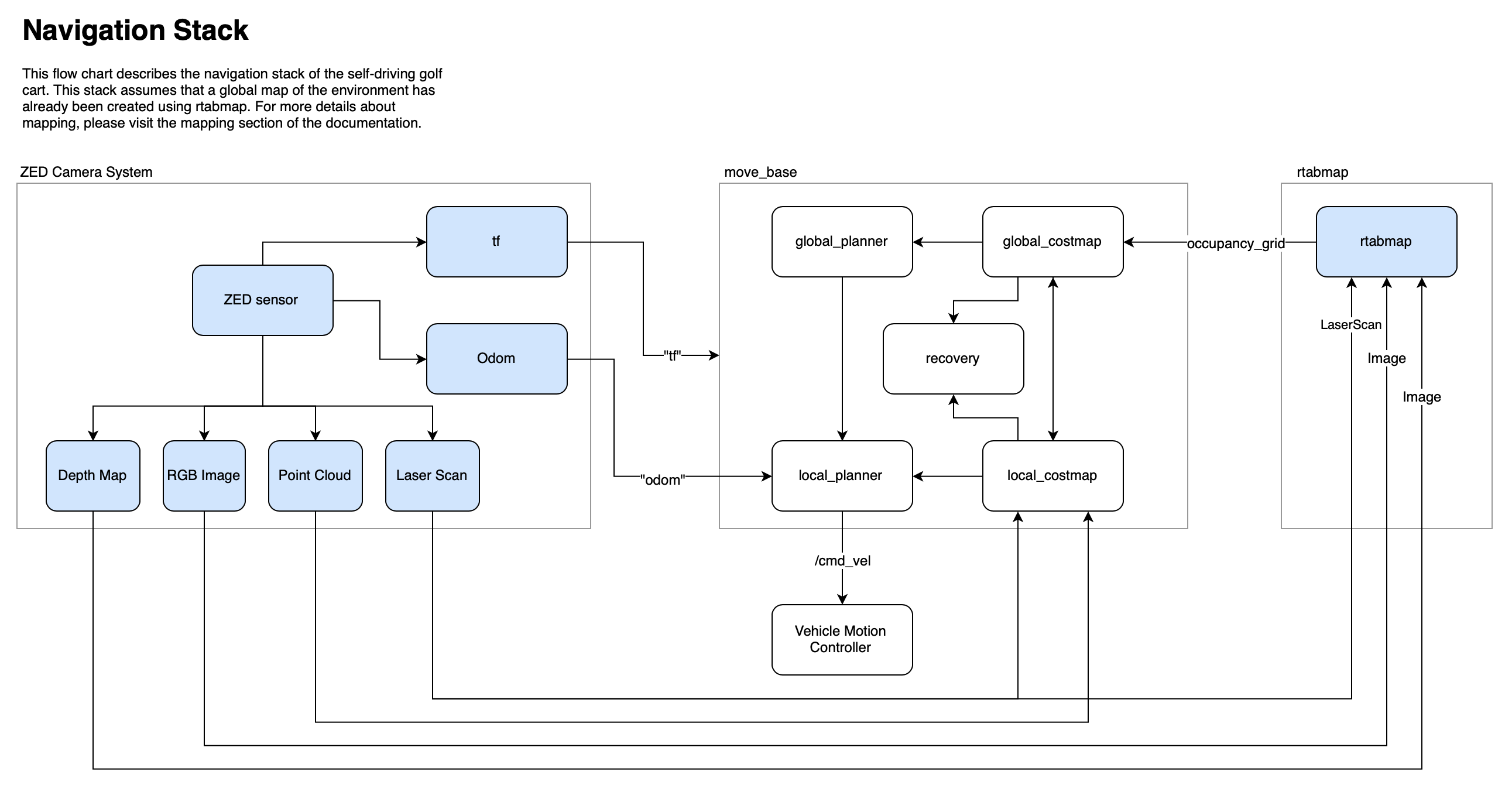

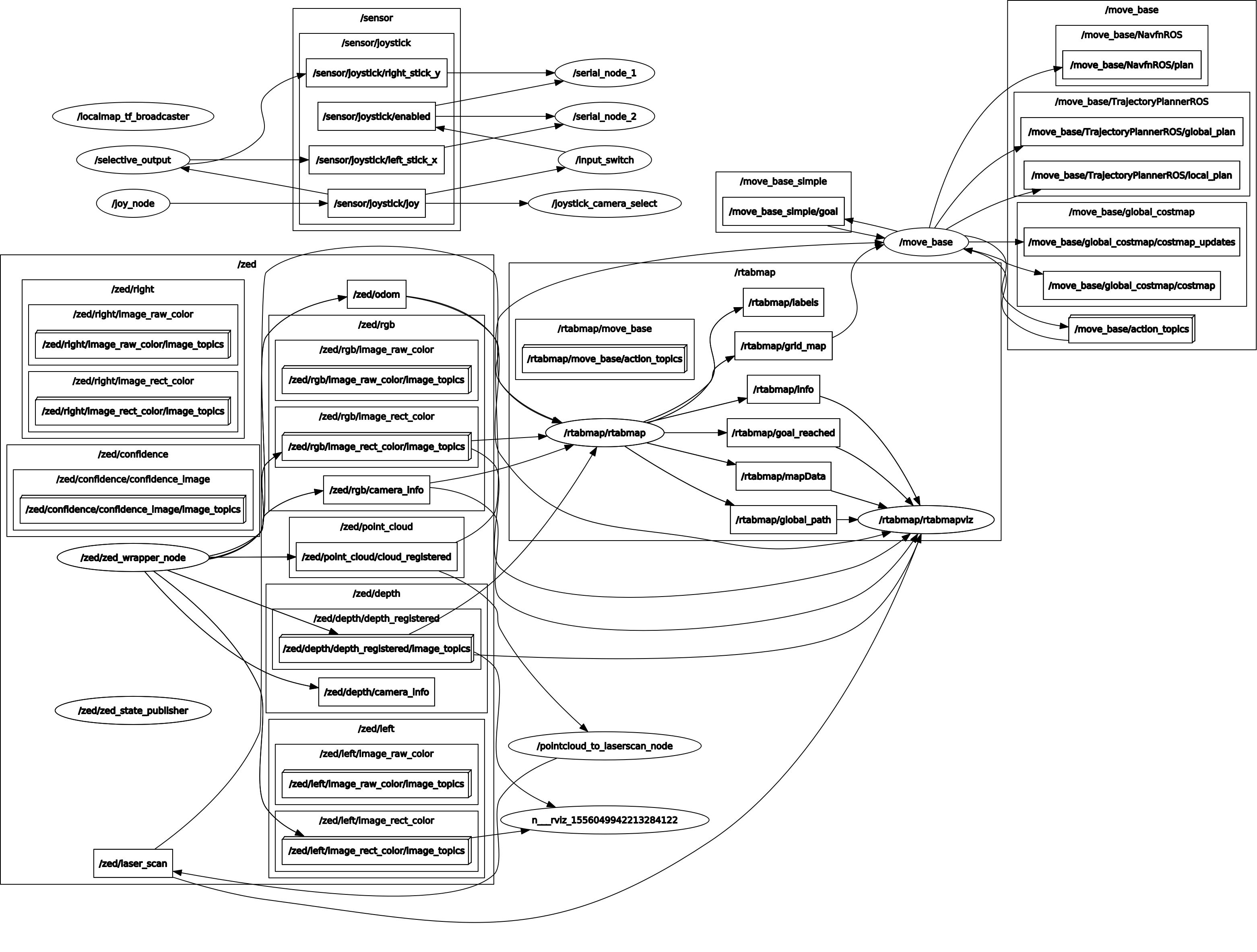

The self-driving vehicle uses a modified version of the ROS navigation stack. The flowchart above illustrates the mapping and path planning process. First, I create a detailed map of the environment with rtabmap_ros. With that global map, I use the localization feature of rtabmap_ros together with the odometry from the ZED camera system to localize the vehicle and plan paths.
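To make the localization step more concrete, here is a minimal sketch of how a map-level correction and an odometry estimate combine into one vehicle pose. It assumes the usual ROS frame convention (`map` -> `odom` -> `base_link`); that rtabmap_ros supplies the first transform and the ZED odometry the second is an assumption about this setup, and the `compose` helper and the numbers are purely illustrative.

```python
import math


def compose(parent_to_a, a_to_b):
    """Chain two 2D poses (x, y, theta) to get the pose of b in the parent frame."""
    ax, ay, atheta = parent_to_a
    bx, by, btheta = a_to_b
    # Rotate b's offset into the parent frame, then translate by a's position.
    x = ax + math.cos(atheta) * bx - math.sin(atheta) * by
    y = ay + math.sin(atheta) * bx + math.cos(atheta) * by
    # Wrap the summed heading into (-pi, pi].
    theta = math.atan2(math.sin(atheta + btheta), math.cos(atheta + btheta))
    return x, y, theta


# Hypothetical values: a localization correction (map -> odom) and
# an odometry reading (odom -> base_link).
map_to_odom = (1.0, 2.0, math.pi / 2)
odom_to_base = (1.0, 0.0, 0.0)

# Pose of the vehicle in the global map frame.
map_to_base = compose(map_to_odom, odom_to_base)
print(map_to_base)
```

In a running system, tf performs this chaining automatically. The sketch only shows why a smooth but drifting odometry source and an occasional map-level correction complement each other.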

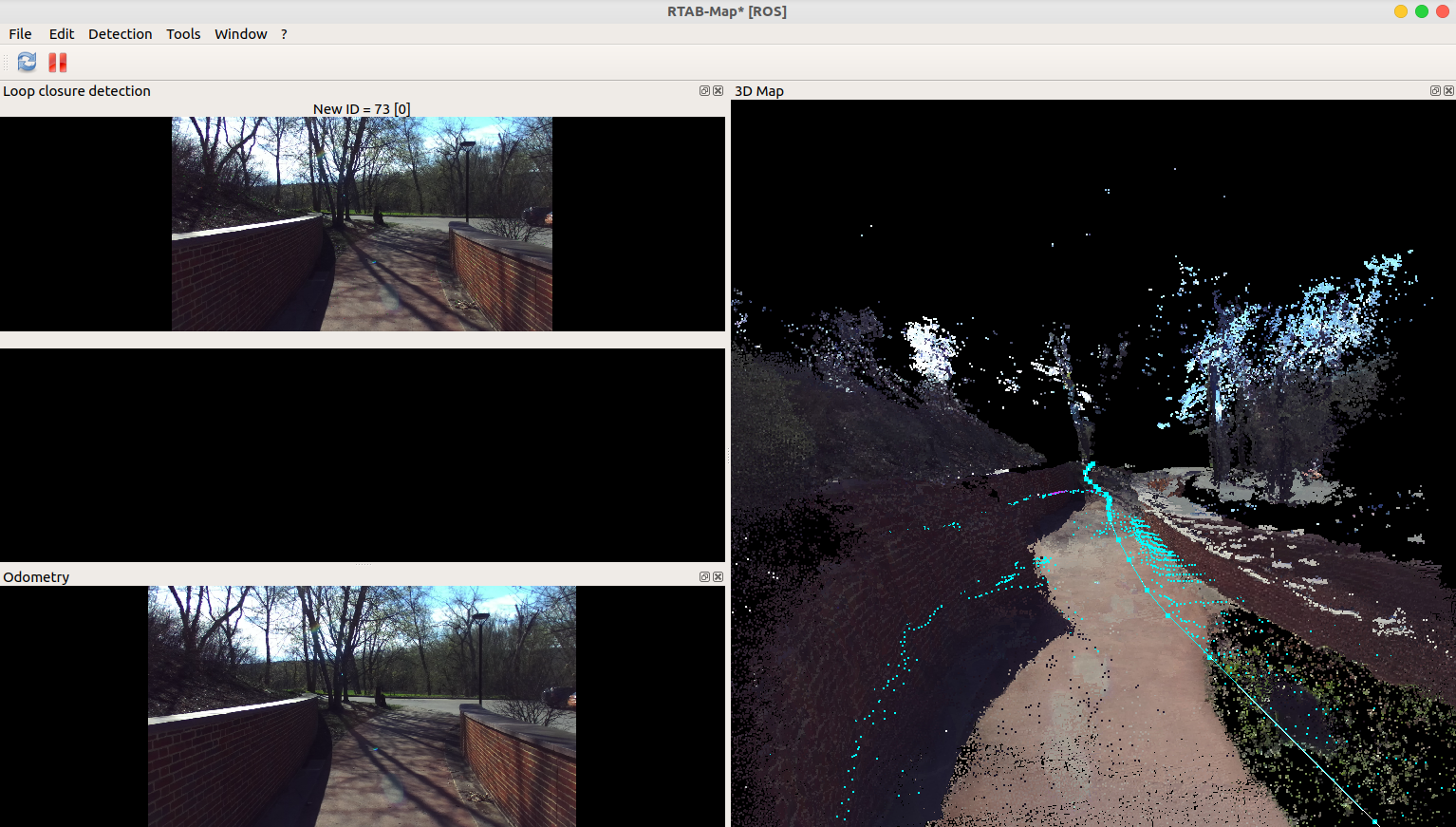

RTAB-Map (Real-Time Appearance-Based Mapping) allows me to construct a global map of the environment. RTAB-Map is also used to initialize the location of the vehicle. For more information on the mapping package, please check out this .launch file or the RTAB-Map ROS wiki page.

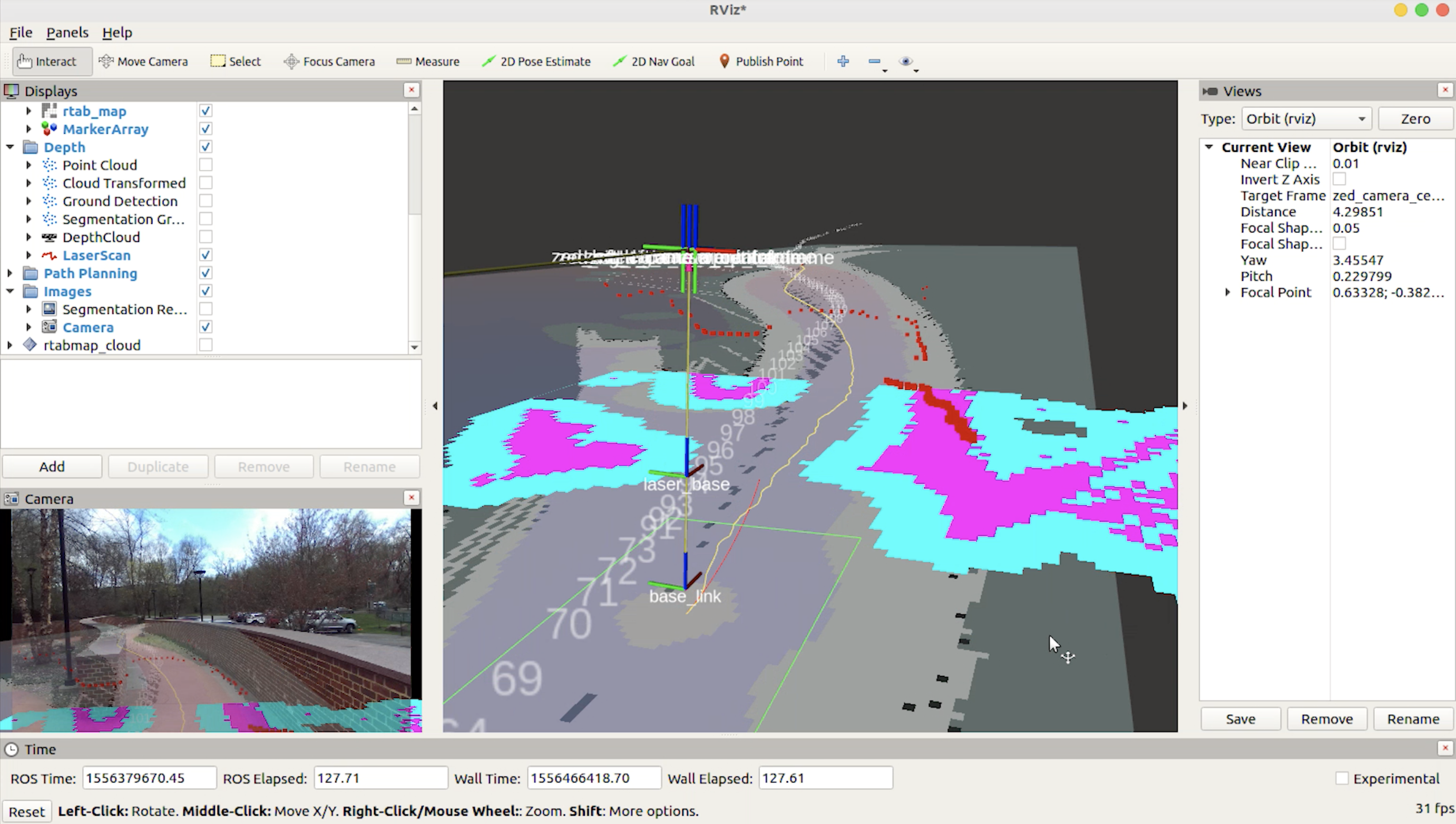

The project uses the move_base node from the navigation stack. The image below shows the costmap (in blue and purple) and the global occupancy grid (in black and gray). move_base also plans the local and global paths. Global paths are shown in green and yellow below. You can find the YAML files here.
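For intuition about the costmap colors, the sketch below reproduces the cost decay that the costmap_2d inflation layer documents: cells touching an obstacle are lethal, cells within the inscribed radius are near-lethal, and cost falls off exponentially beyond that. Whether this project's YAML files enable the inflation layer is an assumption, and the radii and scaling factor here are hypothetical, not taken from those files.

```python
import math

# Cost constants used by costmap_2d.
LETHAL_OBSTACLE = 254
INSCRIBED_INFLATED_OBSTACLE = 253


def inflation_cost(distance, inscribed_radius, inflation_radius, cost_scaling_factor):
    """Cell cost as a function of distance (m) to the nearest obstacle."""
    if distance <= 0.0:
        return LETHAL_OBSTACLE
    if distance <= inscribed_radius:
        return INSCRIBED_INFLATED_OBSTACLE
    if distance > inflation_radius:
        return 0  # outside the inflated region
    # Exponential decay starting just below the inscribed cost.
    factor = math.exp(-cost_scaling_factor * (distance - inscribed_radius))
    return int((INSCRIBED_INFLATED_OBSTACLE - 1) * factor)


# Hypothetical parameters for a golf-cart-sized footprint.
for d in (0.0, 0.3, 0.6, 1.5, 3.0):
    print(d, inflation_cost(d, inscribed_radius=0.5, inflation_radius=2.0,
                            cost_scaling_factor=3.0))
```

A larger `cost_scaling_factor` makes the cost drop off faster, so planned paths are allowed to pass closer to obstacles.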

The move_base node publishes velocity commands on the /cmd_vel topic, which are processed and sent directly to the vehicle. Two Arduinos on the golf cart control steering, acceleration, and braking.
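As a rough illustration of what "processing" a /cmd_vel command can involve on a car-like vehicle, the sketch below converts a linear and angular velocity into a speed and a steering angle using the standard bicycle-model relation. This is not necessarily the conversion used on the golf cart; the function name, wheelbase, and steering limit are hypothetical placeholders.

```python
import math

WHEELBASE_M = 1.65                     # hypothetical wheelbase, in meters
MAX_STEERING_RAD = math.radians(30.0)  # hypothetical steering limit


def twist_to_ackermann(linear_x, angular_z,
                       wheelbase=WHEELBASE_M, max_steering=MAX_STEERING_RAD):
    """Map a Twist-style (v, omega) pair to (speed, steering angle in radians)."""
    if abs(linear_x) < 1e-3:
        # A car-like vehicle cannot rotate in place, so command a stop.
        return 0.0, 0.0
    # Bicycle model: tan(steering) = wheelbase * omega / v.
    steering = math.atan(wheelbase * angular_z / linear_x)
    # Clamp to the mechanical limit of the steering system.
    steering = max(-max_steering, min(max_steering, steering))
    return linear_x, steering


speed, steering = twist_to_ackermann(2.0, 0.3)
print(speed, math.degrees(steering))
```

In a ROS node, a function like this would typically be called from the /cmd_vel subscriber callback, and the resulting values forwarded to the microcontrollers.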

If you are interested in the detailed development process of this project, you can visit Neil's blog at neilnie.com to find out more. Neil will keep you posted on all of the club's latest developments.