diff --git a/.github/workflows/cluster-it.yml b/.github/workflows/cluster-it.yml

new file mode 100644

index 000000000000..323bb3182936

--- /dev/null

+++ b/.github/workflows/cluster-it.yml

@@ -0,0 +1,79 @@

+name: New Cluster IT

+

+on:

+ push:

+ branches:

+ - master

+ paths-ignore:

+ - 'docs/**'

+ pull_request:

+ branches:

+ - master

+ paths-ignore:

+ - 'docs/**'

+ # allow running the action manually:

+ workflow_dispatch:

+

+concurrency:

+ group: ${{ github.workflow }}-${{ github.ref }}

+ cancel-in-progress: true

+

+env:

+ MAVEN_OPTS: -Dhttp.keepAlive=false -Dmaven.wagon.http.pool=false -Dmaven.wagon.http.retryHandler.class=standard -Dmaven.wagon.http.retryHandler.count=3

+

+jobs:

+ ClusterIT:

+ strategy:

+ fail-fast: false

+ max-parallel: 20

+ matrix:

+ java: [ 8, 11, 17 ]

+ os: [ ubuntu-latest, macos-latest, windows-latest ]

+ runs-on: ${{ matrix.os }}

+

+ steps:

+ - uses: actions/checkout@v2

+ - name: Set up JDK ${{ matrix.java }}

+ uses: actions/setup-java@v1

+ with:

+ java-version: ${{ matrix.java }}

+ - name: Cache Maven packages

+ uses: actions/cache@v2

+ with:

+ path: ~/.m2

+ key: ${{ runner.os }}-m2-${{ hashFiles('**/pom.xml') }}

+ restore-keys: ${{ runner.os }}-m2-

+ - name: Check Apache Rat

+ run: mvn -B apache-rat:check -P site -P code-coverage

+ - name: Adjust network dynamic TCP/UDP port range

+ if: ${{ runner.os == 'Windows' }}

+ shell: pwsh

+ run: |

+ netsh int ipv4 set dynamicport tcp start=32768 num=32768

+ netsh int ipv4 set dynamicport udp start=32768 num=32768

+ netsh int ipv6 set dynamicport tcp start=32768 num=32768

+ netsh int ipv6 set dynamicport udp start=32768 num=32768

+ - name: Adjust Linux kernel somaxconn

+ if: ${{ runner.os == 'Linux' }}

+ shell: bash

+ run: sudo sysctl -w net.core.somaxconn=65535

+ - name: Adjust Mac kernel somaxconn

+ if: ${{ runner.os == 'macOS' }}

+ shell: bash

+ run: sudo sysctl -w kern.ipc.somaxconn=65535

+ - name: IT/UT Test

+ shell: bash

+ # client-cpp is not compiled here to save time; it is tested in client.yml

+ # influxdb-protocol is skipped because it is tested separately in influxdb-protocol.yml

+ run: |

+ mvn clean verify \

+ -DskipUTs \

+ -pl integration-test \

+ -am -PClusterIT

+ - name: Upload Artifact

+ if: failure()

+ uses: actions/upload-artifact@v3

+ with:

+ name: cluster-log-java${{ matrix.java }}-${{ runner.os }}

+ path: integration-test/target/cluster-logs

+ retention-days: 1
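
The Windows step in this workflow sets the dynamic (ephemeral) port range with `start=32768 num=32768`. The minimal Python sketch below checks the arithmetic. It assumes that `num` counts consecutive ports beginning at `start`, inclusive. Under that assumption the range ends exactly at 65535, the highest valid port number. `dynamic_port_range` is a hypothetical helper used only for illustration.

```python
from typing import Tuple

MAX_PORT = 65535  # highest valid TCP/UDP port number


def dynamic_port_range(start: int, num: int) -> Tuple[int, int]:
    """Return the inclusive (first, last) ports of a dynamic range,
    assuming the range covers `num` ports beginning at `start`."""
    if start < 1 or num < 1:
        raise ValueError("start and num must be positive")
    last = start + num - 1
    if last > MAX_PORT:
        raise ValueError(f"range would end at {last}, above {MAX_PORT}")
    return start, last


# Values passed to netsh by the Windows step of the workflow.
first, last = dynamic_port_range(32768, 32768)
print(f"dynamic ports: {first}-{last}")  # dynamic ports: 32768-65535
```

Any `start`/`num` pair whose end would pass 65535 raises `ValueError` in this sketch.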

diff --git a/.github/workflows/cluster.yml b/.github/workflows/cluster.yml

deleted file mode 100644

index e6c3f48ec386..000000000000

--- a/.github/workflows/cluster.yml

+++ /dev/null

@@ -1,52 +0,0 @@

-name: Cluster Test

-

-on:

- push:

- branches:

- - test_cluster

- paths-ignore:

- - 'docs/**'

- pull_request:

- branches:

- - test_cluster

- paths-ignore:

- - 'docs/**'

- # allow manually run the action:

- workflow_dispatch:

-

-concurrency:

- group: ${{ github.workflow }}-${{ github.ref }}

- cancel-in-progress: true

-

-env:

- MAVEN_OPTS: -Dhttp.keepAlive=false -Dmaven.wagon.http.pool=false -Dmaven.wagon.http.retryHandler.class=standard -Dmaven.wagon.http.retryHandler.count=3

-

-jobs:

- unix:

- strategy:

- fail-fast: false

- max-parallel: 20

- matrix:

- java: [ 8 ]

- os: [ ubuntu-latest ]

- runs-on: ${{ matrix.os}}

-

- steps:

- - uses: actions/checkout@v2

- - name: Set up JDK ${{ matrix.java }}

- uses: actions/setup-java@v1

- with:

- java-version: ${{ matrix.java }}

- - name: Cache Maven packages

- uses: actions/cache@v2

- with:

- path: ~/.m2

- key: ${{ runner.os }}-m2-${{ hashFiles('**/pom.xml') }}

- restore-keys: ${{ runner.os }}-m2-

- - name: Check Apache Rat

- run: mvn -B apache-rat:check -P site -P code-coverage

- - name: IT/UT Test

- shell: bash

- # we do not compile client-cpp for saving time, it is tested in client.yml

- # we can skip influxdb-protocol because it has been tested separately in influxdb-protocol.yml

- run: mvn -B clean verify -Dsession.test.skip=true -Diotdb.test.skip=true -Dcluster.test.skip=true -Dtsfile.test.skip=true -pl integration -am -PCluster

diff --git a/.github/workflows/sonar-coveralls.yml b/.github/workflows/sonar-coveralls.yml

index 84b30f0d0b97..8b8450789b47 100644

--- a/.github/workflows/sonar-coveralls.yml

+++ b/.github/workflows/sonar-coveralls.yml

@@ -70,4 +70,4 @@ jobs:

-Dsonar.projectKey=apache_incubator-iotdb \

-Dsonar.host.url=https://sonarcloud.io \

-Dsonar.login=${{ secrets.SONARCLOUD_TOKEN }} \

- -DskipTests -pl '!distribution' -P '!testcontainer' -am

+ -DskipTests -pl '!distribution,!integration-test' -P '!testcontainer' -am

diff --git a/.github/workflows/standalone-it-for-mpp.yml b/.github/workflows/standalone-it-for-mpp.yml

new file mode 100644

index 000000000000..6617292221ff

--- /dev/null

+++ b/.github/workflows/standalone-it-for-mpp.yml

@@ -0,0 +1,81 @@

+name: New Standalone IT

+

+on:

+ push:

+ branches:

+ - master

+ paths-ignore:

+ - 'docs/**'

+ pull_request:

+ branches:

+ - master

+ paths-ignore:

+ - 'docs/**'

+ # allow running the action manually:

+ workflow_dispatch:

+

+concurrency:

+ group: ${{ github.workflow }}-${{ github.ref }}

+ cancel-in-progress: true

+

+env:

+ MAVEN_OPTS: -Dhttp.keepAlive=false -Dmaven.wagon.http.pool=false -Dmaven.wagon.http.retryHandler.class=standard -Dmaven.wagon.http.retryHandler.count=3

+

+jobs:

+ StandaloneMppIT:

+ strategy:

+ fail-fast: false

+ max-parallel: 20

+ matrix:

+ java: [ 8, 11, 17 ]

+ os: [ ubuntu-latest, macos-latest, windows-latest ]

+ runs-on: ${{ matrix.os }}

+

+ steps:

+ - uses: actions/checkout@v2

+ - name: Set up JDK ${{ matrix.java }}

+ uses: actions/setup-java@v1

+ with:

+ java-version: ${{ matrix.java }}

+ - name: Cache Maven packages

+ uses: actions/cache@v2

+ with:

+ path: ~/.m2

+ key: ${{ runner.os }}-m2-${{ hashFiles('**/pom.xml') }}

+ restore-keys: ${{ runner.os }}-m2-

+ - name: Check Apache Rat

+ run: mvn -B apache-rat:check -P site -P code-coverage

+ - name: Adjust network dynamic TCP/UDP port range

+ if: ${{ runner.os == 'Windows' }}

+ shell: pwsh

+ run: |

+ netsh int ipv4 set dynamicport tcp start=32768 num=32768

+ netsh int ipv4 set dynamicport udp start=32768 num=32768

+ netsh int ipv6 set dynamicport tcp start=32768 num=32768

+ netsh int ipv6 set dynamicport udp start=32768 num=32768

+ - name: Adjust Linux kernel somaxconn

+ if: ${{ runner.os == 'Linux' }}

+ shell: bash

+ run: sudo sysctl -w net.core.somaxconn=65535

+ - name: Adjust Mac kernel somaxconn

+ if: ${{ runner.os == 'macOS' }}

+ shell: bash

+ run: sudo sysctl -w kern.ipc.somaxconn=65535

+ - name: IT/UT Test

+ shell: bash

+ # client-cpp is not compiled here to save time; it is tested in client.yml

+ # influxdb-protocol is skipped because it is tested separately in influxdb-protocol.yml

+ run: |

+ mvn clean verify \

+ -DskipUTs \

+ -DintegrationTest.forkCount=2 \

+ -pl integration-test \

+ -am -PLocalStandaloneOnMppIT

+ - name: Upload Artifact

+ if: failure()

+ uses: actions/upload-artifact@v3

+ with:

+ name: standalone-log-java${{ matrix.java }}-${{ runner.os }}

+ path: integration-test/target/cluster-logs

+ retention-days: 1

+

diff --git a/.gitignore b/.gitignore

index 68d7afef1865..6b90b2f1f5c6 100644

--- a/.gitignore

+++ b/.gitignore

@@ -40,6 +40,7 @@ tsfile-jdbc/src/main/resources/output/queryRes.csv

*.gz

*.tar.gz

*.tar

+*.tokens

#src/test/resources/logback.xml

### CSV ###

diff --git a/LICENSE b/LICENSE

index 2cabd6a47c68..6c45bf8f5d7c 100644

--- a/LICENSE

+++ b/LICENSE

@@ -237,10 +237,40 @@ License: http://www.apache.org/licenses/LICENSE-2.0

--------------------------------------------------------------------------------

+The following files include code modified from Apache HBase project.

+

+./confignode/src/main/java/org/apache/iotdb/procedure/Procedure.java

+./confignode/src/main/java/org/apache/iotdb/procedure/ProcedureExecutor.java

+./confignode/src/main/java/org/apache/iotdb/procedure/StateMachineProcedure.java

+./confignode/src/main/java/org/apache/iotdb/procedure/TimeoutExecutorThread.java

+./confignode/src/main/java/org/apache/iotdb/procedure/StoppableThread.java

+

+Copyright: The Apache Software Foundation

+Project page: https://hbase.apache.org

+License: http://www.apache.org/licenses/LICENSE-2.0

+

+--------------------------------------------------------------------------------

+

The following files include code modified from Eclipse Collections project.

./tsfile/src/main/java/org/apache/iotdb/tsfile/utils/ByteArrayList.java

Copyright: 2021 Goldman Sachs

Project page: https://www.eclipse.org/collections

-License: https://github.com/eclipse/eclipse-collections/blob/master/LICENSE-EDL-1.0.txt

\ No newline at end of file

+License: https://github.com/eclipse/eclipse-collections/blob/master/LICENSE-EDL-1.0.txt

+

+--------------------------------------------------------------------------------

+

+The following files include code modified from Micrometer project.

+

+./metrics/interface/src/main/java/org/apache/iotdb/metrics/predefined/jvm/JvmClassLoaderMetrics

+./metrics/interface/src/main/java/org/apache/iotdb/metrics/predefined/jvm/JvmCompileMetrics

+./metrics/interface/src/main/java/org/apache/iotdb/metrics/predefined/jvm/JvmGcMetrics

+./metrics/interface/src/main/java/org/apache/iotdb/metrics/predefined/jvm/JvmMemoryMetrics

+./metrics/interface/src/main/java/org/apache/iotdb/metrics/predefined/jvm/JvmThreadMetrics

+./metrics/interface/src/main/java/org/apache/iotdb/metrics/predefined/logback/LogbackMetrics

+./metrics/interface/src/main/java/org/apache/iotdb/metrics/utils/JvmUtils

+

+Copyright: 2017 VMware

+Project page: https://github.com/micrometer-metrics/micrometer

+License: https://github.com/micrometer-metrics/micrometer/blob/main/LICENSE

\ No newline at end of file

diff --git a/README.md b/README.md

index 0ccc8d0dc834..3b05b9d6deef 100644

--- a/README.md

+++ b/README.md

@@ -175,26 +175,12 @@ and "`antlr/target/generated-sources/antlr4`" need to be added to sources roots

**In IDEA, you just need to right click on the root project name and choose "`Maven->Reload Project`" after

you run `mvn package` successfully.**

-#### Spotless problem

-**NOTE**: IF you are using JDK16+, you have to create a file called `jvm.config`,

-put it under `.mvn/`, before you use `spotless:apply`. The file contains the following content:

-```

---add-exports jdk.compiler/com.sun.tools.javac.api=ALL-UNNAMED

---add-exports jdk.compiler/com.sun.tools.javac.file=ALL-UNNAMED

---add-exports jdk.compiler/com.sun.tools.javac.parser=ALL-UNNAMED

---add-exports jdk.compiler/com.sun.tools.javac.tree=ALL-UNNAMED

---add-exports jdk.compiler/com.sun.tools.javac.util=ALL-UNNAMED

-```

-

-This is [an issue of Spotless](https://github.com/diffplug/spotless/issues/834),

-Once the issue is fixed, we can remove this file.

-

### Configurations

configuration files are under "conf" folder

- * environment config module (`iotdb-env.bat`, `iotdb-env.sh`),

- * system config module (`iotdb-engine.properties`)

+ * environment config module (`datanode-env.bat`, `datanode-env.sh`),

+ * system config module (`iotdb-datanode.properties`)

* log config module (`logback.xml`).

For more information, please see [Config Manual](https://iotdb.apache.org/UserGuide/Master/Reference/Config-Manual.html).

diff --git a/README_ZH.md b/README_ZH.md

index 9b50c1d13787..de98411dd7e7 100644

--- a/README_ZH.md

+++ b/README_ZH.md

@@ -161,24 +161,11 @@ git checkout vx.x.x

**In IDEA: after the Maven command above has compiled successfully, right-click the project name and choose "`Maven->Reload project`".**

-#### Spotless problem (JDK16+)

-**NOTE**: If you are using JDK16+ and want to run `spotless:apply` or `spotless:check`,

-you need to create a file named `jvm.config` under the `.mvn/` folder with the following content:

-```

---add-exports jdk.compiler/com.sun.tools.javac.api=ALL-UNNAMED

---add-exports jdk.compiler/com.sun.tools.javac.file=ALL-UNNAMED

---add-exports jdk.compiler/com.sun.tools.javac.parser=ALL-UNNAMED

---add-exports jdk.compiler/com.sun.tools.javac.tree=ALL-UNNAMED

---add-exports jdk.compiler/com.sun.tools.javac.util=ALL-UNNAMED

-```

-This is an [issue](https://github.com/diffplug/spotless/issues/834) in google-java-format, which spotless depends on,

-and it may be fixed upstream soon.

-

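### Configuration

Configuration files are under the "conf" folder

-* environment config module (`iotdb-env.bat`, `iotdb-env.sh`),

-* system config module (`iotdb-engine.properties`)

+* environment config module (`datanode-env.bat`, `datanode-env.sh`),

+* system config module (`iotdb-datanode.properties`)

* log config module (`logback.xml`).

For more information, please see [Configuration Parameters](https://iotdb.apache.org/zh/UserGuide/Master/Reference/Config-Manual.html).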

diff --git a/antlr/pom.xml b/antlr/pom.xml

index 64015796385e..a66513db4c64 100644

--- a/antlr/pom.xml

+++ b/antlr/pom.xml

@@ -47,6 +47,7 @@

falsetrue

+ src/main/antlr4/org/apache/iotdb/db/qp/sqlantlr4

diff --git a/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/IdentifierParser.g4 b/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/IdentifierParser.g4

new file mode 100644

index 000000000000..4387f7c22565

--- /dev/null

+++ b/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/IdentifierParser.g4

@@ -0,0 +1,176 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+parser grammar IdentifierParser;

+

+options { tokenVocab=SqlLexer; }

+

+identifier

+ : keyWords

+ | ID

+ | QUOTED_ID

+ ;

+

+

+// List of keywords that can also be used as identifiers. New keywords that can be used as identifiers should be added to this list. For example, 'not' is a keyword that cannot be used as an identifier in a node name, so it is not listed here.

+

+keyWords

+ : ADD

+ | AFTER

+ | ALIAS

+ | ALIGN

+ | ALIGNED

+ | ALL

+ | ALTER

+ | ANY

+ | APPEND

+ | AS

+ | ASC

+ | ATTRIBUTES

+ | AUTOREGISTER

+ | BEFORE

+ | BEGIN

+ | BOUNDARY

+ | BY

+ | CACHE

+ | CHILD

+ | CLEAR

+ | CLUSTER

+ | CONCAT

+ | CONFIGURATION

+ | CONTINUOUS

+ | COUNT

+ | CONTAIN

+ | CQ

+ | CQS

+ | CREATE

+ | DATA

+ | DEBUG

+ | DELETE

+ | DESC

+ | DESCRIBE

+ | DEVICE

+ | DEVICES

+ | DISABLE

+ | DROP

+ | END

+ | EVERY

+ | EXPLAIN

+ | FILL

+ | FLUSH

+ | FOR

+ | FROM

+ | FULL

+ | FUNCTION

+ | FUNCTIONS

+ | GLOBAL

+ | GRANT

+ | GROUP

+ | INDEX

+ | INFO

+ | INSERT

+ | INTO

+ | KILL

+ | LABEL

+ | LAST

+ | LATEST

+ | LEVEL

+ | LIKE

+ | LIMIT

+ | LINEAR

+ | LINK

+ | LIST

+ | LOAD

+ | LOCAL

+ | LOCK

+ | MERGE

+ | METADATA

+ | NODES

+ | NOW

+ | OF

+ | OFF

+ | OFFSET

+ | ON

+ | ORDER

+ | PARTITION

+ | PASSWORD

+ | PATHS

+ | PIPE

+ | PIPES

+ | PIPESERVER

+ | PIPESINK

+ | PIPESINKS

+ | PIPESINKTYPE

+ | PREVIOUS

+ | PREVIOUSUNTILLAST

+ | PRIVILEGES

+ | PROCESSLIST

+ | PROPERTY

+ | PRUNE

+ | QUERIES

+ | QUERY

+ | READONLY

+ | REGEXP

+ | REGIONS

+ | REMOVE

+ | RENAME

+ | RESAMPLE

+ | RESOURCE

+ | REVOKE

+ | ROLE

+ | SCHEMA

+ | SELECT

+ | SET

+ | SETTLE

+ | SGLEVEL

+ | SHOW

+ | SLIMIT

+ | SOFFSET

+ | STORAGE

+ | START

+ | STOP

+ | SYSTEM

+ | TAGS

+ | TASK

+ | TEMPLATE

+ | TEMPLATES

+ | TIMESERIES

+ | TO

+ | TOLERANCE

+ | TOP

+ | TRACING

+ | TRIGGER

+ | TRIGGERS

+ | TTL

+ | UNLINK

+ | UNLOAD

+ | UNSET

+ | UPDATE

+ | UPSERT

+ | USER

+ | USING

+ | VALUES

+ | VERIFY

+ | VERSION

+ | WHERE

+ | WITH

+ | WITHOUT

+ | WRITABLE

+ | PRIVILEGE_VALUE

+ ;

\ No newline at end of file

diff --git a/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/InfluxDBSqlParser.g4 b/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/InfluxDBSqlParser.g4

index 0ffdcb9f2288..381f3e297977 100644

--- a/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/InfluxDBSqlParser.g4

+++ b/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/InfluxDBSqlParser.g4

@@ -21,6 +21,8 @@ parser grammar InfluxDBSqlParser;

options { tokenVocab=SqlLexer; }

+import IdentifierParser;

+

singleStatement

: statement SEMI? EOF

;

@@ -71,20 +73,12 @@ fromClause

nodeName

: STAR

- | ID

- | QUOTED_ID

+ | identifier

| LAST

| COUNT

| DEVICE

;

-// Identifier

-

-identifier

- : ID

- | QUOTED_ID

- ;

-

// Constant & Literal

@@ -126,4 +120,4 @@ realLiteral

datetimeLiteral

: DATETIME_LITERAL

| NOW LR_BRACKET RR_BRACKET

- ;

+ ;

\ No newline at end of file

diff --git a/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/IoTDBSqlParser.g4 b/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/IoTDBSqlParser.g4

index 56ca7bd9b576..1dbb03f7cecf 100644

--- a/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/IoTDBSqlParser.g4

+++ b/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/IoTDBSqlParser.g4

@@ -21,6 +21,7 @@ parser grammar IoTDBSqlParser;

options { tokenVocab=SqlLexer; }

+import IdentifierParser;

/**

* 1. Top Level Description

@@ -42,7 +43,7 @@ ddlStatement

| dropFunction | dropTrigger | dropContinuousQuery | dropSchemaTemplate

| setTTL | unsetTTL | startTrigger | stopTrigger | setSchemaTemplate | unsetSchemaTemplate

| showStorageGroup | showDevices | showTimeseries | showChildPaths | showChildNodes

- | showFunctions | showTriggers | showContinuousQueries | showTTL | showAllTTL

+ | showFunctions | showTriggers | showContinuousQueries | showTTL | showAllTTL | showCluster | showRegion

| showSchemaTemplates | showNodesInSchemaTemplate

| showPathsUsingSchemaTemplate | showPathsSetSchemaTemplate

| countStorageGroup | countDevices | countTimeseries | countNodes

@@ -59,7 +60,7 @@ dclStatement

;

utilityStatement

- : merge | fullMerge | flush | clearCache | settle

+ : merge | fullMerge | flush | clearCache | settle | explain

| setSystemStatus | showVersion | showFlushInfo | showLockInfo | showQueryResource

| showQueryProcesslist | killQuery | grantWatermarkEmbedding | revokeWatermarkEmbedding

| loadConfiguration | loadTimeseries | loadFile | removeFile | unloadFile;

@@ -75,15 +76,19 @@ syncStatement

// Create Storage Group

setStorageGroup

- : SET STORAGE GROUP TO prefixPath (WITH storageGroupAttributeClause (COMMA storageGroupAttributeClause)*)?

+ : SET STORAGE GROUP TO prefixPath storageGroupAttributesClause?

;

-storageGroupAttributeClause

- : (TTL | SCHEMA_REPLICATION_FACTOR | DATA_REPLICATION_FACTOR | TIME_PARTITION_INTERVAL) '=' INTEGER_LITERAL

+createStorageGroup

+ : CREATE STORAGE GROUP prefixPath storageGroupAttributesClause?

;

-createStorageGroup

- : CREATE STORAGE GROUP prefixPath

+storageGroupAttributesClause

+ : WITH storageGroupAttributeClause (COMMA storageGroupAttributeClause)*

+ ;

+

+storageGroupAttributeClause

+ : (TTL | SCHEMA_REPLICATION_FACTOR | DATA_REPLICATION_FACTOR | TIME_PARTITION_INTERVAL) '=' INTEGER_LITERAL

;

// Create Timeseries

@@ -114,7 +119,11 @@ createTimeseriesOfSchemaTemplate

// Create Function

createFunction

- : CREATE FUNCTION udfName=identifier AS className=STRING_LITERAL

+ : CREATE FUNCTION udfName=identifier AS className=STRING_LITERAL (USING uri (COMMA uri)*)?

+ ;

+

+uri

+ : STRING_LITERAL

;

// Create Trigger

@@ -160,7 +169,7 @@ alterTimeseries

alterClause

: RENAME beforeName=attributeKey TO currentName=attributeKey

| SET attributePair (COMMA attributePair)*

- | DROP STRING_LITERAL (COMMA STRING_LITERAL)*

+ | DROP attributeKey (COMMA attributeKey)*

| ADD TAGS attributePair (COMMA attributePair)*

| ADD ATTRIBUTES attributePair (COMMA attributePair)*

| UPSERT aliasClause? tagClause? attributeClause?

@@ -294,6 +303,16 @@ showAllTTL

: SHOW ALL TTL

;

+// Show Cluster

+showCluster

+ : SHOW CLUSTER

+ ;

+

+// Show Region

+showRegion

+ : SHOW (SCHEMA | DATA)? REGIONS

+ ;

+

// Show Schema Template

showSchemaTemplates

: SHOW SCHEMA? TEMPLATES

@@ -411,10 +430,10 @@ withoutNullClause

;

oldTypeClause

- : (dataType=DATATYPE_VALUE | ALL) LS_BRACKET linearClause RS_BRACKET

- | (dataType=DATATYPE_VALUE | ALL) LS_BRACKET previousClause RS_BRACKET

- | (dataType=DATATYPE_VALUE | ALL) LS_BRACKET specificValueClause RS_BRACKET

- | (dataType=DATATYPE_VALUE | ALL) LS_BRACKET previousUntilLastClause RS_BRACKET

+ : (ALL | dataType=attributeValue) LS_BRACKET linearClause RS_BRACKET

+ | (ALL | dataType=attributeValue) LS_BRACKET previousClause RS_BRACKET

+ | (ALL | dataType=attributeValue) LS_BRACKET specificValueClause RS_BRACKET

+ | (ALL | dataType=attributeValue) LS_BRACKET previousUntilLastClause RS_BRACKET

;

linearClause

@@ -491,7 +510,7 @@ alterUser

// Grant User Privileges

grantUser

- : GRANT USER userName=identifier PRIVILEGES privileges ON prefixPath

+ : GRANT USER userName=identifier PRIVILEGES privileges (ON prefixPath)?

;

// Grant Role Privileges

@@ -506,7 +525,7 @@ grantRoleToUser

// Revoke User Privileges

revokeUser

- : REVOKE USER userName=identifier PRIVILEGES privileges ON prefixPath

+ : REVOKE USER userName=identifier PRIVILEGES privileges (ON prefixPath)?

;

// Revoke Role Privileges

@@ -600,7 +619,7 @@ fullMerge

// Flush

flush

- : FLUSH prefixPath? (COMMA prefixPath)* BOOLEAN_LITERAL?

+ : FLUSH prefixPath? (COMMA prefixPath)* BOOLEAN_LITERAL? (ON (LOCAL | CLUSTER))?

;

// Clear Cache

@@ -613,6 +632,11 @@ settle

: SETTLE (prefixPath|tsFilePath=STRING_LITERAL)

;

+// Explain

+explain

+ : EXPLAIN selectStatement

+ ;

+

// Set System To ReadOnly/Writable

setSystemStatus

: SET SYSTEM TO (READONLY|WRITABLE)

@@ -789,14 +813,6 @@ wildcard

;

-// Identifier

-

-identifier

- : ID

- | QUOTED_ID

- ;

-

-

// Constant & Literal

constant

@@ -845,6 +861,7 @@ expression

| leftExpression=expression (PLUS | MINUS) rightExpression=expression

| leftExpression=expression (OPERATOR_GT | OPERATOR_GTE | OPERATOR_LT | OPERATOR_LTE | OPERATOR_SEQ | OPERATOR_DEQ | OPERATOR_NEQ) rightExpression=expression

| unaryBeforeRegularOrLikeExpression=expression (REGEXP | LIKE) STRING_LITERAL

+ | unaryBeforeIsNullExpression=expression OPERATOR_IS OPERATOR_NOT? NULL_LITERAL

| unaryBeforeInExpression=expression OPERATOR_NOT? (OPERATOR_IN | OPERATOR_CONTAINS) LR_BRACKET constant (COMMA constant)* RR_BRACKET

| leftExpression=expression OPERATOR_AND rightExpression=expression

| leftExpression=expression OPERATOR_OR rightExpression=expression

@@ -886,16 +903,12 @@ fromClause

// Attribute Clause

attributeClauses

- : aliasNodeName? WITH DATATYPE operator_eq dataType=DATATYPE_VALUE

- (COMMA ENCODING operator_eq encoding=ENCODING_VALUE)?

- (COMMA (COMPRESSOR | COMPRESSION) operator_eq compressor=COMPRESSOR_VALUE)?

+ : aliasNodeName? WITH attributeKey operator_eq dataType=attributeValue

(COMMA attributePair)*

tagClause?

attributeClause?

// Simplified version (supported since v0.13)

- | aliasNodeName? WITH? (DATATYPE operator_eq)? dataType=DATATYPE_VALUE

- (ENCODING operator_eq encoding=ENCODING_VALUE)?

- ((COMPRESSOR | COMPRESSION) operator_eq compressor=COMPRESSOR_VALUE)?

+ | aliasNodeName? WITH? (attributeKey operator_eq)? dataType=attributeValue

attributePair*

tagClause?

attributeClause?

@@ -914,7 +927,7 @@ attributeClause

;

attributePair

- : key=attributeKey (OPERATOR_SEQ | OPERATOR_DEQ) value=attributeValue

+ : key=attributeKey operator_eq value=attributeValue

;

attributeKey

diff --git a/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/PathParser.g4 b/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/PathParser.g4

new file mode 100644

index 000000000000..546be58b6eee

--- /dev/null

+++ b/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/PathParser.g4

@@ -0,0 +1,52 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+parser grammar PathParser;

+

+options { tokenVocab=SqlLexer; }

+

+import IdentifierParser;

+

+/**

+ * PartialPath and Path used by Session API and TsFile API should be parsed by Antlr4.

+ */

+

+path

+ : prefixPath EOF

+ | suffixPath EOF

+ ;

+

+prefixPath

+ : ROOT (DOT nodeName)*

+ ;

+

+suffixPath

+ : nodeName (DOT nodeName)*

+ ;

+

+nodeName

+ : wildcard

+ | wildcard? identifier wildcard?

+ | identifier

+ ;

+

+wildcard

+ : STAR

+ | DOUBLE_STAR

+ ;
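
The `PathParser` rules accept either of two path forms:

- a prefix path: `root` followed by dot-separated node names;
- a suffix path: dot-separated node names only.

Each node name must take one of these forms:

- a wildcard (`*` or `**`);
- an identifier;
- an identifier with a wildcard before and/or after it.

The Python sketch below mirrors that structure for illustration only. It is not the ANTLR-generated parser. It makes three assumptions:

- Identifiers use a simplified pattern: ASCII letters, digits, and underscores, or a backquoted name. The real `ID`/`QUOTED_ID` rules are broader, and keywords from `IdentifierParser` also count as identifiers.
- `root` is matched case-insensitively.
- `split_nodes` and `classify_path` are hypothetical names.

```python
import re
from typing import List

# Simplified stand-ins for the lexer rules (assumption, see lead-in).
IDENT = r"(?:[A-Za-z0-9_]+|`(?:``|[^`])+`)"
WILDCARD = r"(?:\*\*|\*)"
# nodeName : wildcard | wildcard? identifier wildcard? | identifier
NODE_NAME = re.compile(rf"{WILDCARD}|{WILDCARD}?{IDENT}{WILDCARD}?")


def split_nodes(path: str) -> List[str]:
    """Split a path on dots that are not inside backquotes."""
    nodes, current, in_quote = [], [], False
    for ch in path:
        if ch == "`":
            # A doubled backquote toggles twice, so it stays inside the quote.
            in_quote = not in_quote
        if ch == "." and not in_quote:
            nodes.append("".join(current))
            current = []
        else:
            current.append(ch)
    nodes.append("".join(current))
    return nodes


def classify_path(path: str) -> str:
    """Return 'prefix' or 'suffix' following the `path` rule, else raise ValueError."""
    nodes = split_nodes(path)
    if nodes[0].lower() == "root":
        kind, rest = "prefix", nodes[1:]
    else:
        kind, rest = "suffix", nodes
    for node in rest:
        if not NODE_NAME.fullmatch(node):
            raise ValueError(f"invalid node name: {node!r}")
    return kind


print(classify_path("root.sg.`a.b`.*"))
print(classify_path("d1.s*"))
```

Inputs such as `root.sg..s1` (empty node) or `root.a**b` (identifier, wildcard, identifier) are rejected. This matches the grammar, which allows at most one wildcard on each side of a single identifier.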

diff --git a/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/SqlLexer.g4 b/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/SqlLexer.g4

index f92fcbba5b4f..7e9b769e880e 100644

--- a/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/SqlLexer.g4

+++ b/antlr/src/main/antlr4/org/apache/iotdb/db/qp/sql/SqlLexer.g4

@@ -32,7 +32,7 @@ WS

/**

- * 2. Keywords

+ * 2. Keywords. New keywords that can be used as identifiers should also be added to IdentifierParser.g4

*/

// Common Keywords

@@ -117,12 +117,8 @@ CLEAR

: C L E A R

;

-COMPRESSION

- : C O M P R E S S I O N

- ;

-

-COMPRESSOR

- : C O M P R E S S O R

+CLUSTER

+ : C L U S T E R

;

CONCAT

@@ -157,8 +153,8 @@ CREATE

: C R E A T E

;

-DATATYPE

- : D A T A T Y P E

+DATA

+ : D A T A

;

DEBUG

@@ -193,10 +189,6 @@ DROP

: D R O P

;

-ENCODING

- : E N C O D I N G

- ;

-

END

: E N D

;

@@ -310,6 +302,10 @@ LOAD

: L O A D

;

+LOCAL

+ : L O C A L

+ ;

+

LOCK

: L O C K

;

@@ -426,6 +422,10 @@ REGEXP

: R E G E X P

;

+REGIONS

+ : R E G I O N S

+ ;

+

REMOVE

: R E M O V E

;

@@ -619,103 +619,6 @@ WRITABLE

;

-// Data Type Keywords

-

-DATATYPE_VALUE

- : BOOLEAN | DOUBLE | FLOAT | INT32 | INT64 | TEXT

- ;

-

-BOOLEAN

- : B O O L E A N

- ;

-

-DOUBLE

- : D O U B L E

- ;

-

-FLOAT

- : F L O A T

- ;

-

-INT32

- : I N T '3' '2'

- ;

-

-INT64

- : I N T '6' '4'

- ;

-

-TEXT

- : T E X T

- ;

-

-

-// Encoding Type Keywords

-

-ENCODING_VALUE

- : DICTIONARY | DIFF | GORILLA | PLAIN | REGULAR | RLE | TS_2DIFF | ZIGZAG | FREQ

- ;

-

-DICTIONARY

- : D I C T I O N A R Y

- ;

-

-DIFF

- : D I F F

- ;

-

-GORILLA

- : G O R I L L A

- ;

-

-PLAIN

- : P L A I N

- ;

-

-REGULAR

- : R E G U L A R

- ;

-

-RLE

- : R L E

- ;

-

-TS_2DIFF

- : T S '_' '2' D I F F

- ;

-

-ZIGZAG

- : Z I G Z A G

- ;

-

-FREQ

- : F R E Q

- ;

-

-

-// Compressor Type Keywords

-

-COMPRESSOR_VALUE

- : GZIP | LZ4 | SNAPPY | UNCOMPRESSED

- ;

-

-GZIP

- : G Z I P

- ;

-

-LZ4

- : L Z '4'

- ;

-

-SNAPPY

- : S N A P P Y

- ;

-

-UNCOMPRESSED

- : U N C O M P R E S S E D

- ;

-

-

// Privileges Keywords

PRIVILEGE_VALUE

@@ -870,6 +773,8 @@ OPERATOR_LT : '<';

OPERATOR_LTE : '<=';

OPERATOR_NEQ : '!=' | '<>';

+OPERATOR_IS : I S;

+

OPERATOR_IN : I N;

OPERATOR_AND

@@ -973,9 +878,8 @@ NAN_LITERAL

: N A N

;

-

/**

- * 6. Identifier

+ * 6. ID

*/

ID

@@ -1005,15 +909,15 @@ fragment CN_CHAR

;

fragment DQUOTA_STRING

- : '"' ( '\\'. | '""' | ~('"'| '\\') )* '"'

+ : '"' ( '\\'. | '""' | ~('"') )* '"'

;

fragment SQUOTA_STRING

- : '\'' ( '\\'. | '\'\'' |~('\''| '\\') )* '\''

+ : '\'' ( '\\'. | '\'\'' |~('\'') )* '\''

;

fragment BQUOTA_STRING

- : '`' ( '\\' ~('`') | '``' | ~('`'| '\\') )* '`'

+ : '`' ( '\\' ~('`') | '``' | ~('`') )* '`'

;
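
The three quoted-string fragments no longer exclude the backslash from the "any other character" alternative. One consequence is that a string whose last character before the closing quote is a backslash, such as `"C:\dir\"`, was rejected before and is accepted now. Escaped quotes such as `\"` work in both versions.

The sketch below shows this with Python regular expressions that approximate the old and new `DQUOTA_STRING` fragments. It is an approximation:

- ANTLR's lexer is not Python's backtracking regex engine.
- The sketch only checks whether a whole, isolated string is a single quoted literal. It ignores how longest-match tokenization interacts with surrounding text.

```python
import re

# Old: '"' ( '\\'. | '""' | ~('"'| '\\') )* '"'
OLD_DQUOTA = re.compile(r'"(?:\\.|""|[^"\\])*"', re.DOTALL)
# New: '"' ( '\\'. | '""' | ~('"') )* '"'
NEW_DQUOTA = re.compile(r'"(?:\\.|""|[^"])*"', re.DOTALL)


def accepts(pattern: re.Pattern, text: str) -> bool:
    """True if the whole text is one quoted string under the given pattern."""
    return pattern.fullmatch(text) is not None


samples = [
    r'"plain"',
    r'"say \"hi\""',  # escaped double quotes
    r'"a""b"',        # doubled double quote
    r'"C:\dir\"',     # trailing backslash before the closing quote
]
for s in samples:
    print(f"{s:16} old={accepts(OLD_DQUOTA, s)} new={accepts(NEW_DQUOTA, s)}")
```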

diff --git a/checkstyle.xml b/checkstyle.xml

index 4d4eb175a92d..f74e443f9e7c 100644

--- a/checkstyle.xml

+++ b/checkstyle.xml

@@ -40,7 +40,25 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

@@ -52,13 +70,6 @@

-

-

-

-

-

-

-

@@ -212,13 +223,10 @@

-

-

+

-

-

diff --git a/cli/src/assembly/resources/sbin/start-cli.bat b/cli/src/assembly/resources/sbin/start-cli.bat

index 21bb4000c522..cbd375e6f2b7 100644

--- a/cli/src/assembly/resources/sbin/start-cli.bat

+++ b/cli/src/assembly/resources/sbin/start-cli.bat

@@ -37,7 +37,7 @@ set JAVA_OPTS=-ea^

-DIOTDB_HOME="%IOTDB_HOME%"

REM For each jar in the IOTDB_HOME lib directory call append to build the CLASSPATH variable.

-set CLASSPATH="%IOTDB_HOME%\lib\*"

+if EXIST "%IOTDB_HOME%\lib" (set CLASSPATH="%IOTDB_HOME%\lib\*") else (set CLASSPATH="%IOTDB_HOME%\..\lib\*")

REM -----------------------------------------------------------------------------

set PARAMETERS=%*

diff --git a/cli/src/assembly/resources/sbin/start-cli.sh b/cli/src/assembly/resources/sbin/start-cli.sh

index 20fb4506a027..dbeedc725059 100644

--- a/cli/src/assembly/resources/sbin/start-cli.sh

+++ b/cli/src/assembly/resources/sbin/start-cli.sh

@@ -30,8 +30,14 @@ IOTDB_CLI_CONF=${IOTDB_HOME}/conf

MAIN_CLASS=org.apache.iotdb.cli.Cli

+if [ -d "${IOTDB_HOME}/lib" ]; then

+  LIB_PATH="${IOTDB_HOME}/lib"

+else

+  LIB_PATH="${IOTDB_HOME}/../lib"

+fi

+

CLASSPATH=""

-for f in ${IOTDB_HOME}/lib/*.jar; do

+for f in "${LIB_PATH}"/*.jar; do

CLASSPATH=${CLASSPATH}":"$f

done
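
With this change, the start scripts look for jars in `${IOTDB_HOME}/lib` first. If that directory does not exist, they fall back to `${IOTDB_HOME}/../lib`.

The Python sketch below mirrors that lookup order for illustration only. `resolve_lib_dir` and `build_classpath` are hypothetical names. Unlike the shell loop, the sketch does not start the classpath with an empty `:` entry.

```python
import os
import tempfile


def resolve_lib_dir(iotdb_home: str) -> str:
    """Return IOTDB_HOME/lib if it is a directory, else IOTDB_HOME/../lib."""
    lib = os.path.join(iotdb_home, "lib")
    if os.path.isdir(lib):
        return lib
    return os.path.normpath(os.path.join(iotdb_home, os.pardir, "lib"))


def build_classpath(lib_dir: str) -> str:
    """Join every *.jar file in lib_dir with the platform path separator."""
    jars = sorted(
        os.path.join(lib_dir, name)
        for name in os.listdir(lib_dir)
        if name.endswith(".jar")
    )
    return os.pathsep.join(jars)


# Demo: a CLI home without its own lib/ picks up the sibling ../lib.
with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, "lib"))
    for name in ("a.jar", "b.jar", "readme.txt"):
        open(os.path.join(root, "lib", name), "w").close()
    cli_home = os.path.join(root, "cli")
    os.makedirs(cli_home)
    print(build_classpath(resolve_lib_dir(cli_home)))
```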

diff --git a/cli/src/main/java/org/apache/iotdb/tool/ImportCsv.java b/cli/src/main/java/org/apache/iotdb/tool/ImportCsv.java

index 14374603173a..ae0b97f31890 100644

--- a/cli/src/main/java/org/apache/iotdb/tool/ImportCsv.java

+++ b/cli/src/main/java/org/apache/iotdb/tool/ImportCsv.java

@@ -714,8 +714,7 @@ private static boolean queryType(

try {

sessionDataSet = session.executeQueryStatement(sql);

} catch (StatementExecutionException e) {

- System.out.println(

- "Meet error when query the type of timeseries because the IoTDB v0.13 don't support that the path contains any purely digital path.");

+ System.out.println("Error occurred when querying the type of timeseries: " + e.getMessage());

return false;

}

List columnNames = sessionDataSet.getColumnNames();

diff --git a/client-py/README.md b/client-py/README.md

index 41c0a113b8f1..beef55e3513b 100644

--- a/client-py/README.md

+++ b/client-py/README.md

@@ -273,6 +273,98 @@ session.execute_query_statement(sql)

session.execute_non_query_statement(sql)

```

+* Execute statement

+

+```python

+session.execute_statement(sql)

+```

+

+### Schema Template

+#### Create Schema Template

+The steps for creating a schema template are as follows:

+1. Create a Template object

+2. Add child nodes, each of which can be either an InternalNode or a MeasurementNode

+3. Call the create_schema_template function

+

+```python

+template = Template(name=template_name, share_time=True)

+

+i_node_gps = InternalNode(name="GPS", share_time=False)

+i_node_v = InternalNode(name="vehicle", share_time=True)

+m_node_x = MeasurementNode("x", TSDataType.FLOAT, TSEncoding.RLE, Compressor.SNAPPY)

+

+i_node_gps.add_child(m_node_x)

+i_node_v.add_child(m_node_x)

+

+template.add_template(i_node_gps)

+template.add_template(i_node_v)

+template.add_template(m_node_x)

+

+session.create_schema_template(template)

+```

+#### Modify Schema Template nodes

+To modify the nodes of a template, the template must already exist. The following functions add or delete measurement nodes.

+* Add nodes to a template

+```python

+session.add_measurements_in_template(template_name, measurements_path, data_types, encodings, compressors, is_aligned)

+```

+

+* Delete a node from a template

+```python

+session.delete_node_in_template(template_name, path)

+```

+

+#### Set Schema Template

+```python

+session.set_schema_template(template_name, prefix_path)

+```

+

+#### Unset Schema Template

+```python

+session.unset_schema_template(template_name, prefix_path)

+```

+

+#### Show Schema Template

+* Show all schema templates

+```python

+session.show_all_templates()

+```

+* Count all measurements in a template

+```python

+session.count_measurements_in_template(template_name)

+```

+

+* Check whether a path in the template is a measurement (the path must be in the template)

+```python

+session.is_measurement_in_template(template_name, path)

+```

+

+* Check whether a path exists in the template (the path does not need to belong to the template)

+```python

+session.is_path_exist_in_template(template_name, path)

+```

+

+* Show the measurements in a schema template

+```python

+session.show_measurements_in_template(template_name)

+```

+

+* Show the path prefix where a schema template is set

+```python

+session.show_paths_template_set_on(template_name)

+```

+

+* Show the path prefix where a schema template is used (i.e. the time series has been created)

+```python

+session.show_paths_template_using_on(template_name)

+```

+

+#### Drop Schema Template

+Drop an existing schema template; dropping a template that is currently set is not supported.

+```python

+session.drop_schema_template("template_python")

+```

+

### Pandas Support

@@ -322,6 +414,150 @@ class MyTestCase(unittest.TestCase):

by default it will load the image `apache/iotdb:latest`, if you want a specific version just pass it like e.g. `IoTDBContainer("apache/iotdb:0.12.0")` to get version `0.12.0` running.

+### IoTDB DBAPI

+

+IoTDB DBAPI implements the Python DB API 2.0 specification (https://peps.python.org/pep-0249/), which defines a common

+interface for accessing databases in Python.

+

+#### Examples

++ Initialization

+

+The initialization parameters are the same as those of Session (except for sqlalchemy_mode).

+```python

+from iotdb.dbapi import connect

+

+ip = "127.0.0.1"

+port_ = "6667"

+username_ = "root"

+password_ = "root"

+conn = connect(ip, port_, username_, password_, fetch_size=1024, zone_id="UTC+8", sqlalchemy_mode=False)

+cursor = conn.cursor()

+```

++ Simple SQL statement execution

+```python

+cursor.execute("SELECT * FROM root.*")

+for row in cursor.fetchall():

+ print(row)

+```

+

++ Execute SQL with parameters

+

+IoTDB DBAPI supports pyformat-style parameters:

+```python

+cursor.execute("SELECT * FROM root.* WHERE time < %(time)s", {"time": "2017-11-01T00:08:00.000"})

+for row in cursor.fetchall():

+ print(row)

+```

+

++ Execute SQL with parameter sequences

+```python

+seq_of_parameters = [

+ {"timestamp": 1, "temperature": 1},

+ {"timestamp": 2, "temperature": 2},

+ {"timestamp": 3, "temperature": 3},

+ {"timestamp": 4, "temperature": 4},

+ {"timestamp": 5, "temperature": 5},

+]

+sql = "insert into root.cursor(timestamp,temperature) values(%(timestamp)s,%(temperature)s)"

+cursor.executemany(sql, seq_of_parameters)

+```

+

++ Close the cursor and the connection

+```python

+cursor.close()

+conn.close()

+```

+

+### IoTDB SQLAlchemy Dialect (Experimental)

+The IoTDB SQLAlchemy dialect was written mainly to support Apache Superset.

+This part is still being improved.

+Please do not use it in production environments!

+#### Mapping of the metadata

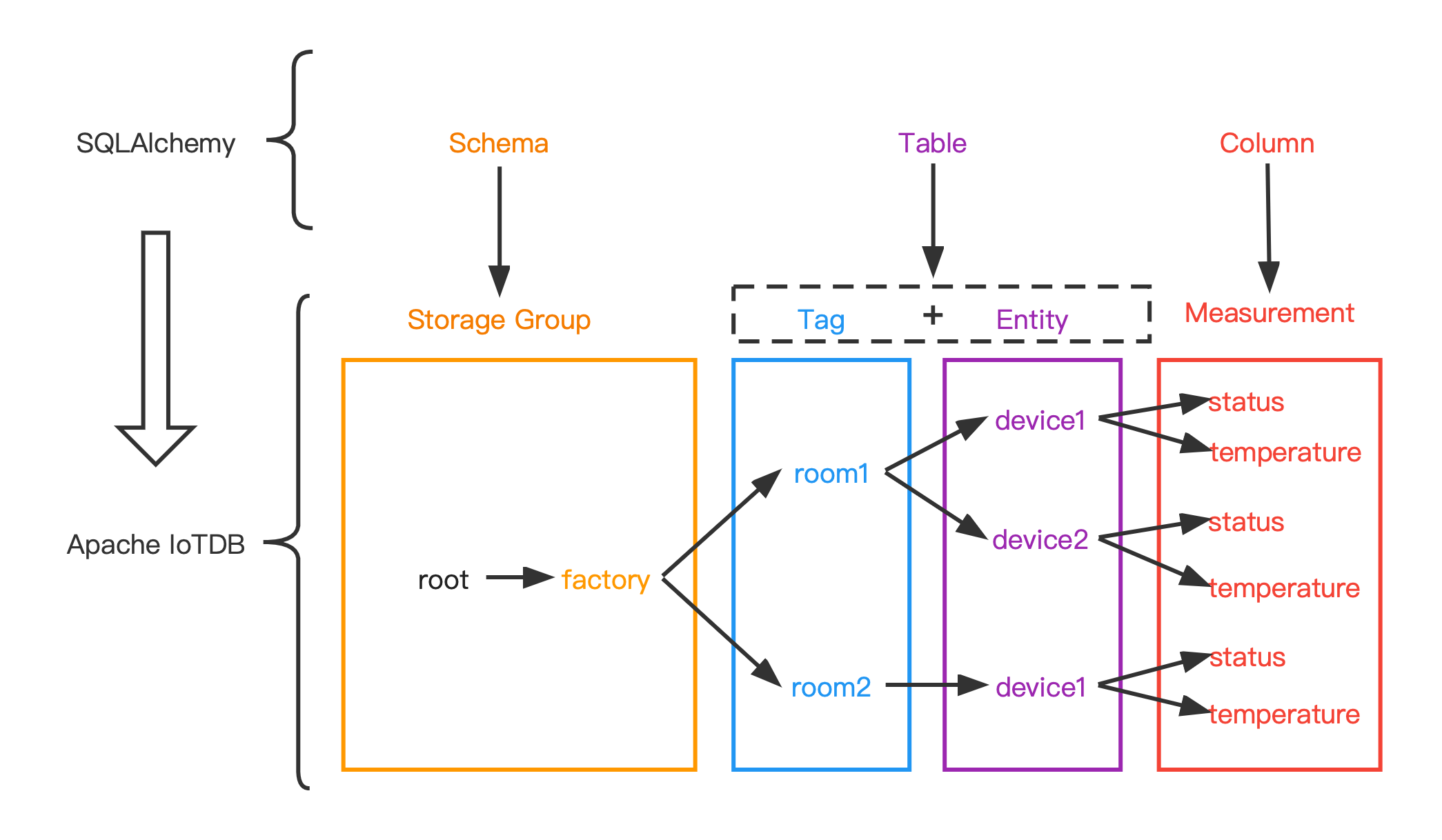

+The data model used by SQLAlchemy is a relational data model, which describes the relationships between different entities through tables.

+While the data model of IoTDB is a hierarchical data model, which organizes the data through a tree structure.

+In order to adapt IoTDB to the dialect of SQLAlchemy, the original data model in IoTDB needs to be reorganized.

+Converting the data model of IoTDB into the data model of SQLAlchemy.

+

+The metadata in the IoTDB are:

+

+1. Storage Group

+2. Path

+3. Entity

+4. Measurement

+

+The metadata in the SQLAlchemy are:

+1. Schema

+2. Table

+3. Column

+

+The mapping relationship between them is:

+

+| The metadata in the SQLAlchemy | The metadata in the IoTDB |

+| -------------------- | ---------------------------------------------- |

+| Schema | Storage Group |

+| Table | Path ( from storage group to entity ) + Entity |

+| Column | Measurement |

+

+The following figure shows the relationship between the two more intuitively:

+

+

+

+#### Data type mapping

+| data type in IoTDB | data type in SQLAlchemy |

+|--------------------|-------------------------|

+| BOOLEAN | Boolean |

+| INT32 | Integer |

+| INT64 | BigInteger |

+| FLOAT | Float |

+| DOUBLE | Float |

+| TEXT | Text |

+| LONG | BigInteger |

+#### Example

+

++ Execute a statement

+

+```python

+from sqlalchemy import create_engine

+

+engine = create_engine("iotdb://root:root@127.0.0.1:6667")

+connect = engine.connect()

+result = connect.execute("SELECT ** FROM root")

+for row in result.fetchall():

+ print(row)

+```

+

++ ORM (currently only simple queries are supported)

+

+```python

+from sqlalchemy import create_engine, Column, Float, BigInteger, MetaData

+from sqlalchemy.ext.declarative import declarative_base

+from sqlalchemy.orm import sessionmaker

+

+metadata = MetaData(

+ schema='root.factory'

+)

+Base = declarative_base(metadata=metadata)

+

+

+class Device(Base):

+ __tablename__ = "room2.device1"

+ Time = Column(BigInteger, primary_key=True)

+ temperature = Column(Float)

+ status = Column(Float)

+

+

+engine = create_engine("iotdb://root:root@127.0.0.1:6667")

+

+DbSession = sessionmaker(bind=engine)

+session = DbSession()

+

+res = session.query(Device.status).filter(Device.temperature > 1)

+

+for row in res:

+ print(row)

+```

+

+

## Developers

### Introduction
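`Cursor.execute` in `client-py/iotdb/dbapi/Cursor.py` builds the final SQL with Python's `%` operator (`sql = operation % parameters`). Each pyformat placeholder is therefore replaced by the plain `str()` of its value, with no quoting or escaping. The sketch below reproduces that substitution in isolation. The helper name `render_pyformat` and the TEXT series `root.sg.d1.s1` are hypothetical and used only for illustration.

```python
from typing import Any, Mapping


def render_pyformat(operation: str, parameters: Mapping[str, Any]) -> str:
    """Hypothetical helper mirroring `operation % parameters` in Cursor.execute."""
    # %-formatting with a mapping fills each %(name)s placeholder via str(value);
    # nothing is quoted or escaped.
    return operation % parameters


query = render_pyformat(
    "SELECT * FROM root.* WHERE time < %(time)s",
    {"time": "2017-11-01T00:08:00.000"},
)
print(query)  # SELECT * FROM root.* WHERE time < 2017-11-01T00:08:00.000

insert = render_pyformat(
    "insert into root.cursor(timestamp,temperature) values(%(timestamp)s,%(temperature)s)",
    {"timestamp": 1, "temperature": 1},
)
print(insert)  # insert into root.cursor(timestamp,temperature) values(1,1)

# A TEXT value must carry its own quotes, because none are added for it.
text_insert = render_pyformat(
    "insert into root.sg.d1(timestamp, s1) values(%(t)s, %(v)s)",
    {"t": 1, "v": "'hello'"},
)
print(text_insert)  # insert into root.sg.d1(timestamp, s1) values(1, 'hello')
```

Because substitution is plain string formatting, a literal `%` in a parameterized statement has to be written as `%%`. Untrusted input should not be passed through this path.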

diff --git a/client-py/SessionExample.py b/client-py/SessionExample.py

index 93aa839c3bd4..61e82234dbf9 100644

--- a/client-py/SessionExample.py

+++ b/client-py/SessionExample.py

@@ -20,6 +20,9 @@

import numpy as np

from iotdb.Session import Session

+from iotdb.template.InternalNode import InternalNode

+from iotdb.template.MeasurementNode import MeasurementNode

+from iotdb.template.Template import Template

from iotdb.utils.IoTDBConstants import TSDataType, TSEncoding, Compressor

from iotdb.utils.Tablet import Tablet

from iotdb.utils.NumpyTablet import NumpyTablet

@@ -280,6 +283,17 @@

while session_data_set.has_next():

print(session_data_set.next())

+# execute statement

+with session.execute_statement(

+ "select * from root.sg_test_01.d_01"

+) as session_data_set:

+ while session_data_set.has_next():

+ print(session_data_set.next())

+

+session.execute_statement(

+ "insert into root.sg_test_01.d_01(timestamp, s_02) values(16, 188)"

+)

+

# insert string records of one device

time_list = [1, 2, 3]

measurements_list = [

@@ -313,6 +327,90 @@

# delete storage group

session.delete_storage_group("root.sg_test_01")

+# create measurement node template

+template = Template(name="template_python", share_time=False)

+m_node_1 = MeasurementNode(

+ name="s1",

+ data_type=TSDataType.INT64,

+ encoding=TSEncoding.RLE,

+ compression_type=Compressor.SNAPPY,

+)

+m_node_2 = MeasurementNode(

+ name="s2",

+ data_type=TSDataType.INT64,

+ encoding=TSEncoding.RLE,

+ compression_type=Compressor.SNAPPY,

+)

+m_node_3 = MeasurementNode(

+ name="s3",

+ data_type=TSDataType.INT64,

+ encoding=TSEncoding.RLE,

+ compression_type=Compressor.SNAPPY,

+)

+template.add_template(m_node_1)

+template.add_template(m_node_2)

+template.add_template(m_node_3)

+session.create_schema_template(template)

+print("create template success template_python")

+

+# create internal node template

+template_name = "treeTemplate_python"

+template = Template(name=template_name, share_time=True)

+i_node_gps = InternalNode(name="GPS", share_time=False)

+i_node_v = InternalNode(name="vehicle", share_time=True)

+m_node_x = MeasurementNode("x", TSDataType.FLOAT, TSEncoding.RLE, Compressor.SNAPPY)

+

+i_node_gps.add_child(m_node_x)

+i_node_v.add_child(m_node_x)

+template.add_template(i_node_gps)

+template.add_template(i_node_v)

+template.add_template(m_node_x)

+

+session.create_schema_template(template)

+print("create template success treeTemplate_python")

+

+print(session.is_measurement_in_template(template_name, "GPS"))

+print(session.is_measurement_in_template(template_name, "GPS.x"))

+print(session.show_all_templates())

+

+# append schema template

+data_types = [TSDataType.FLOAT, TSDataType.FLOAT, TSDataType.DOUBLE]

+encoding_list = [TSEncoding.RLE, TSEncoding.RLE, TSEncoding.GORILLA]

+compressor_list = [Compressor.SNAPPY, Compressor.SNAPPY, Compressor.LZ4]

+

+measurements_aligned_path = ["aligned.s1", "aligned.s2", "aligned.s3"]

+session.add_measurements_in_template(

+ template_name,

+ measurements_aligned_path,

+ data_types,

+ encoding_list,

+ compressor_list,

+ is_aligned=True,

+)

+# session.drop_schema_template("add_template_python")

+measurements_unaligned_path = ["unaligned.s1", "unaligned.s2", "unaligned.s3"]

+session.add_measurements_in_template(

+ template_name,

+ measurements_unaligned_path,

+ data_types,

+ encoding_list,

+ compressor_list,

+ is_aligned=False,

+)

+session.delete_node_in_template(template_name, "aligned.s1")

+print(session.count_measurements_in_template(template_name))

+print(session.is_path_exist_in_template(template_name, "aligned.s1"))

+print(session.is_path_exist_in_template(template_name, "aligned.s2"))

+

+session.set_schema_template(template_name, "root.python.set")

+print(session.show_paths_template_using_on(template_name))

+print(session.show_paths_template_set_on(template_name))

+session.unset_schema_template(template_name, "root.python.set")

+

+# drop template

+session.drop_schema_template("template_python")

+session.drop_schema_template(template_name)

+print("drop template success, template_python and treeTemplate_python")

# close session connection.

session.close()
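In the tree template built in this example, the same `m_node_x` is added under `GPS`, under `vehicle`, and directly under the template. The template should therefore expose the measurement paths `GPS.x`, `vehicle.x` and `x`, while `GPS` and `vehicle` are internal nodes. This is presumably why `is_measurement_in_template(template_name, "GPS")` is expected to print False and the `"GPS.x"` call True. The sketch below models only that path naming, using hypothetical stand-in classes (`Internal`, `Measurement`). It is not the `iotdb.template` API and does not serialize anything.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class Measurement:
    # Hypothetical stand-in for a measurement (leaf) node.
    name: str


@dataclass
class Internal:
    # Hypothetical stand-in for an internal node that only groups children.
    name: str
    children: List[Union["Internal", Measurement]] = field(default_factory=list)


def measurement_paths(nodes, prefix=""):
    """Return the dotted paths of all measurement leaves under `nodes`."""
    paths = []
    for node in nodes:
        path = f"{prefix}.{node.name}" if prefix else node.name
        if isinstance(node, Measurement):
            paths.append(path)
        else:
            paths.extend(measurement_paths(node.children, path))
    return paths


x = Measurement("x")
gps = Internal("GPS", [x])
vehicle = Internal("vehicle", [x])
top_level = [gps, vehicle, x]  # same shape as the three add_template calls

paths = measurement_paths(top_level)
print(paths)  # ['GPS.x', 'vehicle.x', 'x']
print("GPS" in paths, "GPS.x" in paths)  # False True
```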

diff --git a/client-py/iotdb/Session.py b/client-py/iotdb/Session.py

index 780ecc7d7c35..c5a83d3e053f 100644

--- a/client-py/iotdb/Session.py

+++ b/client-py/iotdb/Session.py

@@ -18,12 +18,12 @@

import logging

import struct

import time

-

-from iotdb.utils.SessionDataSet import SessionDataSet

-

from thrift.protocol import TBinaryProtocol, TCompactProtocol

from thrift.transport import TSocket, TTransport

+from iotdb.utils.SessionDataSet import SessionDataSet

+from .template.Template import Template

+from .template.TemplateQueryType import TemplateQueryType

from .thrift.rpc.TSIService import (

Client,

TSCreateTimeseriesReq,

@@ -38,6 +38,13 @@

TSInsertTabletsReq,

TSInsertRecordsReq,

TSInsertRecordsOfOneDeviceReq,

+ TSCreateSchemaTemplateReq,

+ TSDropSchemaTemplateReq,

+ TSAppendSchemaTemplateReq,

+ TSPruneSchemaTemplateReq,

+ TSSetSchemaTemplateReq,

+ TSUnsetSchemaTemplateReq,

+ TSQueryTemplateReq,

)

from .thrift.rpc.ttypes import (

TSDeleteDataReq,

@@ -47,7 +54,6 @@

TSLastDataQueryReq,

TSInsertStringRecordsOfOneDeviceReq,

)

-

# for debug

# from IoTDBConstants import *

# from SessionDataSet import SessionDataSet

@@ -1027,12 +1033,19 @@ def verify_success(status):

if status.code == Session.SUCCESS_CODE:

return 0

- logger.error("error status is", status)

+ logger.error("error status is %s", status)

return -1

def execute_raw_data_query(

self, paths: list, start_time: int, end_time: int

) -> SessionDataSet:

+ """

+ execute a raw data query and return a SessionDataSet

+ :param paths: String path list

+ :param start_time: Query start time

+ :param end_time: Query end time

+ :return: SessionDataSet, contains query results and relevant info (see SessionDataSet.py)

+ """

request = TSRawDataQueryReq(

self.__session_id,

paths,

@@ -1057,6 +1070,12 @@ def execute_raw_data_query(

)

def execute_last_data_query(self, paths: list, last_time: int) -> SessionDataSet:

+ """

+ execute a last data query and return a SessionDataSet

+ :param paths: String path list

+ :param last_time: Query last time

+ :return: SessionDataSet, contains query results and relevant info (see SessionDataSet.py)

+ """

request = TSLastDataQueryReq(

self.__session_id,

paths,

@@ -1088,6 +1107,16 @@ def insert_string_records_of_one_device(

values_list: list,

have_sorted: bool = False,

):

+ """

+ insert multiple rows of string records into the database:

+ timestamp, m1, m2, m3

+ 0, text1, text2, text3

+ :param device_id: String, device id

+ :param times: Timestamp list

+ :param measurements_list: Measurements list

+ :param values_list: Value list

+ :param have_sorted: whether the lists are already sorted by timestamp

+ """

if (len(times) != len(measurements_list)) or (len(times) != len(values_list)):

raise RuntimeError(

"insert records of one device error: times, measurementsList and valuesList's size should be equal!"

@@ -1151,3 +1180,274 @@ def gen_insert_string_records_of_one_device_request(

is_aligned,

)

return request

+

+ def create_schema_template(self, template: Template):

+ """

+ create a schema template; the argument must be an instance of the Template class

+ :param template: the template, containing one or more child nodes (see Template.py)

+ """

+ bytes_array = template.serialize

+ request = TSCreateSchemaTemplateReq(

+ self.__session_id, template.get_name(), bytes_array

+ )

+ status = self.__client.createSchemaTemplate(request)

+ logger.debug(

+ "create one template {} template name: {}".format(

+ self.__session_id, template.get_name()

+ )

+ )

+ return Session.verify_success(status)

+

+ def drop_schema_template(self, template_name: str):

+ """

+ drop a schema template; the template must not be set on any path

+ :param template_name: template name

+ """

+ request = TSDropSchemaTemplateReq(self.__session_id, template_name)

+ status = self.__client.dropSchemaTemplate(request)

+ logger.debug(

+ "drop one template {} template name: {}".format(

+ self.__session_id, template_name

+ )

+ )

+ return Session.verify_success(status)

+

+ def execute_statement(self, sql: str, timeout=0):

+ request = TSExecuteStatementReq(

+ self.__session_id, sql, self.__statement_id, self.__fetch_size, timeout

+ )

+ try:

+ resp = self.__client.executeStatement(request)

+ status = resp.status

+ logger.debug("execute statement {} message: {}".format(sql, status.message))

+ if Session.verify_success(status) == 0:

+ if resp.columns:

+ return SessionDataSet(

+ sql,

+ resp.columns,

+ resp.dataTypeList,

+ resp.columnNameIndexMap,

+ resp.queryId,

+ self.__client,

+ self.__statement_id,

+ self.__session_id,

+ resp.queryDataSet,

+ resp.ignoreTimeStamp,

+ )

+ else:

+ return None

+ else:

+ raise RuntimeError(

+ "execution of statement fails because: {}".format(status.message)

+ )

+ except TTransport.TException as e:

+ raise RuntimeError("execution of statement fails because: {}".format(e)) from e

+

+ def add_measurements_in_template(

+ self,

+ template_name: str,

+ measurements_path: list,

+ data_types: list,

+ encodings: list,

+ compressors: list,

+ is_aligned: bool = False,

+ ):

+ """

+ add measurements to the template; the template must already exist. This function adds measurement nodes.

+ :param template_name: template name

+ :param measurements_path: list of measurement paths, e.g. ["python.x", "python.y", "iotdb.z"]; when is_aligned is True, names of the form a.b are recommended

+ :param data_types: using TSDataType(see IoTDBConstants.py)

+ :param encodings: using TSEncoding(see IoTDBConstants.py)

+ :param compressors: using Compressor(see IoTDBConstants.py)

+ :param is_aligned: True is aligned, False is unaligned

+ """

+ request = TSAppendSchemaTemplateReq(

+ self.__session_id,

+ template_name,

+ is_aligned,

+ measurements_path,

+ list(map(lambda x: x.value, data_types)),

+ list(map(lambda x: x.value, encodings)),

+ list(map(lambda x: x.value, compressors)),

+ )

+ status = self.__client.appendSchemaTemplate(request)

+ logger.debug(

+ "append measurements in template, session id: {}, template name: {}".format(

+ self.__session_id, template_name

+ )

+ )

+ return Session.verify_success(status)

+

+ def delete_node_in_template(self, template_name: str, path: str):

+ """

+ delete a node in the template, this node must be already in the template

+ :param template_name: template name

+ :param path: measurements path

+ """

+ request = TSPruneSchemaTemplateReq(self.__session_id, template_name, path)

+ status = self.__client.pruneSchemaTemplate(request)

+ logger.debug(

+ "delete node in template, session id: {}, template name: {}".format(

+ self.__session_id, template_name

+ )

+ )

+ return Session.verify_success(status)

+

+ def set_schema_template(self, template_name, prefix_path):

+ """

+ set the template on a prefix path; the template must already exist, and the prefix path must not be a measurement

+ :param template_name: template name

+ :param prefix_path: prefix path

+ """

+ request = TSSetSchemaTemplateReq(self.__session_id, template_name, prefix_path)

+ status = self.__client.setSchemaTemplate(request)

+ logger.debug(

+ "set schema template, session id: {}, template name: {}, path: {}".format(

+ self.__session_id, template_name, prefix_path

+ )

+ )

+ return Session.verify_success(status)

+

+ def unset_schema_template(self, template_name, prefix_path):

+ """

+ unset the schema template from a prefix path; unsetting the template from entities

+ that have already inserted records using the template is not supported.

+ :param template_name: template name

+ :param prefix_path: prefix path

+ """

+ request = TSUnsetSchemaTemplateReq(

+ self.__session_id, prefix_path, template_name

+ )

+ status = self.__client.unsetSchemaTemplate(request)

+ logger.debug(

+ "unset schema template, session id: {}, template name: {}, path: {}".format(

+ self.__session_id, template_name, prefix_path

+ )

+ )

+ return Session.verify_success(status)

+

+ def count_measurements_in_template(self, template_name: str):

+ """

+ count the measurements in the template

+ :param template_name: template name

+ """

+ request = TSQueryTemplateReq(

+ self.__session_id,

+ template_name,

+ TemplateQueryType.COUNT_MEASUREMENTS.value,

+ )

+ response = self.__client.querySchemaTemplate(request)

+ logger.debug(

+ "count measurements in template, session id: {}, template name: {}, count: {}".format(

+ self.__session_id, template_name, response.count

+ )

+ )

+ return response.count

+

+ def is_measurement_in_template(self, template_name: str, path: str):

+ """

+ check whether the node at the given path is a measurement; the node must be in the template

+ :param template_name: template name

+ :param path: path of the node in the template

+ """

+ request = TSQueryTemplateReq(

+ self.__session_id,

+ template_name,

+ TemplateQueryType.IS_MEASUREMENT.value,

+ path,

+ )

+ response = self.__client.querySchemaTemplate(request)

+ logger.debug(

+ "judge the path is measurement or not in template {}, template name is {}, result is {}".format(

+ self.__session_id, template_name, response.result

+ )

+ )

+ return response.result

+

+ def is_path_exist_in_template(self, template_name: str, path: str):

+ """

+ check whether the path exists in the template; the path does not need to be in the template

+ :param template_name: template name

+ :param path: path to look up

+ """

+ request = TSQueryTemplateReq(

+ self.__session_id, template_name, TemplateQueryType.PATH_EXIST.value, path

+ )

+ response = self.__client.querySchemaTemplate(request)

+ logger.debug(

+ "judge the path is in template or not {}, template name is {}, result is {}".format(

+ self.__session_id, template_name, response.result

+ )

+ )

+ return response.result

+

+ def show_measurements_in_template(self, template_name: str, pattern: str = ""):

+ """

+ show all measurements under the pattern in template

+ :param template_name: template name

+ :param pattern: parent path; if empty (the default), all measurements are shown

+ """

+ request = TSQueryTemplateReq(

+ self.__session_id,

+ template_name,

+ TemplateQueryType.SHOW_MEASUREMENTS.value,

+ pattern,

+ )

+ response = self.__client.querySchemaTemplate(request)

+ logger.debug(

+ "show measurements in template {}, template name is {}, result is {}".format(

+ self.__session_id, template_name, response.measurements

+ )

+ )

+ return response.measurements

+

+ def show_all_templates(self):

+ """

+ show all schema templates

+ """

+ request = TSQueryTemplateReq(

+ self.__session_id,

+ "",

+ TemplateQueryType.SHOW_TEMPLATES.value,

+ )

+ response = self.__client.querySchemaTemplate(request)

+ logger.debug(

+ "show all template {}, measurements is {}".format(

+ self.__session_id, response.measurements

+ )

+ )

+ return response.measurements

+

+ def show_paths_template_set_on(self, template_name):

+ """

+ show the path prefix where a schema template is set

+ :param template_name: template name

+ """

+ request = TSQueryTemplateReq(

+ self.__session_id, template_name, TemplateQueryType.SHOW_SET_TEMPLATES.value

+ )

+ response = self.__client.querySchemaTemplate(request)

+ logger.debug(

+ "show paths template set {}, on {}".format(

+ self.__session_id, response.measurements

+ )

+ )

+ return response.measurements

+

+ def show_paths_template_using_on(self, template_name):

+ """

+ show the path prefix where a schema template is used

+ :param template_name: template name

+ """

+ request = TSQueryTemplateReq(

+ self.__session_id,

+ template_name,

+ TemplateQueryType.SHOW_USING_TEMPLATES.value,

+ )

+ response = self.__client.querySchemaTemplate(request)

+ logger.debug(

+ "show paths template using {}, on {}".format(

+ self.__session_id, response.measurements

+ )

+ )

+ return response.measurements
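Several error paths in `Session.py` build exceptions from a message template. Passing the format string and its value as separate arguments, as in `RuntimeError("... {}", status.message)`, does not fill the placeholder. The exception simply stores both values in `args`, so the message has to be formatted before raising. The standalone check below uses a made-up server message.

```python
message = "statement is not valid"  # made-up server message for illustration

# Separate arguments: nothing is formatted, str() shows the args tuple.
unformatted = RuntimeError("execution of statement fails because: {}", message)
# Formatted first: str() shows the intended sentence.
formatted = RuntimeError("execution of statement fails because: {}".format(message))

print(str(unformatted))
# ('execution of statement fails because: {}', 'statement is not valid')
print(str(formatted))
# execution of statement fails because: statement is not valid
```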

diff --git a/client-py/iotdb/dbapi/Connection.py b/client-py/iotdb/dbapi/Connection.py

new file mode 100644

index 000000000000..aee5520e9af9

--- /dev/null

+++ b/client-py/iotdb/dbapi/Connection.py

@@ -0,0 +1,91 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+#

+

+import logging

+

+from iotdb.Session import Session

+

+from .Cursor import Cursor

+from .Exceptions import ConnectionError, ProgrammingError

+

+logger = logging.getLogger("IoTDB")

+

+

+class Connection(object):

+ def __init__(

+ self,

+ host,

+ port,

+ username=Session.DEFAULT_USER,

+ password=Session.DEFAULT_PASSWORD,

+ fetch_size=Session.DEFAULT_FETCH_SIZE,

+ zone_id=Session.DEFAULT_ZONE_ID,

+ enable_rpc_compression=False,

+ sqlalchemy_mode=False,

+ ):

+ self.__session = Session(host, port, username, password, fetch_size, zone_id)

+ self.__sqlalchemy_mode = sqlalchemy_mode

+ self.__is_close = True

+ try:

+ self.__session.open(enable_rpc_compression)

+ self.__is_close = False

+ except Exception as e:

+ raise ConnectionError(e)

+

+ def close(self):

+ """

+ Close the connection now

+ """

+ if self.__is_close:

+ return

+ self.__session.close()

+ self.__is_close = True

+

+ def cursor(self):

+ """

+ Return a new Cursor Object using the connection.

+ """

+ if not self.__is_close:

+ return Cursor(self, self.__session, self.__sqlalchemy_mode)

+ else:

+ raise ProgrammingError("Connection closed")

+

+ def commit(self):

+ """

+ Not supported method.

+ """

+ pass

+

+ def rollback(self):

+ """

+ Not supported method.

+ """

+ pass

+

+ @property

+ def is_close(self):

+ """

+ This read-only attribute specifies whether the connection is closed

+ """

+ return self.__is_close

+

+ def __enter__(self):

+ return self

+

+ def __exit__(self, exc_type, exc_val, exc_tb):

+ self.close()

diff --git a/client-py/iotdb/dbapi/Cursor.py b/client-py/iotdb/dbapi/Cursor.py

new file mode 100644

index 000000000000..a1d6e2caabac

--- /dev/null

+++ b/client-py/iotdb/dbapi/Cursor.py

@@ -0,0 +1,288 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+#

+

+import logging

+import warnings

+

+from iotdb.Session import Session

+

+from .Exceptions import ProgrammingError

+

+logger = logging.getLogger("IoTDB")

+

+

+class Cursor(object):

+ def __init__(self, connection, session: Session, sqlalchemy_mode):

+ self.__connection = connection

+ self.__session = session

+ self.__sqlalchemy_mode = sqlalchemy_mode

+ self.__arraysize = 1

+ self.__is_close = False

+ self.__result = None

+ self.__rows = None

+ self.__rowcount = -1

+

+ @property

+ def description(self):

+ """

+ This read-only attribute is a sequence of 7-item sequences.

+ """

+ if self.__is_close or self.__result is None or not self.__result["col_names"]:

+ return

+

+ description = []

+

+ col_names = self.__result["col_names"]

+ col_types = self.__result["col_types"]

+

+ for i in range(len(col_names)):

+ description.append(

+ (

+ col_names[i],

+ None if self.__sqlalchemy_mode is True else col_types[i].value,

+ None,

+ None,

+ None,

+ None,

+ col_names[i] == "Time",

+ )

+ )

+ return tuple(description)

+

+ @property

+ def arraysize(self):

+ """

+ This read/write attribute specifies the number of rows to fetch at a time with .fetchmany().

+ """

+ return self.__arraysize

+

+ @arraysize.setter

+ def arraysize(self, value):

+ """

+ Set the arraysize.

+ :param value: arraysize

+ """

+ try:

+ self.__arraysize = int(value)

+ except (TypeError, ValueError):

+ self.__arraysize = 1

+

+ @property

+ def rowcount(self):

+ """

+ This read-only attribute specifies the number of rows that the last

+ .execute*() produced (for DQL statements like ``SELECT``) or affected

+ (for DML statements like ``DELETE`` or ``INSERT`` return 0 if successful, -1 if unsuccessful).

+ """

+ if self.__is_close or self.__result is None or "row_count" not in self.__result:

+ return -1

+ return self.__result.get("row_count", -1)

+

+ def execute(self, operation, parameters=None):

+ """

+ Prepare and execute a database operation (query or command).

+ :param operation: a database operation

+ :param parameters: parameters of the operation

+ """

+ if self.__connection.is_close:

+ raise ProgrammingError("Connection closed!")

+

+ if self.__is_close:

+ raise ProgrammingError("Cursor closed!")

+

+ if parameters is None:

+ sql = operation

+ else:

+ sql = operation % parameters

+

+ time_index = []

+ time_names = []

+ if self.__sqlalchemy_mode:

+ sql_seqs = []

+ seqs = sql.split("\n")

+ for seq in seqs:

+ if seq.find("FROM Time Index") >= 0:

+ time_index = [

+ int(index)

+ for index in seq.replace("FROM Time Index", "").split()

+ ]

+ elif seq.find("FROM Time Name") >= 0:

+ time_names = [

+ name for name in seq.replace("FROM Time Name", "").split()

+ ]

+ else:

+ sql_seqs.append(seq)

+ sql = "\n".join(sql_seqs)

+

+ try:

+ data_set = self.__session.execute_statement(sql)

+ col_names = None

+ col_types = None

+ rows = []

+

+ if data_set:

+ data = data_set.todf()

+

+ if self.__sqlalchemy_mode and time_index:

+ time_column = data.columns[0]

+ time_column_value = data.Time

+ del data[time_column]

+ for i in range(len(time_index)):

+ data.insert(time_index[i], time_names[i], time_column_value)

+

+ col_names = data.columns.tolist()

+ col_types = data_set.get_column_types()

+ rows = data.values.tolist()

+ data_set.close_operation_handle()

+

+ self.__result = {

+ "col_names": col_names,

+ "col_types": col_types,

+ "rows": rows,

+ "row_count": len(rows),

+ }

+ except Exception:

+ logger.error("failed to execute statement:{}".format(sql))

+ self.__result = {

+ "col_names": None,

+ "col_types": None,

+ "rows": [],

+ "row_count": -1,

+ }

+ self.__rows = iter(self.__result["rows"])

+

+ def executemany(self, operation, seq_of_parameters=None):

+ """

+ Prepare a database operation (query or command) and then execute it

+ against all parameter sequences or mappings found in the sequence

+ ``seq_of_parameters``

+ :param operation: a database operation

+ :param seq_of_parameters: pyformat style parameter list of the operation

+ """

+ if self.__connection.is_close:

+ raise ProgrammingError("Connection closed!")

+

+ if self.__is_close:

+ raise ProgrammingError("Cursor closed!")

+

+ rows = []

+ if seq_of_parameters is None:

+ self.execute(operation)

+ rows.extend(self.__result["rows"])

+ else:

+ for parameters in seq_of_parameters:

+ self.execute(operation, parameters)

+ rows.extend(self.__result["rows"])

+

+ self.__result["rows"] = rows

+ self.__rows = iter(self.__result["rows"])

+

+ def fetchone(self):

+ """

+ Fetch the next row of a query result set, returning a single sequence,

+ or None when no more data is available.

+ Alias for ``next()``.

+ """

+ try:

+ return self.next()

+ except StopIteration:

+ return None

+

+ def fetchmany(self, count=None):

+ """

+ Fetch the next set of rows of a query result, returning a sequence of

+ sequences (e.g. a list of tuples). An empty sequence is returned when

+ no more rows are available.

+ """

+ if count is None:

+ count = self.__arraysize

+ if count == 0:

+ return self.fetchall()

+ result = []

+ for _ in range(count):

+ try:

+ result.append(self.next())

+ except StopIteration:

+ break

+ return result

+

+ def fetchall(self):

+ """

+ Fetch all (remaining) rows of a query result, returning them as a

+ sequence of sequences (e.g. a list of tuples). Note that the cursor's

+ arraysize attribute can affect the performance of this operation.

+ """

+ result = []

+ iterate = True

+ while iterate:

+ try:

+ result.append(self.next())

+ except StopIteration:

+ iterate = False

+ return result

+

+ def next(self):

+ """

+ Return the next row of a query result set. Raises ProgrammingError if the

+ cursor has been closed.

+ """

+ if self.__result is None:

+ raise ProgrammingError(

+ "No result available. execute() or executemany() must be called first."

+ )

+ elif not self.__is_close:

+ return next(self.__rows)

+ else:

+ raise ProgrammingError("Cursor closed!")

+

+ __next__ = next

+

+ def close(self):

+ """

+ Close the cursor now.

+ """

+ self.__is_close = True

+ self.__result = None

+

+ def setinputsizes(self, sizes):

+ """

+ Not supported method.

+ """

+ pass

+

+ def setoutputsize(self, size, column=None):

+ """

+ Not supported method.

+ """

+ pass

+

+ def __iter__(self):

+ """

+ Support iterator interface:

+ http://legacy.python.org/dev/peps/pep-0249/#iter

+ This iterator is shared. Advancing this iterator will advance other

+ iterators created from this cursor.

+ """

+ warnings.warn("DB-API extension cursor.__iter__() used")

+ return self

+

+ def __enter__(self):

+ return self

+

+ def __exit__(self, exc_type, exc_val, exc_tb):

+ self.close()
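The cursor's fetch methods follow the PEP 249 contract. `fetchone()` returns a row or `None`, `fetchmany()` returns at most `count` rows (defaulting to `arraysize`), and `fetchall()` drains whatever is left. The sketch below reproduces that contract with a hypothetical list-backed `ListCursor`, which is not part of the IoTDB client, so it runs without a server. It also keeps this cursor's non-standard rule that `fetchmany(0)` returns all remaining rows.

```python
class ListCursor:
    """Hypothetical in-memory cursor mirroring the IoTDB cursor's fetch methods."""

    def __init__(self, rows, arraysize=1):
        self.arraysize = arraysize
        self._rows = iter(rows)

    def fetchone(self):
        # Return None once the result set is exhausted, as PEP 249 requires.
        return next(self._rows, None)

    def fetchmany(self, count=None):
        if count is None:
            count = self.arraysize
        if count == 0:
            # Mirrors the IoTDB cursor: a count of 0 drains all remaining rows.
            return self.fetchall()
        result = []
        for _ in range(count):
            row = next(self._rows, None)
            if row is None:
                # Stop as soon as the rows run out.
                break
            result.append(row)
        return result

    def fetchall(self):
        # Drain every remaining row.
        return list(self._rows)


cursor = ListCursor([(1, "a"), (2, "b"), (3, "c")], arraysize=2)
print(cursor.fetchone())   # (1, 'a')
print(cursor.fetchmany())  # [(2, 'b'), (3, 'c')]
print(cursor.fetchmany())  # []
print(cursor.fetchall())   # []
```

Breaking out of the loop on exhaustion matters once `count` is large: the cursor stops immediately instead of attempting, and discarding, one failed fetch per remaining iteration.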

diff --git a/client-py/iotdb/dbapi/Exceptions.py b/client-py/iotdb/dbapi/Exceptions.py

new file mode 100644

index 000000000000..d58689c86930

--- /dev/null

+++ b/client-py/iotdb/dbapi/Exceptions.py

@@ -0,0 +1,61 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+#

+

+

+class Error(Exception):

+ pass

+

+

+class Warning(Exception):

+ pass

+

+

+class DatabaseError(Error):

+ pass

+

+

+class DataError(DatabaseError):

+ pass

+

+

+class InterfaceError(Error):

+ pass

+

+

+class InternalError(DatabaseError):

+ pass

+

+

+class IntegrityError(DatabaseError):

+ pass

+

+

+class OperationalError(DatabaseError):

+ pass

+

+

+class ProgrammingError(DatabaseError):

+ pass

+

+

+class NotSupportedError(DatabaseError):

+ pass

+

+

+class ConnectionError(DatabaseError):

+ pass
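These classes form the PEP 249 exception hierarchy. `Error` is the base of every database error, and `DatabaseError` groups the server-side and usage errors such as `ProgrammingError`. Callers can therefore catch a broad base class and still see the specific subclass. The sketch below uses local stand-ins for three of the classes and a hypothetical helper that mimics the cursor's "no result available" path. It is an illustration, not the client's own code.

```python
class Error(Exception):
    """Stand-in for iotdb.dbapi.Exceptions.Error."""


class DatabaseError(Error):
    """Stand-in for iotdb.dbapi.Exceptions.DatabaseError."""


class ProgrammingError(DatabaseError):
    """Stand-in for iotdb.dbapi.Exceptions.ProgrammingError."""


def next_row_without_execute():
    # Hypothetical helper mimicking Cursor.next() before execute() is called.
    raise ProgrammingError(
        "No result available. execute() or executemany() must be called first."
    )


def classify():
    try:
        next_row_without_execute()
    except DatabaseError as exc:
        # Catching the DatabaseError base also catches ProgrammingError.
        return type(exc).__name__
    return "no error"


print(classify())                           # ProgrammingError
print(issubclass(ProgrammingError, Error))  # True
```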

diff --git a/client-py/iotdb/dbapi/__init__.py b/client-py/iotdb/dbapi/__init__.py

new file mode 100644

index 000000000000..9f8006175008

--- /dev/null

+++ b/client-py/iotdb/dbapi/__init__.py

@@ -0,0 +1,26 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+#

+

+from .Connection import Connection as connect

+from .Exceptions import Error

+

+__all__ = ["connect", "Error"]

+

+apilevel = "2.0"

+threadsafety = 2

+paramstyle = "pyformat"
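`__all__` must list exported names as strings. If it holds the objects themselves, `from <package> import *` raises `TypeError`. The sketch below demonstrates both cases against a hypothetical throwaway module named `dbapi_all_demo`, which it registers in `sys.modules` only for the duration of the import.

```python
import sys
import types


def star_import(names_as_strings):
    """Run `from <module> import *` on a throwaway module and return the bound names."""
    mod = types.ModuleType("dbapi_all_demo")  # hypothetical module name
    mod.connect = lambda: "connection"
    mod.Error = type("Error", (Exception,), {})
    if names_as_strings:
        mod.__all__ = ["connect", "Error"]
    else:
        # Objects instead of names: the star import rejects this form.
        mod.__all__ = [mod.connect, mod.Error]
    sys.modules[mod.__name__] = mod
    try:
        namespace = {}
        exec(f"from {mod.__name__} import *", namespace)
        return sorted(name for name in namespace if name != "__builtins__")
    finally:
        del sys.modules[mod.__name__]


print(star_import(True))  # ['Error', 'connect']
try:
    star_import(False)
except TypeError as exc:
    print("TypeError:", exc)
```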

diff --git a/client-py/iotdb/dbapi/tests/__init__.py b/client-py/iotdb/dbapi/tests/__init__.py

new file mode 100644

index 000000000000..2a1e720805f2

--- /dev/null

+++ b/client-py/iotdb/dbapi/tests/__init__.py

@@ -0,0 +1,17 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information