- We store some data (here, `user.id`) in the session: `req.session.userId = user.id`

- Since we use Redis to store our sessions, the stored data looks something like this: `{userId: 1}`

- Redis is a key-value store, which means you look up a value by its key. In this case, the entry would be `sess:REDIS_SECRET_KEY -> {userId: 1}`, where `REDIS_SECRET_KEY` is the session ID and `sess:` is the store's key prefix.

- `express-session` sets a cookie in my browser (through the `Set-Cookie` response header) whose value is a signed version of `REDIS_SECRET_KEY`.

- When the user makes a request, the browser sends the signed `REDIS_SECRET_KEY` back to the server in that cookie.

- The server verifies the signature and unsigns the cookie to recover `REDIS_SECRET_KEY`, then builds the key `sess:REDIS_SECRET_KEY`. The cookie is signed, not encrypted, so this step is a tamper check rather than decryption.

- The server queries Redis with that key and looks up the data stored under it.

- Finally, the server puts `{userId: 1}` on `req.session`, so resolvers can read `req.session.userId`. The session data itself is never sent back to the client.
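The flow above can be sketched without Express or Redis. This is a minimal, self-contained sketch under stated assumptions: a `Map` stands in for Redis, and `COOKIE_SECRET`, `signSessionId`, `unsignSessionId`, `login` and `loadSession` are hypothetical names. The `s:<id>.<hmac>` cookie format is modeled on the `cookie-signature` package that express-session relies on, not copied from it.

```typescript
import { createHmac, timingSafeEqual } from "crypto";

// Hypothetical secret; in the app this would be the `secret` option of express-session.
const COOKIE_SECRET = "keyboard cat";

// A Map stands in for Redis: key "sess:<sessionId>" -> JSON-encoded session data.
const fakeRedis = new Map<string, string>();

// Sign a session ID into the "s:<id>.<hmac>" shape (modeled on cookie-signature).
function signSessionId(sessionId: string, secret: string): string {
  const mac = createHmac("sha256", secret)
    .update(sessionId)
    .digest("base64")
    .replace(/=+$/, "");
  return `s:${sessionId}.${mac}`;
}

// Verify the signature and return the raw session ID, or null if the cookie was tampered with.
function unsignSessionId(cookieValue: string, secret: string): string | null {
  if (!cookieValue.startsWith("s:")) return null;
  const signed = cookieValue.slice(2);
  const dot = signed.lastIndexOf(".");
  if (dot < 0) return null;
  const sessionId = signed.slice(0, dot);
  const expected = Buffer.from(signSessionId(sessionId, secret));
  const actual = Buffer.from(cookieValue);
  // timingSafeEqual throws on a length mismatch, so compare lengths first.
  if (expected.length !== actual.length) return null;
  return timingSafeEqual(expected, actual) ? sessionId : null;
}

// Login: store the session under "sess:<id>" and return the signed ID as the cookie value.
function login(sessionId: string, userId: number): string {
  fakeRedis.set(`sess:${sessionId}`, JSON.stringify({ userId }));
  return signSessionId(sessionId, COOKIE_SECRET);
}

// Later request: unsign the cookie, look up "sess:<id>", and rebuild the req.session data.
function loadSession(cookieValue: string): { userId?: number } | null {
  const sessionId = unsignSessionId(cookieValue, COOKIE_SECRET);
  if (sessionId === null) return null;
  const raw = fakeRedis.get(`sess:${sessionId}`);
  return raw ? JSON.parse(raw) : null;
}
```

Because the cookie is only signed, anyone can read the session ID inside it, but changing the ID invalidates the HMAC, so `loadSession` returns `null` for a tampered cookie.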

- The first time we load a page (index.js), it is server-side rendered (SSR):

browser => Next.js server => GraphQL API

The browser sends the cookie to the Next.js server, so the Next.js server has to forward it to the GraphQL API itself.

- Later requests are client-side rendered:

browser => GraphQL API

The browser sends the cookie to the GraphQL API directly.
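In the SSR case, the Next.js server has no cookie jar of its own, so the cookie has to be copied onto the GraphQL request by hand. A minimal sketch, assuming a hypothetical `graphqlFetchOptions` helper whose result is passed as fetch options to whatever GraphQL client the app uses; `incomingCookie` would come from `ctx.req.headers.cookie` on the server and be `undefined` in the browser:

```typescript
// Minimal shape of the fetch options we care about (a subset of the Fetch API's RequestInit).
interface GraphqlFetchOptions {
  credentials: "include";
  headers?: Record<string, string>;
}

// `incomingCookie` is the Cookie header of the request that reached the Next.js server
// during SSR; it is undefined when this runs in the browser.
function graphqlFetchOptions(incomingCookie?: string): GraphqlFetchOptions {
  return {
    // In the browser, "include" makes fetch attach the session cookie on its own.
    credentials: "include",
    // On the Next.js server there is no cookie jar, so forward the header explicitly.
    headers: incomingCookie ? { cookie: incomingCookie } : undefined,
  };
}
```

The cookie name in a call such as `graphqlFetchOptions("qid=...")` is only an example; it depends on the `name` configured in express-session.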

In this project, we have a `Post` entity that includes a field called `creator`, representing the post creator's information: username, id, etc. At first, I wrote an inner join in the SQL query to get the creator information, but not every post-related query needs the creator, and the same join was duplicated in both the `posts` query and the `post` query.

From Ben's video, I learned the practice of splitting one big query into smaller parts. In this case, I can move `creator` into a `@FieldResolver()`, so the creator data is fetched only when a request actually asks for it:

```typescript
@FieldResolver(() => User)
creator(@Root() post: Post) {
  // Runs once for every post the query returns
  return User.findOne(post.creatorId);
}
```

So I can strip the inner join out of the `posts` and `post` queries. When I refresh the home page, the SQL logged to the console looks something like this:

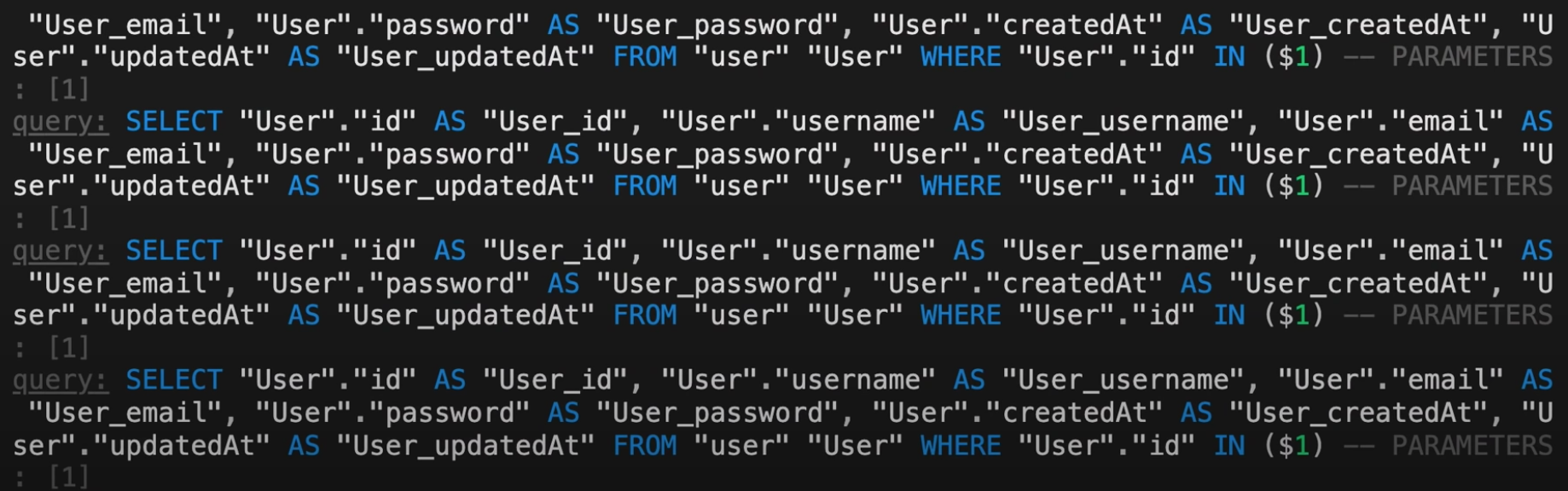

As you can see, if the home page shows 15 to 20 posts, there are 15 to 20 extra queries just to fetch creators. Moreover, in our case we have at least two sections, Popular posts and Recent posts, so the number of creator queries roughly doubles, which hurts performance. This is the classic N+1 query problem, and that's where DataLoader comes in.
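To make the N+1 pattern concrete, here is a small simulation with hypothetical types: `FakeUserTable` stands in for TypeORM's `User.findOne`, and it only counts queries rather than talking to a database.

```typescript
interface User { id: number; username: string; }
interface Post { id: number; title: string; creatorId: number; }

// A fake users table that counts how many "SELECT"s hit it.
class FakeUserTable {
  queryCount = 0;
  private rows: User[];

  constructor(rows: User[]) {
    this.rows = rows;
  }

  // One query per call, like User.findOne(id).
  async findOne(id: number): Promise<User | undefined> {
    this.queryCount++;
    return this.rows.find((u) => u.id === id);
  }
}

// Naive field resolver: resolving `creator` for every post issues one query per post.
async function resolveCreatorsNaively(posts: Post[], users: FakeUserTable) {
  return Promise.all(posts.map((p) => users.findOne(p.creatorId)));
}
```

With 20 posts written by just 2 users, `queryCount` ends at 20: one query per post, even though only 2 distinct users exist.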

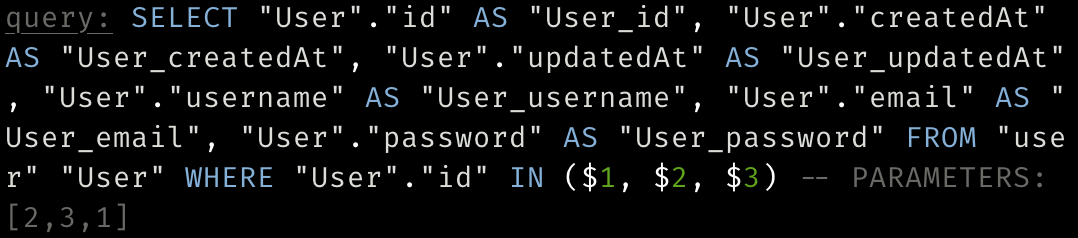

With DataLoader, all `creator` lookups made while resolving one request are batched into a single query, and each user is cached for the lifetime of the loader, which reduces the total number of queries we have to run.
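The batching and caching described above can be illustrated with a stripped-down loader. `TinyLoader` is a hypothetical teaching sketch, not the `dataloader` package: it collects keys requested in the same tick, deduplicates them through its cache, and calls the batch function once. The real library also handles batch size limits, custom scheduling, cache priming and clearing, and per-key errors.

```typescript
type BatchFn<K, V> = (keys: readonly K[]) => Promise<V[]>;

interface Pending<K, V> {
  key: K;
  resolve: (value: V) => void;
  reject: (error: unknown) => void;
}

class TinyLoader<K, V> {
  private batchFn: BatchFn<K, V>;
  private cache = new Map<K, Promise<V>>();
  private queue: Pending<K, V>[] = [];

  constructor(batchFn: BatchFn<K, V>) {
    this.batchFn = batchFn;
  }

  load(key: K): Promise<V> {
    // The same key requested twice reuses the same promise, so no extra query.
    const cached = this.cache.get(key);
    if (cached) return cached;
    const promise = new Promise<V>((resolve, reject) => {
      this.queue.push({ key, resolve, reject });
      // The first key of a new batch schedules one flush after the current synchronous code.
      if (this.queue.length === 1) void Promise.resolve().then(() => this.flush());
    });
    this.cache.set(key, promise);
    return promise;
  }

  private async flush(): Promise<void> {
    const batch = this.queue;
    this.queue = [];
    try {
      // One call to the batch function for every key queued in this tick.
      const values = await this.batchFn(batch.map((item) => item.key));
      batch.forEach((item, i) => item.resolve(values[i]));
    } catch (err) {
      batch.forEach((item) => item.reject(err));
    }
  }
}
```

Four `load` calls for ids 1, 7, 1 and 8 trigger one batch call with `[1, 7, 8]`; the second `load(1)` reuses the cached promise.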

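```typescript
@FieldResolver(() => User)
async creator(@Root() post: Post, @Ctx() { userLoader }: MyContext) {
  // Queues post.creatorId; DataLoader resolves every queued id with one batch call
  return await userLoader.load(post.creatorId);
}
```

We create `userLoader` to batch and cache creator lookups and put it in our GraphQL context so that any resolver can use it. The loader should be created fresh for each request (in the context function), so cached users are not shared between requests.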

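```typescript
import DataLoader from "dataloader";
import { User } from "../entities/User"; // path assumed; depends on the project layout

/**
 * createUserLoader batches creator queries into one
 *
 * userIds: [1, 7, 8, 10, 9]
 * users: [{id: 1, username: "ben"}, {id: 7, username: "bob"}, {...}]
 */
export const createUserLoader = () =>
  new DataLoader<number, User>(async (userIds) => {
    // One "SELECT ... WHERE id IN (...)" for the whole batch
    const users = await User.findByIds(userIds as number[]);
    const userIdToUser: Record<number, User> = {};
    users.forEach((u) => {
      userIdToUser[u.id] = u;
    });
    // DataLoader expects one result per key, in the same order as userIds
    return userIds.map((userId) => userIdToUser[userId]);
  });
```

Now, when we refresh the home page, the creator lookups are batched into a single query instead of one query per post.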
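The `userIdToUser` lookup in `createUserLoader` exists because a `WHERE id IN (...)` query does not promise any row order and simply skips ids that don't exist, while DataLoader needs exactly one result per key, in key order. A generic version of that step, under the hypothetical name `alignToKeys`, which returns `null` for missing ids:

```typescript
// Re-align rows from a batched query with the keys that were requested.
// Missing rows become null so the result always has one entry per key.
function alignToKeys<K, V>(keys: readonly K[], rows: V[], getKey: (row: V) => K): (V | null)[] {
  const byKey = new Map<K, V>();
  for (const row of rows) byKey.set(getKey(row), row);
  return keys.map((key) => byKey.get(key) ?? null);
}

// Rows come back in a different order than requested, and id 99 does not exist.
const rows = [{ id: 7, username: "bob" }, { id: 1, username: "ben" }];
const aligned = alignToKeys([1, 7, 99], rows, (u) => u.id);
// aligned → [{ id: 1, username: "ben" }, { id: 7, username: "bob" }, null]
```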

- User does something that triggers a notification (likes your post, comments on your post, sends a subscribe request, etc.)

- Screen to view all notifications

- Mark notifications as read

- Queue

- Push notifications

- Toggle notifications on/off
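The notification features listed above could map onto a data model roughly like this. It is a hypothetical, in-memory sketch (`NotificationCenter`, `AppNotification` and the kind names are invented for illustration); queueing and push delivery are left out, and a real version would persist to the database.

```typescript
type NotificationKind = "like" | "comment" | "subscribe_request";

interface AppNotification {
  id: number;
  recipientId: number;
  actorId: number;
  kind: NotificationKind;
  read: boolean;
}

class NotificationCenter {
  private notifications: AppNotification[] = [];
  private disabled = new Set<number>(); // recipients who switched notifications off
  private nextId = 1;

  // A user action (like, comment, subscribe request) triggers a notification.
  notify(recipientId: number, actorId: number, kind: NotificationKind): AppNotification | null {
    if (this.disabled.has(recipientId)) return null;
    const n: AppNotification = { id: this.nextId++, recipientId, actorId, kind, read: false };
    this.notifications.push(n);
    return n;
  }

  // Backs the "view all notifications" screen, newest first.
  listFor(recipientId: number): AppNotification[] {
    return this.notifications.filter((n) => n.recipientId === recipientId).reverse();
  }

  // Mark the given notifications as read (only those owned by the recipient).
  markAsRead(recipientId: number, ids: number[]): void {
    for (const n of this.notifications) {
      if (n.recipientId === recipientId && ids.includes(n.id)) n.read = true;
    }
  }

  // Toggle notifications on/off for a user.
  setEnabled(recipientId: number, enabled: boolean): void {
    if (enabled) this.disabled.delete(recipientId);
    else this.disabled.add(recipientId);
  }
}
```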

- React (hooks)

- TypeScript (version 4.0)

- Elixir

- PostgreSQL

- Node.js

- RabbitMQ

Why?