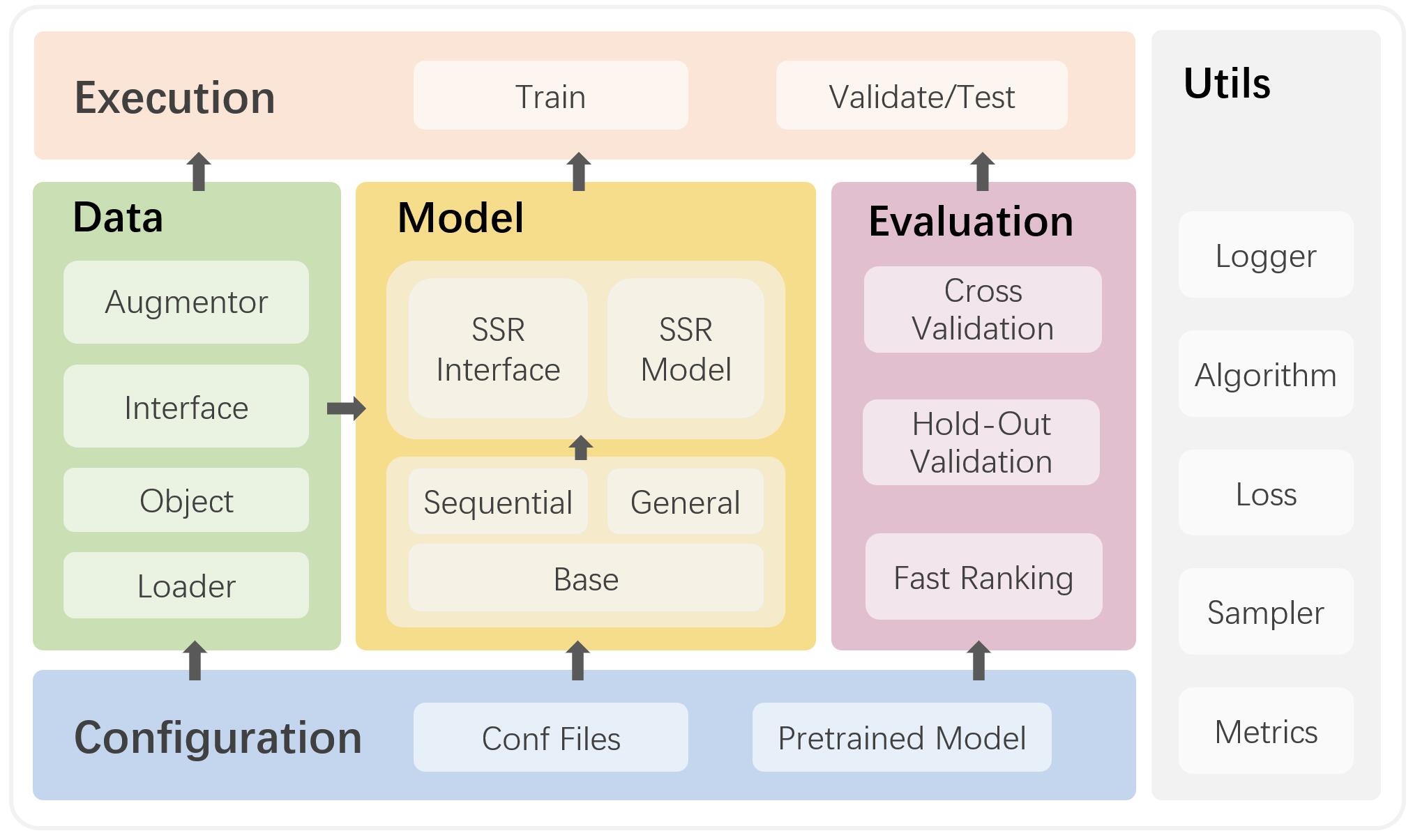

SELFRec is a Python framework for self-supervised recommendation (SSR) that integrates commonly used datasets and metrics and implements many state-of-the-art SSR models. SELFRec has a lightweight architecture and provides user-friendly interfaces, which facilitate model implementation and evaluation.

Founders and principal contributors: @Coder-Yu, @xiaxin1998

This repo is released with our survey paper on self-supervised learning for recommender systems. We organized a tutorial on self-supervised recommendation at WWW'22. Visit the tutorial page for more information.

Supported by:

Prof. Hongzhi Yin, The University of Queensland, Australia

Prof. Shazia Sadiq, ARC Training Centre for Information Resilience (CIRES), University of Queensland, Australia

- Fast execution: SELFRec is compatible with Python 3.9+, TensorFlow 1.14+ (optional), and PyTorch 1.8+, and it supports GPU acceleration. We also optimize the time-consuming item-ranking procedure, reducing ranking time to seconds.

- Easy configuration: SELFRec provides simple and high-level interfaces, making it easy to add new SSR models in a plug-and-play fashion.

- Highly modularized: SELFRec is divided into multiple independent modules. This design decouples model design from the other procedures, so users can focus on the logic of their method, which streamlines development.

- SSR-Specific: SELFRec is designed specifically for SSR. It provides specific modules and interfaces for rapid development of data augmentation and self-supervised tasks.
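One common way to speed up item ranking is to select the top-k items with a bounded heap rather than fully sorting every item's score, which costs O(n log k) instead of O(n log n). The sketch below illustrates that idea with Python's `heapq.nlargest`; it is an assumption for illustration, not SELFRec's own ranking code, and `top_k_items` is a hypothetical helper.

```python
import heapq
from typing import Iterable, List, Sequence


def top_k_items(scores: Sequence[float], k: int, exclude: Iterable[int] = ()) -> List[int]:
    """Return the indices of the k highest-scoring items.

    Items in `exclude` (e.g. items the user already interacted with during
    training) are skipped so they are never recommended again.
    """
    excluded = set(exclude)
    candidates = (i for i in range(len(scores)) if i not in excluded)
    # nlargest keeps a heap of size k, so the full score list is never sorted.
    return heapq.nlargest(k, candidates, key=scores.__getitem__)
```

For example, `top_k_items([0.1, 0.9, 0.4, 0.8, 0.7], 2, exclude={1})` skips item 1 and returns the next two best items, `[3, 4]`.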

- Run `pip install -r requirements.txt` in the SELFRec directory.

- Configure the `xx.yaml` file in `./conf` (where `xx` is the name of the model you want to run).

- Run `main.py` and choose the model you want to run.
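The last two steps tie each model name to a config file in `./conf`. The sketch below is hypothetical and is not SELFRec's actual `main.py`: `AVAILABLE_MODELS`, `resolve_choice`, and `config_path` are illustrative names showing one way a launcher can map a menu choice to a model name and its `xx.yaml` path.

```python
from pathlib import Path
from typing import Sequence

# Hypothetical menu; SELFRec's main.py defines its own list of models.
AVAILABLE_MODELS = ["SASRec", "CL4SRec", "BERT4Rec"]


def resolve_choice(choice: str, models: Sequence[str] = AVAILABLE_MODELS) -> str:
    """Map a 1-based menu number or a case-insensitive model name to a model name."""
    choice = choice.strip()
    if choice.isdigit():
        index = int(choice) - 1
        if 0 <= index < len(models):
            return models[index]
    else:
        for name in models:
            if name.lower() == choice.lower():
                return name
    raise ValueError(f"unknown model: {choice!r}")


def config_path(model_name: str, conf_dir: str = "./conf") -> Path:
    """Return the config file expected for a model, e.g. conf/SASRec.yaml."""
    return Path(conf_dir) / f"{model_name}.yaml"
```

A launcher would print the numbered menu, read the user's answer with `input()`, pass it to `resolve_choice`, and load the file returned by `config_path`.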

| Model | Paper | Type | Code |
|---|---|---|---|
| SASRec | Kang et al. Self-Attentive Sequential Recommendation, ICDM'18. | Sequential | PyTorch |
| CL4SRec | Xie et al. Contrastive Learning for Sequential Recommendation, ICDE'22. | Sequential | PyTorch |
| BERT4Rec | Sun et al. BERT4Rec: Sequential Recommendation with Bidirectional Encoder Representations from Transformer, CIKM'19. | Sequential | PyTorch |

General hyperparameter settings: batch_size=2048, emb_size=64, learning rate=0.001, L2 reg=0.0001.

| Model | Recall@20 | NDCG@20 | Hyperparameter settings |
|---|---|---|---|
| MF | 0.0543 | 0.0445 | |
| LightGCN | 0.0639 | 0.0525 | layer=3 |
| NCL | 0.0670 | 0.0562 | layer=3, ssl_reg=1e-6, proto_reg=1e-7, tau=0.05, hyper_layers=1, alpha=1.5, num_clusters=2000 |
| SGL | 0.0675 | 0.0555 | λ=0.1, ρ=0.1, tau=0.2, layer=3 |
| MixGCF | 0.0691 | 0.0577 | layer=3, n_nes=64 |
| DirectAU | 0.0695 | 0.0583 | γ=2, layer=3 |
| SimGCL | 0.0721 | 0.0601 | λ=0.5, eps=0.1, tau=0.2, layer=3 |
| XSimGCL | 0.0723 | 0.0604 | λ=0.2, eps=0.2, l∗=1, tau=0.15, layer=3 |
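Recall@20 and NDCG@20 are top-k ranking metrics computed per user and then averaged. A minimal sketch of their common binary-relevance definitions follows; SELFRec's own evaluation code may differ in details, such as how users without test items are handled, and the function names here are illustrative.

```python
import math
from typing import Callable, Dict, Sequence, Set


def recall_at_k(ranked: Sequence[int], relevant: Set[int], k: int) -> float:
    """Fraction of a user's held-out items that appear in the top-k list."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / len(relevant)


def ndcg_at_k(ranked: Sequence[int], relevant: Set[int], k: int) -> float:
    """Binary-relevance NDCG@k: DCG of the top-k list divided by the ideal DCG."""
    # A hit at 0-based position p contributes 1 / log2(p + 2).
    dcg = sum(1.0 / math.log2(pos + 2)
              for pos, item in enumerate(ranked[:k]) if item in relevant)
    # The ideal list places all relevant items (at most k of them) first.
    ideal_hits = min(len(relevant), k)
    idcg = sum(1.0 / math.log2(pos + 2) for pos in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0


def mean_metric(metric: Callable[[Sequence[int], Set[int], int], float],
                rankings: Dict[str, Sequence[int]],
                ground_truth: Dict[str, Set[int]], k: int) -> float:
    """Average a per-user metric over users that have at least one test item."""
    users = [u for u, items in ground_truth.items() if items]
    if not users:
        return 0.0
    return sum(metric(rankings[u], ground_truth[u], k) for u in users) / len(users)
```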

- Create a `.yaml` configuration file for your model in the `conf` directory.

- Make your model inherit the proper base class.

- Reimplement the following functions:

  - `build()`, `train()`, `save()`, `predict()`

- Register your model in `main.py`.
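The steps above can be sketched as follows. `BaseRecommender`, `MostPop`, and `MODEL_REGISTRY` are hypothetical names made up for illustration; SELFRec's real base classes and constructor signatures differ, so check the repository's base classes before following this pattern. The toy model simply recommends the most popular items.

```python
from abc import ABC, abstractmethod
from collections import Counter
from typing import Dict, List, Tuple


class BaseRecommender(ABC):
    """Hypothetical stand-in for a SELFRec base class."""

    def __init__(self, conf: dict, train_data: List[Tuple[str, str]]):
        self.conf = conf
        self.train_data = train_data  # list of (user, item) interactions

    @abstractmethod
    def build(self) -> None:
        """Create model parameters and data structures."""

    @abstractmethod
    def train(self) -> None:
        """Fit the model to the training interactions."""

    @abstractmethod
    def save(self) -> None:
        """Keep the best model state found so far."""

    @abstractmethod
    def predict(self, user: str) -> Dict[str, float]:
        """Return a score for every candidate item."""


class MostPop(BaseRecommender):
    """Toy model: an item's score is how often it appears in training data."""

    def build(self) -> None:
        self.item_counts: Counter = Counter()
        self.best_scores: Dict[str, float] = {}

    def train(self) -> None:
        self.item_counts = Counter(item for _, item in self.train_data)

    def save(self) -> None:
        # Snapshot the trained state so predict() uses the saved version.
        self.best_scores = {item: float(c) for item, c in self.item_counts.items()}

    def predict(self, user: str) -> Dict[str, float]:
        # Popularity is user-independent, so every user gets the same scores.
        return dict(self.best_scores)


# Hypothetical registry playing the role of "register your model in main.py".
MODEL_REGISTRY = {"MostPop": MostPop}
```

Looking a model up by name in a registry keeps the launcher unchanged when new models are added: only the registry entry and the config file are new.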

| Data Set | Users | Items | Ratings | Scale | Density | Context Users | Context Links | Link Type |
|---|---|---|---|---|---|---|---|---|
| Douban | 2,848 | 39,586 | 894,887 | [1, 5] | 0.794% | 2,848 | 35,770 | Trust |
| LastFM | 1,892 | 17,632 | 92,834 | implicit | 0.27% | 1,892 | 25,434 | Trust |
| Yelp | 19,539 | 21,266 | 450,884 | implicit | 0.11% | 19,539 | 864,157 | Trust |
| Amazon-Book | 52,463 | 91,599 | 2,984,108 | implicit | 0.062% | - | - | - |
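The Density column is the number of ratings divided by the size of the user-item matrix (users × items). The sketch below recomputes it from counts or from raw interactions; the helper names are illustrative, and counting each distinct (user, item) pair once is an assumption about how the Ratings column was produced.

```python
from typing import Hashable, Iterable, Tuple


def density_percent(num_ratings: int, num_users: int, num_items: int) -> float:
    """Share of the user-item matrix that is observed, as a percentage."""
    return 100.0 * num_ratings / (num_users * num_items)


def stats_from_interactions(pairs: Iterable[Tuple[Hashable, Hashable]]) -> dict:
    """Count users, items, and distinct (user, item) pairs, then derive density."""
    unique_pairs = set(pairs)
    if not unique_pairs:
        raise ValueError("no interactions given")
    users = {u for u, _ in unique_pairs}
    items = {i for _, i in unique_pairs}
    return {
        "users": len(users),
        "items": len(items),
        "ratings": len(unique_pairs),
        "density_percent": density_percent(len(unique_pairs), len(users), len(items)),
    }
```

For Douban, 894,887 / (2,848 × 39,586) ≈ 0.794%, which matches the table.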

@article{yu2023self,
  title={Self-supervised learning for recommender systems: A survey},
  author={Yu, Junliang and Yin, Hongzhi and Xia, Xin and Chen, Tong and Li, Jundong and Huang, Zi},
  journal={IEEE Transactions on Knowledge and Data Engineering},
  year={2023},
  publisher={IEEE}
}