This repo contains the work from my Master's degree thesis, developed at Addfor s.p.a.

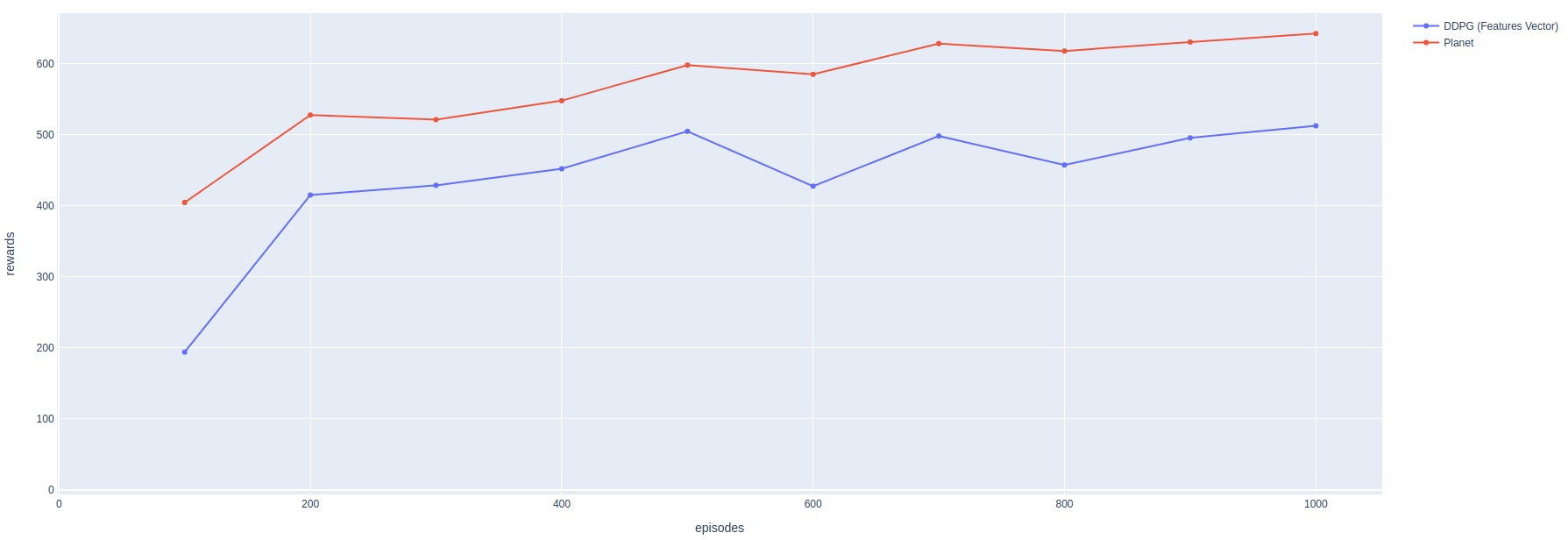

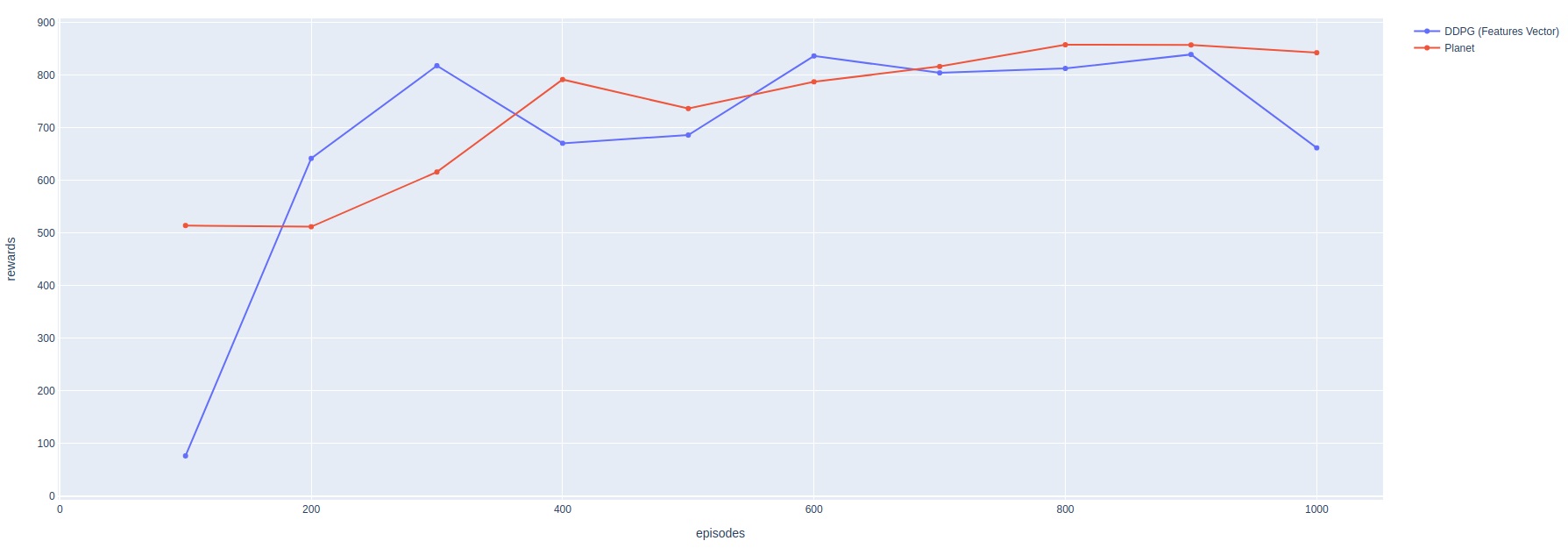

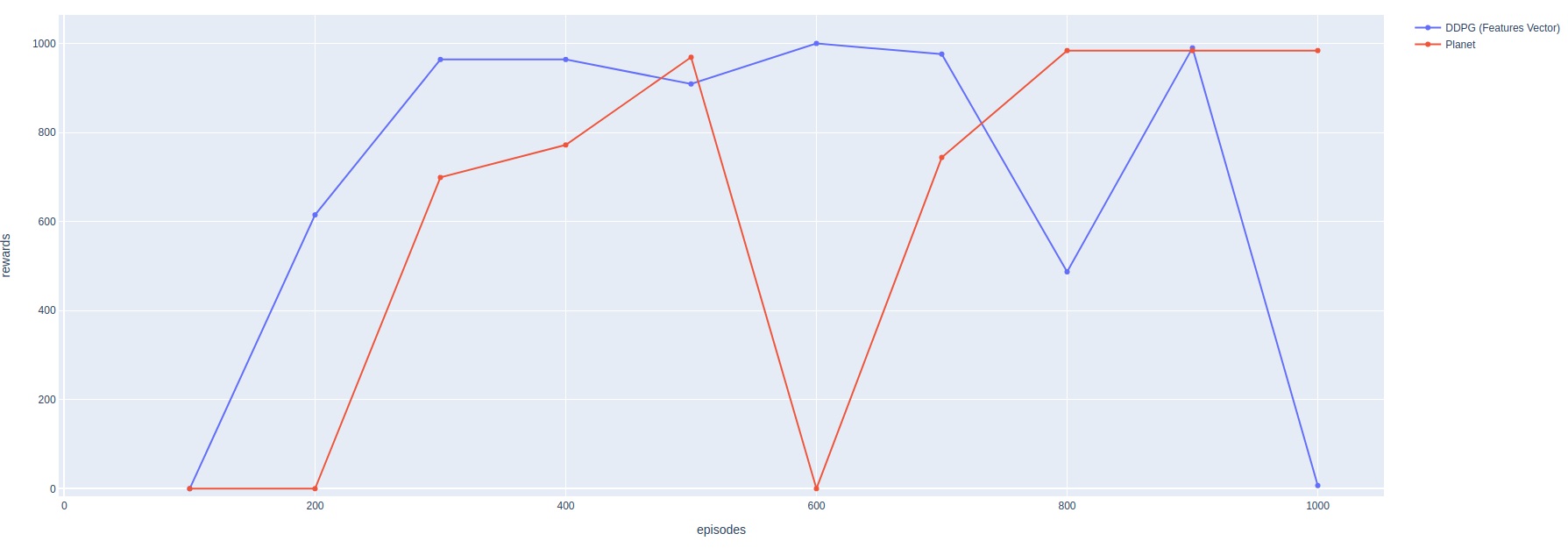

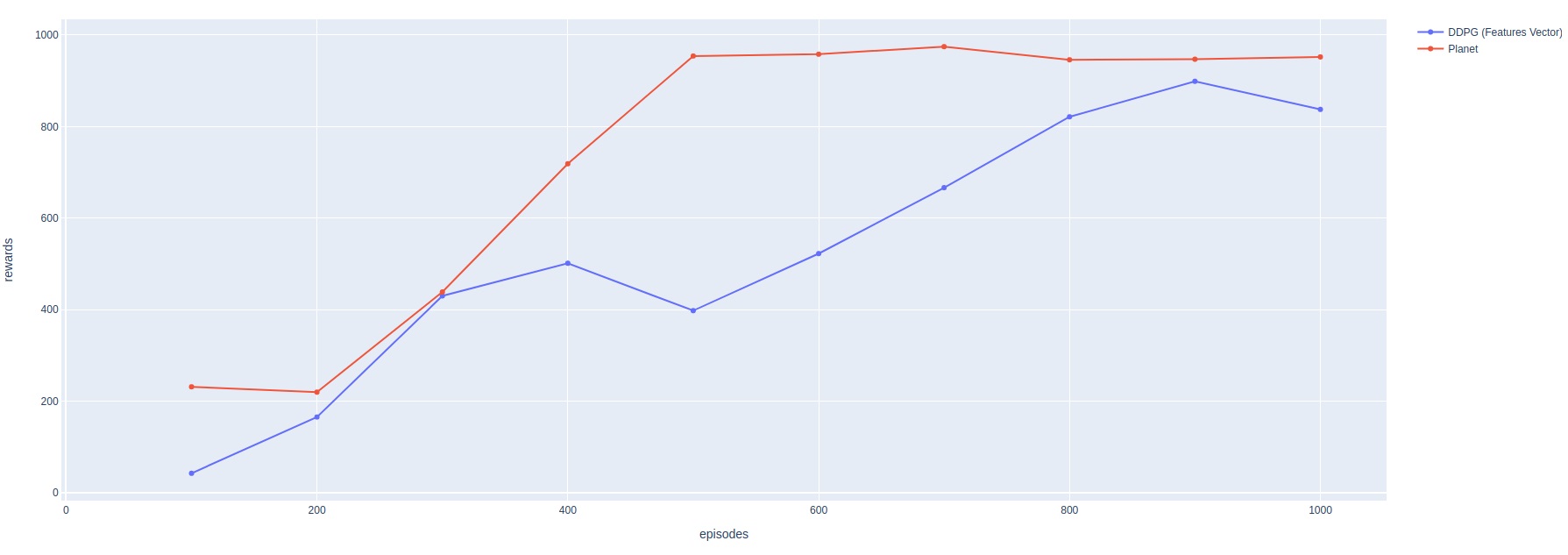

I used PlaNet [1] to show that model-based DRL can outperform model-free algorithms in terms of sample efficiency.

My implementation of PlaNet is based on Kaixhin's, but it achieves better results. I also experimented with a regularizer based on a denoising autoencoder (DAE) to reduce the gap between the real and the predicted rewards.

The company asked me not to publish that feature, but you can find a full explanation in my blog article (or you can contact me).

General overview of the PlaNet model architecture. For a full explanation, see the blog article.
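To give an idea of how PlaNet chooses actions, here is a minimal sketch of planning with the cross-entropy method (CEM): sample candidate action sequences, roll them out through the model, refit a per-step Gaussian to the best candidates, and execute only the first action before replanning. This is not the code in this repo. `toy_model` is a hypothetical scalar stand-in for PlaNet's learned latent transition and reward models, and the defaults (horizon 12, 10 iterations, 1000 candidates, top 100) mirror the planner hyperparameters reported in the PlaNet paper.

```python
import random
import statistics


def toy_model(state, action):
    """Hypothetical stand-in for PlaNet's learned transition and reward models."""
    next_state = state + action
    reward = -(next_state - 1.0) ** 2  # reward is highest when the state sits at 1.0
    return next_state, reward


def cem_plan(state, model, horizon=12, iterations=10, candidates=1000, top_k=100, seed=0):
    """Cross-entropy method over action sequences; returns the first planned action."""
    rng = random.Random(seed)
    mean = [0.0] * horizon  # per-step Gaussian belief over actions
    std = [1.0] * horizon
    for _ in range(iterations):
        scored = []
        for _ in range(candidates):
            # Sample an action sequence and clip it to the action bounds [-1, 1].
            actions = [max(-1.0, min(1.0, rng.gauss(m, s))) for m, s in zip(mean, std)]
            # Roll the sequence out through the model and sum the predicted rewards.
            s, total = state, 0.0
            for a in actions:
                s, r = model(s, a)
                total += r
            scored.append((total, actions))
        # Keep the highest-return candidates and refit the belief to them.
        scored.sort(key=lambda item: item[0], reverse=True)
        elites = [actions for _, actions in scored[:top_k]]
        mean = [statistics.fmean(step) for step in zip(*elites)]
        std = [statistics.pstdev(step) + 1e-6 for step in zip(*elites)]
    # Model-predictive control: only the first action is executed, then we replan.
    return mean[0]


if __name__ == "__main__":
    print(cem_plan(0.0, toy_model))
```

Starting from state 0.0, the planner returns a positive first action that pushes the state toward the rewarded value 1.0; starting from 2.0 it returns a negative one. Replanning at every step is what lets this scheme tolerate errors in the learned model.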

- Part 1: Deep Reinforcement Learning doesn’t really work… Yet

- Part 4: Our quest to make Reinforcement Learning 200 times more efficient

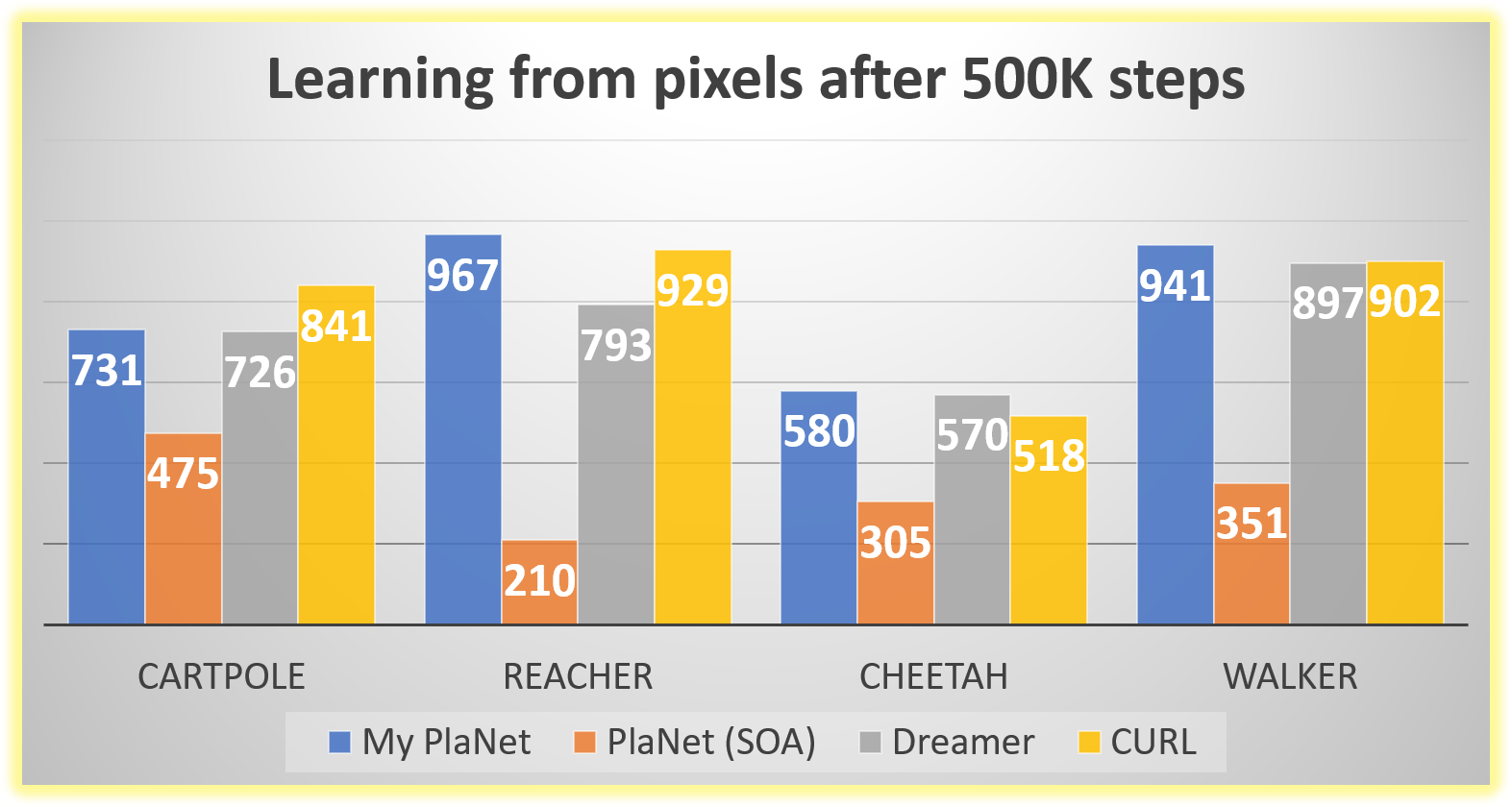

Comparison data are from: CURL: Contrastive Unsupervised Representations for Reinforcement Learning. Laskin, M., Srinivas, A., & Abbeel, P. (2020, July).

- Python 3

- DeepMind Control Suite (optional)

- Gym

- OpenCV Python

- Plotly

- PyTorch

- Overcoming the limits of DRL using a model-based approach

- Introducing PlaNet: A Deep Planning Network for Reinforcement Learning

- Kaixhin/planet

- google-research/planet

- CURL: Contrastive Unsupervised Representations for Reinforcement Learning

- @Kaixhin for the reimplementation of google-research/planet

[1] Learning Latent Dynamics for Planning from Pixels

[2] Overcoming the limits of DRL using a model-based approach