We will provide our PyTorch implementation and pretrained models for our paper very soon.

Semantic Image Synthesis with Spatially-Adaptive Normalization.

Taesung Park, Ming-Yu Liu, Ting-Chun Wang, and Jun-Yan Zhu.

In CVPR 2019 (Oral).

In the meantime, please visit our project webpage for more information.

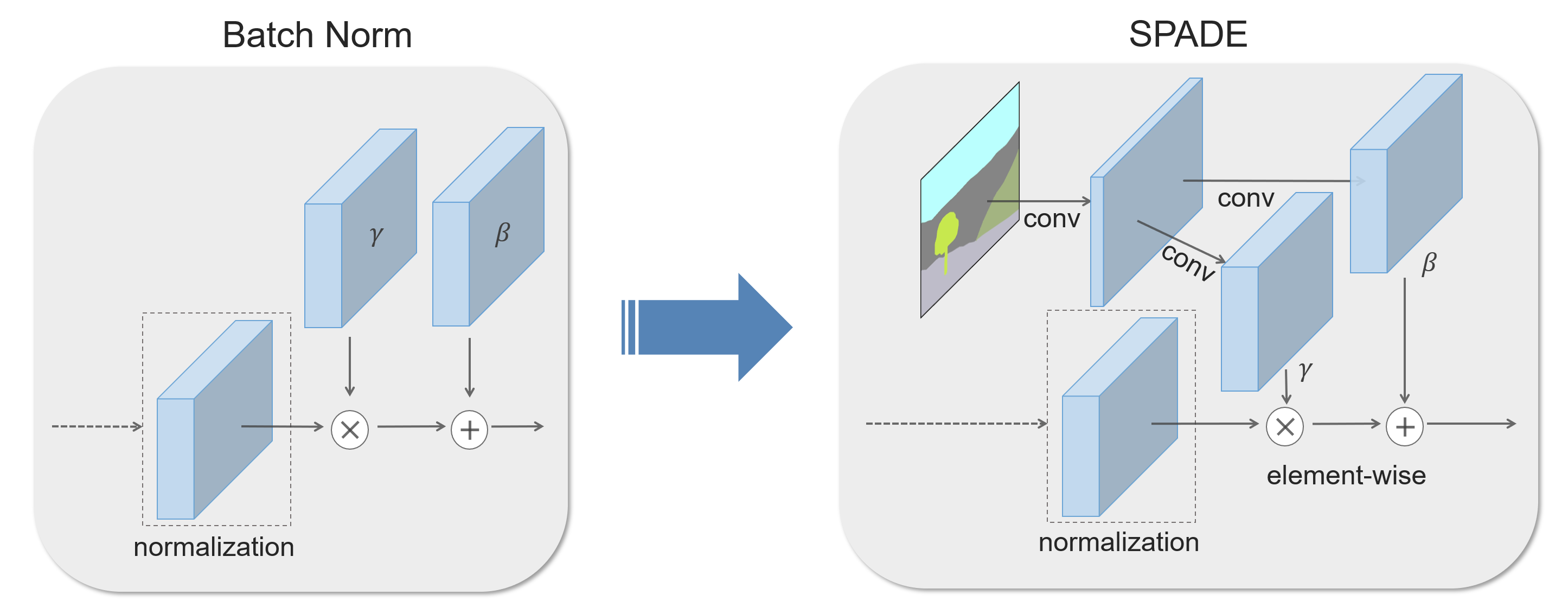

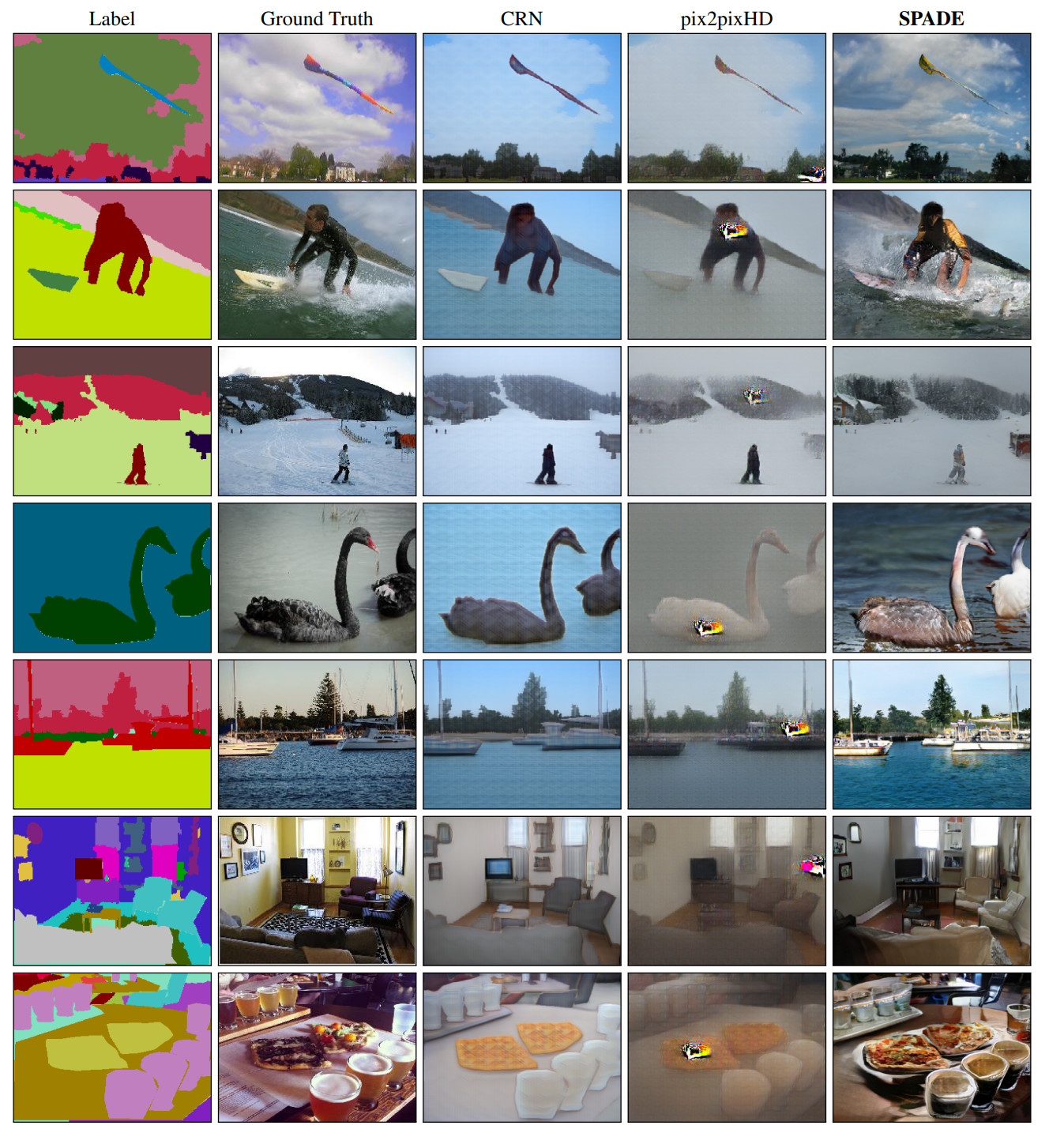

Many common normalization techniques, such as Batch Normalization (Ioffe et al., 2015), apply a learned affine layer (as in PyTorch and TensorFlow) after the actual normalization step. In SPADE, the affine layer is learned from the semantic segmentation map. This is similar to Conditional Normalization (De Vries et al., 2017 and Dumoulin et al., 2016), except that the learned affine parameters now need to be spatially adaptive, which means we use a different scaling and bias for each semantic label. With this simple method, the semantic signal can act on all layer outputs, unaffected by the normalization process, which may otherwise lose such information. Moreover, because the semantic information is provided via SPADE layers, a random latent vector may be used as input to the network, which makes it possible to manipulate the style of the generated images.
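Until the official code is released, the idea can be illustrated with a minimal PyTorch sketch. This is not the authors' implementation: the class name `SPADESketch`, the hidden width, the 3x3 kernels, and the two-convolution structure are all assumptions made for illustration. It only shows the mechanism described above: a normalization step without its own affine parameters, followed by a per-pixel scale and bias predicted from the segmentation map.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SPADESketch(nn.Module):
    """Illustrative spatially-adaptive normalization layer (hypothetical, not the released code)."""

    def __init__(self, num_features, num_labels, hidden=64):
        super().__init__()
        # Normalization without learned affine parameters; the affine step is supplied below.
        self.norm = nn.BatchNorm2d(num_features, affine=False)
        # Small conv net mapping the label map to per-pixel scale and bias (sizes are assumptions).
        self.shared = nn.Sequential(
            nn.Conv2d(num_labels, hidden, kernel_size=3, padding=1),
            nn.ReLU(),
        )
        self.to_gamma = nn.Conv2d(hidden, num_features, kernel_size=3, padding=1)
        self.to_beta = nn.Conv2d(hidden, num_features, kernel_size=3, padding=1)

    def forward(self, x, segmap):
        # x: features of shape (N, C, H, W); segmap: one-hot labels of shape (N, num_labels, H', W').
        normalized = self.norm(x)
        # Resize the label map to the feature resolution; nearest-neighbor keeps labels discrete.
        segmap = F.interpolate(segmap, size=x.shape[2:], mode="nearest")
        hidden = self.shared(segmap)
        gamma = self.to_gamma(hidden)  # spatially varying scale, shape (N, C, H, W)
        beta = self.to_beta(hidden)    # spatially varying bias, shape (N, C, H, W)
        return gamma * normalized + beta


# Usage: 5 semantic labels, 8 feature channels, label map at twice the feature resolution.
layer = SPADESketch(num_features=8, num_labels=5)
x = torch.randn(2, 8, 16, 16)
labels = torch.randint(0, 5, (2, 32, 32))
segmap = F.one_hot(labels, num_classes=5).permute(0, 3, 1, 2).float()
out = layer(x, segmap)
print(out.shape)
```

Because `gamma` and `beta` are computed from the segmentation map at every layer where such a module is used, the label information is re-injected after each normalization step instead of being fed to the network only once at the input.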

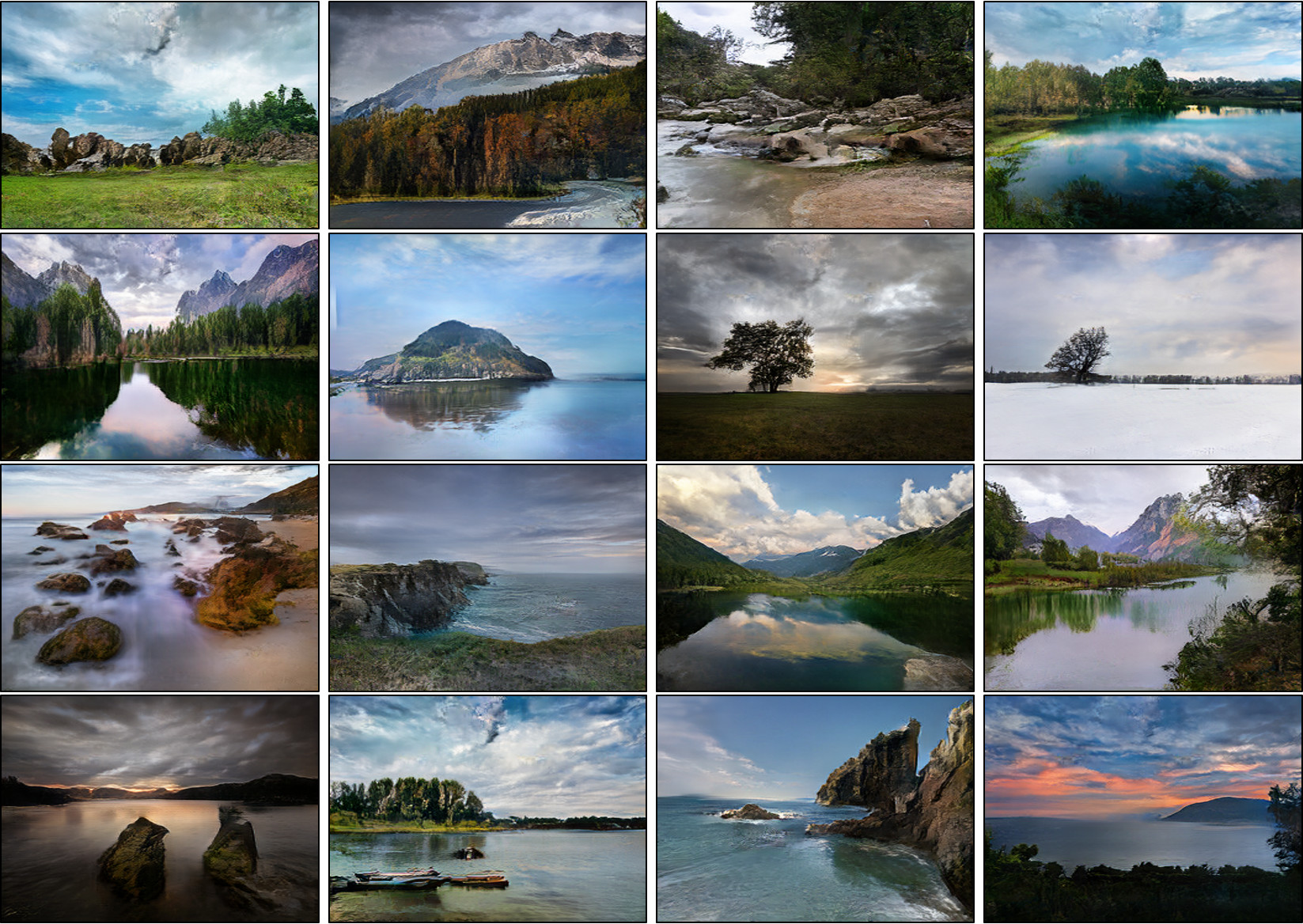

Since SPADE works on diverse labels, it can be trained with an existing semantic segmentation network to learn the reverse mapping from semantic maps to photos. These images were generated by a SPADE model trained on 40k images scraped from Flickr.

If you use this code for your research, please cite our paper.

@inproceedings{park2019SPADE,
  title={Semantic Image Synthesis with Spatially-Adaptive Normalization},
  author={Park, Taesung and Liu, Ming-Yu and Wang, Ting-Chun and Zhu, Jun-Yan},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  year={2019}
}

- A. Hertzmann, C. Jacobs, N. Oliver, B. Curless, D. Salesin. "Image Analogies", in SIGGRAPH 2001.

- V. Dumoulin, J. Shlens, and M. Kudlur. "A learned representation for artistic style", in ICLR 2016.

- H. De Vries, F. Strub, J. Mary, H. Larochelle, O. Pietquin, and A. C. Courville. "Modulating early visual processing by language", in NeurIPS 2017.

- T. Wang, M. Liu, J.-Y. Zhu, A. Tao, J. Kautz, and B. Catanzaro. "High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs", in CVPR 2018. (pix2pixHD)

- P. Isola, J.-Y. Zhu, T. Zhou, and A. A. Efros. "Image-to-Image Translation with Conditional Adversarial Networks", in CVPR 2017. (pix2pix)

- Q. Chen and V. Koltun. "Photographic image synthesis with cascaded refinement networks", in ICCV 2017. (CRN)

We thank Alyosha Efros and Jan Kautz for insightful advice. Taesung Park contributed to the work during his internship at NVIDIA. His Ph.D. is supported by the Samsung Scholarship.