
Using TAU to Profile and/or Trace ADIOS

Instructions tested with ADIOS2 v2.4.0 release on September 5, 2019

ADIOS2 performance can be measured using the tau_exec program which is included in TAU (https://github.com/UO-OACISS/tau2). TAU configuration should auto-detect the architecture that it is building on, and with systems that require cross-compilation (Cray) it will build the measurement library for the compute nodes and the front-end tools for the login nodes. To configure/install TAU, do one of the following (for more details, see the main Installing TAU page):

With a recent Spack (https://github.com/spack/spack) installation, do:

spack install tau@develop

By default, that builds TAU with binutils, libdwarf, libelf, and libunwind (all used for sampling, call-site resolution, and address resolution in compiler-based instrumentation), otf2 (tracing support), papi (hardware counter support), PDT (auto-instrumentation support), and pthread (threading support). That is, the TAU configuration in Spack will look something like: tau@develop%[email protected]~bgq+binutils~comm~craycnl~cuda~gasnet+libdwarf+libelf+libunwind~likwid~mpi~ompt~opari~openmp+otf2+papi+pdt~phase~ppc64le+pthreads~python~scorep~shmem~x86_64. If your only interest is in using TAU to measure ADIOS2, you can save time and space by installing TAU without PDT:

spack install tau@develop~pdt

Note: As of September 5, 2019, the TAU Spack package was missing support for the POSIX I/O wrapper. Support has been submitted as a pull request; if it has not yet been merged and you want POSIX I/O wrapper support, use this package file: https://github.com/khuck/spack/blob/develop/var/spack/repos/builtin/packages/tau/package.py

Alternatively, build TAU from source. TAU will download and build many of its dependencies; the exception is PAPI, which is usually pre-installed on the system. TAU assumes the GCC compilers by default. For ABI compatibility, build TAU with the same compiler and MPI configuration used for ADIOS2 and the application(s). Unless -prefix is given, TAU installs in place, in tau2/$arch.

$ git clone https://github.com/UO-OACISS/tau2.git

$ cd tau2

$ ./configure -bfd=download -dwarf=download -elf=download -unwind=download -otf=download -papi=/path/to/papi -pthread -mpi -iowrapper -prefix=/path/to/installation

$ make -j install

When using different compilers (e.g., PGI), specify them on the configure line like so (for historical reasons, the Fortran compiler is in most cases specified by vendor, not by name):

$ ./configure -cc=pgcc -c++=pgc++ -fortran=pgi ...

Using TAU at runtime requires only the tau_exec wrapper script. First, make sure that TAU is in your path. If you installed with Spack and you have used either spack bootstrap or installed modules with Spack, you can use spack load tau. For all others:

# bash:

$ export PATH=$PATH:/home/users/khuck/src/tau2/x86_64/bin

# csh:

> setenv PATH ${PATH}:/home/users/khuck/src/tau2/x86_64/bin
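Before launching a measured run, it can help to confirm that the TAU tools are actually reachable. The following is a minimal sketch; require_tools is a hypothetical helper name, not part of TAU, and it only checks that each named program can be found on PATH.

```shell
# Hypothetical helper (not part of TAU): succeed only if every
# named program can be found on PATH.
require_tools() {
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing from PATH: $tool" >&2
            return 1
        fi
    done
}
```

For example, running require_tools tau_exec tau-config pprof before the job script continues would stop early if the PATH setting above did not take effect.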

The primary argument passed to tau_exec is the TAU configuration (because many different TAU configurations/builds can live in the same installation directory). To see which configurations are available, use the tau-config program. In the following example, there is only one configuration.

$ tau-config --list-matching

shared-papi-mpi-pthread

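Pass the configuration name to tau_exec with the -T option, replacing the dashes (-) with commas (,), and insert tau_exec into the mpirun/srun/jsrun command in front of the application to be measured. For example: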

$ mpirun -n 12 ./heatSimulation sim.bp 4 3 5 10 10 10 : \

-n 2 ./heatAnalysis sim.bp analysis.bp 2 1

becomes (to measure the heatSimulation application):

$ mpirun -n 12 tau_exec -T shared,papi,mpi,pthread ./heatSimulation sim.bp 4 3 5 10 10 10 : \

-n 2 ./heatAnalysis sim.bp analysis.bp 2 1
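The dash-to-comma conversion described above can be scripted. This is a small sketch; tau_T_arg is a hypothetical helper name introduced here for illustration.

```shell
# Hypothetical helper: convert a configuration name as printed by
# tau-config (dash-separated) into the comma-separated form that
# tau_exec -T expects.
tau_T_arg() {
    printf '%s\n' "$1" | tr '-' ','
}

tau_T_arg shared-papi-mpi-pthread
```

The call at the end prints shared,papi,mpi,pthread, which is the value used with -T in the mpirun example above.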

Other useful arguments to tau_exec:

- -ebs will enable sampling

- -memory will enable memory usage tracking (malloc/free)

- -io will enable the POSIX I/O wrapper

- -cupti will enable CUDA/CUPTI measurement (TAU needs to be configured with -cuda=${OLCF_CUDA_ROOT})

- -opencl will enable the OpenCL wrapper (TAU needs to be configured with -opencl=/path/to/opencl/headers)

- -papi_components will enable a plugin to read OS/HW metrics periodically (see tau2/examples/papi_components or https://github.com/UO-OACISS/tau2/wiki/Using-the-PAPI-Components-Plugin for more information)
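These arguments go between the -T configuration and the application name. The sketch below only prints a command line so it can be inspected before use; tau_cmd is a hypothetical helper, and combining -ebs with -io in one run is an assumption that should be checked against the tau_exec usage message for your TAU build.

```shell
# Hypothetical helper: print (not run) a tau_exec command line.
# $1 = TAU configuration (comma-separated)
# $2 = extra tau_exec flags, quoted as one string
# remaining arguments = the application and its arguments
tau_cmd() {
    config=$1
    flags=$2
    shift 2
    echo "tau_exec -T $config $flags $*"
}

tau_cmd shared,papi,mpi,pthread "-ebs -io" ./heatSimulation sim.bp 4 3 5 10 10 10
```

The printed line can then be placed after mpirun -n 12 (or the srun/jsrun equivalent) in the same position as in the earlier example.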

After execution, you should have a profile.* file for each thread of execution and each MPI rank. (There are TAU options for only generating one file, but for now we will work with the profile.* files.) To get a quick text dump of the measurement, use the pprof program in the TAU path:

$ pprof

Reading Profile files in profile.*

NODE 0;CONTEXT 0;THREAD 0:

---------------------------------------------------------------------------------------

%Time Exclusive Inclusive #Call #Subrs Inclusive Name

msec total msec usec/call

---------------------------------------------------------------------------------------

100.0 4 630 1 412 630275 .TAU application

64.3 405 405 1 2 405193 MPI_Init()

16.3 46 102 1 2 102709 MPI_Finalize()

14.9 44 93 1 3 93820 BP4Writer::Close

9.0 56 56 2 0 28320 pthread_join

7.9 33 49 1 5 49630 BP4Writer::WriteProfilingJSONFile

2.8 0.189 17 1 4 17398 IO::Open

2.7 17 17 1 5 17103 BP4Writer::Open

2.6 16 16 31 0 532 MPI_Gather()

0.5 0.48 3 10 26 319 BP4Writer::EndStep

0.4 0.285 2 10 20 229 BP4Writer::Flush

0.3 1 1 11 280 175 BP4Writer::WriteCollectiveMetadataFile

0.2 1 1 182 0 7 MPI_Recv()

0.1 0.767 0.767 182 0 4 MPI_Send()

0.1 0.696 0.696 1 0 696 MPI_Comm_split()

0.1 0.381 0.409 10 20 41 BP4Writer::PerformPuts

0.0 0.271 0.271 10 0 27 MPI_Reduce()

0.0 0.198 0.198 31 0 6 MPI_Gatherv()

0.0 0.163 0.163 2 0 82 MPI_Comm_dup()

0.0 0.127 0.127 11 0 12 BP4Writer::WriteData

0.0 0.112 0.112 2 0 56 pthread_create

0.0 0.104 0.104 2 0 52 MPI_Bcast()

0.0 0.087 0.087 1 0 87 IO::DefineVariable

0.0 0.087 0.087 182 0 0 MPI_Comm_rank()

0.0 0.07 0.07 15 0 5 IO::other

0.0 0.062 0.062 2 0 31 MPI_Allreduce()

0.0 0.051 0.051 2 0 26 MPI_Comm_free()

0.0 0.043 0.049 3 2 16 IO::DefineAttribute

0.0 0.033 0.033 2 0 16 MPI_Barrier()

0.0 0.019 0.019 10 0 2 BP4Writer::BeginStep

0.0 0.015 0.015 34 0 0 MPI_Comm_size()

0.0 0.014 0.014 10 0 1 BP4Writer::PopulateMetadataIndexFileContent

0.0 0.013 0.013 10 0 1 IO::InquireVariable

0.0 0.009 0.009 6 0 2 IO::InquireAttribute

0.0 0.004 0.004 1 0 4 MPI_Finalized()

---------------------------------------------------------------------------------------

USER EVENTS Profile :NODE 0, CONTEXT 0, THREAD 0

---------------------------------------------------------------------------------------

NumSamples MaxValue MinValue MeanValue Std. Dev. Event Name

---------------------------------------------------------------------------------------

2 8 8 8 0 Message size for all-reduce

2 1816 8 912 904 Message size for broadcast

62 8676 0 530.9 1250 Message size for gather

10 8 8 8 0 Message size for reduce

---------------------------------------------------------------------------------------

NODE 0;CONTEXT 0;THREAD 1:

---------------------------------------------------------------------------------------

%Time Exclusive Inclusive #Call #Subrs Inclusive Name

msec total msec usec/call

---------------------------------------------------------------------------------------

100.0 6 565 1 1 565356 .TAU application

98.8 558 558 1 0 558697 progress_engine

NODE 0;CONTEXT 0;THREAD 2:

---------------------------------------------------------------------------------------

%Time Exclusive Inclusive #Call #Subrs Inclusive Name

msec total msec usec/call

---------------------------------------------------------------------------------------

100.0 0.693 531 1 1 531646 .TAU application

99.9 530 530 1 0 530953 addr=<7f6cebb6de60>

(more ranks follow)

In this case (BP4), the main thread did all the work, and the two additional threads were spawned by OpenMPI as progress threads.
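A quick way to see how many ranks and threads were measured is to count the profile files. The sketch below assumes the files are named profile.<node>.<context>.<thread>, matching the NODE/CONTEXT/THREAD headings in the pprof output; profile_summary is a hypothetical helper name.

```shell
# Hypothetical helper: count profile.<node>.<context>.<thread> files
# in a directory (default: current directory) and report how many
# distinct nodes (MPI ranks) they cover.
profile_summary() {
    dir=${1:-.}
    ls "$dir" | awk -F. '
        $1 == "profile" && NF == 4 { files++; ranks[$2]++ }
        END {
            n = 0
            for (r in ranks) n++
            printf "%d files, %d ranks\n", files, n
        }'
}
```

For the run above, each rank of heatSimulation would be expected to contribute three files (the main thread plus the two progress threads), assuming every rank spawns the same threads.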

Adding the -ebs flag, we get:

$ pprof

Reading Profile files in profile.*

NODE 0;CONTEXT 0;THREAD 0:

---------------------------------------------------------------------------------------

%Time Exclusive Inclusive #Call #Subrs Inclusive Name

msec total msec usec/call

---------------------------------------------------------------------------------------

100.0 5 317 1 412 317863 .TAU application

43.8 139 139 1 2 139270 MPI_Init()

33.2 105 105 2 0 52700 [SAMPLE] __close_nocancel

28.8 67 91 1 3 91469 BP4Writer::Close

25.4 0 80 1 0 80841 BP4Writer::Close => [CONTEXT] BP4Writer::Close

25.4 80 80 1 0 80841 BP4Writer::Close => [CONTEXT] BP4Writer::Close => [SAMPLE] __close_nocancel

25.4 0 80 1 0 80841 [CONTEXT] BP4Writer::Close

21.7 61 68 1 2 68870 MPI_Finalize()

7.7 0 24 1 0 24558 BP4Writer::WriteProfilingJSONFile => [CONTEXT] BP4Writer::WriteProfilingJSONFile

7.7 24 24 1 0 24558 BP4Writer::WriteProfilingJSONFile => [CONTEXT] BP4Writer::WriteProfilingJSONFile => [SAMPLE] __close_nocancel

7.7 0 24 1 0 24558 [CONTEXT] BP4Writer::WriteProfilingJSONFile

7.6 23 24 1 5 24017 BP4Writer::WriteProfilingJSONFile

2.4 7 7 2 0 3788 pthread_join

1.8 0.197 5 1 4 5651 IO::Open

1.6 4 4 1 5 4941 BP4Writer::Open

1.3 0.836 4 10 26 414 BP4Writer::EndStep

0.9 0.286 2 10 20 296 BP4Writer::Flush

0.8 0.984 2 11 280 239 BP4Writer::WriteCollectiveMetadataFile

0.4 1 1 182 0 7 MPI_Recv()

0.4 1 1 10 0 112 MPI_Reduce()

0.3 0.864 0.864 1 0 864 MPI_Comm_split()

0.2 0.681 0.681 2 0 340 MPI_Comm_dup()

0.2 0.523 0.523 182 0 3 MPI_Send()

0.1 0.319 0.339 10 20 34 BP4Writer::PerformPuts

0.1 0.288 0.288 31 0 9 MPI_Gather()

0.1 0.191 0.191 31 0 6 MPI_Gatherv()

0.1 0.189 0.189 2 0 94 MPI_Barrier()

0.0 0.117 0.117 2 0 58 pthread_create

0.0 0.083 0.083 11 0 8 BP4Writer::WriteData

0.0 0.08 0.08 2 0 40 MPI_Bcast()

0.0 0.063 0.063 1 0 63 IO::DefineVariable

0.0 0.062 0.062 182 0 0 MPI_Comm_rank()

0.0 0.048 0.048 2 0 24 MPI_Allreduce()

0.0 0.046 0.046 2 0 23 MPI_Comm_free()

0.0 0.035 0.035 15 0 2 IO::other

0.0 0.029 0.033 3 2 11 IO::DefineAttribute

0.0 0.016 0.016 10 0 2 BP4Writer::BeginStep

0.0 0.011 0.011 34 0 0 MPI_Comm_size()

0.0 0.01 0.01 10 0 1 IO::InquireVariable

0.0 0.009 0.009 10 0 1 BP4Writer::PopulateMetadataIndexFileContent

0.0 0.006 0.006 6 0 1 IO::InquireAttribute

0.0 0.003 0.003 1 0 3 MPI_Finalized()

---------------------------------------------------------------------------------------

USER EVENTS Profile :NODE 0, CONTEXT 0, THREAD 0

---------------------------------------------------------------------------------------

NumSamples MaxValue MinValue MeanValue Std. Dev. Event Name

---------------------------------------------------------------------------------------

2 8 8 8 0 Message size for all-reduce

2 1816 8 912 904 Message size for broadcast

62 8676 0 530.9 1249 Message size for gather

10 8 8 8 0 Message size for reduce

---------------------------------------------------------------------------------------

NODE 0;CONTEXT 0;THREAD 1:

---------------------------------------------------------------------------------------

%Time Exclusive Inclusive #Call #Subrs Inclusive Name

msec total msec usec/call

---------------------------------------------------------------------------------------

100.0 5 267 1 1 267043 .TAU application

98.0 261 261 1 0 261670 progress_engine

NODE 0;CONTEXT 0;THREAD 2:

---------------------------------------------------------------------------------------

%Time Exclusive Inclusive #Call #Subrs Inclusive Name

msec total msec usec/call

---------------------------------------------------------------------------------------

100.0 0.993 240 1 1 240100 .TAU application

99.6 239 239 1 0 239107 addr=<7f09eb916e60>

(more ranks follow)
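With sampling enabled, each sampled function appears both as a flat [SAMPLE] row and inside "=>" call-path rows. To read only the flat sample rows, the pprof text can be filtered. This is a sketch based purely on the output format shown above; flat_samples is a hypothetical helper name.

```shell
# Hypothetical helper: read pprof text on stdin and keep only the
# flat [SAMPLE] rows, dropping the "=>" call-path rows.
flat_samples() {
    grep -F '[SAMPLE]' | grep -v -F '=>'
}
```

Used as pprof | flat_samples, this would reduce the thread 0 listing above to the single __close_nocancel row.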

Adding the -memory flag, we get:

USER EVENTS Profile :NODE 0, CONTEXT 0, THREAD 0

---------------------------------------------------------------------------------------

NumSamples MaxValue MinValue MeanValue Std. Dev. Event Name

---------------------------------------------------------------------------------------

89 3789 0.0166 42.74 399.4 Decrease in Heap Memory (KB)

10 0.02051 0.02051 0.02051 3.293E-10 Decrease in Heap Memory (KB) : BP4Writer::BeginStep

1 0.0166 0.0166 0.0166 2.328E-10 Decrease in Heap Memory (KB) : BP4Writer::Close

1 0.03809 0.03809 0.03809 4.657E-10 Decrease in Heap Memory (KB) : BP4Writer::Close => BP4Writer::WriteCollectiveMetadataFile

1 0.02051 0.02051 0.02051 2.328E-10 Decrease in Heap Memory (KB) : BP4Writer::Close => BP4Writer::WriteData

1 0.0332 0.0332 0.0332 0 Decrease in Heap Memory (KB) : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile

9 0.1279 0.1279 0.1279 0 Decrease in Heap Memory (KB) : BP4Writer::EndStep

9 0.0166 0.0166 0.0166 2.328E-10 Decrease in Heap Memory (KB) : BP4Writer::EndStep => BP4Writer::Flush

9 0.03809 0.03809 0.03809 6.585E-10 Decrease in Heap Memory (KB) : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile

10 0.04297 0.04297 0.04297 0 Decrease in Heap Memory (KB) : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => BP4Writer::PopulateMetadataIndexFileContent

10 0.02051 0.02051 0.02051 3.293E-10 Decrease in Heap Memory (KB) : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteData

9 0.1318 0.1318 0.1318 2.634E-09 Decrease in Heap Memory (KB) : BP4Writer::EndStep => BP4Writer::PerformPuts

10 0.01953 0.01953 0.01953 0 Decrease in Heap Memory (KB) : BP4Writer::EndStep => BP4Writer::PerformPuts => IO::InquireVariable

6 0.02051 0.02051 0.02051 2.328E-10 Decrease in Heap Memory (KB) : BP4Writer::EndStep => IO::InquireAttribute

2 5.34 5.309 5.324 0.01562 Decrease in Heap Memory (KB) : MPI_Comm_free()

1 3789 3789 3789 6.104E-05 Decrease in Heap Memory (KB) : MPI_Finalize()

2.84E+04 1.052E+06 1 228.1 7723 Heap Allocate

517 3.277E+04 16 156.5 1494 Heap Allocate : .TAU application

6 39 21 31.33 7.587 Heap Allocate : BP4Writer::Close

2 40 38 39 1 Heap Allocate : BP4Writer::Close => BP4Writer::WriteCollectiveMetadataFile

45 8192 3 321.3 1291 Heap Allocate : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile

83 944 8 56.06 125.7 Heap Allocate : BP4Writer::EndStep

40 39 21 30 9 Heap Allocate : BP4Writer::EndStep => BP4Writer::Flush

361 1.058E+04 22 212 763.5 Heap Allocate : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile

22 1040 8 61.27 215.6 Heap Allocate : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => MPI_Reduce()

89 200 1 25.63 23 Heap Allocate : BP4Writer::EndStep => BP4Writer::PerformPuts

12 397 24 145.5 126.2 Heap Allocate : IO::DefineAttribute

12 552 16 95.67 157.3 Heap Allocate : IO::DefineVariable

3 1328 80 536 562.2 Heap Allocate : IO::Open

114 1.638E+04 1 198.4 1524 Heap Allocate : IO::Open => BP4Writer::Open

91 1200 1 97.81 214.5 Heap Allocate : IO::Open => MPI_Comm_dup()

3 96 96 96 0 Heap Allocate : IO::other

2 8 8 8 0 Heap Allocate : MPI_Allreduce()

2 148 32 90 58 Heap Allocate : MPI_Bcast()

91 1200 1 112.8 216.6 Heap Allocate : MPI_Comm_dup()

100 1920 1 140.2 324 Heap Allocate : MPI_Comm_split()

267 3.282E+04 7 656.9 4444 Heap Allocate : MPI_Finalize()

2.654E+04 1.052E+06 1 228.3 7973 Heap Allocate : MPI_Init()

1 4 4 4 0 Heap Allocate : progress_engine

2.805E+04 1.052E+06 1 222.9 7771 Heap Free

544 3.277E+04 16 214.5 1675 Heap Free : .TAU application

10 21 21 21 0 Heap Free : BP4Writer::BeginStep

4 39 17 27.75 9.038 Heap Free : BP4Writer::Close

3 40 38 39 0.8165 Heap Free : BP4Writer::Close => BP4Writer::WriteCollectiveMetadataFile

1 21 21 21 0 Heap Free : BP4Writer::Close => BP4Writer::WriteData

46 8192 3 315.1 1278 Heap Free : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile

56 944 8 54.25 139.5 Heap Free : BP4Writer::EndStep

30 39 17 25.67 9.568 Heap Free : BP4Writer::EndStep => BP4Writer::Flush

361 8676 22 182.7 533.5 Heap Free : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile

10 44 44 44 0 Heap Free : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => BP4Writer::PopulateMetadataIndexFileContent

20 8 8 8 0 Heap Free : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => MPI_Reduce()

10 21 21 21 0 Heap Free : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteData

126 64 1 24.63 14.81 Heap Free : BP4Writer::EndStep => BP4Writer::PerformPuts

10 20 20 20 0 Heap Free : BP4Writer::EndStep => BP4Writer::PerformPuts => IO::InquireVariable

6 21 21 21 0 Heap Free : BP4Writer::EndStep => IO::InquireAttribute

6 397 20 84.83 139.6 Heap Free : IO::DefineAttribute

7 296 16 65 95.51 Heap Free : IO::DefineVariable

57 96 1 28.82 19.14 Heap Free : IO::Open => BP4Writer::Open

39 525 4 80.51 143.8 Heap Free : IO::Open => MPI_Comm_dup()

2 8 8 8 0 Heap Free : MPI_Allreduce()

44 525 4 87.52 138 Heap Free : MPI_Comm_dup()

23 1200 32 474.1 412.4 Heap Free : MPI_Comm_free()

46 525 4 83.57 134.6 Heap Free : MPI_Comm_split()

1.161E+04 1.052E+06 1 348.7 1.191E+04 Heap Free : MPI_Finalize()

1.498E+04 6.554E+04 1 131.8 1712 Heap Free : MPI_Init()

1 48 48 48 0 Heap Free : progress_engine

6.214E+04 4454 0.03906 1608 1207 Heap Memory Used (KB)

782 4445 136.6 4411 319.3 Heap Memory Used (KB) at Entry

1 136.6 136.6 136.6 0 Heap Memory Used (KB) at Entry : .TAU application

10 4441 4428 4440 3.985 Heap Memory Used (KB) at Entry : BP4Writer::BeginStep

1 4441 4441 4441 6.104E-05 Heap Memory Used (KB) at Entry : BP4Writer::Close

1 4441 4441 4441 6.104E-05 Heap Memory Used (KB) at Entry : BP4Writer::Close => BP4Writer::WriteCollectiveMetadataFile

1 4441 4441 4441 0 Heap Memory Used (KB) at Entry : BP4Writer::Close => BP4Writer::WriteData

1 4441 4441 4441 6.104E-05 Heap Memory Used (KB) at Entry : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile

2 4445 4441 4443 1.736 Heap Memory Used (KB) at Entry : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile => MPI_Comm_rank()

1 4441 4441 4441 0 Heap Memory Used (KB) at Entry : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile => MPI_Comm_size()

1 4441 4441 4441 0 Heap Memory Used (KB) at Entry : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile => MPI_Gather()

1 4445 4445 4445 6.104E-05 Heap Memory Used (KB) at Entry : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile => MPI_Gatherv()

10 4441 4428 4440 3.899 Heap Memory Used (KB) at Entry : BP4Writer::EndStep

10 4441 4430 4440 3.428 Heap Memory Used (KB) at Entry : BP4Writer::EndStep => BP4Writer::Flush

10 4441 4430 4440 3.428 Heap Memory Used (KB) at Entry : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile

10 4441 4441 4441 0 Heap Memory Used (KB) at Entry : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => BP4Writer::PopulateMetadataIndexFileContent

170 4444 4430 4441 2.619 Heap Memory Used (KB) at Entry : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => MPI_Comm_rank()

30 4441 4431 4440 2.777 Heap Memory Used (KB) at Entry : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => MPI_Comm_size()

30 4442 4431 4441 2.777 Heap Memory Used (KB) at Entry : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => MPI_Gather()

30 4443 4432 4441 2.499 Heap Memory Used (KB) at Entry : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => MPI_Gatherv()

10 4441 4430 4440 3.428 Heap Memory Used (KB) at Entry : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => MPI_Reduce()

10 4441 4430 4440 3.428 Heap Memory Used (KB) at Entry : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteData

10 4441 4428 4440 3.899 Heap Memory Used (KB) at Entry : BP4Writer::EndStep => BP4Writer::PerformPuts

10 4441 4428 4440 3.899 Heap Memory Used (KB) at Entry : BP4Writer::EndStep => BP4Writer::PerformPuts => IO::InquireVariable

10 4441 4428 4440 3.899 Heap Memory Used (KB) at Entry : BP4Writer::EndStep => BP4Writer::PerformPuts => IO::other

6 4430 4429 4429 0.3899 Heap Memory Used (KB) at Entry : BP4Writer::EndStep => IO::InquireAttribute

3 4401 4400 4400 0.49 Heap Memory Used (KB) at Entry : IO::DefineAttribute

2 4400 4400 4400 0.1445 Heap Memory Used (KB) at Entry : IO::DefineAttribute => IO::other

1 4399 4399 4399 0 Heap Memory Used (KB) at Entry : IO::DefineVariable

1 4402 4402 4402 0 Heap Memory Used (KB) at Entry : IO::Open

1 4408 4408 4408 6.104E-05 Heap Memory Used (KB) at Entry : IO::Open => BP4Writer::Open

1 4425 4425 4425 6.104E-05 Heap Memory Used (KB) at Entry : IO::Open => BP4Writer::Open => MPI_Barrier()

4 4428 4425 4427 1.146 Heap Memory Used (KB) at Entry : IO::Open => BP4Writer::Open => MPI_Comm_rank()

1 4402 4402 4402 0 Heap Memory Used (KB) at Entry : IO::Open => MPI_Comm_dup()

1 4408 4408 4408 6.104E-05 Heap Memory Used (KB) at Entry : IO::Open => MPI_Comm_rank()

1 4408 4408 4408 6.104E-05 Heap Memory Used (KB) at Entry : IO::Open => MPI_Comm_size()

3 4433 4429 4431 1.518 Heap Memory Used (KB) at Entry : IO::other

2 4428 4428 4428 0 Heap Memory Used (KB) at Entry : MPI_Allreduce()

1 4441 4441 4441 0 Heap Memory Used (KB) at Entry : MPI_Barrier()

2 4398 4396 4397 0.9751 Heap Memory Used (KB) at Entry : MPI_Bcast()

1 4388 4388 4388 0 Heap Memory Used (KB) at Entry : MPI_Comm_dup()

2 4441 4436 4438 2.684 Heap Memory Used (KB) at Entry : MPI_Comm_free()

5 4396 4378 4390 6.982 Heap Memory Used (KB) at Entry : MPI_Comm_rank()

2 4387 4378 4382 4.501 Heap Memory Used (KB) at Entry : MPI_Comm_size()

1 4378 4378 4378 6.104E-05 Heap Memory Used (KB) at Entry : MPI_Comm_split()

1 4387 4387 4387 6.104E-05 Heap Memory Used (KB) at Entry : MPI_Finalize()

2 1629 1185 1407 222.3 Heap Memory Used (KB) at Entry : MPI_Finalize() => pthread_join

1 4436 4436 4436 0 Heap Memory Used (KB) at Entry : MPI_Finalized()

1 254.1 254.1 254.1 0 Heap Memory Used (KB) at Entry : MPI_Init()

2 1421 360.5 890.5 530 Heap Memory Used (KB) at Entry : MPI_Init() => pthread_create

182 4441 4428 4441 1.385 Heap Memory Used (KB) at Entry : MPI_Recv()

182 4441 4428 4441 1.385 Heap Memory Used (KB) at Entry : MPI_Send()

0 0 0 0 0 Heap Memory Used (KB) at Entry : progress_engine

782 4445 360.5 4413 306.1 Heap Memory Used (KB) at Exit

1 597.6 597.6 597.6 0 Heap Memory Used (KB) at Exit : .TAU application

10 4441 4428 4440 3.985 Heap Memory Used (KB) at Exit : BP4Writer::BeginStep

1 4441 4441 4441 6.104E-05 Heap Memory Used (KB) at Exit : BP4Writer::Close

1 4441 4441 4441 6.104E-05 Heap Memory Used (KB) at Exit : BP4Writer::Close => BP4Writer::WriteCollectiveMetadataFile

1 4441 4441 4441 0 Heap Memory Used (KB) at Exit : BP4Writer::Close => BP4Writer::WriteData

1 4441 4441 4441 6.104E-05 Heap Memory Used (KB) at Exit : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile

2 4445 4441 4443 1.736 Heap Memory Used (KB) at Exit : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile => MPI_Comm_rank()

1 4441 4441 4441 0 Heap Memory Used (KB) at Exit : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile => MPI_Comm_size()

1 4441 4441 4441 0 Heap Memory Used (KB) at Exit : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile => MPI_Gather()

1 4445 4445 4445 6.104E-05 Heap Memory Used (KB) at Exit : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile => MPI_Gatherv()

10 4441 4441 4441 6.104E-05 Heap Memory Used (KB) at Exit : BP4Writer::EndStep

10 4441 4441 4441 6.104E-05 Heap Memory Used (KB) at Exit : BP4Writer::EndStep => BP4Writer::Flush

10 4441 4441 4441 6.104E-05 Heap Memory Used (KB) at Exit : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile

10 4441 4441 4441 0 Heap Memory Used (KB) at Exit : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => BP4Writer::PopulateMetadataIndexFileContent

170 4444 4430 4441 2.619 Heap Memory Used (KB) at Exit : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => MPI_Comm_rank()

30 4441 4431 4440 2.777 Heap Memory Used (KB) at Exit : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => MPI_Comm_size()

30 4442 4431 4441 2.777 Heap Memory Used (KB) at Exit : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => MPI_Gather()

30 4443 4432 4441 2.499 Heap Memory Used (KB) at Exit : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => MPI_Gatherv()

10 4441 4431 4440 3.08 Heap Memory Used (KB) at Exit : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => MPI_Reduce()

10 4441 4430 4440 3.428 Heap Memory Used (KB) at Exit : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteData

10 4441 4429 4440 3.802 Heap Memory Used (KB) at Exit : BP4Writer::EndStep => BP4Writer::PerformPuts

10 4441 4428 4440 3.899 Heap Memory Used (KB) at Exit : BP4Writer::EndStep => BP4Writer::PerformPuts => IO::InquireVariable

10 4441 4428 4440 3.899 Heap Memory Used (KB) at Exit : BP4Writer::EndStep => BP4Writer::PerformPuts => IO::other

6 4430 4429 4429 0.3899 Heap Memory Used (KB) at Exit : BP4Writer::EndStep => IO::InquireAttribute

3 4402 4400 4401 0.6682 Heap Memory Used (KB) at Exit : IO::DefineAttribute

2 4400 4400 4400 0.1445 Heap Memory Used (KB) at Exit : IO::DefineAttribute => IO::other

1 4400 4400 4400 0 Heap Memory Used (KB) at Exit : IO::DefineVariable

1 4428 4428 4428 0 Heap Memory Used (KB) at Exit : IO::Open

1 4428 4428 4428 6.104E-05 Heap Memory Used (KB) at Exit : IO::Open => BP4Writer::Open

1 4425 4425 4425 6.104E-05 Heap Memory Used (KB) at Exit : IO::Open => BP4Writer::Open => MPI_Barrier()

4 4428 4425 4427 1.146 Heap Memory Used (KB) at Exit : IO::Open => BP4Writer::Open => MPI_Comm_rank()

1 4406 4406 4406 0 Heap Memory Used (KB) at Exit : IO::Open => MPI_Comm_dup()

1 4408 4408 4408 6.104E-05 Heap Memory Used (KB) at Exit : IO::Open => MPI_Comm_rank()

1 4408 4408 4408 6.104E-05 Heap Memory Used (KB) at Exit : IO::Open => MPI_Comm_size()

3 4433 4429 4431 1.518 Heap Memory Used (KB) at Exit : IO::other

2 4428 4428 4428 0 Heap Memory Used (KB) at Exit : MPI_Allreduce()

1 4441 4441 4441 0 Heap Memory Used (KB) at Exit : MPI_Barrier()

2 4398 4396 4397 0.8872 Heap Memory Used (KB) at Exit : MPI_Bcast()

1 4394 4394 4394 6.104E-05 Heap Memory Used (KB) at Exit : MPI_Comm_dup()

2 4436 4430 4433 2.7 Heap Memory Used (KB) at Exit : MPI_Comm_free()

5 4396 4378 4390 6.982 Heap Memory Used (KB) at Exit : MPI_Comm_rank()

2 4387 4378 4382 4.501 Heap Memory Used (KB) at Exit : MPI_Comm_size()

1 4387 4387 4387 6.104E-05 Heap Memory Used (KB) at Exit : MPI_Comm_split()

1 597.6 597.6 597.6 0 Heap Memory Used (KB) at Exit : MPI_Finalize()

2 1629 1185 1407 222.3 Heap Memory Used (KB) at Exit : MPI_Finalize() => pthread_join

1 4436 4436 4436 0 Heap Memory Used (KB) at Exit : MPI_Finalized()

1 4378 4378 4378 6.104E-05 Heap Memory Used (KB) at Exit : MPI_Init()

2 1421 360.5 890.5 530 Heap Memory Used (KB) at Exit : MPI_Init() => pthread_create

182 4441 4428 4441 1.385 Heap Memory Used (KB) at Exit : MPI_Recv()

182 4441 4428 4441 1.385 Heap Memory Used (KB) at Exit : MPI_Send()

0 0 0 0 0 Heap Memory Used (KB) at Exit : progress_engine

20 4123 0.09375 234.5 897.7 Increase in Heap Memory (KB)

1 461 461 461 5.395E-06 Increase in Heap Memory (KB) : .TAU application

1 12.87 12.87 12.87 2.384E-07 Increase in Heap Memory (KB) : BP4Writer::EndStep

1 11.41 11.41 11.41 0 Increase in Heap Memory (KB) : BP4Writer::EndStep => BP4Writer::Flush

1 11.39 11.39 11.39 1.686E-07 Increase in Heap Memory (KB) : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile

1 1.16 1.16 1.16 2.107E-08 Increase in Heap Memory (KB) : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => MPI_Reduce()

1 0.1885 0.1885 0.1885 2.634E-09 Increase in Heap Memory (KB) : BP4Writer::EndStep => BP4Writer::PerformPuts

3 0.665 0.2461 0.4027 0.1867 Increase in Heap Memory (KB) : IO::DefineAttribute

1 0.6768 0.6768 0.6768 7.451E-09 Increase in Heap Memory (KB) : IO::DefineVariable

1 26.45 26.45 26.45 0 Increase in Heap Memory (KB) : IO::Open

1 20.48 20.48 20.48 0 Increase in Heap Memory (KB) : IO::Open => BP4Writer::Open

1 4.395 4.395 4.395 5.96E-08 Increase in Heap Memory (KB) : IO::Open => MPI_Comm_dup()

3 0.09375 0.09375 0.09375 0 Increase in Heap Memory (KB) : IO::other

1 0.1758 0.1758 0.1758 0 Increase in Heap Memory (KB) : MPI_Bcast()

1 5.517 5.517 5.517 0 Increase in Heap Memory (KB) : MPI_Comm_dup()

1 9.002 9.002 9.002 0 Increase in Heap Memory (KB) : MPI_Comm_split()

1 4123 4123 4123 6.104E-05 Increase in Heap Memory (KB) : MPI_Init()

0 0 0 0 0 Increase in Heap Memory (KB) : progress_engine

285 1.857E+05 8 1191 1.134E+04 MEMORY LEAK! Heap Allocate : .TAU application

1 1040 1040 1040 0 MEMORY LEAK! Heap Allocate : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => MPI_Reduce()

1 15 15 15 0 MEMORY LEAK! Heap Allocate : IO::Open => BP4Writer::Open

3 396 4 149.7 175.2 MEMORY LEAK! Heap Allocate : IO::Open => MPI_Comm_dup()

4 200 4 82.5 77.09 MEMORY LEAK! Heap Allocate : MPI_Comm_dup()

4 396 102 211.2 112.2 MEMORY LEAK! Heap Allocate : MPI_Comm_split()

657 1.782E+04 1 362.8 1196 MEMORY LEAK! Heap Allocate : MPI_Init()

16 136 51 56.31 20.58 MEMORY LEAK! Heap Allocate : progress_engine

2 8 8 8 0 Message size for all-reduce

2 1816 8 912 904 Message size for broadcast

62 8676 0 530.9 1249 Message size for gather

10 8 8 8 0 Message size for reduce

---------------------------------------------------------------------------------------
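The memory listing is long, so it can be useful to pull out only the rows that pprof labels MEMORY LEAK!. The sketch below relies only on the row layout shown above (sample count in the first column, event name at the end); leak_sites is a hypothetical helper name.

```shell
# Hypothetical helper: read pprof text on stdin and print, for each
# "MEMORY LEAK! Heap Allocate" row, the sample count and the call
# path, separated by a tab.
leak_sites() {
    awk '/MEMORY LEAK!/ {
        name = $0
        sub(/^.*MEMORY LEAK! Heap Allocate : /, "", name)
        print $1 "\t" name
    }'
}
```

Used as pprof | leak_sites, this gives one line per call path, which makes it easier to separate rows under ADIOS2 timers (BP4Writer::, IO::) from those under MPI_Init() and other MPI calls.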

Adding the -io flag, we get:

$ pprof 0

Reading Profile files in profile.*

NODE 0;CONTEXT 0;THREAD 0:

---------------------------------------------------------------------------------------

%Time Exclusive Inclusive #Call #Subrs Inclusive Name

msec total msec usec/call

---------------------------------------------------------------------------------------

100.0 2 357 1 459 357101 .TAU application

38.5 124 137 1 336 137443 MPI_Init()

35.3 75 126 1 21 126186 MPI_Finalize()

22.4 0.119 80 1 6 80089 BP4Writer::Close

14.0 49 49 2 0 24952 pthread_join

11.2 0.091 40 1 8 40168 BP4Writer::WriteProfilingJSONFile

11.2 39 39 68 0 587 close()

9.1 32 32 27 0 1210 fclose()

2.2 7 7 29 0 271 fopen()

1.9 6 6 31 0 220 MPI_Gather()

1.9 6 6 51 0 131 open()

1.4 0.118 5 1 4 5006 IO::Open

1.3 0.357 4 1 8 4725 BP4Writer::Open

0.7 0.291 2 10 26 260 BP4Writer::EndStep

0.6 0.192 2 10 20 206 BP4Writer::Flush

0.5 0.674 1 11 303 170 BP4Writer::WriteCollectiveMetadataFile

0.4 1 1 147 0 10 write()

0.4 1 1 2 0 677 fopen64()

0.3 0.904 0.904 182 0 5 MPI_Recv()

0.2 0.873 0.873 42 0 21 read()

0.2 0.543 0.543 1 0 543 MPI_Comm_split()

0.1 0.534 0.534 10 0 53 MPI_Reduce()

0.1 0.497 0.497 182 0 3 MPI_Send()

0.1 0.29 0.29 8 0 36 socket()

0.1 0.255 0.255 43 0 6 fwrite()

0.1 0.246 0.246 3 0 82 socketpair()

0.1 0.225 0.242 10 20 24 BP4Writer::PerformPuts

0.1 0.203 0.203 2 0 102 MPI_Comm_dup()

0.1 0.181 0.181 31 0 6 MPI_Gatherv()

0.0 0.027 0.142 11 10 13 BP4Writer::WriteData

0.0 0.098 0.098 8 0 12 fscanf()

0.0 0.086 0.086 2 0 43 pthread_create

0.0 0.078 0.078 1 0 78 send()

0.0 0.069 0.069 2 0 34 MPI_Barrier()

0.0 0.061 0.061 1 0 61 connect()

0.0 0.057 0.057 2 0 28 MPI_Bcast()

0.0 0.054 0.054 1 0 54 writev()

0.0 0.048 0.048 182 0 0 MPI_Comm_rank()

0.0 0.048 0.048 2 0 24 recv()

0.0 0.038 0.038 2 0 19 MPI_Allreduce()

0.0 0.029 0.029 1 0 29 IO::DefineVariable

0.0 0.026 0.026 2 0 13 MPI_Comm_free()

0.0 0.015 0.017 3 2 6 IO::DefineAttribute

0.0 0.017 0.017 15 0 1 IO::other

0.0 0.016 0.016 5 0 3 bind()

0.0 0.014 0.014 2 0 7 rewind()

0.0 0.011 0.011 10 0 1 BP4Writer::BeginStep

0.0 0.009 0.009 10 0 1 BP4Writer::PopulateMetadataIndexFileContent

0.0 0.009 0.009 34 0 0 MPI_Comm_size()

0.0 0.008 0.008 10 0 1 IO::InquireVariable

0.0 0.006 0.006 6 0 1 IO::InquireAttribute

0.0 0.002 0.002 2 0 1 lseek()

0.0 0.001 0.001 1 0 1 MPI_Finalized()

---------------------------------------------------------------------------------------

USER EVENTS Profile :NODE 0, CONTEXT 0, THREAD 0

---------------------------------------------------------------------------------------

NumSamples MaxValue MinValue MeanValue Std. Dev. Event Name

---------------------------------------------------------------------------------------

44 8192 1 1535 2814 Bytes Read

7 21 21 21 0 Bytes Read : MPI_Init() => fscanf()

34 8192 1 1928 3079 Bytes Read : MPI_Init() => read()

2 4 4 4 0 Bytes Read : MPI_Init() => recv()

0 0 0 0 0 Bytes Read : progress_engine => read()

1 1816 1816 1816 0 Bytes Read : read()

7 21 21 21 0 Bytes Read <file=/proc/net/if_inet6>

7 21 21 21 0 Bytes Read <file=/proc/net/if_inet6> : MPI_Init() => fscanf()

1 1 1 1 0 Bytes Read <file=/proc/sys/kernel/yama/ptrace_scope>

1 1 1 1 0 Bytes Read <file=/proc/sys/kernel/yama/ptrace_scope> : MPI_Init() => read()

0 0 0 0 0 Bytes Read <file=/storage/packages/openmpi/4.0.1-gcc8.1/lib/libopen-pal.so.40.20.1>

0 0 0 0 0 Bytes Read <file=/storage/packages/openmpi/4.0.1-gcc8.1/lib/libopen-pal.so.40.20.1> : read()

0 0 0 0 0 Bytes Read <file=/storage/packages/openmpi/4.0.1-gcc8.1/lib/openmpi/mca_pmix_pmix3x.so>

0 0 0 0 0 Bytes Read <file=/storage/packages/openmpi/4.0.1-gcc8.1/lib/openmpi/mca_pmix_pmix3x.so> : read()

2 20 20 20 0 Bytes Read <file=/sys/class/infiniband/hfi1_0/node_guid>

2 20 20 20 0 Bytes Read <file=/sys/class/infiniband/hfi1_0/node_guid> : MPI_Init() => read()

1 6 6 6 0 Bytes Read <file=/sys/class/infiniband/hfi1_0/node_type>

1 6 6 6 0 Bytes Read <file=/sys/class/infiniband/hfi1_0/node_type> : MPI_Init() => read()

3 40 40 40 0 Bytes Read <file=/sys/class/infiniband/hfi1_0/ports/1/gids/0>

3 40 40 40 0 Bytes Read <file=/sys/class/infiniband/hfi1_0/ports/1/gids/0> : MPI_Init() => read()

1 2 2 2 0 Bytes Read <file=/sys/class/infiniband_verbs/abi_version>

1 2 2 2 0 Bytes Read <file=/sys/class/infiniband_verbs/abi_version> : MPI_Init() => read()

4 2 2 2 0 Bytes Read <file=/sys/class/infiniband_verbs/uverbs0/abi_version>

4 2 2 2 0 Bytes Read <file=/sys/class/infiniband_verbs/uverbs0/abi_version> : MPI_Init() => read()

4 54 54 54 0 Bytes Read <file=/sys/class/infiniband_verbs/uverbs0/device/modalias>

4 54 54 54 0 Bytes Read <file=/sys/class/infiniband_verbs/uverbs0/device/modalias> : MPI_Init() => read()

5 7 7 7 0 Bytes Read <file=/sys/class/infiniband_verbs/uverbs0/ibdev>

5 7 7 7 0 Bytes Read <file=/sys/class/infiniband_verbs/uverbs0/ibdev> : MPI_Init() => read()

1 2 2 2 0 Bytes Read <file=/sys/class/misc/rdma_cm/abi_version>

1 2 2 2 0 Bytes Read <file=/sys/class/misc/rdma_cm/abi_version> : MPI_Init() => read()

2 2886 2886 2886 0 Bytes Read <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/etc/openmpi-mca-params.conf>

2 2886 2886 2886 0 Bytes Read <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/etc/openmpi-mca-params.conf> : MPI_Init() => read()

2 2902 2902 2902 0 Bytes Read <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/etc/pmix-mca-params.conf>

2 2902 2902 2902 0 Bytes Read <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/etc/pmix-mca-params.conf> : MPI_Init() => read()

6 8192 986 6991 2686 Bytes Read <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/share/openmpi/help-mpi-btl-openib.txt>

6 8192 986 6991 2686 Bytes Read <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/share/openmpi/help-mpi-btl-openib.txt> : MPI_Init() => read()

2 8192 3408 5800 2392 Bytes Read <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/share/openmpi/mca-btl-openib-device-params.ini>

2 8192 3408 5800 2392 Bytes Read <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/share/openmpi/mca-btl-openib-device-params.ini> : MPI_Init() => read()

2 4 4 4 0 Bytes Read <file=127.0.0.1,port=34423>

2 4 4 4 0 Bytes Read <file=127.0.0.1,port=34423> : MPI_Init() => recv()

0 0 0 0 0 Bytes Read <file=127.0.0.1,port=34423> : progress_engine => read()

1 1816 1816 1816 0 Bytes Read <file=adios2.xml>

1 1816 1816 1816 0 Bytes Read <file=adios2.xml> : read()

0 0 0 0 0 Bytes Read <file=unknown>

0 0 0 0 0 Bytes Read <file=unknown> : progress_engine => read()

192 3461 1 166.2 492.3 Bytes Written

1 1 1 1 0 Bytes Written : BP4Writer::Close => BP4Writer::WriteCollectiveMetadataFile => write()

1 3461 3461 3461 0 Bytes Written : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile => writev()

20 2555 64 964.5 909.2 Bytes Written : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => write()

10 1183 590 656.6 175.6 Bytes Written : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteData => write()

8 8 8 8 0 Bytes Written : MPI_Finalize() => write()

1 66 66 66 0 Bytes Written : MPI_Init() => send()

108 144 8 19.59 19.3 Bytes Written : MPI_Init() => write()

43 34 1 7.86 9.27 Bytes Written : fwrite()

0 0 0 0 0 Bytes Written : progress_engine => writev()

5 144 24 49.6 47.3 Bytes Written <file=/dev/infiniband/rdma_cm>

5 144 24 49.6 47.3 Bytes Written <file=/dev/infiniband/rdma_cm> : MPI_Init() => write()

51 64 12 28.47 16.28 Bytes Written <file=/dev/infiniband/uverbs0>

51 64 12 28.47 16.28 Bytes Written <file=/dev/infiniband/uverbs0> : MPI_Init() => write()

1 66 66 66 0 Bytes Written <file=127.0.0.1,port=34423>

1 66 66 66 0 Bytes Written <file=127.0.0.1,port=34423> : MPI_Init() => send()

0 0 0 0 0 Bytes Written <file=127.0.0.1,port=34423> : progress_engine => writev()

10 1183 590 656.6 175.6 Bytes Written <file=sim.bp/data.0>

10 1183 590 656.6 175.6 Bytes Written <file=sim.bp/data.0> : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteData => write()

10 2555 1780 1859 232.1 Bytes Written <file=sim.bp/md.0>

10 2555 1780 1859 232.1 Bytes Written <file=sim.bp/md.0> : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => write()

11 128 1 64.09 27.08 Bytes Written <file=sim.bp/md.idx>

1 1 1 1 0 Bytes Written <file=sim.bp/md.idx> : BP4Writer::Close => BP4Writer::WriteCollectiveMetadataFile => write()

10 128 64 70.4 19.2 Bytes Written <file=sim.bp/md.idx> : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => write()

1 3461 3461 3461 0 Bytes Written <file=sim.bp/profiling.json>

1 3461 3461 3461 0 Bytes Written <file=sim.bp/profiling.json> : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile => writev()

43 34 1 7.86 9.27 Bytes Written <file=stdout>

43 34 1 7.86 9.27 Bytes Written <file=stdout> : fwrite()

60 8 8 8 0 Bytes Written <file=unknown>

8 8 8 8 0 Bytes Written <file=unknown> : MPI_Finalize() => write()

52 8 8 8 0 Bytes Written <file=unknown> : MPI_Init() => write()

2 8 8 8 0 Message size for all-reduce

2 1816 8 912 904 Message size for broadcast

62 8676 0 530.9 1249 Message size for gather

10 8 8 8 0 Message size for reduce

44 8192 0.3333 476.8 1458 Read Bandwidth (MB/s)

7 21 0.7778 9.611 5.654 Read Bandwidth (MB/s) : MPI_Init() => fscanf()

34 8192 0.3333 604.3 1636 Read Bandwidth (MB/s) : MPI_Init() => read()

2 2 0.6667 1.333 0.6667 Read Bandwidth (MB/s) : MPI_Init() => recv()

0 0 0 0 0 Read Bandwidth (MB/s) : progress_engine => read()

1 363.2 363.2 363.2 0 Read Bandwidth (MB/s) : read()

7 21 0.7778 9.611 5.654 Read Bandwidth (MB/s) <file=/proc/net/if_inet6>

7 21 0.7778 9.611 5.654 Read Bandwidth (MB/s) <file=/proc/net/if_inet6> : MPI_Init() => fscanf()

1 0.3333 0.3333 0.3333 0 Read Bandwidth (MB/s) <file=/proc/sys/kernel/yama/ptrace_scope>

1 0.3333 0.3333 0.3333 0 Read Bandwidth (MB/s) <file=/proc/sys/kernel/yama/ptrace_scope> : MPI_Init() => read()

0 0 0 0 0 Read Bandwidth (MB/s) <file=/storage/packages/openmpi/4.0.1-gcc8.1/lib/libopen-pal.so.40.20.1>

0 0 0 0 0 Read Bandwidth (MB/s) <file=/storage/packages/openmpi/4.0.1-gcc8.1/lib/libopen-pal.so.40.20.1> : read()

0 0 0 0 0 Read Bandwidth (MB/s) <file=/storage/packages/openmpi/4.0.1-gcc8.1/lib/openmpi/mca_pmix_pmix3x.so>

0 0 0 0 0 Read Bandwidth (MB/s) <file=/storage/packages/openmpi/4.0.1-gcc8.1/lib/openmpi/mca_pmix_pmix3x.so> : read()

2 6.667 6.667 6.667 0 Read Bandwidth (MB/s) <file=/sys/class/infiniband/hfi1_0/node_guid>

2 6.667 6.667 6.667 0 Read Bandwidth (MB/s) <file=/sys/class/infiniband/hfi1_0/node_guid> : MPI_Init() => read()

1 1.5 1.5 1.5 0 Read Bandwidth (MB/s) <file=/sys/class/infiniband/hfi1_0/node_type>

1 1.5 1.5 1.5 0 Read Bandwidth (MB/s) <file=/sys/class/infiniband/hfi1_0/node_type> : MPI_Init() => read()

3 20 13.33 15.56 3.143 Read Bandwidth (MB/s) <file=/sys/class/infiniband/hfi1_0/ports/1/gids/0>

3 20 13.33 15.56 3.143 Read Bandwidth (MB/s) <file=/sys/class/infiniband/hfi1_0/ports/1/gids/0> : MPI_Init() => read()

1 0.5 0.5 0.5 0 Read Bandwidth (MB/s) <file=/sys/class/infiniband_verbs/abi_version>

1 0.5 0.5 0.5 0 Read Bandwidth (MB/s) <file=/sys/class/infiniband_verbs/abi_version> : MPI_Init() => read()

4 1 1 1 0 Read Bandwidth (MB/s) <file=/sys/class/infiniband_verbs/uverbs0/abi_version>

4 1 1 1 0 Read Bandwidth (MB/s) <file=/sys/class/infiniband_verbs/uverbs0/abi_version> : MPI_Init() => read()

4 18 13.5 16.88 1.949 Read Bandwidth (MB/s) <file=/sys/class/infiniband_verbs/uverbs0/device/modalias>

4 18 13.5 16.88 1.949 Read Bandwidth (MB/s) <file=/sys/class/infiniband_verbs/uverbs0/device/modalias> : MPI_Init() => read()

5 3.5 1.75 2.333 0.639 Read Bandwidth (MB/s) <file=/sys/class/infiniband_verbs/uverbs0/ibdev>

5 3.5 1.75 2.333 0.639 Read Bandwidth (MB/s) <file=/sys/class/infiniband_verbs/uverbs0/ibdev> : MPI_Init() => read()

1 1 1 1 0 Read Bandwidth (MB/s) <file=/sys/class/misc/rdma_cm/abi_version>

1 1 1 1 0 Read Bandwidth (MB/s) <file=/sys/class/misc/rdma_cm/abi_version> : MPI_Init() => read()

2 169.8 151.9 160.8 8.935 Read Bandwidth (MB/s) <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/etc/openmpi-mca-params.conf>

2 169.8 151.9 160.8 8.935 Read Bandwidth (MB/s) <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/etc/openmpi-mca-params.conf> : MPI_Init() => read()

2 193.5 90.69 142.1 51.39 Read Bandwidth (MB/s) <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/etc/pmix-mca-params.conf>

2 193.5 90.69 142.1 51.39 Read Bandwidth (MB/s) <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/etc/pmix-mca-params.conf> : MPI_Init() => read()

6 8192 493 3024 2794 Read Bandwidth (MB/s) <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/share/openmpi/help-mpi-btl-openib.txt>

6 8192 493 3024 2794 Read Bandwidth (MB/s) <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/share/openmpi/help-mpi-btl-openib.txt> : MPI_Init() => read()

2 1136 512 824 312 Read Bandwidth (MB/s) <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/share/openmpi/mca-btl-openib-device-params.ini>

2 1136 512 824 312 Read Bandwidth (MB/s) <file=/usr/local/packages/openmpi/4.0.1-gcc8.1/share/openmpi/mca-btl-openib-device-params.ini> : MPI_Init() => read()

2 2 0.6667 1.333 0.6667 Read Bandwidth (MB/s) <file=127.0.0.1,port=34423>

2 2 0.6667 1.333 0.6667 Read Bandwidth (MB/s) <file=127.0.0.1,port=34423> : MPI_Init() => recv()

0 0 0 0 0 Read Bandwidth (MB/s) <file=127.0.0.1,port=34423> : progress_engine => read()

1 363.2 363.2 363.2 0 Read Bandwidth (MB/s) <file=adios2.xml>

1 363.2 363.2 363.2 0 Read Bandwidth (MB/s) <file=adios2.xml> : read()

0 0 0 0 0 Read Bandwidth (MB/s) <file=unknown>

0 0 0 0 0 Read Bandwidth (MB/s) <file=unknown> : progress_engine => read()

168 597.3 0.06667 44.01 114.3 Write Bandwidth (MB/s)

1 0.25 0.25 0.25 0 Write Bandwidth (MB/s) : BP4Writer::Close => BP4Writer::WriteCollectiveMetadataFile => write()

1 384.6 384.6 384.6 9.344E-06 Write Bandwidth (MB/s) : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile => writev()

20 597.3 25.6 232.3 227.3 Write Bandwidth (MB/s) : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => write()

10 200.3 76.88 153.6 36.51 Write Bandwidth (MB/s) : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteData => write()

8 1.143 0.7273 0.9488 0.141 Write Bandwidth (MB/s) : MPI_Finalize() => write()

1 2.538 2.538 2.538 5.162E-08 Write Bandwidth (MB/s) : MPI_Init() => send()

108 36 0.3636 6.716 7.112 Write Bandwidth (MB/s) : MPI_Init() => write()

19 25 0.06667 4.724 7.48 Write Bandwidth (MB/s) : fwrite()

0 0 0 0 0 Write Bandwidth (MB/s) : progress_engine => writev()

5 36 6.4 19.68 11.1 Write Bandwidth (MB/s) <file=/dev/infiniband/rdma_cm>

5 36 6.4 19.68 11.1 Write Bandwidth (MB/s) <file=/dev/infiniband/rdma_cm> : MPI_Init() => write()

51 24 0.9275 9.902 6.884 Write Bandwidth (MB/s) <file=/dev/infiniband/uverbs0>

51 24 0.9275 9.902 6.884 Write Bandwidth (MB/s) <file=/dev/infiniband/uverbs0> : MPI_Init() => write()

1 2.538 2.538 2.538 5.162E-08 Write Bandwidth (MB/s) <file=127.0.0.1,port=34423>

1 2.538 2.538 2.538 5.162E-08 Write Bandwidth (MB/s) <file=127.0.0.1,port=34423> : MPI_Init() => send()

0 0 0 0 0 Write Bandwidth (MB/s) <file=127.0.0.1,port=34423> : progress_engine => writev()

10 200.3 76.88 153.6 36.51 Write Bandwidth (MB/s) <file=sim.bp/data.0>

10 200.3 76.88 153.6 36.51 Write Bandwidth (MB/s) <file=sim.bp/data.0> : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteData => write()

10 597.3 196.5 423.7 172.7 Write Bandwidth (MB/s) <file=sim.bp/md.0>

10 597.3 196.5 423.7 172.7 Write Bandwidth (MB/s) <file=sim.bp/md.0> : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => write()

11 64 0.25 37.26 18.63 Write Bandwidth (MB/s) <file=sim.bp/md.idx>

1 0.25 0.25 0.25 0 Write Bandwidth (MB/s) <file=sim.bp/md.idx> : BP4Writer::Close => BP4Writer::WriteCollectiveMetadataFile => write()

10 64 25.6 40.96 15.2 Write Bandwidth (MB/s) <file=sim.bp/md.idx> : BP4Writer::EndStep => BP4Writer::Flush => BP4Writer::WriteCollectiveMetadataFile => write()

1 384.6 384.6 384.6 9.344E-06 Write Bandwidth (MB/s) <file=sim.bp/profiling.json>

1 384.6 384.6 384.6 9.344E-06 Write Bandwidth (MB/s) <file=sim.bp/profiling.json> : BP4Writer::Close => BP4Writer::WriteProfilingJSONFile => writev()

19 25 0.06667 4.724 7.48 Write Bandwidth (MB/s) <file=stdout>

19 25 0.06667 4.724 7.48 Write Bandwidth (MB/s) <file=stdout> : fwrite()

60 8 0.3636 2.158 1.233 Write Bandwidth (MB/s) <file=unknown>

8 1.143 0.7273 0.9488 0.141 Write Bandwidth (MB/s) <file=unknown> : MPI_Finalize() => write()

52 8 0.3636 2.344 1.222 Write Bandwidth (MB/s) <file=unknown> : MPI_Init() => write()

---------------------------------------------------------------------------------------

NODE 0;CONTEXT 0;THREAD 1:

---------------------------------------------------------------------------------------

%Time  Exclusive (msec)  Inclusive (total msec)  #Call  #Subrs  Inclusive (usec/call)  Name

---------------------------------------------------------------------------------------

100.0 7 301 1 61 301393 .TAU application

97.2 292 292 1 6 292867 progress_engine

0.2 0.648 0.648 63 0 10 read()

0.1 0.259 0.259 1 0 259 fopen64()

0.0 0.102 0.102 1 0 102 fopen()

0.0 0.028 0.028 1 0 28 fclose()

---------------------------------------------------------------------------------------

(other threads/nodes follow)
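The user-event listing above is long, and most of its rows come from MPI start-up rather than from ADIOS2. As a minimal sketch, assuming the pprof text output has been saved to a file, the per-file summary rows for the BP output directory can be pulled out with grep. The function name `adios_io_events` and the file name `pprof.txt` are illustrative only and are not part of TAU.

```shell
# Hypothetical helper (not a TAU command): print the per-file summary rows
# for files under a given output directory from saved pprof text output.
# Usage: adios_io_events <pprof-output-file> [<output-dir>]
adios_io_events() {
    local report="$1"
    local outdir="${2:-sim.bp}"
    # Keep rows that mention <file=OUTDIR/...> and that end right after the
    # closing '>', i.e. rows without a " : caller => callee" call-path suffix.
    grep -F "<file=${outdir}/" "$report" | grep -E '>[[:space:]]*$'
}

# Example (assumes profile.* files are in the current directory):
#   pprof > pprof.txt
#   adios_io_events pprof.txt sim.bp
```

Dropping the second grep keeps the call-path rows as well, which shows which ADIOS2 routine issued each write.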

Note: the test system at uoregon.edu has an InfiniBand interface and libfabric installed. The ADIOS2 CMake configuration detected libfabric and selected RDMA as the default SST data transport. Because that led to runtime errors, we forced the SST transport to WAN instead of RDMA. That is done with:

<io name="SimulationOutput">

<engine type="SST">

<parameter key="OpenTimeoutSecs" value="10.0"/>

<parameter key="DataTransport" value="WAN"/>

</engine>

</io>

In order to collect a trace of an ADIOS2 coupled application, the easiest way is to launch the writer and reader using MPMD. The ADIOS2 tutorial example HeatTransfer is normally launched like this:

mpirun \

-n 16 ./heatSimulation sim.bp 4 4 64 64 100 10 : \

-n 4 ./heatAnalysis sim.bp analysis.bp 2 2

To collect a trace, wrap both applications with tau_exec and set the TAU_TRACE environment variable:

te="tau_exec -T mpi,pthread"

export TAU_TRACE=1

rm -f tautrace* events*

mpirun \

-n 16 $te ./heatSimulation sim.bp 4 4 64 64 100 10 : \

-n 4 $te ./heatAnalysis sim.bp analysis.bp 2 2

After the trace files are generated, they must be merged and then converted to either OTF2 (to be read by Vampir) or SLOG2 (to be read by JumpShot). Keep in mind that the events from both applications will be merged into the same trace.

rm -f tau.trc tau.edf

tau_treemerge.pl

rm -rf traces*

tau2otf2 tau.trc tau.edf traces

tau2slog2 tau.trc tau.edf -o traces.slog2
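The merge and conversion steps above can be collected into one helper. This is a sketch only, assuming `tau_treemerge.pl`, `tau2otf2` and `tau2slog2` are on the PATH and that the per-rank `tautrace*` and `events*` files are in the current directory. The function name `convert_tau_trace` is hypothetical.

```shell
# Hypothetical helper: merge per-rank TAU trace files and convert the result.
# Usage: convert_tau_trace [otf2|slog2]   (default: otf2)
convert_tau_trace() {
    local format="${1:-otf2}"
    # Validate the argument before removing anything.
    case "$format" in
        otf2|slog2) ;;
        *) echo "unknown format: $format (expected otf2 or slog2)" >&2
           return 2 ;;
    esac
    # Remove merged files left by a previous run, then merge again.
    rm -f tau.trc tau.edf
    tau_treemerge.pl || return 1
    # Remove earlier converted output, as in the commands above.
    rm -rf traces*
    if [ "$format" = "otf2" ]; then
        tau2otf2 tau.trc tau.edf traces
    else
        tau2slog2 tau.trc tau.edf -o traces.slog2
    fi
}
```

Note that `rm -rf traces*` removes the output of both converters, so each call leaves only the format it was asked for.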

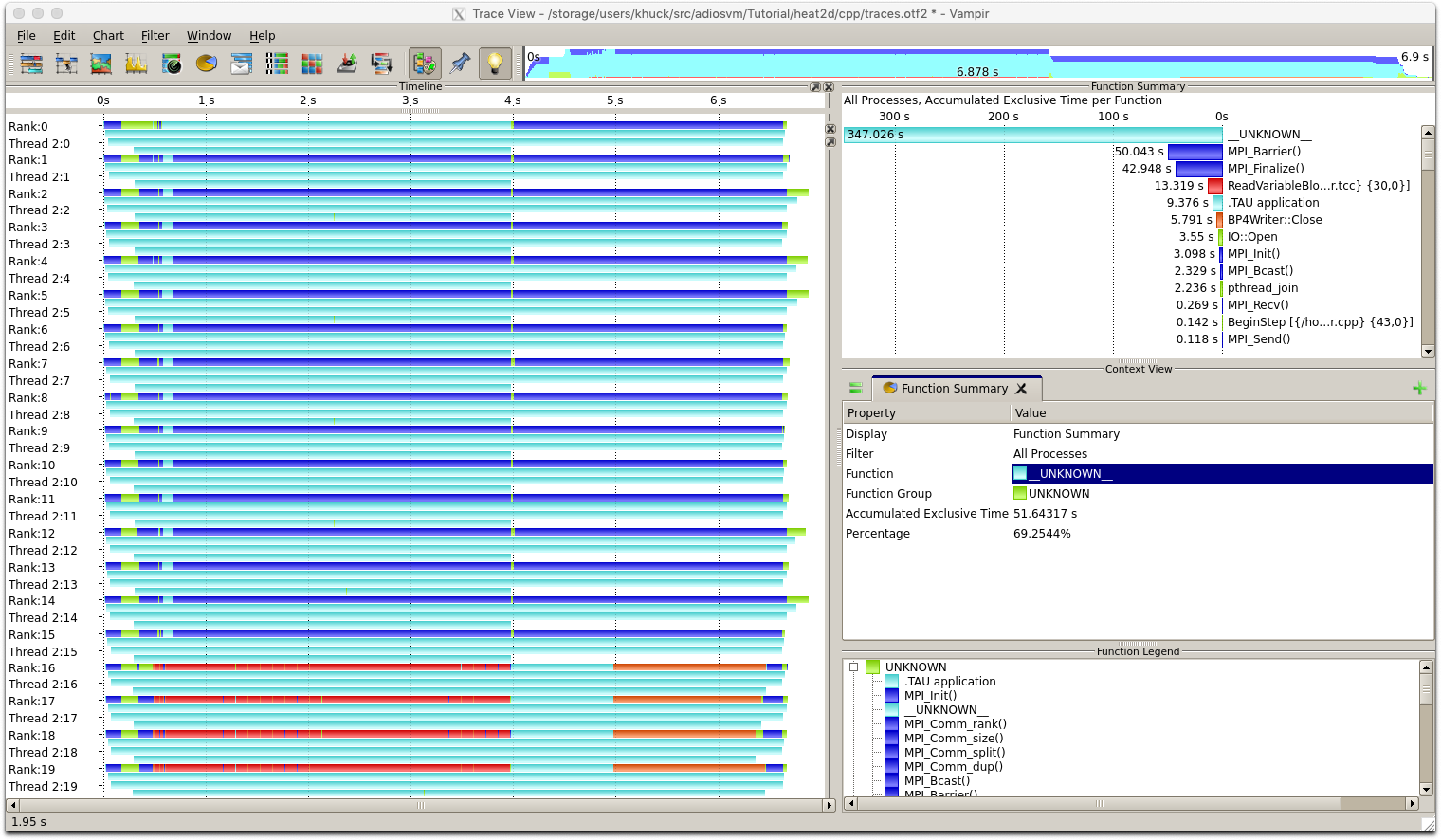

If you are ultimately targeting OTF2 (Vampir), you can set the environment variable TAU_TRACE_FORMAT=otf2 before running the application, and the trace will be written directly in OTF2 format, with no merge or conversion step. Regardless, this is what the trace of the above example looks like in Vampir (after setting colors for groups of events):

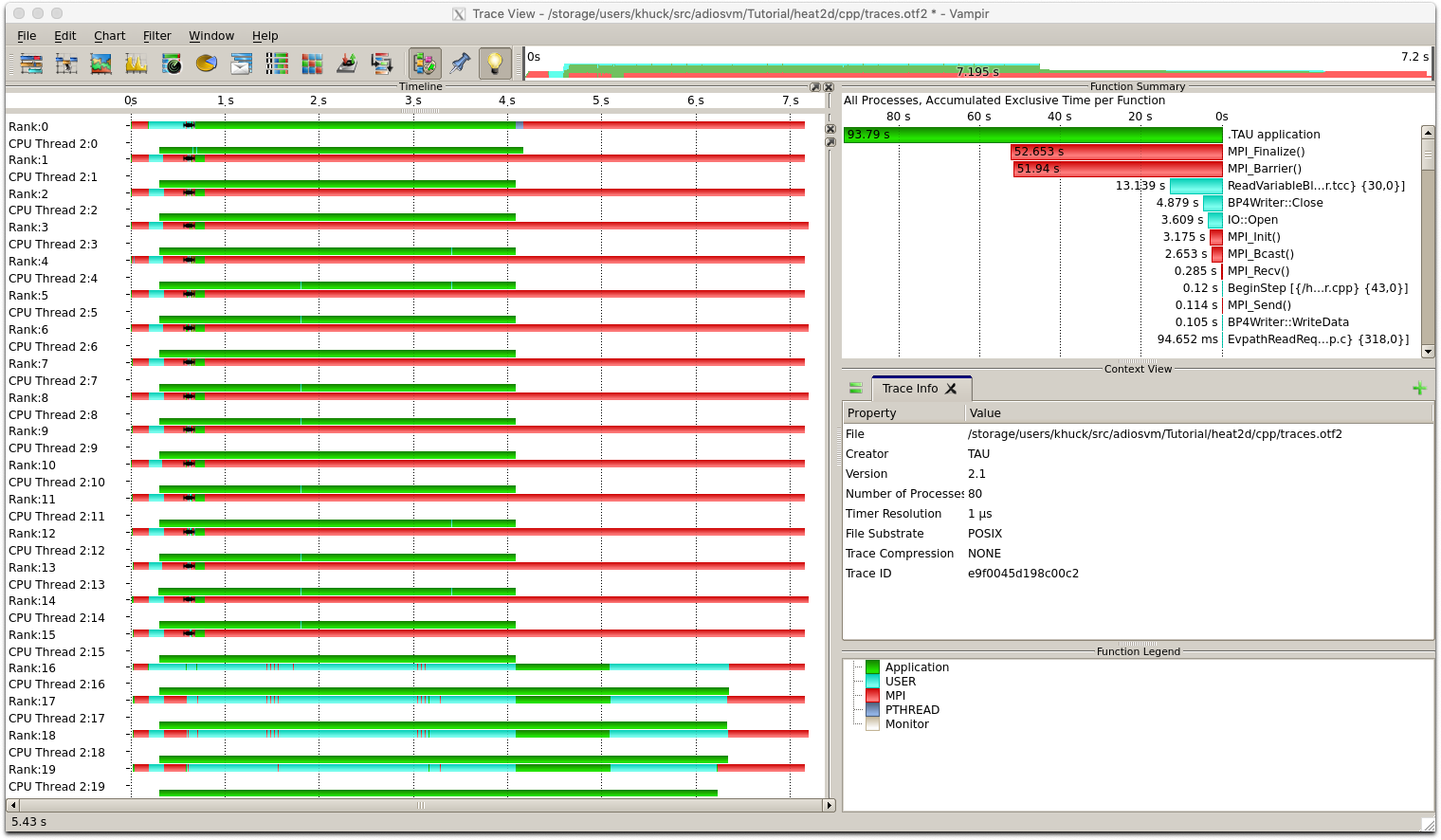

...and this is what it looks like in Vampir when written straight to OTF2 (no conversion):

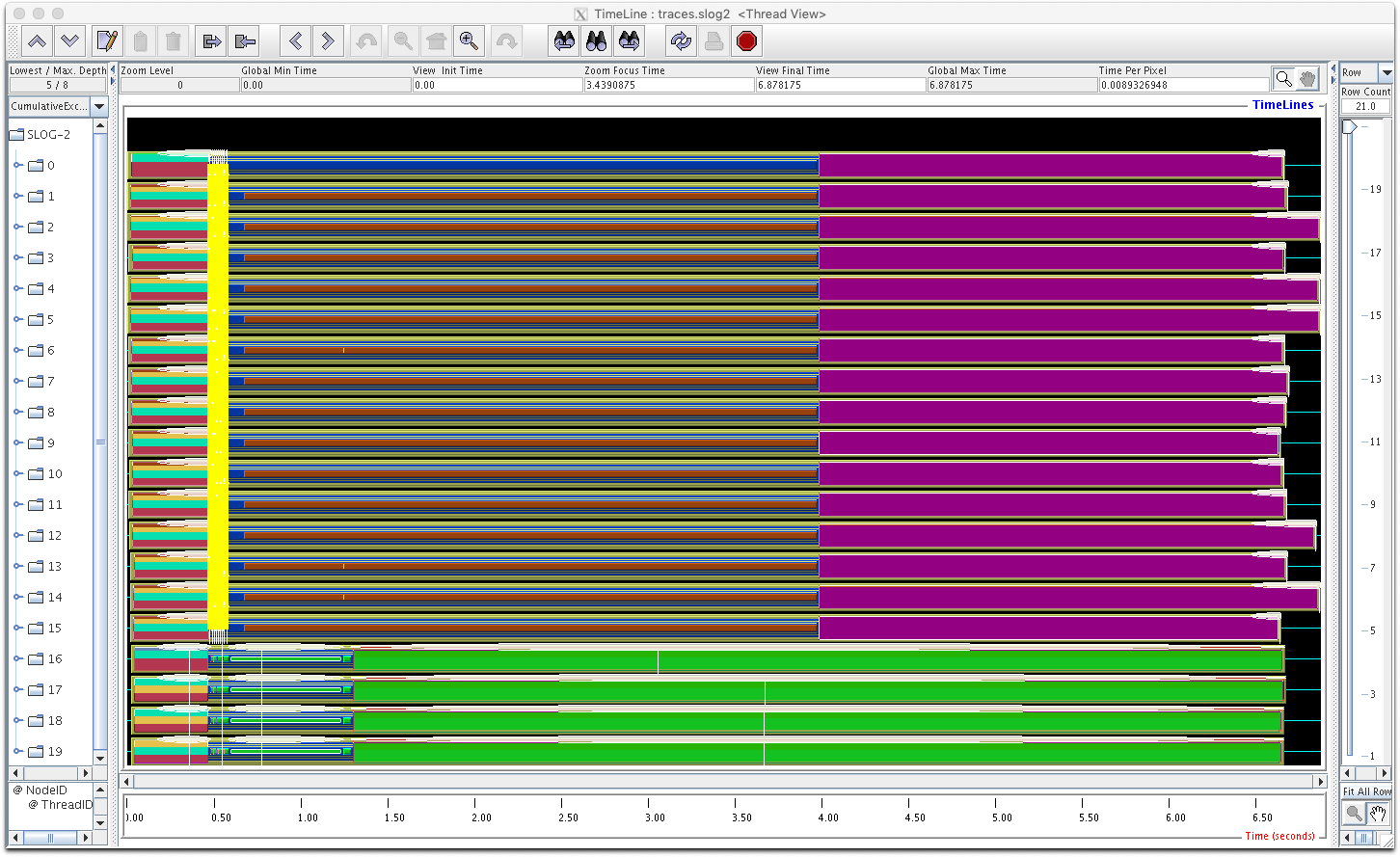
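For the direct-to-OTF2 case, the launch differs from the earlier trace run only by one extra environment variable. The sketch below assumes TAU was built with OTF2 support (the default Spack build described earlier includes it) and reuses the HeatTransfer command line from above. The wrapper name `run_heat_otf2` is hypothetical.

```shell
# Sketch: trace the coupled HeatTransfer run and write OTF2 directly, so that
# no tau_treemerge.pl / tau2otf2 step is needed afterwards.
# run_heat_otf2 is a hypothetical wrapper name, not a TAU command.
run_heat_otf2() {
    local te="tau_exec -T mpi,pthread"
    export TAU_TRACE=1
    export TAU_TRACE_FORMAT=otf2
    # $te is intentionally unquoted so it splits into the command and its options.
    mpirun \
        -n 16 $te ./heatSimulation sim.bp 4 4 64 64 100 10 : \
        -n 4 $te ./heatAnalysis sim.bp analysis.bp 2 2
}
```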

...and this is what it looks like in JumpShot:

Still have questions? Check out the official documentation or contact [email protected] for help.
