Background:

(a) https://open5gs.org/

(b) https://github.com/open5gs/open5gs

(c) https://github.com/aligungr/UERANSIM

Prerequisites:

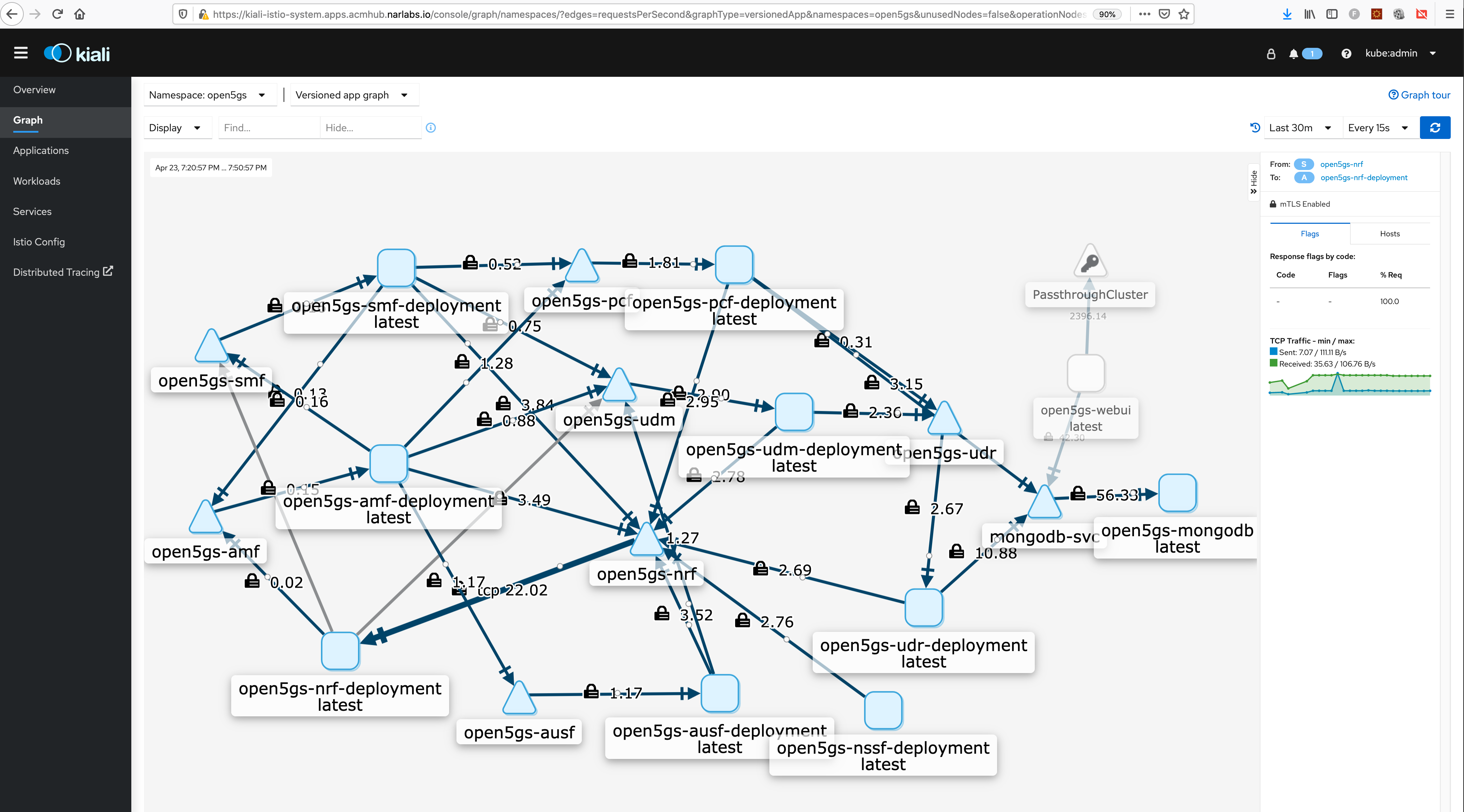

(i) OpenShift Container Platform (OCP) with OpenShift Service Mesh (OSM) installed and configured

(ii) Enable SCTP on OCP (Reference: OpenShift documentation on SCTP):

```shell
oc create -f enablesctp.yaml
```
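The contents of enablesctp.yaml aren't shown here. As a rough sketch, assuming the file follows the example in the OpenShift SCTP documentation, it is a MachineConfig for nodes with the `worker` role that clears the module blacklist for `sctp` and loads it at boot. The helper name `print_sctp_machineconfig` and the object name `load-sctp-module` are illustrative, so compare with the repo's own file before relying on this.

```shell
# Prints a MachineConfig (modeled on the OpenShift SCTP docs example) that:
#  - writes an empty /etc/modprobe.d/sctp-blacklist.conf so sctp is not blacklisted
#  - writes /etc/modules-load.d/sctp-load.conf so sctp is loaded at boot
# Applies to nodes in the "worker" MachineConfigPool via the role label.
print_sctp_machineconfig() {
  cat <<'EOF'
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  name: load-sctp-module
  labels:
    machineconfiguration.openshift.io/role: worker
spec:
  config:
    ignition:
      version: 3.2.0
    storage:
      files:
        - path: /etc/modprobe.d/sctp-blacklist.conf
          mode: 0644
          overwrite: true
          contents:
            source: data:,
        - path: /etc/modules-load.d/sctp-load.conf
          mode: 0644
          overwrite: true
          contents:
            source: data:,sctp
EOF
}
```

Pipe it straight into the cluster with `print_sctp_machineconfig | oc create -f -`.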

Wait for the machine config to be applied to all worker nodes and for all worker nodes to return to the Ready state. Check with:

```shell
oc get nodes
```
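The wait can be scripted. This is a minimal sketch, assuming `oc` is already logged in and worker nodes carry the standard `node-role.kubernetes.io/worker` label. The function names, attempt count and polling interval are illustrative. A node counts as ready only when its STATUS is exactly `Ready`, because nodes being updated by the Machine Config Operator typically show `Ready,SchedulingDisabled` or `NotReady` in the meantime.

```shell
# Reads `oc get nodes --no-headers` output on stdin. Succeeds only if at least
# one node is listed and every node's STATUS column (field 2) is exactly "Ready".
all_nodes_ready() {
  awk '$2 != "Ready" { bad = 1 } END { exit (NR == 0 || bad) }'
}

# Polls worker nodes until all are Ready. Gives up after max_attempts polls
# (default 60), waiting interval seconds between polls (default 30).
wait_for_workers() {
  local max_attempts="${1:-60}" interval="${2:-30}" attempt=1
  while [ "$attempt" -le "$max_attempts" ]; do
    if oc get nodes -l node-role.kubernetes.io/worker --no-headers | all_nodes_ready; then
      echo "All worker nodes are Ready."
      return 0
    fi
    echo "Attempt $attempt/$max_attempts: worker nodes not Ready yet, retrying in ${interval}s"
    sleep "$interval"
    attempt=$((attempt + 1))
  done
  echo "Timed out waiting for worker nodes." >&2
  return 1
}
```

Run `wait_for_workers` after `oc create -f enablesctp.yaml`. `oc get machineconfigpool worker` reporting `UPDATED` as `True` is another signal that the rollout has finished.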

(1) Run 0-deploy5gcore.sh. It creates the project, adds the project to the service mesh member roll, provisions the necessary role bindings, deploys the Helm charts for you, and creates an Istio ingress (virtual service) for the WebUI.

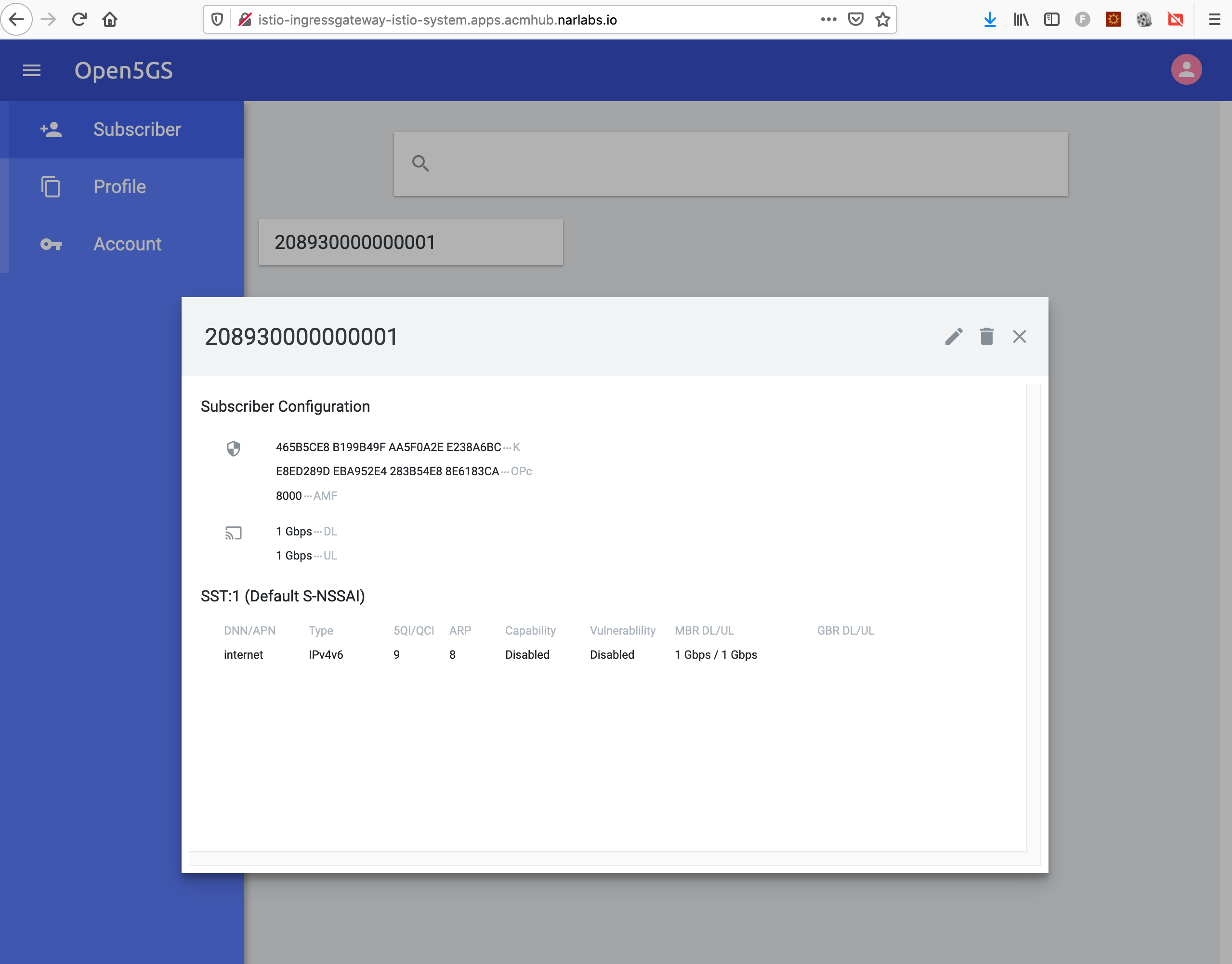
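To make the description of 0-deploy5gcore.sh more concrete, here is a hypothetical outline of those steps. It is not the repo's script: the project name `open5gs`, the mesh control-plane namespace `istio-system`, the `privileged` SCC grant, the Helm release name and the chart path `./5gcore` are all assumptions. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
PROJECT="${PROJECT:-open5gs}"         # assumed project name
MESH_NS="${MESH_NS:-istio-system}"    # assumed OSM control-plane namespace
CHART_PATH="${CHART_PATH:-./5gcore}"  # assumed Helm chart location

# Runs a command, or only prints it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical outline only; not the contents of 0-deploy5gcore.sh.
deploy_5gcore() {
  # 1. Create the project (namespace).
  run oc new-project "$PROJECT"
  # 2. Append the project to the default ServiceMeshMemberRoll so it joins the mesh.
  #    This JSON patch fails if spec.members does not exist yet.
  run oc patch servicemeshmemberroll default -n "$MESH_NS" --type=json \
    -p "[{\"op\":\"add\",\"path\":\"/spec/members/-\",\"value\":\"$PROJECT\"}]"
  # 3. Assumed role binding: let the default service account use the privileged
  #    SCC (e.g. the UPF needs to create a TUN device).
  run oc adm policy add-scc-to-user privileged -z default -n "$PROJECT"
  # 4. Install the Helm chart.
  run helm install "$PROJECT" "$CHART_PATH" -n "$PROJECT"
  # The Istio Gateway/VirtualService for the WebUI would be applied after this.
}
```

`DRY_RUN=1 deploy_5gcore` lists the commands without touching the cluster, which is a cheap way to compare this outline against what the real script does.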

(2) Provision the user equipment (UE) IMSI in the 5G core so that your UE registration (i.e., running UERANSIM in UE mode) is allowed. See ueransim/ueransim-ue-configmap.yaml; the default IMSI is 208930000000001.

The default WebUI credentials are admin/1423.

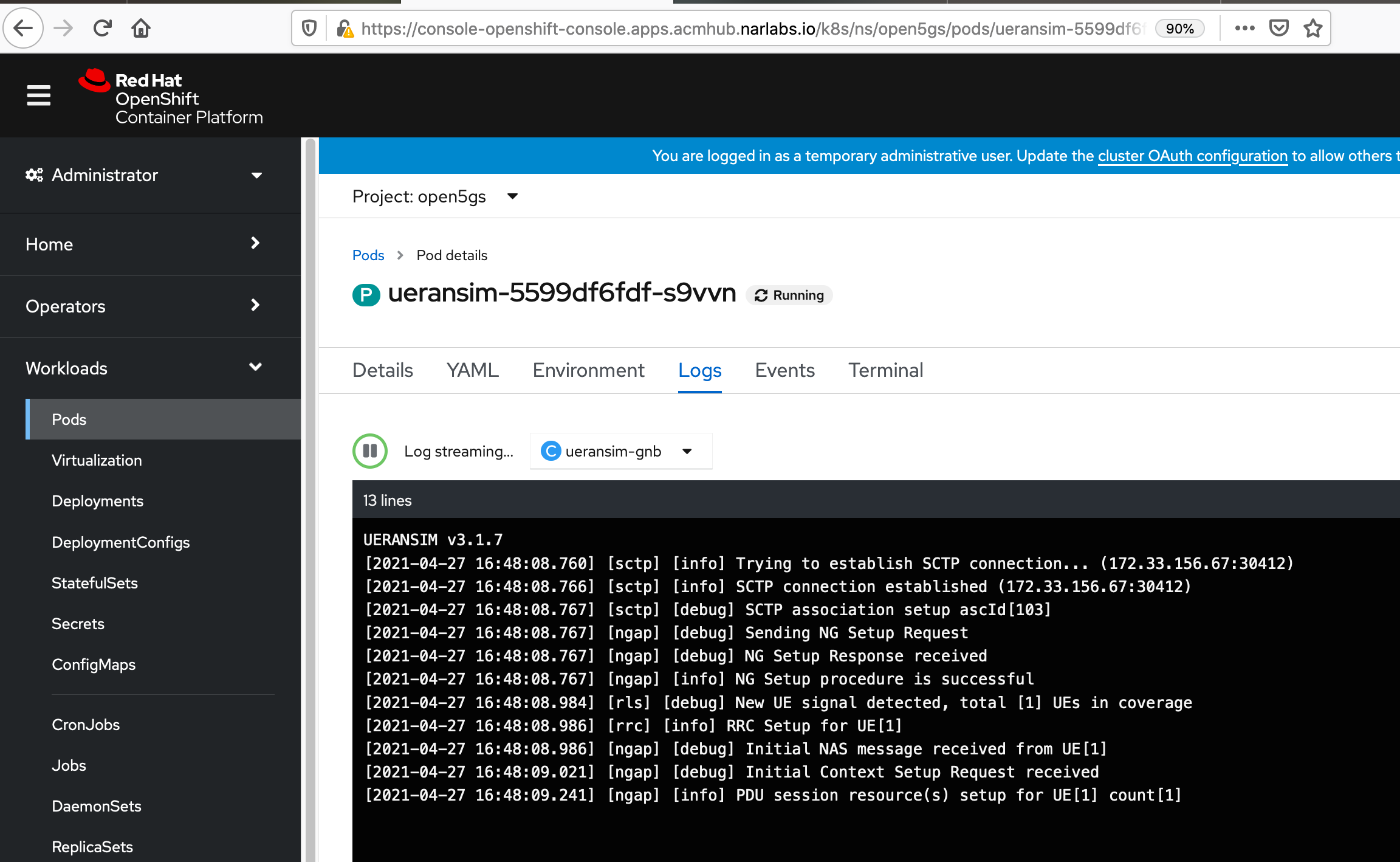

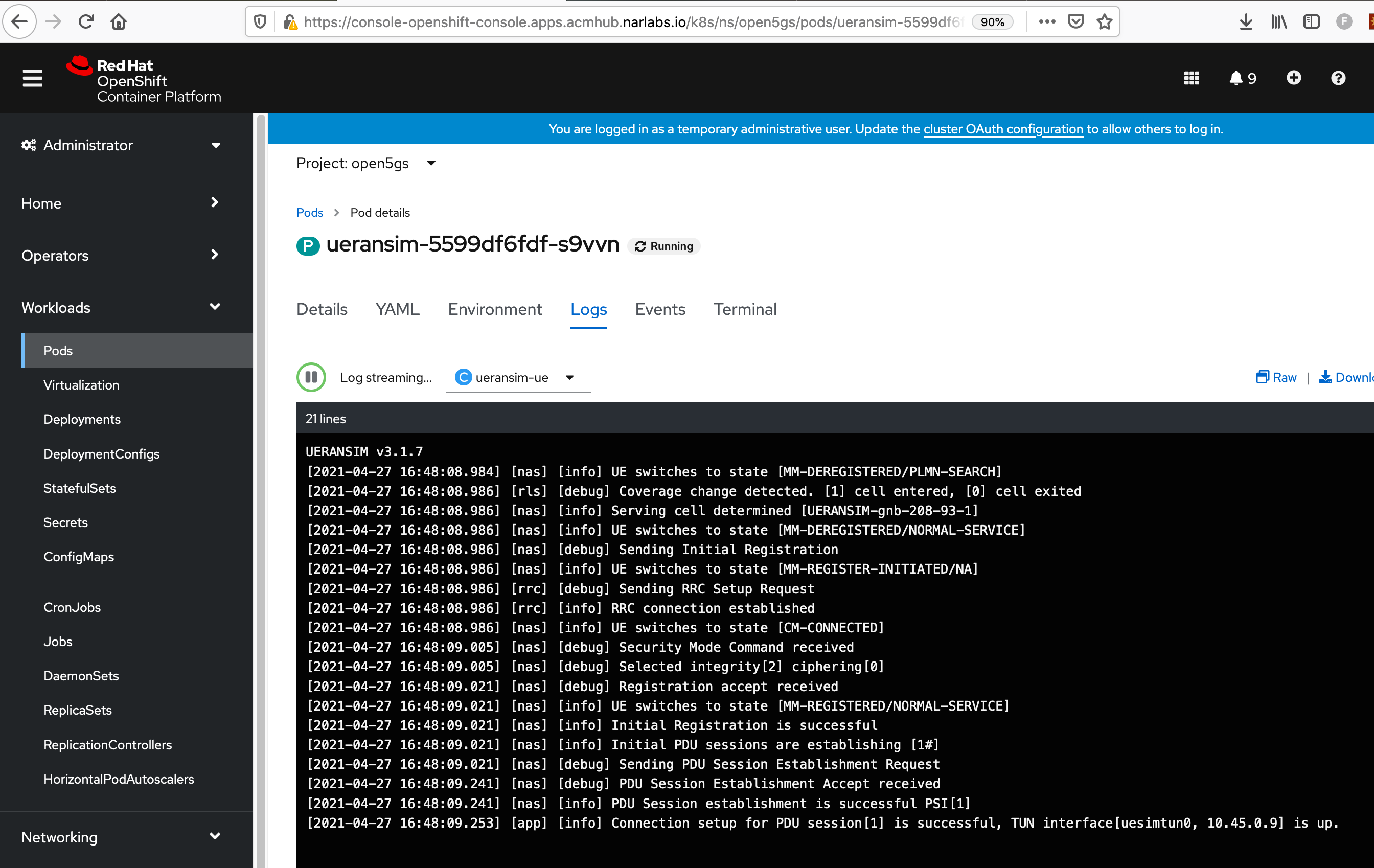

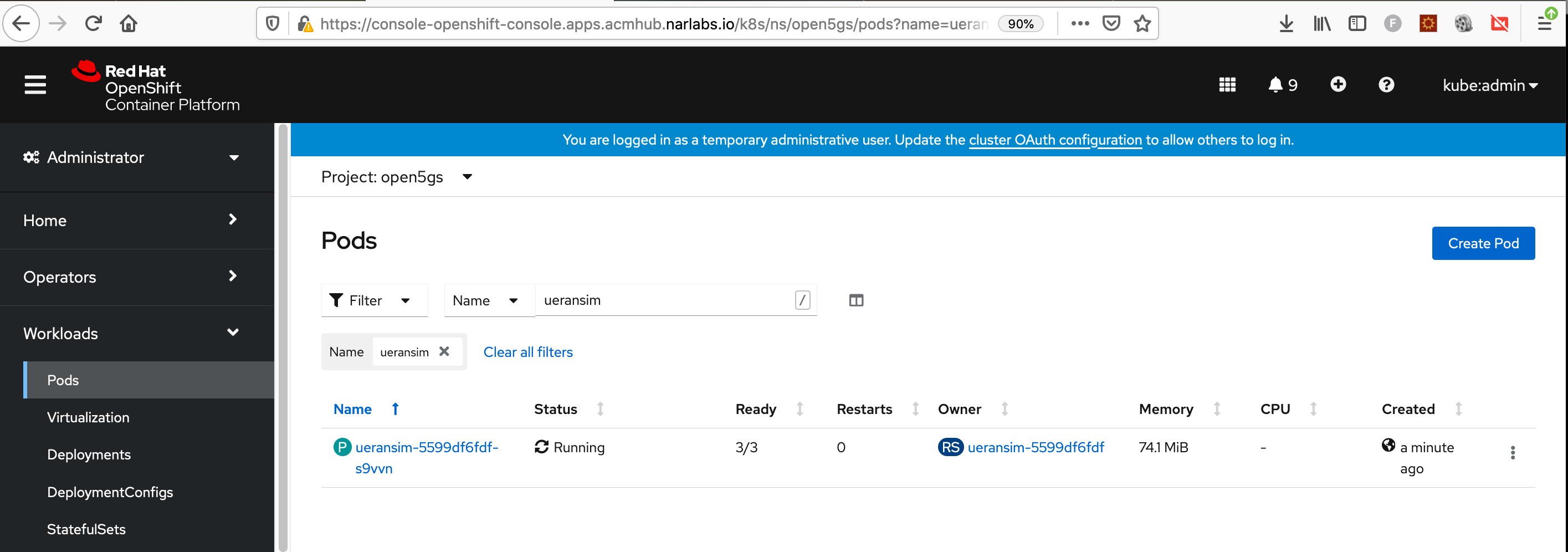
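The IMSI also encodes the PLMN: the first 3 digits are the MCC and the next 2 or 3 digits are the MNC, so 208930000000001 is MCC 208, MNC 93. In a single-PLMN lab setup like this one, that PLMN generally has to match the PLMN configured on the gNB and the AMF, otherwise the UE typically fails to register. The hypothetical `split_imsi` helper below decodes an IMSI, with or without UERANSIM's `imsi-` prefix. The MNC length can't be inferred from the digits, so it is a parameter that defaults to 2.

```shell
# Splits an IMSI into MCC, MNC and MSIN.
# Usage: split_imsi IMSI [MNC_LENGTH]   (MNC_LENGTH is 2 or 3, default 2)
split_imsi() {
  local imsi="${1#imsi-}" mnc_len="${2:-2}" mcc mnc msin
  case "$imsi" in
    ''|*[!0-9]*) echo "not a numeric IMSI: $1" >&2; return 1 ;;
  esac
  case "$mnc_len" in
    2|3) ;;
    *) echo "MNC length must be 2 or 3" >&2; return 1 ;;
  esac
  if [ "${#imsi}" -gt 15 ]; then
    echo "IMSI longer than 15 digits: $1" >&2
    return 1
  fi
  mcc=$(printf '%s' "$imsi" | cut -c1-3)
  mnc=$(printf '%s' "$imsi" | cut -c4-$((3 + mnc_len)))
  msin=$(printf '%s' "$imsi" | cut -c$((4 + mnc_len))-)
  printf 'MCC=%s MNC=%s MSIN=%s\n' "$mcc" "$mnc" "$msin"
}
```

For example, `split_imsi 208930000000001` prints `MCC=208 MNC=93 MSIN=0000000001`.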

(3) Run 1-deploy5gran.sh. It creates the config maps and a UERANSIM deployment with a single pod that has multiple containers (gNB and UE run as separate containers inside the same pod).

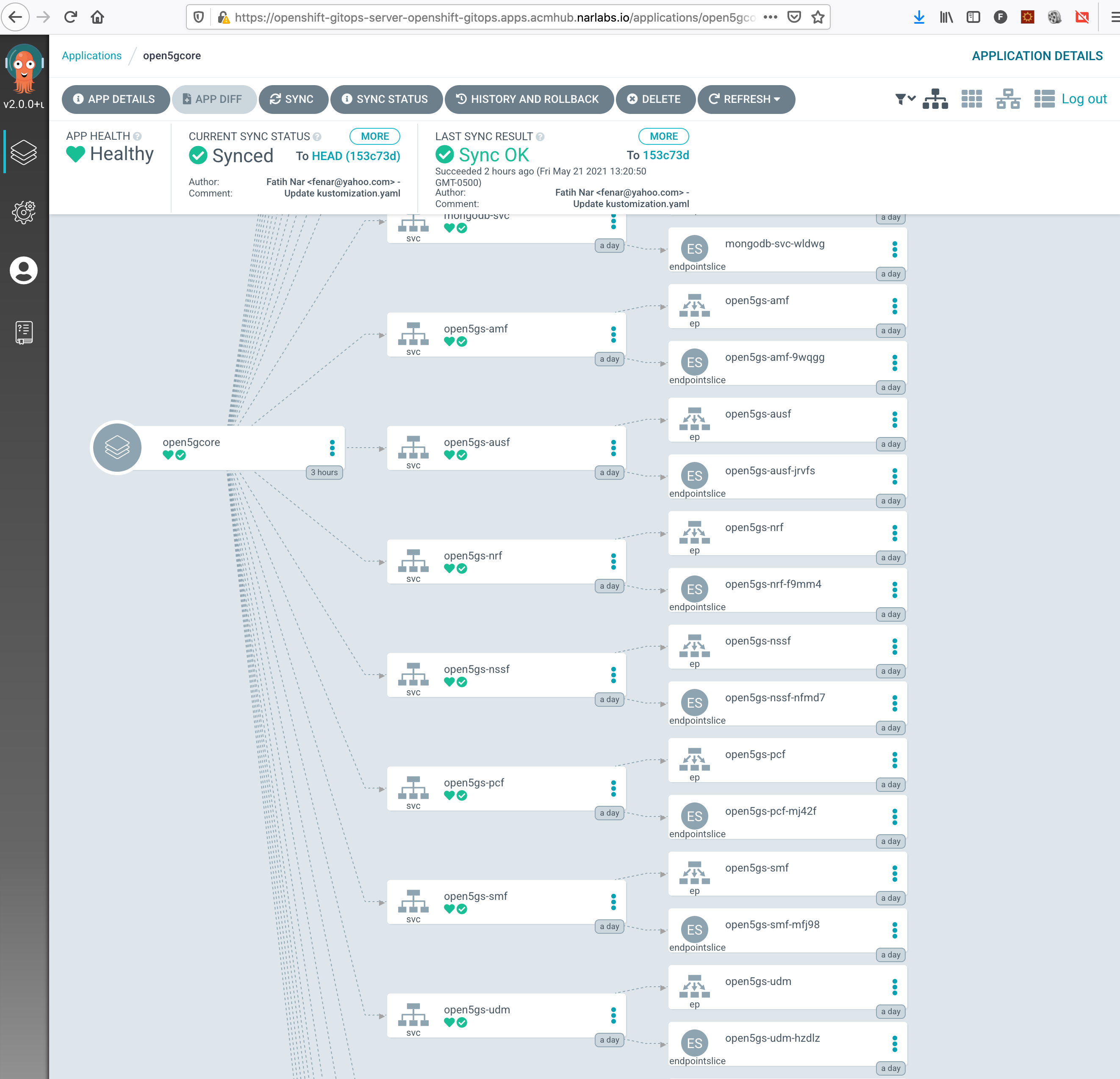

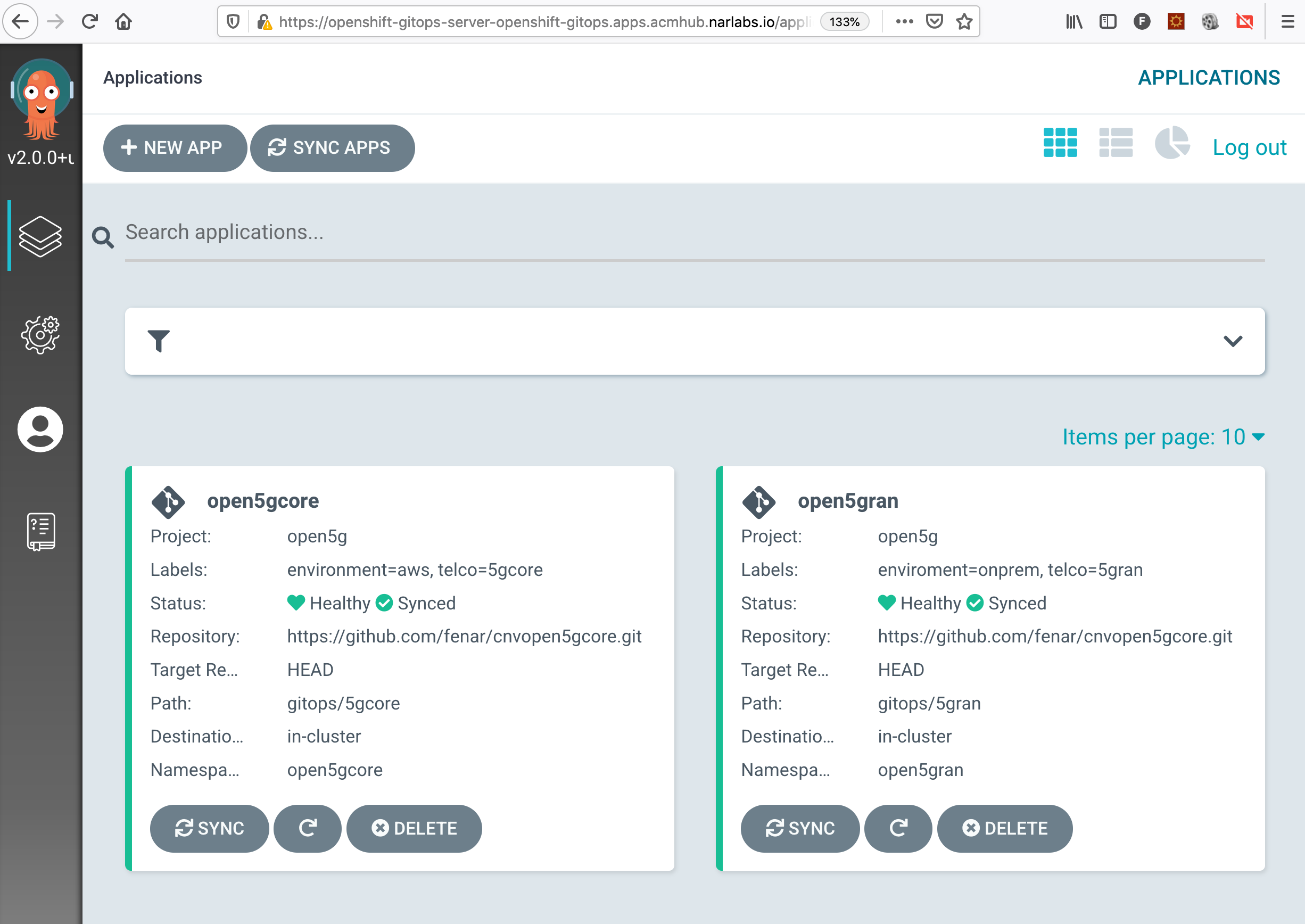
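Once the pod is running, the gNB and UE container logs show whether the gNB connected to the AMF and whether the UE registered and got a PDU session. A sketch, assuming the log phrases printed by recent UERANSIM releases (other versions may word them differently); the helper names are illustrative.

```shell
# Succeeds if a UERANSIM gNB log on stdin shows a successful NG Setup with the AMF.
gnb_connected() {
  grep -q 'NG Setup procedure is successful'
}

# Succeeds if a UERANSIM UE log on stdin shows both a successful initial
# registration and a successful PDU session establishment.
ue_attached() {
  local log
  log="$(cat)"
  printf '%s\n' "$log" | grep -q 'Initial Registration is successful' &&
    printf '%s\n' "$log" | grep -q 'PDU Session establishment is successful'
}
```

Usage might look like `oc logs deploy/ueransim -c ue -n <project> | ue_attached && echo "UE registered"`, where the deployment name `ueransim` and container name `ue` are placeholders for whatever 1-deploy5gran.sh actually creates.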

(4) If you'd like to use GitOps for your deployment, you can use the Red Hat OpenShift GitOps operator: simply point it at this repo's 5gcore Helm chart path and kick off your deployment.

Ref: Red Hat GitOps Operator

If ArgoCD fails because of permission errors on your project, check that the ArgoCD application controller has the necessary role, and add it if it is missing:

```shell
oc adm policy add-cluster-role-to-user cluster-admin -z openshift-gitops-argocd-application-controller -n openshift-gitops
```

ArgoCD Applications: 5GCore and 5GRAN

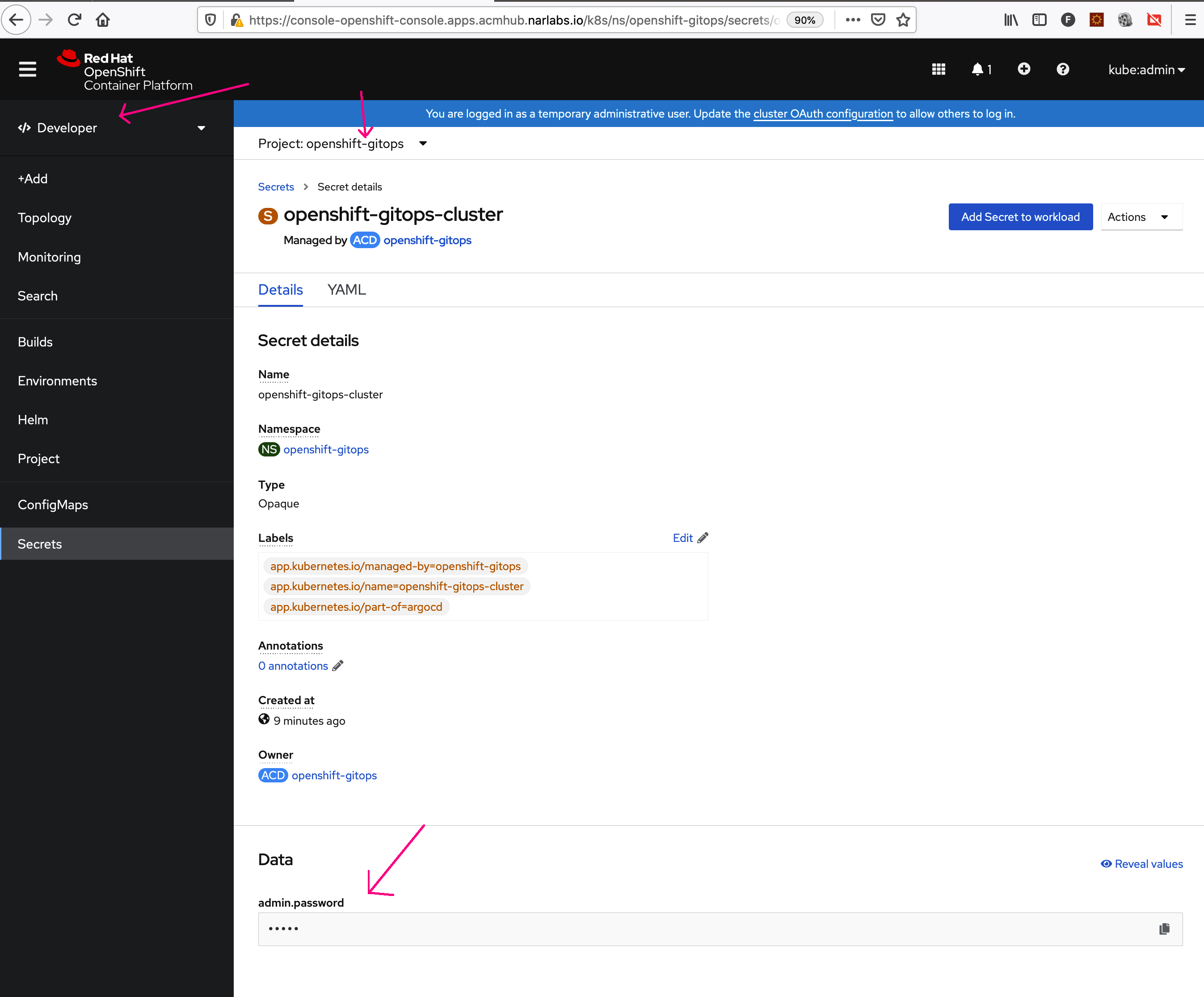
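If you'd rather create the Applications from the CLI than from the Argo CD UI, a manifest along these lines should work. It is a sketch: `print_argocd_app` is a hypothetical helper, and the repo URL, chart path and destination namespace are placeholders you supply. Argo CD only renders and syncs the Helm chart, so the project, member-roll and role-binding steps that 0-deploy5gcore.sh performs may still be needed if the chart doesn't include them.

```shell
# Prints an Argo CD Application that syncs a Helm chart from a Git repository.
# Usage: print_argocd_app NAME REPO_URL CHART_PATH DEST_NAMESPACE [REVISION]
print_argocd_app() {
  if [ "$#" -lt 4 ]; then
    echo "usage: print_argocd_app NAME REPO_URL CHART_PATH DEST_NAMESPACE [REVISION]" >&2
    return 1
  fi
  # The Application lives in the openshift-gitops namespace and deploys to the
  # same cluster Argo CD runs in; automated sync prunes and self-heals drift.
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: $1
  namespace: openshift-gitops
spec:
  project: default
  source:
    repoURL: $2
    targetRevision: ${5:-HEAD}
    path: $3
  destination:
    server: https://kubernetes.default.svc
    namespace: $4
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
EOF
}
```

For example, `print_argocd_app 5gcore <repo-url> 5gcore <project> | oc apply -f -`. Drop the `syncPolicy` block if you prefer to trigger syncs manually.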

PS: If you're wondering where to find the default ArgoCD admin password, here it is :-).

Alternatively, you can get it from the CLI:

```shell
oc get secret openshift-gitops-cluster -n openshift-gitops -o jsonpath='{.data.admin\.password}' | base64 -d
```

>>> Adding more target clusters as deployment environments

(5) Run ./3-delete5gran.sh to wipe the UERANSIM microservices deployment.

(6) To clear the environment, run ./5-delete5gcore.sh to wipe the 5gcore deployment.