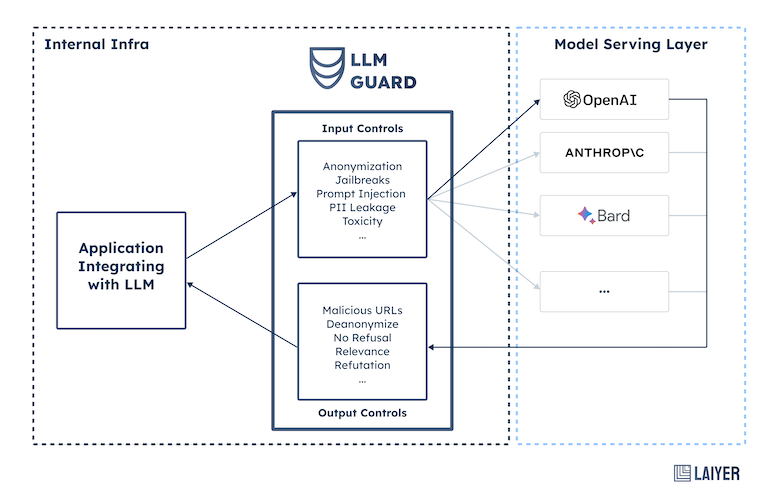

LLM Guard by Laiyer.ai is a comprehensive tool designed to fortify the security of Large Language Models (LLMs).

We're happy to provide personalized assistance with deploying LLM Guard to a production environment.

By offering sanitization, detection of harmful language, prevention of data leakage, and resistance against prompt injection attacks, LLM Guard helps keep your interactions with LLMs safe and secure.

Begin your journey with LLM Guard by installing the package:

```sh
pip install llm-guard
```

Important Notes:

- LLM Guard is designed for easy integration and deployment in production environments. While it's ready to use out of the box, please note that we're continuously improving and updating the repository.

- Base functionality requires a limited number of libraries. As you explore more advanced features, necessary libraries will be automatically installed.

- Ensure you're using Python 3.8.1 or higher. Confirm with `python --version`.

- Having trouble installing libraries? Consider upgrading pip with `python -m pip install --upgrade pip`.
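To run the same version check from inside Python, for example at the top of a deployment script, a small standard-library sketch might look like this. `MINIMUM_PYTHON` and `meets_minimum_python` are hypothetical names for illustration, not part of LLM Guard:

```python
import sys

# Hypothetical constant: the minimum interpreter version stated in the notes (3.8.1).
MINIMUM_PYTHON = (3, 8, 1)


def meets_minimum_python(version=None, minimum=MINIMUM_PYTHON):
    """Return True if `version` (defaults to the running interpreter) is at least `minimum`."""
    if version is None:
        version = sys.version_info
    # Compare (major, minor, micro) only; tuple comparison is lexicographic.
    return tuple(version[:3]) >= tuple(minimum)


if not meets_minimum_python():
    raise SystemExit(
        f"LLM Guard needs Python {'.'.join(map(str, MINIMUM_PYTHON))} or higher, "
        f"found {sys.version.split()[0]}"
    )
```

Comparing tuples rather than version strings avoids the classic bug where `"3.10"` sorts before `"3.8"`.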

Examples:

- Get started with ChatGPT and LLM Guard.

- Deploy LLM Guard as an API

Prompt scanners:

- Anonymize

- BanSubstrings

- BanTopics

- Code

- Language

- PromptInjection

- Regex

- Secrets

- Sentiment

- TokenLimit

- Toxicity

Output scanners:

- BanSubstrings

- BanTopics

- Bias

- Code

- Deanonymize

- JSON

- Language

- LanguageSame

- MaliciousURLs

- NoRefusal

- Refutation

- Regex

- Relevance

- Sensitive

- Sentiment

- Toxicity
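The scanners fall into two groups: prompt scanners check and sanitize the input before it reaches the model, and output scanners check the model's response before it reaches the user. The sketch below illustrates that flow in plain Python. It is a toy and does not use LLM Guard's API: `BanSubstringsToy`, `TokenLimitToy`, `AnonymizeToy`, `DeanonymizeToy`, `run_scanners`, and `fake_llm` are hypothetical names made up for this illustration, and the `(text, is_valid)` return shape, whitespace token counting, and email-only regex are simplifying assumptions. The real scanners have their own constructors, detection models, and return values.

```python
import re
from typing import Dict, List, Protocol, Tuple


class Scanner(Protocol):
    """Hypothetical interface: return the (possibly rewritten) text and whether it passed."""

    def scan(self, text: str) -> Tuple[str, bool]: ...


class BanSubstringsToy:
    """Fails text that contains any banned substring (case-insensitive)."""

    def __init__(self, banned: List[str]) -> None:
        self.banned = [b.lower() for b in banned]

    def scan(self, text: str) -> Tuple[str, bool]:
        lowered = text.lower()
        return text, not any(b in lowered for b in self.banned)


class TokenLimitToy:
    """Fails text longer than `limit` tokens; whitespace splitting approximates a tokenizer."""

    def __init__(self, limit: int) -> None:
        self.limit = limit

    def scan(self, text: str) -> Tuple[str, bool]:
        return text, len(text.split()) <= self.limit


# Simplified email pattern (assumption); a real PII detector covers many more entity types.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


class AnonymizeToy:
    """Replaces emails with placeholders and remembers the originals in a shared vault."""

    def __init__(self, vault: Dict[str, str]) -> None:
        self.vault = vault

    def scan(self, text: str) -> Tuple[str, bool]:
        def swap(match) -> str:
            placeholder = f"[EMAIL_{len(self.vault) + 1}]"
            self.vault[placeholder] = match.group(0)
            return placeholder

        return EMAIL.sub(swap, text), True


class DeanonymizeToy:
    """Puts the original values back into the model's output using the same vault."""

    def __init__(self, vault: Dict[str, str]) -> None:
        self.vault = vault

    def scan(self, text: str) -> Tuple[str, bool]:
        for placeholder, original in self.vault.items():
            text = text.replace(placeholder, original)
        return text, True


def run_scanners(scanners: List[Scanner], text: str) -> Tuple[str, bool]:
    """Applies scanners in order, each seeing the previous output; valid only if all pass."""
    all_valid = True
    for scanner in scanners:
        text, valid = scanner.scan(text)
        all_valid = all_valid and valid
    return text, all_valid


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call: simply echoes the prompt."""
    return f"You asked: {prompt}"


vault: Dict[str, str] = {}
prompt_scanners = [
    AnonymizeToy(vault),
    BanSubstringsToy(["ignore previous instructions"]),
    TokenLimitToy(50),
]
output_scanners = [DeanonymizeToy(vault), BanSubstringsToy(["password"])]

safe_prompt, prompt_ok = run_scanners(
    prompt_scanners, "Draft a reply to alice@example.com about the meeting."
)
# safe_prompt == "Draft a reply to [EMAIL_1] about the meeting."
response, response_ok = "", False
if prompt_ok:
    response, response_ok = run_scanners(output_scanners, fake_llm(safe_prompt))
# response == "You asked: Draft a reply to alice@example.com about the meeting."
```

The anonymize and deanonymize steps share one vault, so the email address never reaches the model yet still appears in the final answer. A prompt that fails any prompt scanner is simply never sent to the model.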

You can find our roadmap here. Please don't hesitate to contribute or open issues; it helps us improve LLM Guard!

Have ideas or feedback, or want to contribute? We'd love to hear from you! Email us.

For detailed guidelines on contributions, kindly refer to our contribution guide.