This repository contains accompanying code for the paper Gaussian Membership Inference Privacy by Tobias Leemann*, Martin Pawelczyk*, and Gjergji Kasneci (appeared at NeurIPS 2023).

The codebase was extended to make the attack applicable to real-world models in the workshop paper Is My Data Safe? Predicting Instance-Level Membership Inference Success for White-box and Black-box Attacks (ICML 2024 NextGenAISafety Workshop). Details can be found at the end of this document.

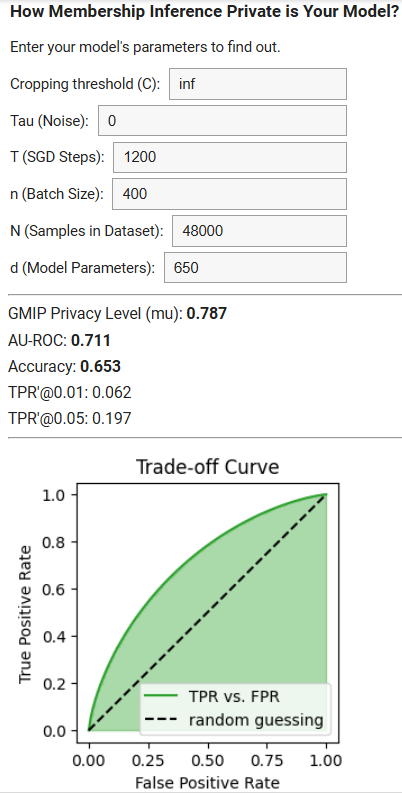

Find out how private your model is! Enter your model's parameters into our interactive calculator to evaluate its maximal vulnerability to membership inference attacks, and visualize the result as an ROC curve. No installation is required.
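To give a feel for what such a curve looks like, here is a minimal sketch. It assumes that a model satisfying μ-GMIP bounds every attacker's trade-off between false and true positive rates by the Gaussian trade-off function `G_mu(alpha) = Phi(Phi^{-1}(1 - alpha) - mu)`, as in Gaussian differential privacy, so the best achievable TPR at FPR `alpha` is `Phi(mu - Phi^{-1}(1 - alpha))`. It does not reproduce the calculator's mapping from training parameters to μ, and all function names are hypothetical.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal distribution N(0, 1)


def max_tpr(fpr: float, mu: float) -> float:
    """Largest TPR any attacker can reach at false positive rate `fpr`,
    assuming the trade-off is bounded by G_mu (hypothetical helper)."""
    if fpr <= 0.0:
        return 0.0
    if fpr >= 1.0:
        return 1.0
    # 1 - G_mu(alpha) = Phi(mu - Phi^{-1}(1 - alpha))
    return _STD_NORMAL.cdf(mu - _STD_NORMAL.inv_cdf(1.0 - fpr))


def roc_curve(mu: float, n_points: int = 1001):
    """Evaluate the bounding ROC curve on an evenly spaced FPR grid."""
    fprs = [i / (n_points - 1) for i in range(n_points)]
    return fprs, [max_tpr(a, mu) for a in fprs]


def auc_trapezoid(fprs, tprs) -> float:
    """Numerical area under the curve using the trapezoidal rule."""
    return sum(
        (fprs[i + 1] - fprs[i]) * (tprs[i + 1] + tprs[i]) / 2
        for i in range(len(fprs) - 1)
    )


def auc_closed_form(mu: float) -> float:
    """Closed-form AUC of the equal-variance Gaussian ROC curve: Phi(mu / sqrt(2))."""
    return _STD_NORMAL.cdf(mu / 2 ** 0.5)


if __name__ == "__main__":
    for mu in (0.0, 0.5, 1.0, 2.0):
        fprs, tprs = roc_curve(mu)
        print(f"mu={mu}: AUC (numeric) = {auc_trapezoid(fprs, tprs):.4f}, "
              f"AUC (closed form) = {auc_closed_form(mu):.4f}")
```

With μ = 0 the curve is the diagonal (AUC 0.5, no attacker beats random guessing); for μ = 1 the AUC bound is about 0.76.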

The following steps are required to run the code in this repository in a dedicated Anaconda environment.

Make sure you have a working Anaconda installation on your system, then navigate to the main directory of this repository in your terminal.

Install the requirements into a new conda environment named gaussian_mip by running the following command:

conda env create -f environment.yml

Then run

conda activate gaussian_mip

The attack experiment is implemented in notebooks/GLiRAttack.ipynb. To register the environment as a kernel in an existing Jupyter installation, activate the gaussian_mip environment and run

python -m ipykernel install --user --name gaussian_mip

The Adult dataset is included in this repository, and CIFAR-10 is available via PyTorch. To download the Purchase dataset, run the script

./tabular/download_datasets.sh

In our work, we present a novel membership inference attack. For Figure 2 of our paper, we run this attack on models trained with the script train_scripts/train_models_audit.py <dataset>. See the notebook notebooks/GLiRAttack.ipynb for the full attack code and details on how to run it on the trained models.
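The attack itself is implemented in the notebook. To illustrate the generic likelihood-ratio test idea that underlies many membership inference attacks, here is a minimal sketch. It assumes the attacker observes a scalar statistic `x` for a candidate point, and that this statistic is Gaussian both when the point was a training member and when it was not, with parameters estimated beforehand. This is not the attack from notebooks/GLiRAttack.ipynb; the statistic, the Gaussian parameters, and all function names are hypothetical.

```python
import math


def gaussian_logpdf(x: float, mean: float, std: float) -> float:
    """Log-density of N(mean, std^2) evaluated at x."""
    return -0.5 * math.log(2.0 * math.pi * std ** 2) - (x - mean) ** 2 / (2.0 * std ** 2)


def lr_membership_score(x: float, mean_in: float, std_in: float,
                        mean_out: float, std_out: float) -> float:
    """Log-likelihood ratio log p_in(x) - log p_out(x) (hypothetical helper).

    Positive values favor 'member', negative values favor 'non-member'.
    """
    return gaussian_logpdf(x, mean_in, std_in) - gaussian_logpdf(x, mean_out, std_out)


def predict_member(x: float, mean_in: float, std_in: float,
                   mean_out: float, std_out: float, threshold: float = 0.0) -> bool:
    """Declare membership when the log-likelihood ratio exceeds the threshold."""
    return lr_membership_score(x, mean_in, std_in, mean_out, std_out) > threshold
```

Sweeping `threshold` traces out the attack's ROC curve. By the Neyman-Pearson lemma, thresholding the exact likelihood ratio gives the most powerful test at every false positive rate, provided both Gaussian models are correct.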

To reproduce our experiment on the utility of Membership Inference Private models vs. Differentially Private models, please refer to the notebook notebooks/UtilityConsiderations.ipynb. To create the utility plot, you first need to train the corresponding models using the script train_scripts/train_models_util.sh <dataset>, which takes a single argument specifying the dataset (currently supported: "adult", "purchase", "cifar10"). Run the script for all three datasets to recreate the plots. Further instructions can be found in the notebook.
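Since the utility plots need models for all three datasets, here is a minimal Python sketch that runs the script once per dataset in sequence. It assumes it is started from the repository root and that the script is executable (otherwise prefix the command with `bash`); `build_commands` and `train_all` are hypothetical helpers, not part of the repository.

```python
import subprocess

# Datasets supported by train_models_util.sh, as listed above.
DATASETS = ("adult", "purchase", "cifar10")
SCRIPT = "./train_scripts/train_models_util.sh"


def build_commands(script: str = SCRIPT, datasets=DATASETS):
    """Return one command (as an argument list) per dataset."""
    return [[script, ds] for ds in datasets]


def train_all(dry_run: bool = True) -> None:
    """Run the training script once per dataset, stopping at the first failure.

    With dry_run=True (the default) the commands are only printed.
    """
    for cmd in build_commands():
        print("Running:", " ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError if the script exits non-zero
            subprocess.run(cmd, check=True)
```

Call `train_all(dry_run=False)` to actually launch the training runs. The same pattern would apply to ./train_scripts/train_risk_pred.sh with the datasets cancer, imdb, and cifar10.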

If you find our work or the resources provided here useful, please consider citing our work, for instance using the following BibTeX entry:

@InProceedings{leemann2023gaussian,
  title = {Gaussian Membership Inference Privacy},
  author = {Leemann, Tobias and Pawelczyk, Martin and Kasneci, Gjergji},
  booktitle = {37th Conference on Neural Information Processing Systems (NeurIPS)},
  year = {2023}
}

Is My Data Safe? Predicting Instance-Level Membership Inference Success for White-box and Black-box Attacks

The repository additionally contains code for extending the attack to more realistic models and datasets, such as BERT and a skin cancer prediction dataset, as presented in the ICML workshop paper.

To reproduce the results in this work, the following steps are required:

The ML models used in our work can be trained by running the script

./train_scripts/train_risk_pred.sh <dataset>

where <dataset> is one of cancer, imdb, or cifar10.

To attack the trained models, we provide scripts in the folder ./attacks.

See the scripts for details on the arguments that they expect (usually the dataset and the number of models to run the attack on).

The scripts will save the resulting attack scores in files

results/mi_scores_<attack>_<dataset>.pt

We provide these files in the repository.
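The internal structure of the saved .pt files is not described here, so the following is a minimal sketch assuming you have already loaded them (e.g. with `torch.load`) into a flat list of per-example attack scores and a matching list of ground-truth membership labels, where a higher score means "more likely a member". It computes two common summary metrics, ROC AUC and TPR at a fixed low FPR. It is not the evaluation performed in eval_predictions.py, and the function names are hypothetical.

```python
from typing import Sequence


def _split(scores: Sequence[float], is_member: Sequence[bool]):
    """Separate scores of members (positives) and non-members (negatives)."""
    pos = [s for s, m in zip(scores, is_member) if m]
    neg = [s for s, m in zip(scores, is_member) if not m]
    if not pos or not neg:
        raise ValueError("need at least one member and one non-member")
    return pos, neg


def roc_auc(scores: Sequence[float], is_member: Sequence[bool]) -> float:
    """AUC as the probability that a random member outscores a random
    non-member (ties count 1/2). Quadratic time, fine for a sketch."""
    pos, neg = _split(scores, is_member)
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else (0.5 if p == n else 0.0)
    return wins / (len(pos) * len(neg))


def tpr_at_fpr(scores: Sequence[float], is_member: Sequence[bool],
               max_fpr: float) -> float:
    """Highest TPR over thresholds 'score >= t' whose FPR is at most max_fpr."""
    pos, neg = _split(scores, is_member)
    best = 0.0
    for t in sorted(set(scores)):
        fpr = sum(n >= t for n in neg) / len(neg)
        if fpr <= max_fpr:
            best = max(best, sum(p >= t for p in pos) / len(pos))
    return best
```

TPR at a low FPR (e.g. 1%) is often reported alongside AUC because it reflects how reliably an attack identifies members without many false alarms.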

To evaluate the predictions, see the script eval_predictions.py.

The plots and tables from the workshop paper are produced using the notebook notebooks/StudyRiskPredictors.ipynb.

Please refer to the workshop paper for more details on the predictors.

Use the following citation if you find this project insightful:

@InProceedings{leemann2024data,
  title = {Is My Data Safe? Predicting Instance-Level Membership Inference Success for White-box and Black-box Attacks},
  author = {Leemann, Tobias and Prenkaj, Bardh and Kasneci, Gjergji},
  booktitle = {ICML 2024 Workshop on the Next Generation of AI Safety},
  year = {2024}
}