💥 Please check out my Ph.D. student's amazing ongoing work (with very high modularity): efficient_online_learning for autonomous driving! 💥

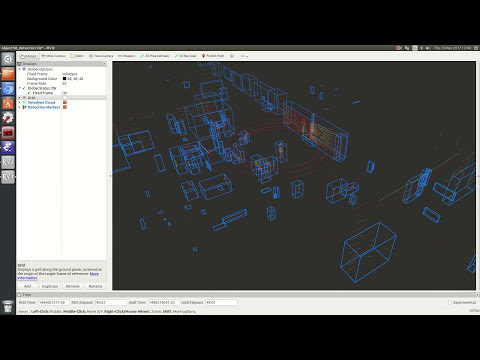

This is a ROS-based online learning framework for human classification in 3D LiDAR scans, taking advantage of robust multi-target tracking to avoid the need for data annotation by a human expert. Please watch the videos below for more details.

For a standalone implementation of the clustering method, please refer to: https://github.com/yzrobot/adaptive_clustering

```bash
cd catkin_ws/src

# Install prerequisite packages
git clone https://github.com/wg-perception/people.git
git clone https://github.com/DLu/wu_ros_tools.git
sudo apt-get install ros-kinetic-bfl

# The core
git clone https://github.com/yzrobot/online_learning

# Build (from the workspace root, one level above src)
cd ..
catkin_make
```

After `catkin_make` succeeds, modify line 3 of `online_learning/object3d_detector/launch/object3d_detector.launch` and set the `bag` argument to the full path of your bag file, for example:

```xml
<arg name="bag" value="/home/yq/Downloads/LCAS_20160523_1200_1218.bag"/>
```
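If you prefer not to edit the file by hand, the same change can be scripted. The helper below is a minimal sketch and not part of the repository: `set_bag_path` is a hypothetical name, and the code assumes GNU `sed` (the default on Ubuntu 16.04, which ROS Kinetic targets), a `bag` argument declared on a single line exactly as shown above, and a bag path that contains no `|`, `&` or `\` characters (these are special inside the `sed` expression).

```bash
#!/usr/bin/env bash
# Hypothetical helper, not shipped with online_learning: point the "bag" arg of a
# launch file at a local bag file by rewriting its value="..." attribute in place.
# Assumes GNU sed (-i without a suffix) and a bag path free of '|', '&' and '\'.
set_bag_path() {
  local launch_file="$1"
  local bag_path="$2"

  if [ ! -f "$launch_file" ]; then
    echo "launch file not found: $launch_file" >&2
    return 1
  fi
  if [ ! -f "$bag_path" ]; then
    # Only a warning: the bag may be downloaded or copied into place later.
    echo "warning: bag file not found yet: $bag_path" >&2
  fi

  # Keep the '<arg name="bag" value="' prefix (group 1) and the closing quote
  # (group 2); replace whatever path sat between them with the new one.
  sed -i "s|\(<arg name=\"bag\" value=\"\)[^\"]*\(\"\)|\1${bag_path}\2|" "$launch_file"
}
```

After defining the function in your shell, run it from the directory that contains `catkin_ws`, e.g. `set_bag_path catkin_ws/src/online_learning/object3d_detector/launch/object3d_detector.launch "$HOME/Downloads/LCAS_20160523_1200_1218.bag"`. The file has to be edited rather than passing `bag:=...` to `roslaunch`, because an `<arg>` declared with `value=` is fixed; only arguments declared with `default=` can be overridden from the command line.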

The bag file provided by the Lincoln Centre for Autonomous Systems contains `velodyne_msgs/VelodyneScan` messages, so the ROS Velodyne packages are also required:

```bash
$ sudo apt-get install 'ros-kinetic-velodyne*'
```

Now the SVM should be able to run:

```bash
$ cd catkin_ws
$ source devel/setup.bash
$ roslaunch object3d_detector object3d_detector.launch
```

If you use this code, please cite the following paper:

```bibtex
@inproceedings{yz17iros,
  author    = {Zhi Yan and Tom Duckett and Nicola Bellotto},
  title     = {Online learning for human classification in {3D LiDAR-based} tracking},
  booktitle = {Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  pages     = {864--871},
  address   = {Vancouver, Canada},
  month     = {September},
  year      = {2017}
}
```